[GitHub] [airflow] codecov-io edited a comment on issue #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor

codecov-io edited a comment on issue #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor
URL: https://github.com/apache/airflow/pull/7163#issuecomment-574641714

# [Codecov](https://codecov.io/gh/apache/airflow/pull/7163?src=pr=h1) Report
> Merging [#7163](https://codecov.io/gh/apache/airflow/pull/7163?src=pr=desc) into [master](https://codecov.io/gh/apache/airflow/commit/2cc8d20fcfff64717b492ed34f2808bf4a5c85c9?src=pr=desc) will **decrease** coverage by `53.92%`.
> The diff coverage is `32.52%`.

```diff
@@             Coverage Diff             @@
##           master    #7163       +/-   ##
===========================================
- Coverage   86.82%   32.89%   -53.93%
===========================================
  Files         896      899        +3
  Lines       42635    42745      +110
===========================================
- Hits        37017    14062    -22955
- Misses       5618    28683    +23065
```

| [Impacted Files](https://codecov.io/gh/apache/airflow/pull/7163?src=pr=tree) | Coverage Δ | |
|---|---|---|
| [airflow/models/connection.py](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree#diff-YWlyZmxvdy9tb2RlbHMvY29ubmVjdGlvbi5weQ==) | `53.52% <ø> (-41.55%)` | :arrow_down: |
| [airflow/www/views.py](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvdmlld3MucHk=) | `25.74% <0%> (-50.5%)` | :arrow_down: |
| [.../example\_dags/example\_spark\_kubernetes\_operator.py](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvY25jZi9rdWJlcm5ldGVzL2V4YW1wbGVfZGFncy9leGFtcGxlX3NwYXJrX2t1YmVybmV0ZXNfb3BlcmF0b3IucHk=) | `0% <0%> (ø)` | |
| [airflow/www/forms.py](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree#diff-YWlyZmxvdy93d3cvZm9ybXMucHk=) | `93.1% <100%> (-6.9%)` | :arrow_down: |
| [...flow/providers/cncf/kubernetes/hooks/kubernetes.py](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvY25jZi9rdWJlcm5ldGVzL2hvb2tzL2t1YmVybmV0ZXMucHk=) | `28.57% <28.57%> (ø)` | |
| [...viders/cncf/kubernetes/sensors/spark\_kubernetes.py](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvY25jZi9rdWJlcm5ldGVzL3NlbnNvcnMvc3Bhcmtfa3ViZXJuZXRlcy5weQ==) | `33.33% <33.33%> (ø)` | |
| [...ders/cncf/kubernetes/operators/spark\_kubernetes.py](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvY25jZi9rdWJlcm5ldGVzL29wZXJhdG9ycy9zcGFya19rdWJlcm5ldGVzLnB5) | `39.47% <39.47%> (ø)` | |
| [...low/contrib/operators/wasb\_delete\_blob\_operator.py](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL29wZXJhdG9ycy93YXNiX2RlbGV0ZV9ibG9iX29wZXJhdG9yLnB5) | `0% <0%> (-100%)` | :arrow_down: |
| [...ing\_platform/example\_dags/example\_display\_video.py](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvZ29vZ2xlL21hcmtldGluZ19wbGF0Zm9ybS9leGFtcGxlX2RhZ3MvZXhhbXBsZV9kaXNwbGF5X3ZpZGVvLnB5) | `0% <0%> (-100%)` | :arrow_down: |
| [airflow/contrib/hooks/vertica\_hook.py](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree#diff-YWlyZmxvdy9jb250cmliL2hvb2tzL3ZlcnRpY2FfaG9vay5weQ==) | `0% <0%> (-100%)` | :arrow_down: |
| ... and [774 more](https://codecov.io/gh/apache/airflow/pull/7163/diff?src=pr=tree-more) | | |

[Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/7163?src=pr=continue).
> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/airflow/pull/7163?src=pr=footer). Last update [2cc8d20...b479022](https://codecov.io/gh/apache/airflow/pull/7163?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

This is an automated message from the Apache Git Service.
To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:
us...@infra.apache.org

With regards,
Apache Git Services

[jira] [Created] (AIRFLOW-6948) Remove ASCII Airflow from version command

Tomasz Urbaszek created AIRFLOW-6948: Summary: Remove ASCII Airflow from version command Key: AIRFLOW-6948 URL: https://issues.apache.org/jira/browse/AIRFLOW-6948 Project: Apache Airflow Issue Type: Improvement Components: cli Affects Versions: 2.0.0 Reporter: Tomasz Urbaszek -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [airflow] konpap94 commented on issue #6337: [AIRFLOW-5659] - Add support for ephemeral storage on KubernetesPodOp…

konpap94 commented on issue #6337: [AIRFLOW-5659] - Add support for ephemeral storage on KubernetesPodOp…
URL: https://github.com/apache/airflow/pull/6337#issuecomment-592023535

[GitHub] [airflow] roitvt commented on issue #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor

roitvt commented on issue #7163: [AIRFLOW-6542] add spark-on-k8s operator/hook/sensor
URL: https://github.com/apache/airflow/pull/7163#issuecomment-592021693

@kaxil and @ashb I added tests and fixed the things from your last review. Could you check again? Thanks

[GitHub] [airflow] zhongjiajie commented on issue #7343: [AIRFLOW-6719] Introduce pyupgrade to enforce latest syntax

zhongjiajie commented on issue #7343: [AIRFLOW-6719] Introduce pyupgrade to enforce latest syntax
URL: https://github.com/apache/airflow/pull/7343#issuecomment-592015585

@mik-laj Sure, but how should I do it? Submit a new PR to this repo, or create a PR to PolideaInternal:AIRFLOW-6719-pyupgrade?

[GitHub] [airflow] mik-laj commented on a change in pull request #7565: [AIRFLOW-6941][WIP] Add queries count test for create_dagrun

mik-laj commented on a change in pull request #7565: [AIRFLOW-6941][WIP] Add queries count test for create_dagrun
URL: https://github.com/apache/airflow/pull/7565#discussion_r385176259

##
File path: tests/models/test_dag.py
##
@@ -1341,3 +1344,26 @@ class DAGsubclass(DAG):
         self.assertEqual(hash(dag_eq), hash(dag))
         self.assertNotEqual(hash(dag_diff_name), hash(dag))
         self.assertNotEqual(hash(dag_subclass), hash(dag))
+
+
+class TestPerformance(unittest.TestCase):

Review comment:
Work on other performance tests will start soon and then we should plan a solution on how we will mark the tests.

[jira] [Commented] (AIRFLOW-6939) Executor configuration via import path

[ https://issues.apache.org/jira/browse/AIRFLOW-6939?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17046714#comment-17046714 ]

ASF subversion and git services commented on AIRFLOW-6939:

Commit 37a8f6a91075207f56bb856661fb75b1c814f2de in airflow's branch refs/heads/master from Kamil Breguła
[ https://gitbox.apache.org/repos/asf?p=airflow.git;h=37a8f6a ]

[AIRFLOW-6939] Executor configuration via import path (#7563)

> Executor configuration via import path
>
> Key: AIRFLOW-6939
> URL: https://issues.apache.org/jira/browse/AIRFLOW-6939
> Project: Apache Airflow
> Issue Type: Improvement
> Components: executors
> Affects Versions: 1.10.9
> Reporter: Kamil Bregula
> Priority: Major
> Fix For: 2.0.0

[GitHub] [airflow] ryw commented on a change in pull request #7553: [AIRFLOW-XXXX] Update LICENSE versions and remove old licenses

ryw commented on a change in pull request #7553: [AIRFLOW-XXXX] Update LICENSE versions and remove old licenses
URL: https://github.com/apache/airflow/pull/7553#discussion_r385171624

##
File path: LICENSE
##
@@ -229,36 +229,22 @@ MIT licenses
 The following components are provided under the MIT License. See project link for details.
 The text of each license is also included at licenses/LICENSE-[project].txt.

-(MIT License) jquery v2.1.4 (https://jquery.org/license/)
-(MIT License) dagre-d3 v0.6.1 (https://github.com/cpettitt/dagre-d3)
+(MIT License) jquery v3.4.1 (https://jquery.org/license/)
+(MIT License) dagre-d3 v0.8.5 (https://github.com/cpettitt/dagre-d3)

Review comment:
good catch, i was looking at current version of dagre rather than dagre-d3

[jira] [Commented] (AIRFLOW-6939) Executor configuration via import path

[ https://issues.apache.org/jira/browse/AIRFLOW-6939?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17046712#comment-17046712 ]

ASF GitHub Bot commented on AIRFLOW-6939:

mik-laj commented on pull request #7563: [AIRFLOW-6939] Executor configuration via import path
URL: https://github.com/apache/airflow/pull/7563

> Executor configuration via import path
>
> Key: AIRFLOW-6939
> URL: https://issues.apache.org/jira/browse/AIRFLOW-6939
> Project: Apache Airflow
> Issue Type: Improvement
> Components: executors
> Affects Versions: 1.10.9
> Reporter: Kamil Bregula
> Priority: Major
> Fix For: 2.0.0

[GitHub] [airflow] mik-laj merged pull request #7563: [AIRFLOW-6939] Executor configuration via import path

mik-laj merged pull request #7563: [AIRFLOW-6939] Executor configuration via import path
URL: https://github.com/apache/airflow/pull/7563

[GitHub] [airflow] mik-laj commented on a change in pull request #7565: [AIRFLOW-6941][WIP] Add queries count test for create_dagrun

mik-laj commented on a change in pull request #7565: [AIRFLOW-6941][WIP] Add queries count test for create_dagrun
URL: https://github.com/apache/airflow/pull/7565#discussion_r385172573

##
File path: tests/models/test_dag.py
##
@@ -1341,3 +1344,26 @@ class DAGsubclass(DAG):
         self.assertEqual(hash(dag_eq), hash(dag))
         self.assertNotEqual(hash(dag_diff_name), hash(dag))
         self.assertNotEqual(hash(dag_subclass), hash(dag))
+
+
+class TestPerformance(unittest.TestCase):

Review comment:
I don't think that's needed. These are very simple and small tests. In the future, when we introduce integration tests and tests for components, not just functions, we can do it. For me, this is a unit test, although it has slightly different assertions. Performance tests are tests that have metrics that can change in the environment. These tests do not change in the environment.
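
The point made in the comment above is that a queries-count test asserts on the number of SQL statements rather than on timing, so its result does not depend on the environment. A minimal sketch of that idea, assuming the standard-library `sqlite3` module in place of Airflow's database session; `count_queries` and the `dag_run` table are hypothetical names used only for illustration, not the helpers from the pull request:

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def count_queries(conn):
    """Collect every SQL statement executed on `conn` inside the with-block."""
    statements = []
    # sqlite3 invokes the trace callback once for each statement it executes.
    conn.set_trace_callback(statements.append)
    try:
        yield statements
    finally:
        conn.set_trace_callback(None)


# Autocommit mode (isolation_level=None), so no implicit BEGIN is issued.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE dag_run (dag_id TEXT, state TEXT)")
conn.execute("INSERT INTO dag_run VALUES ('example', 'running')")

with count_queries(conn) as statements:
    conn.execute("SELECT * FROM dag_run").fetchall()
    conn.execute("SELECT COUNT(*) FROM dag_run").fetchone()

query_count = len(statements)
```

A test built on this would assert that `query_count` stays at or below an expected number, which is a deterministic check and fits the description of a unit test with slightly different assertions.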

[jira] [Resolved] (AIRFLOW-6939) Executor configuration via import path

[ https://issues.apache.org/jira/browse/AIRFLOW-6939?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Kamil Bregula resolved AIRFLOW-6939.

Fix Version/s: 2.0.0
Resolution: Fixed

> Executor configuration via import path
>
> Key: AIRFLOW-6939
> URL: https://issues.apache.org/jira/browse/AIRFLOW-6939
> Project: Apache Airflow
> Issue Type: Improvement
> Components: executors
> Affects Versions: 1.10.9
> Reporter: Kamil Bregula
> Priority: Major
> Fix For: 2.0.0

[GitHub] [airflow] feluelle commented on issue #7536: [AIRFLOW-6918] don't use 'is' in if conditions comparing STATE

feluelle commented on issue #7536: [AIRFLOW-6918] don't use 'is' in if conditions comparing STATE
URL: https://github.com/apache/airflow/pull/7536#issuecomment-592005586

Can you try a rebase?

[GitHub] [airflow] tooptoop4 commented on issue #7536: [AIRFLOW-6918] don't use 'is' in if conditions comparing STATE

tooptoop4 commented on issue #7536: [AIRFLOW-6918] don't use 'is' in if conditions comparing STATE
URL: https://github.com/apache/airflow/pull/7536#issuecomment-592000630

@feluelle Any idea why Travis is unhappy? Maybe the current code (before my PR) was always evaluating to false.
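
The suspicion that the old comparison always evaluated to false fits how `is` behaves in Python: it tests object identity, not value. A minimal sketch, assuming plain strings for states; `RUNNING` and `is_running` are hypothetical names, not Airflow's `State` class:

```python
RUNNING = "running"


def is_running(state):
    """Compare by value, which is what a state check almost always means."""
    return state == RUNNING


# A state string built at runtime (for example, read from a database row)
# equals the constant but is usually a different object.
state_from_db = "".join(["run", "ning"])

equal_by_value = is_running(state_from_db)
# Identity check: typically False in CPython, an implementation detail.
same_object = state_from_db is RUNNING
```

Whether `is` happens to return `True` for two equal strings depends on interning, which is an interpreter detail, so `==` is the safer comparison for state values.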

[GitHub] [airflow] nuclearpinguin commented on a change in pull request #7565: [AIRFLOW-6941][WIP] Add queries count test for create_dagrun

nuclearpinguin commented on a change in pull request #7565: [AIRFLOW-6941][WIP] Add queries count test for create_dagrun
URL: https://github.com/apache/airflow/pull/7565#discussion_r385154503

##
File path: tests/models/test_dag.py
##
@@ -1341,3 +1344,26 @@ class DAGsubclass(DAG):
         self.assertEqual(hash(dag_eq), hash(dag))
         self.assertNotEqual(hash(dag_diff_name), hash(dag))
         self.assertNotEqual(hash(dag_subclass), hash(dag))
+
+
+class TestPerformance(unittest.TestCase):

Review comment:
I would like to suggest adding a new marker, `performance`. Then we don't have to run this test multiple times. What do you think @mik-laj?

[GitHub] [airflow] ashb commented on a change in pull request #7559: [AIRFLOW-6935] Pass the SchedulerJob configuration using the constructor

ashb commented on a change in pull request #7559: [AIRFLOW-6935] Pass the SchedulerJob configuration using the constructor
URL: https://github.com/apache/airflow/pull/7559#discussion_r385146211

##
File path: airflow/jobs/scheduler_job.py
##
@@ -991,10 +1057,13 @@ def is_alive(self, grace_multiplier=None):
         if grace_multiplier is not None:
             # Accept the same behaviour as superclass
             return super().is_alive(grace_multiplier=grace_multiplier)
-        scheduler_health_check_threshold = conf.getint('scheduler', 'scheduler_health_check_threshold')
+        # The object can be retrieved from the database, so it does not contain all the attributes.
+        self.scheduler_health_check_threshold = conf.getint(

Review comment:
None of that affects that you have created a `scheduler_health_check_threshold` kwarg to the constructor that doesn't ever do anything, but that looks like it does.
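
The objection is that the value passed to the constructor gets overwritten by a fresh configuration lookup, so the kwarg has no effect. A minimal sketch of one way to make such a kwarg take effect, assuming a plain dict in place of Airflow's `conf`; `SchedulerJobSketch` and the default of 30 are hypothetical, and the sketch does not handle the case mentioned in the diff where the object is loaded from the database without running the constructor:

```python
class SchedulerJobSketch:
    """Hypothetical stand-in for a job class; not Airflow's SchedulerJob."""

    def __init__(self, scheduler_health_check_threshold=None, config=None):
        config = config if config is not None else {}
        if scheduler_health_check_threshold is None:
            # Fall back to configuration only when the caller gave no value.
            scheduler_health_check_threshold = int(
                config.get("scheduler_health_check_threshold", 30)
            )
        self.scheduler_health_check_threshold = scheduler_health_check_threshold

    def is_alive(self, seconds_since_heartbeat):
        # Read the stored attribute instead of re-reading configuration,
        # so the constructor argument actually takes effect.
        return seconds_since_heartbeat < self.scheduler_health_check_threshold


job = SchedulerJobSketch(
    scheduler_health_check_threshold=5,
    config={"scheduler_health_check_threshold": 60},
)
```

Here the explicit argument wins over the configured value, and the configuration is consulted only as a fallback.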

[GitHub] [airflow] zhongjiajie commented on issue #7148: [AIRFLOW-6472] Correct short option in cli

zhongjiajie commented on issue #7148: [AIRFLOW-6472] Correct short option in cli
URL: https://github.com/apache/airflow/pull/7148#issuecomment-591988120

@potiuk and @mik-laj CI is green, could you please review this PR? Don't forget we still have an open discussion in https://github.com/apache/airflow/pull/7148#issuecomment-591951660

[GitHub] [airflow] nuclearpinguin commented on a change in pull request #6576: [AIRFLOW-5922] Add option to specify the mysql client library used in MySqlHook

nuclearpinguin commented on a change in pull request #6576: [AIRFLOW-5922] Add option to specify the mysql client library used in MySqlHook
URL: https://github.com/apache/airflow/pull/6576#discussion_r385135203

##
File path: airflow/providers/mysql/hooks/mysql.py
##
@@ -113,8 +107,44 @@ def get_conn(self):
             conn_config['unix_socket'] = conn.extra_dejson['unix_socket']
         if local_infile:
             conn_config["local_infile"] = 1
-        conn = MySQLdb.connect(**conn_config)
-        return conn
+        return conn_config
+
+    def _get_conn_config_mysql_connector_python(self, conn):
+        conn_config = {
+            'user': conn.login,
+            'password': conn.password or '',
+            'host': conn.host or 'localhost',
+            'database': self.schema or conn.schema or '',
+            'port': int(conn.port) if conn.port else 3306
+        }
+
+        if conn.extra_dejson.get('allow_local_infile', False):
+            conn_config["allow_local_infile"] = True
+
+        return conn_config
+
+    def get_conn(self):
+        """
+        Establishes a connection to a mysql database
+        by extracting the connection configuration from the Airflow connection.
+
+        .. note:: By default it connects to the database via the mysqlclient library.
+            But you can also choose the mysql-connector-python library which lets you connect through ssl
+            without any further ssl parameters required.
+
+        :return: a mysql connection object
+        """
+        conn = self.connection or self.get_connection(self.mysql_conn_id)  # pylint: disable=no-member

Review comment:
Why `#no-member` here?
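
To see what the new `_get_conn_config_mysql_connector_python` helper in the diff produces, the same logic can be exercised outside Airflow. A minimal sketch, assuming a `SimpleNamespace` stands in for an Airflow connection; `build_connector_config` is a hypothetical free-function copy of the method shown above, and no database driver is imported or called:

```python
from types import SimpleNamespace


def build_connector_config(conn, schema=None):
    """Build keyword arguments for mysql-connector-python from a connection record."""
    config = {
        "user": conn.login,
        "password": conn.password or "",
        "host": conn.host or "localhost",
        "database": schema or conn.schema or "",
        "port": int(conn.port) if conn.port else 3306,
    }
    if conn.extra_dejson.get("allow_local_infile", False):
        config["allow_local_infile"] = True
    return config


# Stand-in for an Airflow connection with only the attributes used above.
conn = SimpleNamespace(
    login="airflow",
    password=None,
    host=None,
    schema="reports",
    port=None,
    extra_dejson={"allow_local_infile": True},
)
config = build_connector_config(conn)
```

Missing password, host and port fall back to an empty string, `localhost` and 3306 respectively, matching the defaults in the diff.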

[GitHub] [airflow] feluelle commented on a change in pull request #7541: [AIRFLOW-6822] AWS hooks should cache boto3 client

feluelle commented on a change in pull request #7541: [AIRFLOW-6822] AWS hooks should cache boto3 client
URL: https://github.com/apache/airflow/pull/7541#discussion_r385142816

##
File path: tests/providers/amazon/aws/operators/test_ecs.py
##
@@ -48,12 +48,10 @@
     ]
 }
-
+# pylint: disable=unused-argument
+@mock.patch('airflow.providers.amazon.aws.operators.ecs.AwsBaseHook')

Review comment:
That's weird. It should work.
1. `@mock.patch('airflow.providers.amazon.aws.operators.ecs.AwsBaseHook')`
2. `self.aws_hook_mock = aws_hook_mock`
3. Access the mocked hook `self.aws_hook_mock` in the tests
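
The three steps above can be sketched with the standard library alone. This is an illustration under assumptions, not the test file from the pull request: `ecs_module`, `RealHook` and `OperatorSketch` are hypothetical stand-ins, and `mock.patch.object` is used in place of the string-based `mock.patch` target so that the example is self-contained.

```python
import unittest
from types import SimpleNamespace
from unittest import mock


class RealHook:
    def __init__(self, aws_conn_id):
        raise RuntimeError("would contact AWS")


# Stand-in for the module that the operator imports its hook from.
ecs_module = SimpleNamespace(AwsBaseHook=RealHook)


class OperatorSketch:
    def __init__(self, aws_conn_id):
        # Looks the hook class up on the module at call time, so a patch applies.
        self.hook = ecs_module.AwsBaseHook(aws_conn_id=aws_conn_id)


class TestOperatorSketch(unittest.TestCase):
    @mock.patch.object(ecs_module, "AwsBaseHook")  # step 1: patch the hook
    def setUp(self, aws_hook_mock):
        self.aws_hook_mock = aws_hook_mock  # step 2: keep a reference
        self.operator = OperatorSketch(aws_conn_id="aws_default")

    def test_hook_is_mocked(self):  # step 3: use the mock in a test
        self.aws_hook_mock.assert_called_once_with(aws_conn_id="aws_default")
        self.assertIs(self.operator.hook, self.aws_hook_mock.return_value)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestOperatorSketch)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Note that a patch applied to `setUp` is active only while `setUp` runs, so this pattern works when the object under test is created there. Test methods then take no extra mock argument, which avoids the unused-argument warnings discussed in the review.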

[GitHub] [airflow] codecov-io commented on issue #7547: [AIRFLOW-6926] Fix Google Tasks operators return types and idempotency

codecov-io commented on issue #7547: [AIRFLOW-6926] Fix Google Tasks operators return types and idempotency
URL: https://github.com/apache/airflow/pull/7547#issuecomment-591984953

# [Codecov](https://codecov.io/gh/apache/airflow/pull/7547?src=pr=h1) Report
> Merging [#7547](https://codecov.io/gh/apache/airflow/pull/7547?src=pr=desc) into [master](https://codecov.io/gh/apache/airflow/commit/bb552b2d9fd595cc3eb1b3a2f637f29b814878d7?src=pr=desc) will **decrease** coverage by `0.31%`.
> The diff coverage is `92.85%`.

```diff
@@            Coverage Diff             @@
##           master    #7547      +/-   ##
==========================================
- Coverage   86.86%   86.54%   -0.32%
==========================================
  Files         896      896
  Lines       42638    42651      +13
==========================================
- Hits        37036    36912     -124
- Misses       5602     5739     +137
```

| [Impacted Files](https://codecov.io/gh/apache/airflow/pull/7547?src=pr=tree) | Coverage Δ | |
|---|---|---|
| [...oviders/google/cloud/example\_dags/example\_tasks.py](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvZ29vZ2xlL2Nsb3VkL2V4YW1wbGVfZGFncy9leGFtcGxlX3Rhc2tzLnB5) | `100% <100%> (ø)` | :arrow_up: |
| [airflow/providers/google/cloud/operators/tasks.py](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvZ29vZ2xlL2Nsb3VkL29wZXJhdG9ycy90YXNrcy5weQ==) | `99.14% <92.59%> (-0.86%)` | :arrow_down: |
| [airflow/kubernetes/volume\_mount.py](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3ZvbHVtZV9tb3VudC5weQ==) | `44.44% <0%> (-55.56%)` | :arrow_down: |
| [airflow/kubernetes/volume.py](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3ZvbHVtZS5weQ==) | `52.94% <0%> (-47.06%)` | :arrow_down: |
| [airflow/kubernetes/pod\_launcher.py](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3BvZF9sYXVuY2hlci5weQ==) | `47.18% <0%> (-45.08%)` | :arrow_down: |
| [...viders/cncf/kubernetes/operators/kubernetes\_pod.py](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree#diff-YWlyZmxvdy9wcm92aWRlcnMvY25jZi9rdWJlcm5ldGVzL29wZXJhdG9ycy9rdWJlcm5ldGVzX3BvZC5weQ==) | `69.69% <0%> (-25.26%)` | :arrow_down: |
| [airflow/kubernetes/refresh\_config.py](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree#diff-YWlyZmxvdy9rdWJlcm5ldGVzL3JlZnJlc2hfY29uZmlnLnB5) | `50.98% <0%> (-23.53%)` | :arrow_down: |
| [airflow/utils/helpers.py](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9oZWxwZXJzLnB5) | `71.31% <0%> (-11.3%)` | :arrow_down: |
| [airflow/utils/dag\_processing.py](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree#diff-YWlyZmxvdy91dGlscy9kYWdfcHJvY2Vzc2luZy5weQ==) | `86.51% <0%> (-1.83%)` | :arrow_down: |
| [airflow/models/\_\_init\_\_.py](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree#diff-YWlyZmxvdy9tb2RlbHMvX19pbml0X18ucHk=) | `90.9% <0%> (-0.4%)` | :arrow_down: |
| ... and [22 more](https://codecov.io/gh/apache/airflow/pull/7547/diff?src=pr=tree-more) | | |

[Continue to review full report at Codecov](https://codecov.io/gh/apache/airflow/pull/7547?src=pr=continue).
> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)
> `Δ = absolute (impact)`, `ø = not affected`, `? = missing data`
> Powered by [Codecov](https://codecov.io/gh/apache/airflow/pull/7547?src=pr=footer). Last update [bb552b2...2a1df2a](https://codecov.io/gh/apache/airflow/pull/7547?src=pr=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

[GitHub] [airflow] feluelle commented on a change in pull request #7541: [AIRFLOW-6822] AWS hooks should cache boto3 client

feluelle commented on a change in pull request #7541: [AIRFLOW-6822] AWS hooks should cache boto3 client
URL: https://github.com/apache/airflow/pull/7541#discussion_r385139258

##
File path: tests/providers/amazon/aws/operators/test_ecs.py
##
@@ -171,7 +166,7 @@ def test_execute_with_failures(self):
             }
         )

-    def test_wait_end_tasks(self):
+    def test_wait_end_tasks(self, aws_hook_mock):

Review comment:
Okay, but can you please then add the pylint disable rule only to these lines, so that other unused arguments won't be disabled, too.

[GitHub] [airflow] ashb commented on a change in pull request #7563: [AIRFLOW-6939] Executor configuration via import path

ashb commented on a change in pull request #7563: [AIRFLOW-6939] Executor configuration via import path
URL: https://github.com/apache/airflow/pull/7563#discussion_r385131154

##
File path: UPDATING.md
##
@@ -61,6 +61,29 @@ https://developers.google.com/style/inclusive-documentation

 -->

+### Custom executors is loaded using full import path
+
+In previous versions of Airflow it was possible to use plugins to load custom executors. It is still
+possible, but the configuration has changed. Now you don't have to create a plugin to configure a
+custom executor, but you need to provide the full path to the module in the `executor` option
+in the `core` section. The purpose of this change is to simplify the plugin mechanism and make
+it easier to configure executor.
+
+If your module was in the path `my_acme_company.executors.MyCustomExecutor` and the plugin was
+called `my_plugin` then your configuration looks like this
+
+```ini
+[core]
+executor = my_plguin.MyCustomExecutor

Review comment:
Oh wow missed that one totally!
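
The UPDATING.md text in the diff describes setting `executor` to a full import path. Resolving such a path to a class can be sketched as follows; this is a minimal illustration using only `importlib`, with `load_class` as a hypothetical helper name, and it is not Airflow's own loader:

```python
from importlib import import_module


def load_class(dotted_path):
    """Import `package.module.ClassName` and return the class object."""
    module_path, _, class_name = dotted_path.rpartition(".")
    if not module_path:
        raise ImportError(f"{dotted_path!r} is not a full import path")
    module = import_module(module_path)
    try:
        return getattr(module, class_name)
    except AttributeError as err:
        raise ImportError(
            f"{module_path!r} has no attribute {class_name!r}"
        ) from err


# A standard-library class stands in for a custom executor here.
executor_cls = load_class("collections.OrderedDict")
```

With a value such as `my_acme_company.executors.MyCustomExecutor`, the part before the last dot is imported as a module and the final component is looked up on it, so a misspelled path fails with an `ImportError`.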

[GitHub] [airflow] feluelle commented on issue #6576: [AIRFLOW-5922] Add option to specify the mysql client library used in MySqlHook

feluelle commented on issue #6576: [AIRFLOW-5922] Add option to specify the mysql client library used in MySqlHook URL: https://github.com/apache/airflow/pull/6576#issuecomment-591974065 It is green

[GitHub] [airflow] ashb commented on a change in pull request #7437: [AIRFLOW-2325] Add CloudwatchTaskHandler option for remote task loggi…

ashb commented on a change in pull request #7437: [AIRFLOW-2325] Add

CloudwatchTaskHandler option for remote task loggi…

URL: https://github.com/apache/airflow/pull/7437#discussion_r385123141

##

File path: airflow/utils/log/cloudwatch_task_handler.py

##

@@ -0,0 +1,114 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+import watchtower

+from cached_property import cached_property

+

+from airflow.configuration import conf

+from airflow.utils.log.file_task_handler import FileTaskHandler

+from airflow.utils.log.logging_mixin import LoggingMixin

+

+

+class CloudwatchTaskHandler(FileTaskHandler, LoggingMixin):

+"""

+CloudwatchTaskHandler is a python log handler that handles and reads task instance logs.

+

+It extends airflow FileTaskHandler and uploads to and reads from Cloudwatch.

+

+:param base_log_folder: base folder to store logs locally

+:type base_log_folder: str

+:param log_group_arn: ARN of the Cloudwatch log group for remote log storage

+:type log_group_arn: str

+:param filename_template: template for file name (local storage) or log stream name (remote)

+:type filename_template: str

+"""

+def __init__(self, base_log_folder, log_group_arn, filename_template):

+super().__init__(base_log_folder, filename_template)

+split_arn = log_group_arn.split(':')

Review comment:

Could you add an example ARN here in a comment? Makes it easier to

tell/sanity check what the 4th and 7th parts are.
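To make the request concrete, here is a small sketch of what splitting a CloudWatch log group ARN on `:` yields. The ARN below is made up for illustration (region, account id and group name are assumptions), and the variable names other than `split_arn` are not taken from the PR.

```python
# Hypothetical example ARN; the region, account id and group name are invented.
EXAMPLE_ARN = "arn:aws:logs:us-east-1:123456789012:log-group:airflow-task-logs:*"

split_arn = EXAMPLE_ARN.split(":")
# index: 0=arn 1=partition 2=service 3=region 4=account 5=resource type 6=name 7=suffix
region_name = split_arn[3]  # the 4th part
log_group = split_arn[6]    # the 7th part

print(region_name)
print(log_group)
```

With an ARN of this shape, the 4th part is the region and the 7th part is the log group name.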


[GitHub] [airflow] ashb commented on a change in pull request #7437: [AIRFLOW-2325] Add CloudwatchTaskHandler option for remote task loggi…

ashb commented on a change in pull request #7437: [AIRFLOW-2325] Add CloudwatchTaskHandler option for remote task loggi… URL: https://github.com/apache/airflow/pull/7437#discussion_r382901838 ## File path: setup.py ## @@ -157,6 +157,7 @@ def write_version(filename: str = os.path.join(*["airflow", "git_version"])): ] aws = [ 'boto3~=1.10', +'watchtower>=0.7.3', Review comment: `~=0.7.3` please
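The difference between `>=0.7.3` and `~=0.7.3` is that the latter (the "compatible release" operator) also caps the version at the `0.7` series. The sketch below approximates that rule for purely numeric versions using only the standard library; it is a simplification for illustration, not how pip evaluates specifiers, and both function names are hypothetical.

```python
def parse(version: str) -> tuple:
    """Parse a purely numeric dotted version such as '0.7.3' (simplified)."""
    return tuple(int(part) for part in version.split("."))


def matches_compatible_release(candidate: str, spec: str) -> bool:
    """Approximate `~=spec`: at least `spec`, and the same prefix up to the last component."""
    cand, base = parse(candidate), parse(spec)
    return cand >= base and cand[: len(base) - 1] == base[:-1]


for version in ("0.7.3", "0.7.9", "0.8.0", "1.0.0"):
    print(version, matches_compatible_release(version, "0.7.3"))
```

Under this rule `0.7.9` is accepted while `0.8.0` and `1.0.0` are not, whereas a plain `>=0.7.3` would accept all of them.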

[GitHub] [airflow] ashb commented on a change in pull request #7437: [AIRFLOW-2325] Add CloudwatchTaskHandler option for remote task loggi…

ashb commented on a change in pull request #7437: [AIRFLOW-2325] Add

CloudwatchTaskHandler option for remote task loggi…

URL: https://github.com/apache/airflow/pull/7437#discussion_r385128092

##

File path: docs/howto/write-logs.rst

##

@@ -115,6 +115,29 @@ To configure it, you must additionally set the endpoint url to point to your loc

You can do this via the Connection Extra ``host`` field.

For example, ``{"host": "http://localstack:4572"}``

+.. _write-logs-amazon-cloudwatch:

+

+Writing Logs to Amazon Cloudwatch

+---------------------------------

+

+

+Enabling remote logging

+'''''''''''''''''''''''

+

+To enable this feature, ``airflow.cfg`` must be configured as follows:

+

+.. code-block:: ini

+

+[logging]

+# Airflow can store logs remotely in AWS Cloudwatch. Users must supply a log group

+# ARN (starting with 'cloudwatch://...') and an Airflow connection

+# id that provides write and read access to the log location.

+remote_logging = True

+remote_base_log_folder = cloudwatch://arn:aws:logs:::log-group::*

Review comment:

What is the `*` on the end here for? What other possible values would it

have?


[GitHub] [airflow] boring-cyborg[bot] commented on issue #7571: [AIRFLOW-XXXX] Fix typos in best-practices.rst, yandexcloud.rst, and concepts.rst

boring-cyborg[bot] commented on issue #7571: [AIRFLOW-XXXX] Fix typos in best-practices.rst, yandexcloud.rst, and concepts.rst URL: https://github.com/apache/airflow/pull/7571#issuecomment-591972889 Awesome work, congrats on your first merged pull request!

[GitHub] [airflow] kaxil merged pull request #7571: [AIRFLOW-XXXX] Fix typos in best-practices.rst, yandexcloud.rst, and concepts.rst

kaxil merged pull request #7571: [AIRFLOW-XXXX] Fix typos in best-practices.rst, yandexcloud.rst, and concepts.rst URL: https://github.com/apache/airflow/pull/7571

[GitHub] [airflow] ryanahamilton opened a new pull request #7571: [AIRFLOW-XXXX] Fix typos in best-practices.rst, yandexcloud.rst, and concepts.rst

ryanahamilton opened a new pull request #7571: [AIRFLOW-] Fix typos in best-practices.rst, yandexcloud.rst, and concepts.rst URL: https://github.com/apache/airflow/pull/7571 --- Issue link: WILL BE INSERTED BY [boring-cyborg](https://github.com/kaxil/boring-cyborg) Make sure to mark the boxes below before creating PR: [x] - [x] Description above provides context of the change - [x] Commit message/PR title starts with `[AIRFLOW-]`. AIRFLOW- = JIRA ID* - [x] Unit tests coverage for changes (not needed for documentation changes) - [x] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)" - [x] Relevant documentation is updated including usage instructions. - [x] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example). * For document-only changes commit message can start with `[AIRFLOW-]`. --- In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed. In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x). In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/master/UPDATING.md). Read the [Pull Request Guidelines](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#pull-request-guidelines) for more information. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org With regards, Apache Git Services

[GitHub] [airflow] boring-cyborg[bot] commented on issue #7571: [AIRFLOW-XXXX] Fix typos in best-practices.rst, yandexcloud.rst, and concepts.rst

boring-cyborg[bot] commented on issue #7571: [AIRFLOW-XXXX] Fix typos in best-practices.rst, yandexcloud.rst, and concepts.rst URL: https://github.com/apache/airflow/pull/7571#issuecomment-591972078 Congratulations on your first Pull Request and welcome to the Apache Airflow community! If you have any issues or are unsure about anything please check our Contribution Guide (https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst) Here are some useful points: - Pay attention to the quality of your code (flake8, pylint and type annotations). Our [pre-commits](https://github.com/apache/airflow/blob/master/STATIC_CODE_CHECKS.rst#prerequisites-for-pre-commit-hooks) will help you with that. - In case of a new feature add useful documentation (in docstrings or in `docs/` directory). Adding a new operator? Check this short [guide](https://github.com/apache/airflow/blob/master/docs/howto/custom-operator.rst) Consider adding an example DAG that shows how users should use it. - Consider using [Breeze environment](https://github.com/apache/airflow/blob/master/BREEZE.rst) for testing locally, it’s a heavy docker but it ships with a working Airflow and a lot of integrations. - Be patient and persistent. It might take some time to get a review or get the final approval from Committers. - Be sure to read the [Airflow Coding style](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#coding-style-and-best-practices). Apache Airflow is a community-driven project and together we are making it better. In case of doubts contact the developers at: Mailing List: d...@airflow.apache.org Slack: https://apache-airflow-slack.herokuapp.com/

[jira] [Assigned] (AIRFLOW-6768) Graph view rendering angular edges

[ https://issues.apache.org/jira/browse/AIRFLOW-6768?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Nathan Hadfield reassigned AIRFLOW-6768: Assignee: Ry Walker > Graph view rendering angular edges > -- > > Key: AIRFLOW-6768 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6768 > Project: Apache Airflow > Issue Type: Bug > Components: ui >Affects Versions: 1.10.8, 1.10.9 >Reporter: Nathan Hadfield >Assignee: Ry Walker >Priority: Minor > Fix For: 2.0.0, 1.10.10 > > Attachments: Screenshot 2020-02-10 at 08.51.02.png, Screenshot > 2020-02-10 at 08.51.20.png > > > Since the release of v1.10.8 the DAG graph view is rendering the edges > between nodes with angular lines rather than nice smooth curves. > Seems to have been caused by a bump of dagre-d3. > [https://github.com/apache/airflow/pull/7280] > [https://github.com/dagrejs/dagre-d3/issues/305] > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Assigned] (AIRFLOW-6768) Graph view rendering angular edges

[ https://issues.apache.org/jira/browse/AIRFLOW-6768?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Nathan Hadfield reassigned AIRFLOW-6768: Assignee: (was: Nathan Hadfield) > Graph view rendering angular edges > -- > > Key: AIRFLOW-6768 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6768 > Project: Apache Airflow > Issue Type: Bug > Components: ui >Affects Versions: 1.10.8, 1.10.9 >Reporter: Nathan Hadfield >Priority: Minor > Fix For: 2.0.0, 1.10.10 > > Attachments: Screenshot 2020-02-10 at 08.51.02.png, Screenshot > 2020-02-10 at 08.51.20.png > > > Since the release of v1.10.8 the DAG graph view is rendering the edges > between nodes with angular lines rather than nice smooth curves. > Seems to have been caused by a bump of dagre-d3. > [https://github.com/apache/airflow/pull/7280] > [https://github.com/dagrejs/dagre-d3/issues/305] > > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [airflow] mik-laj commented on a change in pull request #7559: [AIRFLOW-6935] Pass the SchedulerJob configuration using the constructor

mik-laj commented on a change in pull request #7559: [AIRFLOW-6935] Pass the

SchedulerJob configuration using the constructor

URL: https://github.com/apache/airflow/pull/7559#discussion_r385123975

##

File path: airflow/jobs/scheduler_job.py

##

@@ -991,10 +1057,13 @@ def is_alive(self, grace_multiplier=None):

if grace_multiplier is not None:

# Accept the same behaviour as superclass

return super().is_alive(grace_multiplier=grace_multiplier)

-scheduler_health_check_threshold = conf.getint('scheduler', 'scheduler_health_check_threshold')

+# The object can be retrieved from the database, so it does not contain all the attributes.

+self.scheduler_health_check_threshold = conf.getint(

Review comment:

Combining these two aspects in one class is also hell with cyclical imports.


[GitHub] [airflow] mik-laj commented on a change in pull request #7559: [AIRFLOW-6935] Pass the SchedulerJob configuration using the constructor

mik-laj commented on a change in pull request #7559: [AIRFLOW-6935] Pass the

SchedulerJob configuration using the constructor

URL: https://github.com/apache/airflow/pull/7559#discussion_r385123579

##

File path: airflow/jobs/scheduler_job.py

##

@@ -991,10 +1057,13 @@ def is_alive(self, grace_multiplier=None):

if grace_multiplier is not None:

# Accept the same behaviour as superclass

return super().is_alive(grace_multiplier=grace_multiplier)

-scheduler_health_check_threshold = conf.getint('scheduler', 'scheduler_health_check_threshold')

+# The object can be retrieved from the database, so it does not contain all the attributes.

+self.scheduler_health_check_threshold = conf.getint(

Review comment:

The problem is that we use database entities as building blocks of the application, not only as a place to store data. In most cases, however, SchedulerJob is an application-logic object, and only in rare cases a database object. I would like to separate the two, but to do that most of the code must first use the class correctly, i.e. pass configuration parameters through the constructor. Once these two aspects are split in the code, changes become much easier; for example, we could replace SQLAlchemy with Redis.
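The separation described in this comment can be pictured with a small sketch. Everything here is hypothetical (the class names, fields and parameters are invented for illustration and are not Airflow's API): one object holds only persisted state, the other holds application logic and receives its configuration through the constructor.

```python
from dataclasses import dataclass


@dataclass
class JobRecord:
    """Hypothetical persisted state: only what would be stored in the database."""
    job_id: int
    state: str = "running"


class SchedulerLogic:
    """Hypothetical application-logic object; configuration arrives via the constructor."""

    def __init__(self, record: JobRecord, num_runs: int, heartbeat_sec: float):
        self.record = record
        self.num_runs = num_runs
        self.heartbeat_sec = heartbeat_sec

    def describe(self) -> str:
        return f"job {self.record.job_id}: {self.num_runs} runs, heartbeat every {self.heartbeat_sec}s"


scheduler = SchedulerLogic(JobRecord(job_id=1), num_runs=5, heartbeat_sec=2.5)
print(scheduler.describe())
```

Because `SchedulerLogic` never reads global configuration or touches the storage layer directly, swapping the backing store would only affect how `JobRecord` is loaded and saved.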


[GitHub] [airflow] ashb commented on a change in pull request #7492: [AIRFLOW-6871] optimize tree view for large DAGs

ashb commented on a change in pull request #7492: [AIRFLOW-6871] optimize tree

view for large DAGs

URL: https://github.com/apache/airflow/pull/7492#discussion_r385121966

##

File path: airflow/www/views.py

##

@@ -1374,90 +1376,115 @@ def tree(self):

.all()

)

dag_runs = {

-dr.execution_date: alchemy_to_dict(dr) for dr in dag_runs}

+dr.execution_date: alchemy_to_dict(dr) for dr in dag_runs

+}

dates = sorted(list(dag_runs.keys()))

max_date = max(dates) if dates else None

min_date = min(dates) if dates else None

tis = dag.get_task_instances(start_date=min_date, end_date=base_date)

-task_instances = {}

+task_instances: Dict[Tuple[str, datetime], models.TaskInstance] = {}

for ti in tis:

-tid = alchemy_to_dict(ti)

-dr = dag_runs.get(ti.execution_date)

-tid['external_trigger'] = dr['external_trigger'] if dr else False

-task_instances[(ti.task_id, ti.execution_date)] = tid

+task_instances[(ti.task_id, ti.execution_date)] = ti

-expanded = []

+expanded = set()

# The default recursion traces every path so that tree view has full

# expand/collapse functionality. After 5,000 nodes we stop and fall

# back on a quick DFS search for performance. See PR #320.

-node_count = [0]

+node_count = 0

node_limit = 5000 / max(1, len(dag.leaves))

+def encode_ti(ti: Optional[models.TaskInstance]) -> Optional[List]:

+if not ti:

+return None

+

+# NOTE: order of entry is important here because client JS relies on it for

+# tree node reconstruction. Remember to change JS code in tree.html

+# whenever order is altered.

+data = [

+ti.state,

+ti.try_number,

+None, # start_ts

+None, # duration

+]

+

+if ti.start_date:

+# round to seconds to reduce payload size

+data[2] = int(ti.start_date.timestamp())

+if ti.duration is not None:

+data[3] = int(ti.duration)

+

+return data

+

def recurse_nodes(task, visited):

+nonlocal node_count

+node_count += 1

visited.add(task)

-node_count[0] += 1

-

-children = [

-recurse_nodes(t, visited) for t in task.downstream_list

-if node_count[0] < node_limit or t not in visited]

-

-# D3 tree uses children vs _children to define what is

-# expanded or not. The following block makes it such that

-# repeated nodes are collapsed by default.

-children_key = 'children'

-if task.task_id not in expanded:

-expanded.append(task.task_id)

-elif children:

-children_key = "_children"

-

-def set_duration(tid):

-if (isinstance(tid, dict) and tid.get("state") == State.RUNNING and

-tid["start_date"] is not None):

-d = timezone.utcnow() - timezone.parse(tid["start_date"])

-tid["duration"] = d.total_seconds()

-return tid

-

-return {

+task_id = task.task_id

+

+node = {

'name': task.task_id,

'instances': [

-set_duration(task_instances.get((task.task_id, d))) or {

-'execution_date': d.isoformat(),

-'task_id': task.task_id

-}

-for d in dates],

-children_key: children,

+encode_ti(task_instances.get((task_id, d)))

+for d in dates

+],

'num_dep': len(task.downstream_list),

'operator': task.task_type,

'retries': task.retries,

'owner': task.owner,

-'start_date': task.start_date,

-'end_date': task.end_date,

-'depends_on_past': task.depends_on_past,

'ui_color': task.ui_color,

-'extra_links': task.extra_links,

}

+if task.downstream_list:

+children = [

+recurse_nodes(t, visited) for t in task.downstream_list

+if node_count < node_limit or t not in visited]

+

+# D3 tree uses children vs _children to define what is

+# expanded or not. The following block makes it such that

+# repeated nodes are collapsed by default.

+if task.task_id not in expanded:

+children_key = 'children'

+expanded.add(task.task_id)

+else:

+

[GitHub] [airflow] ashb commented on a change in pull request #7492: [AIRFLOW-6871] optimize tree view for large DAGs

ashb commented on a change in pull request #7492: [AIRFLOW-6871] optimize tree

view for large DAGs

URL: https://github.com/apache/airflow/pull/7492#discussion_r385121446

##

File path: airflow/www/views.py

##

@@ -1371,90 +1374,115 @@ def tree(self):

.all()

)

dag_runs = {

-dr.execution_date: alchemy_to_dict(dr) for dr in dag_runs}

+dr.execution_date: alchemy_to_dict(dr) for dr in dag_runs

+}

dates = sorted(list(dag_runs.keys()))

max_date = max(dates) if dates else None

min_date = min(dates) if dates else None

tis = dag.get_task_instances(start_date=min_date, end_date=base_date)

-task_instances = {}

+task_instances: Dict[Tuple[str, datetime], models.TaskInstance] = {}

for ti in tis:

-tid = alchemy_to_dict(ti)

-dr = dag_runs.get(ti.execution_date)

-tid['external_trigger'] = dr['external_trigger'] if dr else False

-task_instances[(ti.task_id, ti.execution_date)] = tid

+task_instances[(ti.task_id, ti.execution_date)] = ti

-expanded = []

+expanded = set()

# The default recursion traces every path so that tree view has full

# expand/collapse functionality. After 5,000 nodes we stop and fall

# back on a quick DFS search for performance. See PR #320.

-node_count = [0]

+node_count = 0

node_limit = 5000 / max(1, len(dag.leaves))

+def encode_ti(ti: Optional[models.TaskInstance]) -> Optional[List]:

+if not ti:

+return None

+

+# NOTE: order of entry is important here because client JS relies on it for

+# tree node reconstruction. Remember to change JS code in tree.html

+# whenever order is altered.

+data = [

+ti.state,

+ti.try_number,

+None, # start_ts

+None, # duration

+]

+

+if ti.start_date:

+# round to seconds to reduce payload size

+data[2] = int(ti.start_date.timestamp())

+if ti.duration is not None:

+data[3] = int(ti.duration)

+

+return data

+

def recurse_nodes(task, visited):

+nonlocal node_count

+node_count += 1

visited.add(task)

-node_count[0] += 1

-

-children = [

-recurse_nodes(t, visited) for t in task.downstream_list

-if node_count[0] < node_limit or t not in visited]

-

-# D3 tree uses children vs _children to define what is

-# expanded or not. The following block makes it such that

-# repeated nodes are collapsed by default.

-children_key = 'children'

-if task.task_id not in expanded:

-expanded.append(task.task_id)

-elif children:

-children_key = "_children"

-

-def set_duration(tid):

-if (isinstance(tid, dict) and tid.get("state") == State.RUNNING and

-tid["start_date"] is not None):

-d = timezone.utcnow() - timezone.parse(tid["start_date"])

-tid["duration"] = d.total_seconds()

-return tid

-

-return {

+task_id = task.task_id

+

+node = {

'name': task.task_id,

'instances': [

-set_duration(task_instances.get((task.task_id, d))) or {

-'execution_date': d.isoformat(),

-'task_id': task.task_id

-}

-for d in dates],

-children_key: children,

+encode_ti(task_instances.get((task_id, d)))

+for d in dates

+],

'num_dep': len(task.downstream_list),

'operator': task.task_type,

'retries': task.retries,

'owner': task.owner,

-'start_date': task.start_date,

-'end_date': task.end_date,

-'depends_on_past': task.depends_on_past,

'ui_color': task.ui_color,

-'extra_links': task.extra_links,

}

+if task.downstream_list:

+children = [

+recurse_nodes(t, visited) for t in task.downstream_list

+if node_count < node_limit or t not in visited]

+

+# D3 tree uses children vs _children to define what is

+# expanded or not. The following block makes it such that

+# repeated nodes are collapsed by default.

+if task.task_id not in expanded:

+children_key = 'children'

+expanded.add(task.task_id)

+else:

+

[GitHub] [airflow] ashb commented on a change in pull request #7492: [AIRFLOW-6871] optimize tree view for large DAGs

ashb commented on a change in pull request #7492: [AIRFLOW-6871] optimize tree

view for large DAGs

URL: https://github.com/apache/airflow/pull/7492#discussion_r385120657

##

File path: airflow/www/views.py

##

@@ -1374,90 +1376,115 @@ def tree(self):

.all()

)

dag_runs = {

-dr.execution_date: alchemy_to_dict(dr) for dr in dag_runs}

+dr.execution_date: alchemy_to_dict(dr) for dr in dag_runs

+}

dates = sorted(list(dag_runs.keys()))

max_date = max(dates) if dates else None

min_date = min(dates) if dates else None

tis = dag.get_task_instances(start_date=min_date, end_date=base_date)

-task_instances = {}

+task_instances: Dict[Tuple[str, datetime], models.TaskInstance] = {}

for ti in tis:

-tid = alchemy_to_dict(ti)

-dr = dag_runs.get(ti.execution_date)

-tid['external_trigger'] = dr['external_trigger'] if dr else False

-task_instances[(ti.task_id, ti.execution_date)] = tid

+task_instances[(ti.task_id, ti.execution_date)] = ti

-expanded = []

+expanded = set()

# The default recursion traces every path so that tree view has full

# expand/collapse functionality. After 5,000 nodes we stop and fall

# back on a quick DFS search for performance. See PR #320.

-node_count = [0]

+node_count = 0

node_limit = 5000 / max(1, len(dag.leaves))

+def encode_ti(ti: Optional[models.TaskInstance]) -> Optional[List]:

+if not ti:

+return None

+

+# NOTE: order of entry is important here because client JS relies on it for

+# tree node reconstruction. Remember to change JS code in tree.html

+# whenever order is altered.

+data = [

+ti.state,

+ti.try_number,

+None, # start_ts

+None, # duration

+]

+

+if ti.start_date:

+# round to seconds to reduce payload size

+data[2] = int(ti.start_date.timestamp())

+if ti.duration is not None:

+data[3] = int(ti.duration)

+

+return data

+

def recurse_nodes(task, visited):

+nonlocal node_count

+node_count += 1

visited.add(task)

-node_count[0] += 1

-

-children = [

-recurse_nodes(t, visited) for t in task.downstream_list

-if node_count[0] < node_limit or t not in visited]

-

-# D3 tree uses children vs _children to define what is

-# expanded or not. The following block makes it such that

-# repeated nodes are collapsed by default.

-children_key = 'children'

-if task.task_id not in expanded:

-expanded.append(task.task_id)

-elif children:

-children_key = "_children"

-

-def set_duration(tid):

-if (isinstance(tid, dict) and tid.get("state") == State.RUNNING and

-tid["start_date"] is not None):

-d = timezone.utcnow() - timezone.parse(tid["start_date"])

-tid["duration"] = d.total_seconds()

-return tid

-

-return {

+task_id = task.task_id

+

+node = {

'name': task.task_id,

'instances': [

-set_duration(task_instances.get((task.task_id, d))) or {

-'execution_date': d.isoformat(),

-'task_id': task.task_id

-}

-for d in dates],

-children_key: children,

+encode_ti(task_instances.get((task_id, d)))

+for d in dates

+],

'num_dep': len(task.downstream_list),

'operator': task.task_type,

'retries': task.retries,

'owner': task.owner,

-'start_date': task.start_date,

-'end_date': task.end_date,

-'depends_on_past': task.depends_on_past,

'ui_color': task.ui_color,

-'extra_links': task.extra_links,

}

+if task.downstream_list:

+children = [

+recurse_nodes(t, visited) for t in task.downstream_list

+if node_count < node_limit or t not in visited]

+

+# D3 tree uses children vs _children to define what is

+# expanded or not. The following block makes it such that

+# repeated nodes are collapsed by default.

+if task.task_id not in expanded:

+children_key = 'children'

+expanded.add(task.task_id)

+else:

+

[GitHub] [airflow] ashb commented on a change in pull request #7559: [AIRFLOW-6935] Pass the SchedulerJob configuration using the constructor

ashb commented on a change in pull request #7559: [AIRFLOW-6935] Pass the

SchedulerJob configuration using the constructor

URL: https://github.com/apache/airflow/pull/7559#discussion_r385118219

##

File path: airflow/jobs/scheduler_job.py

##

@@ -991,10 +1057,13 @@ def is_alive(self, grace_multiplier=None):

if grace_multiplier is not None:

# Accept the same behaviour as superclass

return super().is_alive(grace_multiplier=grace_multiplier)

-scheduler_health_check_threshold = conf.getint('scheduler', 'scheduler_health_check_threshold')

+# The object can be retrieved from the database, so it does not contain all the attributes.

+self.scheduler_health_check_threshold = conf.getint(

Review comment:

The comment is helpful, but as a result there's no point storing this as an

attribute, a local variable makes more sense.
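A minimal sketch of the local-variable approach suggested here, under stated assumptions: `read_threshold_from_config` is a hypothetical stand-in for the `conf.getint('scheduler', 'scheduler_health_check_threshold')` call in the diff, and `SchedulerJobSketch` is a heavily simplified class, not Airflow's `SchedulerJob`.

```python
from datetime import datetime, timedelta, timezone


def read_threshold_from_config() -> int:
    """Hypothetical stand-in for reading the threshold from configuration."""
    return 30


class SchedulerJobSketch:
    """Hypothetical, heavily simplified job; not Airflow's SchedulerJob."""

    def __init__(self, latest_heartbeat: datetime):
        self.latest_heartbeat = latest_heartbeat

    def is_alive(self) -> bool:
        # Read at call time into a local: an instance loaded from the database
        # never went through a constructor that could have set an attribute.
        threshold = read_threshold_from_config()
        age = (datetime.now(timezone.utc) - self.latest_heartbeat).total_seconds()
        return age < threshold


fresh = SchedulerJobSketch(datetime.now(timezone.utc))
stale = SchedulerJobSketch(datetime.now(timezone.utc) - timedelta(minutes=5))
print(fresh.is_alive(), stale.is_alive())
```

Nothing is stored on `self`, so the method behaves the same whether the object was constructed in code or rehydrated from a row.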


[GitHub] [airflow] ashb commented on a change in pull request #7559: [AIRFLOW-6935] Pass the SchedulerJob configuration using the constructor

ashb commented on a change in pull request #7559: [AIRFLOW-6935] Pass the

SchedulerJob configuration using the constructor

URL: https://github.com/apache/airflow/pull/7559#discussion_r385119442

##

File path: airflow/jobs/scheduler_job.py

##

@@ -991,10 +1057,13 @@ def is_alive(self, grace_multiplier=None):

if grace_multiplier is not None:

# Accept the same behaviour as superclass

return super().is_alive(grace_multiplier=grace_multiplier)

-scheduler_health_check_threshold = conf.getint('scheduler', 'scheduler_health_check_threshold')

+# The object can be retrieved from the database, so it does not contain all the attributes.

+self.scheduler_health_check_threshold = conf.getint(

Review comment:

Which also means we shouldn't accept it as an argument in the constructor --

it won't ever be used/do anything.


[jira] [Commented] (AIRFLOW-6857) Bulk sync DAGs

[ https://issues.apache.org/jira/browse/AIRFLOW-6857?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17046608#comment-17046608 ] ASF subversion and git services commented on AIRFLOW-6857: -- Commit 031b4b73e5ebd656b512628051c21a8e83803b4c in airflow's branch refs/heads/master from Kamil Breguła [ https://gitbox.apache.org/repos/asf?p=airflow.git;h=031b4b7 ] [AIRFLOW-6857] Bulk sync DAGs (#7477) > Bulk sync DAGs > -- > > Key: AIRFLOW-6857 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6857 > Project: Apache Airflow > Issue Type: Bug > Components: scheduler >Affects Versions: 1.10.9 >Reporter: Kamil Bregula >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (AIRFLOW-6857) Bulk sync DAGs

[ https://issues.apache.org/jira/browse/AIRFLOW-6857?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17046607#comment-17046607 ] ASF GitHub Bot commented on AIRFLOW-6857: - mik-laj commented on pull request #7477: [AIRFLOW-6857] Bulk sync DAGs URL: https://github.com/apache/airflow/pull/7477 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Bulk sync DAGs > -- > > Key: AIRFLOW-6857 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6857 > Project: Apache Airflow > Issue Type: Bug > Components: scheduler >Affects Versions: 1.10.9 >Reporter: Kamil Bregula >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [airflow] mik-laj merged pull request #7477: [AIRFLOW-6857] Bulk sync DAGs

mik-laj merged pull request #7477: [AIRFLOW-6857] Bulk sync DAGs URL: https://github.com/apache/airflow/pull/7477

[GitHub] [airflow] abdulbasitds commented on issue #6007: [AIRFLOW-2310] Enable AWS Glue Job Integration

abdulbasitds commented on issue #6007: [AIRFLOW-2310] Enable AWS Glue Job Integration URL: https://github.com/apache/airflow/pull/6007#issuecomment-591959953 @feluelle I deleted 'tests/sensors/test_aws_glue_job_sensor.py'. I'm not sure how to exclude the integration change; you asked me to delete the changes this pull request has made to integration.rst.

[GitHub] [airflow] baolsen commented on a change in pull request #7541: [AIRFLOW-6822] AWS hooks should cache boto3 client

baolsen commented on a change in pull request #7541: [AIRFLOW-6822] AWS hooks should cache boto3 client

URL: https://github.com/apache/airflow/pull/7541#discussion_r38577

##

File path: tests/providers/amazon/aws/operators/test_ecs.py

##

@@ -48,12 +48,10 @@

]

}

-

+# pylint: disable=unused-argument

+@mock.patch('airflow.providers.amazon.aws.operators.ecs.AwsBaseHook')

Review comment:

No luck. I can't mock the hook properly if I move it back to above the setUp, regardless of how I patch it. I'm not sure how it was working before...

[GitHub] [airflow] abdulbasitds commented on a change in pull request #6007: [AIRFLOW-2310] Enable AWS Glue Job Integration

abdulbasitds commented on a change in pull request #6007: [AIRFLOW-2310] Enable AWS Glue Job Integration URL: https://github.com/apache/airflow/pull/6007#discussion_r385110737 ## File path: docs/integration.rst ## @@ -17,7 +17,6 @@ Integration === - Review comment: Thanks. How can I exclude it? There is a 'delete file' option, but will it delete the file from the repo?

[GitHub] [airflow] baolsen commented on a change in pull request #7541: [AIRFLOW-6822] AWS hooks should cache boto3 client

baolsen commented on a change in pull request #7541: [AIRFLOW-6822] AWS hooks should cache boto3 client URL: https://github.com/apache/airflow/pull/7541#discussion_r385110474 ## File path: tests/providers/amazon/aws/operators/test_ecs.py ## @@ -171,7 +166,7 @@ def test_execute_with_failures(self): } ) -def test_wait_end_tasks(self): +def test_wait_end_tasks(self, aws_hook_mock): Review comment: After moving the mock from setUp to above the class, all methods need to have this added (even if unused).
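The behaviour described in this review comment can be reproduced in a minimal, self-contained sketch. The names `FakeHook`, `run_task`, and `TestRunTask` are hypothetical stand-ins invented for illustration and are not part of Airflow or of this pull request; the sketch only assumes standard `unittest.mock` behaviour, where a patch applied as a class decorator wraps every `test*` method and passes the mock as an extra positional argument.

```python
import unittest
from unittest import mock


class FakeHook:
    """Hypothetical stand-in for a hook class (not Airflow's AwsBaseHook)."""

    def get_client(self):
        raise RuntimeError("would contact a real service")


def run_task():
    """Hypothetical code under test: builds a hook and asks it for a client."""
    return FakeHook().get_client()


# A patch used as a class decorator is applied to every method whose name
# starts with "test", and each of those methods receives the mock as an
# additional positional argument, whether or not it uses it.
@mock.patch.object(FakeHook, "get_client")
class TestRunTask(unittest.TestCase):
    def test_uses_mocked_client(self, get_client_mock):
        get_client_mock.return_value = "client"
        self.assertEqual(run_task(), "client")
        get_client_mock.assert_called_once_with()

    # pylint: disable=unused-argument
    def test_mock_unused(self, get_client_mock):
        # The parameter must still be declared; omitting it would raise a
        # TypeError when the decorator passes the mock in.
        self.assertIsNone(None)
```

With a patch started inside `setUp` instead, the test methods would keep their plain `(self)` signature, which is why moving the patch to class level forces the signature change on every test.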

[GitHub] [airflow] feluelle commented on a change in pull request #6007: [AIRFLOW-2310] Enable AWS Glue Job Integration

feluelle commented on a change in pull request #6007: [AIRFLOW-2310] Enable AWS Glue Job Integration URL: https://github.com/apache/airflow/pull/6007#discussion_r385107981 ## File path: docs/integration.rst ## @@ -17,7 +17,6 @@ Integration === - Review comment: Please do not include this change.

[GitHub] [airflow] abdulbasitds commented on issue #6007: [AIRFLOW-2310] Enable AWS Glue Job Integration

abdulbasitds commented on issue #6007: [AIRFLOW-2310] Enable AWS Glue Job Integration URL: https://github.com/apache/airflow/pull/6007#issuecomment-591954863 @feluelle I have made all the changes and rebased. Does it look okay now?

[GitHub] [airflow] zhongjiajie commented on issue #7148: [AIRFLOW-6472] Correct short option in cli

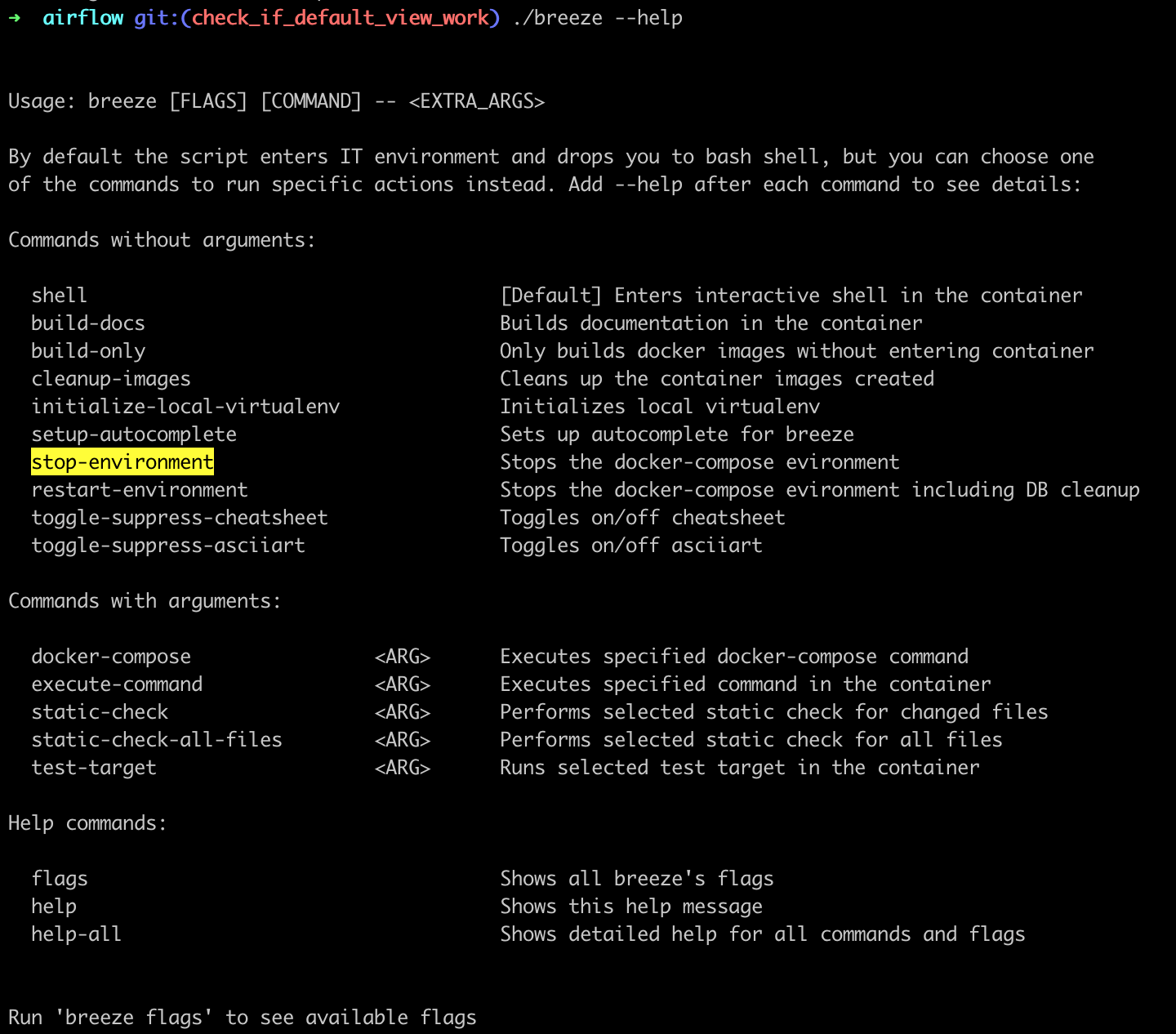

zhongjiajie commented on issue #7148: [AIRFLOW-6472] Correct short option in cli URL: https://github.com/apache/airflow/pull/7148#issuecomment-591951660 I found that our Breeze subcommands are also named in kebab-case style, but the Airflow CLI still uses snake_case style. So, should we change our subcommands? @potiuk @mik-laj

[jira] [Commented] (AIRFLOW-6938) Don't read dag_directory in Scheduler

[ https://issues.apache.org/jira/browse/AIRFLOW-6938?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17046579#comment-17046579 ] ASF subversion and git services commented on AIRFLOW-6938: -- Commit 764aab63de5bb907b088a5bae5955d2869c33aa7 in airflow's branch refs/heads/master from Kamil Breguła [ https://gitbox.apache.org/repos/asf?p=airflow.git;h=764aab6 ] [AIRFLOW-6938] Don't read dag_directory in SchedulerJob (#7562) > Don't read dag_directory in Scheduler > - > > Key: AIRFLOW-6938 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6938 > Project: Apache Airflow > Issue Type: Improvement > Components: scheduler >Affects Versions: 1.10.9 >Reporter: Kamil Bregula >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Commented] (AIRFLOW-6938) Don't read dag_directory in Scheduler

[ https://issues.apache.org/jira/browse/AIRFLOW-6938?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17046577#comment-17046577 ] ASF GitHub Bot commented on AIRFLOW-6938: - mik-laj commented on pull request #7562: [AIRFLOW-6938] Don't read dag_directory in SchedulerJob URL: https://github.com/apache/airflow/pull/7562 > Don't read dag_directory in Scheduler > - > > Key: AIRFLOW-6938 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6938 > Project: Apache Airflow > Issue Type: Improvement > Components: scheduler >Affects Versions: 1.10.9 >Reporter: Kamil Bregula >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [airflow] mik-laj merged pull request #7562: [AIRFLOW-6938] Don't read dag_directory in SchedulerJob

mik-laj merged pull request #7562: [AIRFLOW-6938] Don't read dag_directory in SchedulerJob URL: https://github.com/apache/airflow/pull/7562

[GitHub] [airflow] mik-laj commented on issue #7343: [AIRFLOW-6719] Introduce pyupgrade to enforce latest syntax

mik-laj commented on issue #7343: [AIRFLOW-6719] Introduce pyupgrade to enforce latest syntax URL: https://github.com/apache/airflow/pull/7343#issuecomment-591949011 @zhongjiajie I don't have time to finish this, because I've taken care of the scheduler performance. You want to take over?

[GitHub] [airflow] mik-laj edited a comment on issue #7343: [AIRFLOW-6719] Introduce pyupgrade to enforce latest syntax

mik-laj edited a comment on issue #7343: [AIRFLOW-6719] Introduce pyupgrade to enforce latest syntax URL: https://github.com/apache/airflow/pull/7343#issuecomment-591949011 @zhongjiajie I don't have time to finish this, because I've taken care of the scheduler performance. Would you like to take over?

[jira] [Updated] (AIRFLOW-6947) UTF8mb4 encoding for mysql does not work in Airflow 2.0