[GitHub] [airflow] XD-DENG commented on issue #12744: Difference of extras Airflow 2.0 vs. Airflow 1.10

XD-DENG commented on issue #12744: URL: https://github.com/apache/airflow/issues/12744#issuecomment-737056615 > The following should require explicitly installing them: > > "apache.pig": [], > "apache.sqoop": [], > "dingding": [], > "discord": [], > "openfaas": [], > "opsgenie": [], > "sqlite": [], I agree with @kaxil , **other than `sqlite`**. Personally I think `sqlite` should come together with Airflow core by default, without explicit extra installation, Considering two examples: - Most Linux distributions & MacOS has Sqlite available by default. - Python has `sqlite3` as one of its build-in standard libraries. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] michalslowikowski00 commented on a change in pull request #12710: Enable SparkSqlHook to use supplied connections

michalslowikowski00 commented on a change in pull request #12710: URL: https://github.com/apache/airflow/pull/12710#discussion_r533953467 ## File path: tests/providers/apache/spark/hooks/test_spark_sql.py ## @@ -213,3 +209,29 @@ def test_spark_process_runcmd_and_fail(self, mock_popen): sql, master, params, status ), ) + +def test_resolve_connection_yarn_default_connection(self): +hook = SparkSqlHook(conn_id='spark_default', sql='SELECT 1') Review comment: This can be put into setup() test method, after that you will be have access through `self` to the hook and you will avoid code duplication. Also connection = hook._resolve_connection() can be put in setUp(). ``` def setUp(self): self.hook = SparkSqlHook(conn_id='spark_yarn_cluster', sql='SELECT 1') self.connection = self.hook._resolve_connection() ``` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] michalslowikowski00 commented on a change in pull request #12710: Enable SparkSqlHook to use supplied connections

michalslowikowski00 commented on a change in pull request #12710: URL: https://github.com/apache/airflow/pull/12710#discussion_r533956161 ## File path: tests/providers/apache/spark/hooks/test_spark_sql.py ## @@ -213,3 +209,29 @@ def test_spark_process_runcmd_and_fail(self, mock_popen): sql, master, params, status ), ) + +def test_resolve_connection_yarn_default_connection(self): +hook = SparkSqlHook(conn_id='spark_default', sql='SELECT 1') Review comment: I would go even further and use https://github.com/wolever/parameterized. This is basically the same test case with different data `expected_spark_connection` that can be parametrized thorough @parameterized(). Just saying. :) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] michalslowikowski00 commented on a change in pull request #12710: Enable SparkSqlHook to use supplied connections

michalslowikowski00 commented on a change in pull request #12710: URL: https://github.com/apache/airflow/pull/12710#discussion_r533953467 ## File path: tests/providers/apache/spark/hooks/test_spark_sql.py ## @@ -213,3 +209,29 @@ def test_spark_process_runcmd_and_fail(self, mock_popen): sql, master, params, status ), ) + +def test_resolve_connection_yarn_default_connection(self): +hook = SparkSqlHook(conn_id='spark_default', sql='SELECT 1') Review comment: This can be put into setup() test method, after that you will be have access through `self` to the hook and you will avoid code duplication. ``` def setUp(self): self.hook = SparkSqlHook(conn_id='spark_yarn_cluster', sql='SELECT 1') ``` This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[airflow] branch master updated (da2a7d6 -> ae0e8f4)

This is an automated email from the ASF dual-hosted git repository. xddeng pushed a change to branch master in repository https://gitbox.apache.org/repos/asf/airflow.git. from da2a7d6 Added Headout to INTHEWILD (#12734) add ae0e8f4 Move config item 'worker_precheck' from section [core] to [celery] (#12746) No new revisions were added by this update. Summary of changes: airflow/config_templates/config.yml | 14 +++--- airflow/config_templates/default_airflow.cfg | 6 +++--- airflow/config_templates/default_test.cfg| 2 +- airflow/configuration.py | 1 + airflow/settings.py | 2 +- tests/cli/commands/test_celery_command.py| 4 ++-- 6 files changed, 15 insertions(+), 14 deletions(-)

[GitHub] [airflow] XD-DENG merged pull request #12746: Move config item 'worker_precheck' from section [core] to [celery]

XD-DENG merged pull request #12746: URL: https://github.com/apache/airflow/pull/12746 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] patchandpray commented on issue #12489: Clicking Edit on TaskInstance Causes Crash

patchandpray commented on issue #12489: URL: https://github.com/apache/airflow/issues/12489#issuecomment-737027781 Hi, I think I need some help. I have been able to deduce that the issue arises upon form autogeneration for a taskinstance by flask-appbuilder. When editing a taskinstance. A taskinstance appears to have a one on one relationship with a DagRun and when generating the edit form flask_appbuilder uses `QuerySelectMultipleField.iter_choices()` which gets called with: ``. Since this is a non-iterable object the exception is raised. When using `QuerySelectField.iter_choices()` with this object the form does generate but I am stuck finding a way to force this behavior from airflow code. An option might be to not autogenerate a form and specify a form for taskinstance edit so we can explicitly define which fields to use but I am unsure if this is the correct way to implement a fix for this. Also, how is determined which fields are generated for this form? Furthermore when forcing the generation from flask_appbuilder and generating a form:  when trying to save a modification for a taskinstance I am presented with this error. I want to create a new bug report for this but it might be related to this bug.  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] rootcss commented on pull request #11850: Add Telegram hook and operator

rootcss commented on pull request #11850: URL: https://github.com/apache/airflow/pull/11850#issuecomment-737019905 @mik-laj https://github.com/apache/airflow/pull/12681/files is merged now. I'll try this and see if it works. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ephraimbuddy closed pull request #10962: Fix doc preview error in editor for google operators doc

ephraimbuddy closed pull request #10962: URL: https://github.com/apache/airflow/pull/10962 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ephraimbuddy commented on pull request #10962: Fix doc preview error in editor for google operators doc

ephraimbuddy commented on pull request #10962: URL: https://github.com/apache/airflow/pull/10962#issuecomment-737019603 Sorry, I have to close it. Thanks @ryw This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] blcksrx closed pull request #12498: Impala hook implention

blcksrx closed pull request #12498: URL: https://github.com/apache/airflow/pull/12498 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ryw commented on pull request #10962: Fix doc preview error in editor for google operators doc

ryw commented on pull request #10962: URL: https://github.com/apache/airflow/pull/10962#issuecomment-736995415 @ephraimbuddy what's next step on this? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] jhtimmins commented on issue #12552: ExternalTaskSensor fails with ValueError on state comparison

jhtimmins commented on issue #12552: URL: https://github.com/apache/airflow/issues/12552#issuecomment-736993627 @vikramkoka I'm assigned but have not yet started. Will be starting tomorrow. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (AIRFLOW-6786) Adding KafkaConsumerHook, KafkaProducerHook, and KafkaSensor

[ https://issues.apache.org/jira/browse/AIRFLOW-6786?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17242040#comment-17242040 ] ASF GitHub Bot commented on AIRFLOW-6786: - ryw commented on pull request #10660: URL: https://github.com/apache/airflow/pull/10660#issuecomment-736991032 @dferguson992 curious why you closed this? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org > Adding KafkaConsumerHook, KafkaProducerHook, and KafkaSensor > > > Key: AIRFLOW-6786 > URL: https://issues.apache.org/jira/browse/AIRFLOW-6786 > Project: Apache Airflow > Issue Type: New Feature > Components: contrib, hooks >Affects Versions: 1.10.9 >Reporter: Daniel Ferguson >Assignee: Daniel Ferguson >Priority: Minor > > Add the KafkaProducerHook. > Add the KafkaConsumerHook. > Add the KafkaSensor which listens to messages with a specific topic. > Related Issue: > #1311 (Pre-dates Jira Migration) > Reminder to contributors: > You must add an Apache License header to all new files > Please squash your commits when possible and follow the 7 rules of good Git > commits > I am new to the community, I am not sure the files are at the right place or > missing anything. > The sensor could be used as the first node of a dag where the second node can > be a TriggerDagRunOperator. The messages are polled in a batch and the dag > runs are dynamically generated. > Thanks! > Note, as per denied PR [#1415|https://github.com/apache/airflow/pull/1415], > it is important to mention these integrations are not suitable for > low-latency/high-throughput/streaming. For reference, [#1415 > (comment)|https://github.com/apache/airflow/pull/1415#issuecomment-484429806]. > Co-authored-by: Dan Ferguson > [dferguson...@gmail.com|mailto:dferguson...@gmail.com] > Co-authored-by: YuanfΞi Zhu -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [airflow] ryw commented on pull request #10660: [AIRFLOW-6786] Added Kafka components

ryw commented on pull request #10660: URL: https://github.com/apache/airflow/pull/10660#issuecomment-736991032 @dferguson992 curious why you closed this? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ryw commented on issue #12489: Clicking Edit on TaskInstance Causes Crash

ryw commented on issue #12489: URL: https://github.com/apache/airflow/issues/12489#issuecomment-736989589 ok we'd like this bugfix in for Airflow 2.0rc1 so let us know if you get stuck / need help This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #12472: Allow registering extra links for providers

github-actions[bot] commented on pull request #12472: URL: https://github.com/apache/airflow/pull/12472#issuecomment-736984146 [The Workflow run](https://github.com/apache/airflow/actions/runs/395334613) is cancelling this PR. It has some failed jobs matching ^Pylint$,^Static checks,^Build docs$,^Spell check docs$,^Backport packages$,^Provider packages,^Checks: Helm tests$,^Test OpenAPI*. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #12472: Allow registering extra links for providers

github-actions[bot] commented on pull request #12472: URL: https://github.com/apache/airflow/pull/12472#issuecomment-736983954 [The Workflow run](https://github.com/apache/airflow/actions/runs/395332741) is cancelling this PR. It has some failed jobs matching ^Pylint$,^Static checks,^Build docs$,^Spell check docs$,^Backport packages$,^Provider packages,^Checks: Helm tests$,^Test OpenAPI*. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #12472: Allow registering extra links for providers

github-actions[bot] commented on pull request #12472: URL: https://github.com/apache/airflow/pull/12472#issuecomment-736983895 [The Workflow run](https://github.com/apache/airflow/actions/runs/395332149) is cancelling this PR. It has some failed jobs matching ^Pylint$,^Static checks,^Build docs$,^Spell check docs$,^Backport packages$,^Provider packages,^Checks: Helm tests$,^Test OpenAPI*. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ryw commented on issue #11989: Add Doc page containing list of Alembic DB Migrations

ryw commented on issue #11989: URL: https://github.com/apache/airflow/issues/11989#issuecomment-736983627 great to hear @ernest-kr fyi i do my pr's from a personal fork ryw/airflow but you could also do them from astronomer/airflow This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #12558: Add connection form support to provider discovery

github-actions[bot] commented on pull request #12558: URL: https://github.com/apache/airflow/pull/12558#issuecomment-736983433 [The Workflow run](https://github.com/apache/airflow/actions/runs/395330878) is cancelling this PR. It has some failed jobs matching ^Pylint$,^Static checks,^Build docs$,^Spell check docs$,^Backport packages$,^Provider packages,^Checks: Helm tests$,^Test OpenAPI*. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #12472: Allow registering extra links for providers

github-actions[bot] commented on pull request #12472: URL: https://github.com/apache/airflow/pull/12472#issuecomment-736983441 [The Workflow run](https://github.com/apache/airflow/actions/runs/395330878) is cancelling this PR. It has some failed jobs matching ^Pylint$,^Static checks,^Build docs$,^Spell check docs$,^Backport packages$,^Provider packages,^Checks: Helm tests$,^Test OpenAPI*. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ryw commented on issue #12744: Difference of extras Airflow 2.0 vs. Airflow 1.10

ryw commented on issue #12744: URL: https://github.com/apache/airflow/issues/12744#issuecomment-736981514 i like adding `imap` -- essentially we're saying lower-level protocols are core (ftp, http) so imap fits into that list This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] boring-cyborg[bot] commented on issue #12751: Helm Chart: Provide option to specify loadBalancerIP in webserver service

boring-cyborg[bot] commented on issue #12751: URL: https://github.com/apache/airflow/issues/12751#issuecomment-736979827 Thanks for opening your first issue here! Be sure to follow the issue template! This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] cheunhong opened a new issue #12751: Helm Chart: Provide option to specify loadBalancerIP in webserver service

cheunhong opened a new issue #12751: URL: https://github.com/apache/airflow/issues/12751 **Description** The current service type for `webserver` is defaulted at `ClusterIP`. I am able to change it to `LoadBalancer` type, but the I was not able to specify the static IP. So every time we reinstall the chart, it will change the assigned IP of the loadbalancer being provisioned to us. **Use case / motivation** **Related Issues** This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #12742: Allow using _CMD / _SECRET to set `[webserver] secret_key` config

github-actions[bot] commented on pull request #12742: URL: https://github.com/apache/airflow/pull/12742#issuecomment-736938507 [The Workflow run](https://github.com/apache/airflow/actions/runs/395025224) is cancelling this PR. It has some failed jobs matching ^Pylint$,^Static checks,^Build docs$,^Spell check docs$,^Backport packages$,^Provider packages,^Checks: Helm tests$,^Test OpenAPI*. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

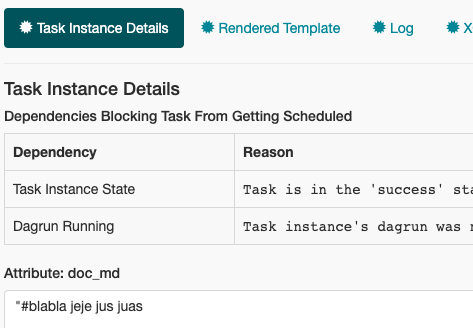

[GitHub] [airflow] JavierLopezT opened a new issue #12750: Task level documentation in other place than Task Details

JavierLopezT opened a new issue #12750: URL: https://github.com/apache/airflow/issues/12750 Right now you can add documentation to a task and it will be seen on the task instance details page  It will we nice to have it here (maybe not mandatorily, but optionally):  This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] vikramkoka commented on issue #12744: Difference of extras Airflow 2.0 vs. Airflow 1.10

vikramkoka commented on issue #12744: URL: https://github.com/apache/airflow/issues/12744#issuecomment-736935563 Absolutely agree that http should be part of core. Strongly in favor of ftp as well being part of core, assuming no additional dependencies. Tempted with imap, but unsure on the dependencies. Nothing else comes close IMHO This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] potiuk commented on pull request #12472: Allow registering extra links for providers

potiuk commented on pull request #12472: URL: https://github.com/apache/airflow/pull/12472#issuecomment-736934261 Shoudl be ready to merge everyone -> all comments applied. I hope it gets green. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] potiuk commented on pull request #12472: Allow registering extra links for providers

potiuk commented on pull request #12472: URL: https://github.com/apache/airflow/pull/12472#issuecomment-736934075 Applied all comments @ashb This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12472: Allow registering extra links for providers

potiuk commented on a change in pull request #12472:

URL: https://github.com/apache/airflow/pull/12472#discussion_r533841635

##

File path: airflow/providers_manager.py

##

@@ -224,6 +226,43 @@ def _add_hook(self, hook_class_name, provider_package) ->

None:

self._hooks_dict[conn_type] = (hook_class_name,

connection_id_attribute_name)

+def _discover_extra_links(self) -> None:

+"""Retrieves all extra links defined in the providers"""

+for provider_package, (_, provider) in self._provider_dict.items():

+if provider.get("extra-links"):

+for extra_link in provider["extra-links"]:

+self.__add_extra_link(extra_link, provider_package)

+

+def __add_extra_link(self, extra_link_class_name, provider_package) ->

None:

+"""

+Adds extra link class name to the list of classes

+:param extra_link_class_name: name of the class to add

+:param provider_package: provider package adding the link

+:return:

+"""

+if provider_package.startswith("apache-airflow"):

+provider_path = provider_package[len("apache-") :].replace("-",

".")

+if not extra_link_class_name.startswith(provider_path):

+log.warning(

+"Sanity check failed when importing '%s' from '%s'

package. It should start with '%s'",

+extra_link_class_name,

+provider_package,

+provider_path,

+)

+return

+try:

+module, class_name = extra_link_class_name.rsplit('.', maxsplit=1)

+getattr(importlib.import_module(module), class_name)

Review comment:

Surely, I wanted to get more of a sanity check and "early" warning in

the logs of Airflow. The way it is done right now is that the class will be

anyhow imported in the "deserialize_operator" method, which I think is 'good

enough" so I am happy to remove it.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12472: Allow registering extra links for providers

potiuk commented on a change in pull request #12472:

URL: https://github.com/apache/airflow/pull/12472#discussion_r533839528

##

File path: airflow/providers_manager.py

##

@@ -68,6 +68,7 @@ def __init__(self):

# Keeps dict of hooks keyed by connection type and value is

# Tuple: connection class, connection_id_attribute_name

self._hooks_dict: Dict[str, Tuple[str, str]] = {}

+self.__extra_link_class_name_set: Set[str] = set()

Review comment:

of course.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12472: Allow registering extra links for providers

potiuk commented on a change in pull request #12472:

URL: https://github.com/apache/airflow/pull/12472#discussion_r533839398

##

File path: airflow/provider.yaml.schema.json

##

@@ -180,6 +180,13 @@

"items": {

"type": "string"

}

+},

+"extra-links": {

+ "type": "array",

+ "description": "Class nme that provide extra link functionality",

Review comment:

Yep.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12472: Allow registering extra links for providers

potiuk commented on a change in pull request #12472:

URL: https://github.com/apache/airflow/pull/12472#discussion_r533839041

##

File path: airflow/cli/cli_parser.py

##

@@ -1171,6 +1171,12 @@ class GroupCommand(NamedTuple):

func=lazy_load_command('airflow.cli.commands.provider_command.provider_get'),

args=(ARG_OUTPUT, ARG_FULL, ARG_COLOR, ARG_PROVIDER_NAME),

),

+ActionCommand(

+name='extra_links',

Review comment:

Right!

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12472: Allow registering extra links for providers

potiuk commented on a change in pull request #12472:

URL: https://github.com/apache/airflow/pull/12472#discussion_r533838822

##

File path: airflow/serialization/serialized_objects.py

##

@@ -53,14 +55,23 @@

log = logging.getLogger(__name__)

FAILED = 'serialization_failed'

-BUILTIN_OPERATOR_EXTRA_LINKS: List[str] = [

-"airflow.providers.google.cloud.operators.bigquery.BigQueryConsoleLink",

-

"airflow.providers.google.cloud.operators.bigquery.BigQueryConsoleIndexableLink",

-"airflow.providers.google.cloud.operators.mlengine.AIPlatformConsoleLink",

-"airflow.providers.qubole.operators.qubole.QDSLink",

+_OPERATOR_EXTRA_LINKS: Set[str] = {

"airflow.operators.dagrun_operator.TriggerDagRunLink",

"airflow.sensors.external_task_sensor.ExternalTaskSensorLink",

-]

+}

+

+cache = lru_cache(maxsize=None)

+

+

+@cache

+def get_operator_extra_links():

+"""

+Returns operator extra links - both the ones that are built in and the

ones that come from

+the providers.

+:return: set of extra links

Review comment:

Right

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12685: Production images on CI are now built from packages

potiuk commented on a change in pull request #12685:

URL: https://github.com/apache/airflow/pull/12685#discussion_r533837169

##

File path: scripts/in_container/entrypoint_ci.sh

##

@@ -98,13 +101,51 @@ if [[ -z ${INSTALL_AIRFLOW_VERSION=} ]]; then

mkdir -p "${AIRFLOW_SOURCES}"/logs/

mkdir -p "${AIRFLOW_SOURCES}"/tmp/

export PYTHONPATH=${AIRFLOW_SOURCES}

+elif [[ ${INSTALL_AIRFLOW_VERSION} == "none" ]]; then

+echo

+echo "Skip installing airflow - only install wheel packages that are

present locally"

+echo

+uninstall_airflow_and_providers

+elif [[ ${INSTALL_AIRFLOW_VERSION} == "wheel" ]]; then

+echo

+echo "Install airflow from wheel package with [all] extras but

uninstalling providers."

+echo

+uninstall_airflow_and_providers

+install_airflow_from_wheel "[all]"

+uninstall_providers

else

-# Installs released airflow version without adding more extras

-install_released_airflow_version "" "${INSTALL_AIRFLOW_VERSION}"

+echo

+echo "Install airflow from PyPI including [all] extras"

+echo

+install_released_airflow_version "${INSTALL_AIRFLOW_VERSION}" "[all]"

fi

-

-if [[ ${INSTALL_WHEELS=} == "true" ]]; then

- pip install /dist/*.whl || true

+if [[ ${INSTALL_PACKAGES_FROM_DIST=} == "true" ]]; then

+echo

+echo "Install all packages from dist folder"

+if [[ ${INSTALL_AIRFLOW_VERSION} == "wheel" ]]; then

+echo "(except apache-airflow)"

+fi

Review comment:

I looked at it - and the way I implemented it is rather easy to adjust -

I could run preparing packages and build images jobs in matrix strategy witth

PACKAGE_FORMAT ["wheels", "sdist" ] - I could get it done rather quickly, but I

am still not sure if it gives us anything more than installing .whl - > if you

can think of a reason why this might be useful, I am happy to add it.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12685: Production images on CI are now built from packages

potiuk commented on a change in pull request #12685:

URL: https://github.com/apache/airflow/pull/12685#discussion_r533836451

##

File path: breeze

##

@@ -2211,6 +2259,12 @@ function breeze::flag_local_file_mounting() {

-l, --skip-mounting-local-sources

Skips mounting local volume with sources - you get exactly what is in

the

docker image rather than your current local sources of Airflow.

+

+--skip-mounting-files

Review comment:

Removed.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12685: Production images on CI are now built from packages

potiuk commented on a change in pull request #12685:

URL: https://github.com/apache/airflow/pull/12685#discussion_r533834106

##

File path: breeze

##

@@ -2211,6 +2259,12 @@ function breeze::flag_local_file_mounting() {

-l, --skip-mounting-local-sources

Skips mounting local volume with sources - you get exactly what is in

the

docker image rather than your current local sources of Airflow.

+

+--skip-mounting-files

Review comment:

Ah. I see - in the snipped from GH I saw the sources option displayed in

the first line so I thought you were commenting on that.

There is no particular use case for that I can think of . It was just option

I realized I missed when I was moving /dist to the right docker compose file,

so I just added it.

But now that you mentioned it, indeed it can be removed - I checked that we

never switch it off in the CI anyway. So yeah. I am happy to remove it together

with the MOUNT_FILES env variable.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] Nick-0723 commented on a change in pull request #12740: Fix the exception that the port is empty when using db shell

Nick-0723 commented on a change in pull request #12740:

URL: https://github.com/apache/airflow/pull/12740#discussion_r533833066

##

File path: airflow/cli/commands/db_command.py

##

@@ -67,7 +67,7 @@ def shell(args):

host = {url.host}

user = {url.username}

password = {url.password or ""}

-port = {url.port or ""}

Review comment:

Thanks for your guidance, I should think more comprehensively

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #12704: Refactor list rendering in commands

kaxil commented on a change in pull request #12704:

URL: https://github.com/apache/airflow/pull/12704#discussion_r533827933

##

File path: tests/cli/commands/test_task_command.py

##

@@ -55,6 +55,7 @@ def reset(dag_id):

runs.delete()

+# TODO: Check if tests needs side effects - locally there's missing DAG

Review comment:

Weird:

```

❯ ./breeze --python 3.6 --backend postgres --db-reset

===

Checking integrations and backends

===

PostgreSQL: OK.

---

---

Resetting the DB

DB: postgresql+psycopg2://postgres:***@postgres/airflow

[2020-12-02 01:05:00,245] {db.py:619} INFO - Dropping tables that exist

[2020-12-02 01:05:00,553] {migration.py:155} INFO - Context impl

PostgresqlImpl.

[2020-12-02 01:05:00,554] {migration.py:162} INFO - Will assume

transactional DDL.

[2020-12-02 01:05:00,968] {db.py:609} INFO - Creating tables

INFO [alembic.runtime.migration] Context impl PostgresqlImpl.

INFO [alembic.runtime.migration] Will assume transactional DDL.

INFO [alembic.runtime.migration] Running upgrade -> e3a246e0dc1, current

schema

INFO [alembic.runtime.migration] Running upgrade e3a246e0dc1 ->

1507a7289a2f, create is_encrypted

INFO [alembic.runtime.migration] Running upgrade 1507a7289a2f ->

13eb55f81627, maintain history for compatibility with earlier migrations

INFO [alembic.runtime.migration] Running upgrade 13eb55f81627 ->

338e90f54d61, More logging into task_instance

INFO [alembic.runtime.migration] Running upgrade 338e90f54d61 ->

52d714495f0, job_id indices

INFO [alembic.runtime.migration] Running upgrade 52d714495f0 ->

502898887f84, Adding extra to Log

INFO [alembic.runtime.migration] Running upgrade 502898887f84 ->

1b38cef5b76e, add dagrun

INFO [alembic.runtime.migration] Running upgrade 1b38cef5b76e ->

2e541a1dcfed, task_duration

INFO [alembic.runtime.migration] Running upgrade 2e541a1dcfed ->

40e67319e3a9, dagrun_config

INFO [alembic.runtime.migration] Running upgrade 40e67319e3a9 ->

561833c1c74b, add password column to user

INFO [alembic.runtime.migration] Running upgrade 561833c1c74b ->

4446e08588, dagrun start end

INFO [alembic.runtime.migration] Running upgrade 4446e08588 ->

bbc73705a13e, Add notification_sent column to sla_miss

INFO [alembic.runtime.migration] Running upgrade bbc73705a13e ->

bba5a7cfc896, Add a column to track the encryption state of the 'Extra' field

in connection

INFO [alembic.runtime.migration] Running upgrade bba5a7cfc896 ->

1968acfc09e3, add is_encrypted column to variable table

INFO [alembic.runtime.migration] Running upgrade 1968acfc09e3 ->

2e82aab8ef20, rename user table

INFO [alembic.runtime.migration] Running upgrade 2e82aab8ef20 ->

211e584da130, add TI state index

INFO [alembic.runtime.migration] Running upgrade 211e584da130 ->

64de9cddf6c9, add task fails journal table

INFO [alembic.runtime.migration] Running upgrade 64de9cddf6c9 ->

f2ca10b85618, add dag_stats table

INFO [alembic.runtime.migration] Running upgrade f2ca10b85618 ->

4addfa1236f1, Add fractional seconds to mysql tables

INFO [alembic.runtime.migration] Running upgrade 4addfa1236f1 ->

8504051e801b, xcom dag task indices

INFO [alembic.runtime.migration] Running upgrade 8504051e801b ->

5e7d17757c7a, add pid field to TaskInstance

INFO [alembic.runtime.migration] Running upgrade 5e7d17757c7a ->

127d2bf2dfa7, Add dag_id/state index on dag_run table

INFO [alembic.runtime.migration] Running upgrade 127d2bf2dfa7 ->

cc1e65623dc7, add max tries column to task instance

INFO [alembic.runtime.migration] Running upgrade cc1e65623dc7 ->

bdaa763e6c56, Make xcom value column a large binary

INFO [alembic.runtime.migration] Running upgrade bdaa763e6c56 ->

947454bf1dff, add ti job_id index

INFO [alembic.runtime.migration] Running upgrade 947454bf1dff ->

d2ae31099d61, Increase text size for MySQL (not relevant for other DBs' text

types)

INFO [alembic.runtime.migration] Running upgrade d2ae31099d61 ->

0e2a74e0fc9f, Add time zone awareness

INFO [alembic.runtime.migration] Running upgrade d2ae31099d61 ->

33ae817a1ff4, kubernetes_resource_checkpointing

INFO [alembic.runtime.migration] Running upgrade 33ae817a1ff4 ->

27c6a30d7c24, kubernetes_resource_checkpointing

INFO [alembic.runtime.migration] Running upgrade 27c6a30d7c24 ->

86770d1215c0, add kubernetes scheduler uniqueness

INFO [alembic.runtime.migration] Running upgrade 86770d1215c0, 0e2a74e0fc9f

-> 05f30312d566, merge heads

INFO

[GitHub] [airflow] kaxil commented on a change in pull request #12704: Refactor list rendering in commands

kaxil commented on a change in pull request #12704: URL: https://github.com/apache/airflow/pull/12704#discussion_r533827933 ## File path: tests/cli/commands/test_task_command.py ## @@ -55,6 +55,7 @@ def reset(dag_id): runs.delete() +# TODO: Check if tests needs side effects - locally there's missing DAG Review comment: Weird: ``` ❯ ./breeze --python 3.6 --backend postgres --db-reset root@34129b165f1a:/opt/airflow# pytest tests/cli/commands/test_task_command.py::TestCliTasks test session starts = platform linux -- Python 3.6.12, pytest-6.1.2, py-1.9.0, pluggy-0.13.1 -- /usr/local/bin/python cachedir: .pytest_cache rootdir: /opt/airflow, configfile: pytest.ini plugins: forked-1.3.0, timeouts-1.2.1, cov-2.10.1, celery-4.4.7, flaky-3.7.0, xdist-2.1.0, instafail-0.4.2, requests-mock-1.8.0, rerunfailures-9.1.1 setup timeout: 0.0s, execution timeout: 0.0s, teardown timeout: 0.0s collected 17 items tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_list_tasks PASSED [ 5%] tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run PASSED [ 11%] tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run_invalid_raw_option_0__ignore_all_dependencies PASSED [ 17%] tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run_invalid_raw_option_1__ignore_depends_on_past PASSED [ 23%] tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run_invalid_raw_option_2__ignore_dependencies PASSED [ 29%] tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run_invalid_raw_option_3__force PASSED [ 35%] tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run_mutually_exclusive PASSED [ 41%] tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_test PASSED [ 47%] tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_test_with_env_vars PASSED [ 52%] tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_test_with_params PASSED [ 58%] tests/cli/commands/test_task_command.py::TestCliTasks::test_local_run SKIPPED [ 64%] tests/cli/commands/test_task_command.py::TestCliTasks::test_parentdag_downstream_clear PASSED [ 70%] tests/cli/commands/test_task_command.py::TestCliTasks::test_run_naive_taskinstance PASSED [ 76%] tests/cli/commands/test_task_command.py::TestCliTasks::test_subdag_clear PASSED [ 82%] tests/cli/commands/test_task_command.py::TestCliTasks::test_task_state PASSED

[GitHub] [airflow] vikramkoka commented on issue #12552: ExternalTaskSensor fails with ValueError on state comparison

vikramkoka commented on issue #12552: URL: https://github.com/apache/airflow/issues/12552#issuecomment-736911990 @jhtimmins I think you are working on this, correct? This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] danielenricocahall opened a new pull request #12749: Order broken DAG messages in UI

danielenricocahall opened a new pull request #12749: URL: https://github.com/apache/airflow/pull/12749 In case of existing issue, reference it using one of the following: closes: https://github.com/apache/airflow/issues/9669 Minor update to ensure that Broken DAG messages are ordered when displayed in the UI. Since the ordering doesn't really matter, it just has to be consistent, I chose to order by the `id` column. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] potiuk commented on issue #12748: Code Coverage is Broken

potiuk commented on issue #12748: URL: https://github.com/apache/airflow/issues/12748#issuecomment-736909534 Oh yeah. nobody noticed for 6 months ;) . I think It actually works but in a weird way. I will look at that after we get RC out unless someone does it first. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] kaxil commented on issue #12744: Difference of extras Airflow 2.0 vs. Airflow 1.10

kaxil commented on issue #12744: URL: https://github.com/apache/airflow/issues/12744#issuecomment-736894181 The following should require explicitly installing them: "apache.pig": [], "apache.sqoop": [], "dingding": [], "discord": [], "openfaas": [], "opsgenie": [], "sqlite": [], This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] turbaszek commented on a change in pull request #12704: Refactor list rendering in commands

turbaszek commented on a change in pull request #12704: URL: https://github.com/apache/airflow/pull/12704#discussion_r533799409 ## File path: tests/cli/commands/test_task_command.py ## @@ -55,6 +55,7 @@ def reset(dag_id): runs.delete() +# TODO: Check if tests needs side effects - locally there's missing DAG Review comment: > I can test that and fix it if that is the case in a separate PR Thanks for helping! The problems I observed: ``` root@01658a8a09d3:/opt/airflow# pytest -s tests/cli/commands/test_task_command.py ... = short test summary info == SKIPPED [1] tests/conftest.py:313: The test is skipped because it has quarantined marker. And --include-quarantined flag is passed to pytest. FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_list_tasks - airflow.exceptions.AirflowException: dag_id could not be found: example_bash_operator. Either the dag did not exist or it fai... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run - airflow.exceptions.AirflowException: dag_id could not be found: example_bash_operator. Either the dag did not exist or it failed to ... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run_invalid_raw_option_0__ignore_all_dependencies - AssertionError: "Option --raw does not work with some of the other options on this com... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run_invalid_raw_option_1__ignore_depends_on_past - AssertionError: "Option --raw does not work with some of the other options on this comm... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run_invalid_raw_option_2__ignore_dependencies - AssertionError: "Option --raw does not work with some of the other options on this command... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run_invalid_raw_option_3__force - AssertionError: "Option --raw does not work with some of the other options on this command." does not ma... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_run_mutually_exclusive - AssertionError: "Option --raw and --local are mutually exclusive." does not match "dag_id could not be found: exa... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_test - airflow.exceptions.AirflowException: dag_id could not be found: example_bash_operator. Either the dag did not exist or it failed to... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_test_with_env_vars - airflow.exceptions.AirflowException: dag_id could not be found: example_passing_params_via_test_command. Either the d... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_cli_test_with_params - airflow.exceptions.AirflowException: dag_id could not be found: example_passing_params_via_test_command. Either the dag... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_parentdag_downstream_clear - airflow.exceptions.AirflowException: dag_id could not be found: example_subdag_operator.section-1. Either the dag... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_subdag_clear - airflow.exceptions.AirflowException: dag_id could not be found: example_subdag_operator. Either the dag did not exist or it fai... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_task_state - airflow.exceptions.AirflowException: dag_id could not be found: example_bash_operator. Either the dag did not exist or it failed ... FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_task_states_for_dag_run - KeyError: 'example_python_operator' FAILED tests/cli/commands/test_task_command.py::TestCliTasks::test_test - airflow.exceptions.AirflowException: dag_id could not be found: example_python_operator. Either the dag did not exist or it failed to p... FAILED tests/cli/commands/test_task_command.py::TestLogsfromTaskRunCommand::test_logging_with_run_task - AssertionError: 2 != 1 === 16 failed, 6 passed, 1 skipped, 1 warning in 41.37s ``` This is what I get using brezee and current main branch. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] kaxil commented on issue #12744: Difference of extras Airflow 2.0 vs. Airflow 1.10

kaxil commented on issue #12744: URL: https://github.com/apache/airflow/issues/12744#issuecomment-736893808 http (& even ftp) does seem like they should be part of core. Atleast for HTTP it uses all the internal hooks or requirements that are part of Airflow core's requirement too. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] kaxil opened a new issue #12748: Code Coverage is Broken

kaxil opened a new issue #12748: URL: https://github.com/apache/airflow/issues/12748 https://codecov.io/github/apache/airflow?branch=master CodeCov code-coverage is broken on Master. It wasn't great but still useful to check which sections needed lacks tests. cc @potiuk This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #12742: Allow using _CMD / _SECRET to set `[webserver] secret_key` config

kaxil commented on a change in pull request #12742:

URL: https://github.com/apache/airflow/pull/12742#discussion_r533796882

##

File path: tests/core/test_configuration.py

##

@@ -557,6 +557,23 @@ def test_command_from_env(self):

# the environment variable's echo command

self.assertEqual(test_cmdenv_conf.get('testcmdenv',

'notacommand'), 'OK')

+def test_sensitive_config_values(self):

Review comment:

:D Maybe a badly chosen example my part or I didn't convey it properly

in that case. Let's agree to dis-agree on this one.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #12742: Allow using _CMD / _SECRET to set `[webserver] secret_key` config

kaxil commented on a change in pull request #12742:

URL: https://github.com/apache/airflow/pull/12742#discussion_r533796882

##

File path: tests/core/test_configuration.py

##

@@ -557,6 +557,23 @@ def test_command_from_env(self):

# the environment variable's echo command

self.assertEqual(test_cmdenv_conf.get('testcmdenv',

'notacommand'), 'OK')

+def test_sensitive_config_values(self):

Review comment:

:D Maybe a badly chosen example in that case. Let's agree to dis-agree

on this one.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] turbaszek commented on a change in pull request #12742: Allow using _CMD / _SECRET to set `[webserver] secret_key` config

turbaszek commented on a change in pull request #12742:

URL: https://github.com/apache/airflow/pull/12742#discussion_r533796183

##

File path: tests/core/test_configuration.py

##

@@ -557,6 +557,23 @@ def test_command_from_env(self):

# the environment variable's echo command

self.assertEqual(test_cmdenv_conf.get('testcmdenv',

'notacommand'), 'OK')

+def test_sensitive_config_values(self):

Review comment:

> This is what I meant:

>

> ```python

> assert DataProcSparkOperator.template_fields == ['arguments', 'job_name',

'cluster_name', 'region', 'dataproc_jars', 'dataproc_properties']

> ```

On this one I must agree with Kamil. This test is useless. This test will

work even if someone renames `self.job_name` to `self.magic_dataproc_job_name`.

The proper test would be like:

```py

assert all(t in op.__dict__ for t in op.template_fileds)

```

the problem if I'm not mistaken arises when there's difference between

`template_fields` and operator attributes.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #12704: Refactor list rendering in commands

kaxil commented on a change in pull request #12704: URL: https://github.com/apache/airflow/pull/12704#discussion_r533795846 ## File path: tests/cli/commands/test_task_command.py ## @@ -55,6 +55,7 @@ def reset(dag_id): runs.delete() +# TODO: Check if tests needs side effects - locally there's missing DAG Review comment: I can test that and fix it if that is the case in a separate PR This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] turbaszek commented on a change in pull request #12704: Refactor list rendering in commands

turbaszek commented on a change in pull request #12704: URL: https://github.com/apache/airflow/pull/12704#discussion_r533790668 ## File path: tests/cli/commands/test_dag_command.py ## @@ -47,6 +47,7 @@ ) +# TODO: Check if tests needs side effects - locally there's missing DAG Review comment: See https://github.com/apache/airflow/pull/12704#discussion_r533789920 This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] turbaszek commented on a change in pull request #12704: Refactor list rendering in commands

turbaszek commented on a change in pull request #12704: URL: https://github.com/apache/airflow/pull/12704#discussion_r533789920 ## File path: tests/cli/commands/test_task_command.py ## @@ -55,6 +55,7 @@ def reset(dag_id): runs.delete() +# TODO: Check if tests needs side effects - locally there's missing DAG Review comment: That's the problem - they don't work locally when triggered without whole test suite. I plan to create an issue once this get merged so I can reference those comments. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] turbaszek commented on a change in pull request #12704: Refactor list rendering in commands

turbaszek commented on a change in pull request #12704: URL: https://github.com/apache/airflow/pull/12704#discussion_r533789920 ## File path: tests/cli/commands/test_task_command.py ## @@ -55,6 +55,7 @@ def reset(dag_id): runs.delete() +# TODO: Check if tests needs side effects - locally there's missing DAG Review comment: That's the problem - they don't work locally when triggered without whole test suite This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #12743: Create HostnameCallableRule to ease upgrade to Airflow 2.0

github-actions[bot] commented on pull request #12743: URL: https://github.com/apache/airflow/pull/12743#issuecomment-736875673 The PR most likely needs to run full matrix of tests because it modifies parts of the core of Airflow. However, committers might decide to merge it quickly and take the risk. If they don't merge it quickly - please rebase it to the latest master at your convenience, or amend the last commit of the PR, and push it with --force-with-lease. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #12747: Ensure webserver isn't running with old config

github-actions[bot] commented on pull request #12747: URL: https://github.com/apache/airflow/pull/12747#issuecomment-736873311 The PR is likely OK to be merged with just subset of tests for default Python and Database versions without running the full matrix of tests, because it does not modify the core of Airflow. If the committers decide that the full tests matrix is needed, they will add the label 'full tests needed'. Then you should rebase to the latest master or amend the last commit of the PR, and push it with --force-with-lease. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #12745: Optimize subclasses of DummyOperator for Scheduling

github-actions[bot] commented on pull request #12745: URL: https://github.com/apache/airflow/pull/12745#issuecomment-736871498 The PR most likely needs to run full matrix of tests because it modifies parts of the core of Airflow. However, committers might decide to merge it quickly and take the risk. If they don't merge it quickly - please rebase it to the latest master at your convenience, or amend the last commit of the PR, and push it with --force-with-lease. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] ashb commented on a change in pull request #12685: Production images on CI are now built from packages

ashb commented on a change in pull request #12685:

URL: https://github.com/apache/airflow/pull/12685#discussion_r533774989

##

File path: breeze

##

@@ -2211,6 +2259,12 @@ function breeze::flag_local_file_mounting() {

-l, --skip-mounting-local-sources

Skips mounting local volume with sources - you get exactly what is in

the

docker image rather than your current local sources of Airflow.

+

+--skip-mounting-files

Review comment:

This is not sources though - it's controlling if `/files` and `/dist`

are mounted. They seems safe to do always

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] ashb commented on a change in pull request #12685: Production images on CI are now built from packages

ashb commented on a change in pull request #12685:

URL: https://github.com/apache/airflow/pull/12685#discussion_r533774397

##

File path: scripts/in_container/entrypoint_ci.sh

##

@@ -98,13 +101,51 @@ if [[ -z ${INSTALL_AIRFLOW_VERSION=} ]]; then

mkdir -p "${AIRFLOW_SOURCES}"/logs/

mkdir -p "${AIRFLOW_SOURCES}"/tmp/

export PYTHONPATH=${AIRFLOW_SOURCES}

+elif [[ ${INSTALL_AIRFLOW_VERSION} == "none" ]]; then

+echo

+echo "Skip installing airflow - only install wheel packages that are

present locally"

+echo

+uninstall_airflow_and_providers

+elif [[ ${INSTALL_AIRFLOW_VERSION} == "wheel" ]]; then

+echo

+echo "Install airflow from wheel package with [all] extras but

uninstalling providers."

+echo

+uninstall_airflow_and_providers

+install_airflow_from_wheel "[all]"

+uninstall_providers

else

-# Installs released airflow version without adding more extras

-install_released_airflow_version "" "${INSTALL_AIRFLOW_VERSION}"

+echo

+echo "Install airflow from PyPI including [all] extras"

+echo

+install_released_airflow_version "${INSTALL_AIRFLOW_VERSION}" "[all]"

fi

-

-if [[ ${INSTALL_WHEELS=} == "true" ]]; then

- pip install /dist/*.whl || true

+if [[ ${INSTALL_PACKAGES_FROM_DIST=} == "true" ]]; then

+echo

+echo "Install all packages from dist folder"

+if [[ ${INSTALL_AIRFLOW_VERSION} == "wheel" ]]; then

+echo "(except apache-airflow)"

+fi

Review comment:

Haven't got an opinion (yet, can form one if needed) - just wondering

why this was done

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12685: Production images on CI are now built from packages

potiuk commented on a change in pull request #12685:

URL: https://github.com/apache/airflow/pull/12685#discussion_r533773125

##

File path: scripts/in_container/entrypoint_ci.sh

##

@@ -98,13 +101,51 @@ if [[ -z ${INSTALL_AIRFLOW_VERSION=} ]]; then

mkdir -p "${AIRFLOW_SOURCES}"/logs/

mkdir -p "${AIRFLOW_SOURCES}"/tmp/

export PYTHONPATH=${AIRFLOW_SOURCES}

+elif [[ ${INSTALL_AIRFLOW_VERSION} == "none" ]]; then

+echo

+echo "Skip installing airflow - only install wheel packages that are

present locally"

+echo

+uninstall_airflow_and_providers

+elif [[ ${INSTALL_AIRFLOW_VERSION} == "wheel" ]]; then

+echo

+echo "Install airflow from wheel package with [all] extras but

uninstalling providers."

+echo

+uninstall_airflow_and_providers

+install_airflow_from_wheel "[all]"

+uninstall_providers

else

-# Installs released airflow version without adding more extras

-install_released_airflow_version "" "${INSTALL_AIRFLOW_VERSION}"

+echo

+echo "Install airflow from PyPI including [all] extras"

+echo

+install_released_airflow_version "${INSTALL_AIRFLOW_VERSION}" "[all]"

fi

-

-if [[ ${INSTALL_WHEELS=} == "true" ]]; then

- pip install /dist/*.whl || true

+if [[ ${INSTALL_PACKAGES_FROM_DIST=} == "true" ]]; then

+echo

+echo "Install all packages from dist folder"

+if [[ ${INSTALL_AIRFLOW_VERSION} == "wheel" ]]; then

+echo "(except apache-airflow)"

+fi

Review comment:

BTW. I think we'd loose 4/5 times more than from optimising the extra

step in build workflow. to be honest if we install .sdist. Do you think it's

worth it ?

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12685: Production images on CI are now built from packages

potiuk commented on a change in pull request #12685:

URL: https://github.com/apache/airflow/pull/12685#discussion_r533772296

##

File path: .github/workflows/build-images-workflow-run.yml

##

@@ -336,7 +336,8 @@ jobs:

if: steps.defaults.outputs.proceed == 'true'

- name: "Build CI images ${{ matrix.python-version }}:${{

github.event.workflow_run.id }}"

run: ./scripts/ci/images/ci_prepare_ci_image_on_ci.sh

-if: matrix.image-type == 'CI' && steps.defaults.outputs.proceed ==

'true'

+# locally built CI image is needed to prepare packages for PROD image

build

Review comment:

4 minutes for prod image builds only. For most builds it is 4 minutes

for 1 job because we only prepare default image. We need it anyway, because we

need consistent environment in which we need to prepare all packages. We could

potentially optimise in the future and make a parallel environment for that (we

would have to see, fi this is faster or slower). We can also wait with

starting production images until CI image is ready and pull it - which will be

1 minute overhead, For now this is the best way if we want to make it before

2.0. There are a few ways we can optimise it. But i want to have ti in place

before 2.0 is out.

That was a deliberate decision.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #11350: Allow execution of multiple sql statements in SnowflakeHook

kaxil commented on a change in pull request #11350: URL: https://github.com/apache/airflow/pull/11350#discussion_r533770600 ## File path: airflow/providers/snowflake/hooks/snowflake.py ## @@ -142,3 +141,18 @@ def set_autocommit(self, conn, autocommit: Any) -> None: def get_autocommit(self, conn): return getattr(conn, 'autocommit_mode', False) + +def run(self, sql, autocommit=False, parameters=None): +""" +Snowflake-connector doesn't allow natively the execution of multiple SQL statements in the same +call. So for allowing to pass files or strings with several queries this method is coded, +that relies on run from DBApiHook +""" +if isinstance(sql, str): +with closing(self.get_conn()) as conn: +if self.supports_autocommit: +self.set_autocommit(conn, autocommit) + +conn.execute_string(sql, parameters) +else: +super().run(sql, autocommit, parameters) Review comment: Can you add a test for this @JavierLopezT This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12685: Production images on CI are now built from packages

potiuk commented on a change in pull request #12685:

URL: https://github.com/apache/airflow/pull/12685#discussion_r533769785

##

File path: breeze

##

@@ -2211,6 +2259,12 @@ function breeze::flag_local_file_mounting() {

-l, --skip-mounting-local-sources

Skips mounting local volume with sources - you get exactly what is in

the

docker image rather than your current local sources of Airflow.

+

+--skip-mounting-files

Review comment:

We need it badly. The problem is that when you install from wheels AND

have sources mounted, python interpreter behaves differently in python 3 is to

look for airflow in the current working directory. so if you mount sources AND

have packages installed, behaviour depends on which directory is your cwd. This

is bad and confusing.

On the other hand there are cases where you do need the sources - even if

you install the packages, for example when you want to test setup.py behaviour

with installed dependencies. It helped me a lot with my dependency work. So we

need that option.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] kaxil commented on pull request #11631: Show Celery stats in webui

kaxil commented on pull request #11631: URL: https://github.com/apache/airflow/pull/11631#issuecomment-736865155 Yeah, let's only spend time on it after other high-priority items are done. And once those are done, I have no problems merging this in :) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #12685: Production images on CI are now built from packages

potiuk commented on a change in pull request #12685:

URL: https://github.com/apache/airflow/pull/12685#discussion_r533767494

##

File path: scripts/in_container/entrypoint_ci.sh

##

@@ -98,13 +101,51 @@ if [[ -z ${INSTALL_AIRFLOW_VERSION=} ]]; then

mkdir -p "${AIRFLOW_SOURCES}"/logs/

mkdir -p "${AIRFLOW_SOURCES}"/tmp/

export PYTHONPATH=${AIRFLOW_SOURCES}

+elif [[ ${INSTALL_AIRFLOW_VERSION} == "none" ]]; then

+echo

+echo "Skip installing airflow - only install wheel packages that are

present locally"

+echo

+uninstall_airflow_and_providers

+elif [[ ${INSTALL_AIRFLOW_VERSION} == "wheel" ]]; then

+echo

+echo "Install airflow from wheel package with [all] extras but

uninstalling providers."

+echo

+uninstall_airflow_and_providers

+install_airflow_from_wheel "[all]"

+uninstall_providers

else

-# Installs released airflow version without adding more extras

-install_released_airflow_version "" "${INSTALL_AIRFLOW_VERSION}"

+echo

+echo "Install airflow from PyPI including [all] extras"

+echo

+install_released_airflow_version "${INSTALL_AIRFLOW_VERSION}" "[all]"

fi

-

-if [[ ${INSTALL_WHEELS=} == "true" ]]; then

- pip install /dist/*.whl || true

+if [[ ${INSTALL_PACKAGES_FROM_DIST=} == "true" ]]; then

+echo

+echo "Install all packages from dist folder"

+if [[ ${INSTALL_AIRFLOW_VERSION} == "wheel" ]]; then

+echo "(except apache-airflow)"

+fi

Review comment:

Because it takes much longer. That's the only reason. From observations,

it takes usually 2-4 times longer to install an sdist package than .whl, And

this test already runs for quite a few minutes, so I wanted to reduce the

strain on CI

Do you think @ashb we should? Do you expect any problems with sdist

comparing to wheel? I can easily change it to install from sdist additionally

to wheels, but it will make our tests quite a bit longer, so unless there is a

good reason we suspect something wrong we should not do it.

The only reason I am installing sdist for providers is that the setup.py are

generated there and they were wrong initially, but i was considering to drop

that as well, as I do not expect any more problems there - sdist should behave

same as .wh if setup.py is there

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [airflow] potiuk commented on pull request #12660: Add upgrade check rule for unrecognized arguments to Operators

potiuk commented on pull request #12660: URL: https://github.com/apache/airflow/pull/12660#issuecomment-736860313 > Weird, passed for me locally too As discussed here: https://apache-airflow.slack.com/archives/C0146STM600/p1606855518349800 - I belive this might side-effect from another test using the same "test" dag_id. We have a few of those and there are a number of tests that are likely leaving some remnants in the DB. My guess would be, this is the case and cleaning in setUp/tearDown might solve the issue. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] potiuk commented on issue #11549: Operator for prometheus API interaction

potiuk commented on issue #11549: URL: https://github.com/apache/airflow/issues/11549#issuecomment-736858766 I think we need to discuss this in the devlist. What is the future of Airflow in terms of new "providers" - whether we should incorporate more providers in Airflow after 2.0 and support them by community (and possibly grow it by accepting more committers from different areas) or whether we should slim it down and not accept wider contributions). This has never been discussed openly - and while I feel there are some opinions there, there, they are just that - opinions, until we make it a point and discuss in the community and make decisions. I do not know personally what is my opinion at that because it is exactly this - it has not been discussed, neither pros/cons nor actual approach here has ever been discussed in the devlist as far as I can remember. @ashb -> I think it would be great if you start this discussion at the devlist, before we lightly discard such ideas whether new providers will be added in the community or as 3rd-party implementations ? I think, whatever decision will be made, it should be made after raising the issue and public discussion at the devlist. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] kaxil commented on pull request #12660: Add upgrade check rule for unrecognized arguments to Operators

kaxil commented on pull request #12660: URL: https://github.com/apache/airflow/pull/12660#issuecomment-736855218 Weird, passed for me locally too This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] kishvanchee commented on pull request #11293: Move dummy_operator.py to dummy.py (#11178)

kishvanchee commented on pull request #11293: URL: https://github.com/apache/airflow/pull/11293#issuecomment-736850672 @ashb Yes sure, I will do this tomorrow. Apologies for the delay. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #12746: Move config item 'worker_precheck' from section [core] to [celery]

github-actions[bot] commented on pull request #12746: URL: https://github.com/apache/airflow/pull/12746#issuecomment-736849519 The PR most likely needs to run full matrix of tests because it modifies parts of the core of Airflow. However, committers might decide to merge it quickly and take the risk. If they don't merge it quickly - please rebase it to the latest master at your convenience, or amend the last commit of the PR, and push it with --force-with-lease. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #12704: Refactor list rendering in commands

kaxil commented on a change in pull request #12704: URL: https://github.com/apache/airflow/pull/12704#discussion_r533748403 ## File path: tests/cli/commands/test_task_command.py ## @@ -55,6 +55,7 @@ def reset(dag_id): runs.delete() +# TODO: Check if tests needs side effects - locally there's missing DAG Review comment: Can this be removed, looks like tests are passing ## File path: tests/cli/commands/test_dag_command.py ## @@ -47,6 +47,7 @@ ) +# TODO: Check if tests needs side effects - locally there's missing DAG Review comment: Can this be removed, looks like tests are passing This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #12704: Refactor list rendering in commands