[GitHub] flink issue #6031: [FLINK-9386] Embed netty router

Github user uce commented on the issue: https://github.com/apache/flink/pull/6031

> I planned to upgrade netty and drop netty-router in one step of upgrading flink-shaded-netty. Do you think it should be split somehow?

No, if the ticket for upgrading covers that, we are all good. 👍 to merge. Thanks for taking care of this. Looking forward to finally having a recent Netty version.

---

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190274800

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/MethodlessRouter.java

---

@@ -0,0 +1,121 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.rest.handler.router;

+

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.LinkedHashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.Map.Entry;

+

+/**

+ * This is adopted and simplified code from tv.cntt:netty-router library. For more information check {@link Router}.
+ *
+ * Router that doesn't contain information about HTTP request methods and route
+ * matching orders.
+ */
+final class MethodlessRouter<T> {
+ private static final Logger log = LoggerFactory.getLogger(MethodlessRouter.class);

+

+ // A path pattern can only point to one target

+ private final Map<PathPattern, T> routes = new LinkedHashMap<>();

--- End diff --

Fine with me to not invest more time into this and keep it as is 👍

---

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190274982

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/RouterHandler.java

---

@@ -0,0 +1,109 @@


+

+package org.apache.flink.runtime.rest.handler.router;

+

+import org.apache.flink.runtime.rest.handler.AbstractRestHandler;

+import org.apache.flink.runtime.rest.handler.util.HandlerUtils;

+import org.apache.flink.runtime.rest.messages.ErrorResponseBody;

+

+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelHandler;

+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelHandlerContext;
+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelInboundHandler;
+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelPipeline;
+import org.apache.flink.shaded.netty4.io.netty.channel.SimpleChannelInboundHandler;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.DefaultFullHttpResponse;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpHeaders;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpMethod;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpRequest;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpResponseStatus;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpVersion;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.QueryStringDecoder;

+

+import java.util.Map;

+

+import static java.util.Objects.requireNonNull;

+

+/**

+ * This class replaces the standard error response to be identical with those sent by the {@link AbstractRestHandler}.
+ */
+public class RouterHandler extends SimpleChannelInboundHandler<HttpRequest> {
+ public static final String ROUTER_HANDLER_NAME = RouterHandler.class.getName() + "_ROUTER_HANDLER";
+ public static final String ROUTED_HANDLER_NAME = RouterHandler.class.getName() + "_ROUTED_HANDLER";

+

+ private final Map<String, String> responseHeaders;

+ private final Router router;

+

+ public RouterHandler(Router router, final Map<String, String> responseHeaders) {

+ this.router = requireNonNull(router);

+ this.responseHeaders = requireNonNull(responseHeaders);

+ }

+

+ public String getName() {

+ return ROUTER_HANDLER_NAME;

+ }

+

+ @Override

+ protected void channelRead0(ChannelHandlerContext channelHandlerContext, HttpRequest httpRequest) throws Exception {
+ if (HttpHeaders.is100ContinueExpected(httpRequest)) {
+ channelHandlerContext.writeAndFlush(new DefaultFullHttpResponse(HttpVersion.HTTP_1_1, HttpResponseStatus.CONTINUE));
+ return;
+ }
+
+ // Route
+ HttpMethod method = httpRequest.getMethod();
+ QueryStringDecoder qsd = new QueryStringDecoder(httpRequest.getUri());
+ RouteResult routeResult = router.route(method, qsd.path(), qsd.parameters());

+

+ if (routeResult == null) {

+ respondNotFound(channelHandlerContext, httpRequest);

+ return;

+ }

+

+ routed(channelHandlerContext, routeResult, httpRequest);

+ }

+

+ private void routed(

+ ChannelHandlerContext channelHandlerContext,

+ RouteResult routeResult,

+ HttpRequest httpRequest) throws Exception {

+ ChannelInboundHandler handler = (ChannelInboundHandler) routeResult.target();

+

+ // The handler may have been added (keep alive)

+ ChannelPipeline pipeline = channelHandlerContext.pipeline();

+ ChannelHandler addedHandler = pipeline.get(ROUTED_HANDLE

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190214988

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/RouterHandler.java

---

@@ -0,0 +1,109 @@


[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190248429

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/RouteResult.java

---

@@ -0,0 +1,136 @@


+

+package org.apache.flink.runtime.rest.handler.router;

+

+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpMethod;

+import org.apache.flink.shaded.netty4.io.netty.util.internal.ObjectUtil;

+

+import java.util.ArrayList;

+import java.util.Collections;

+import java.util.List;

+import java.util.Map;

+

+/**

+ * This is adopted and simplified code from tv.cntt:netty-router library. For more information check {@link Router}.

+ *

+ * Result of calling {@link Router#route(HttpMethod, String)}.

+ */

+public class RouteResult {

--- End diff --

Maybe add a reference to https://github.com/sinetja/netty-router/blob/2.2.0/src/main/java/io/netty/handler/codec/http/router/RouteResult.java

---

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190244366

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/MethodlessRouter.java

---

@@ -0,0 +1,121 @@


+

+package org.apache.flink.runtime.rest.handler.router;

+

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.LinkedHashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.Map.Entry;

+

+/**

+ * This is adopted and simplified code from tv.cntt:netty-router library. For more information check {@link Router}.
+ *
+ * Router that doesn't contain information about HTTP request methods and route
+ * matching orders.
+ */
+final class MethodlessRouter<T> {
+ private static final Logger log = LoggerFactory.getLogger(MethodlessRouter.class);

+

+ // A path pattern can only point to one target

+ private final Map<PathPattern, T> routes = new LinkedHashMap<>();

--- End diff --

Please correct me if I'm wrong, but I have a question regarding memory visibility: the thread that updates this map is not the same as the Netty event loop threads, and `LinkedHashMap` is not thread-safe. If routes are added after the router has been passed to the `RouterHandler`, the event loop threads might theoretically not see those updates. I don't think that we do this currently, but the API allows it. I'm wondering whether it makes sense to make the routes immutable, for example by creating them with a builder:

```java
Routes routes = new RoutesBuilder()
    .addGet(...)
    ...
    .build();
Router router = new Router(routes);
```

Or use a thread-safe map here that also preserves ordering (for example, by wrapping it with `Collections.synchronizedMap`).
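A minimal sketch of the builder variant, assuming hypothetical `Routes`, `RoutesBuilder` and `Router` classes (the names follow the snippet above; they are not the API in this PR). To keep it short it ignores HTTP methods (so the builder method is a plain `add` instead of `addGet`) and only does exact-match lookup. The builder is confined to one thread; `build()` copies the entries into an unmodifiable view of a fresh `LinkedHashMap`, and the router stores the result in a `final` field. Under the Java Memory Model's final-field semantics, any thread that obtains a reference to the fully constructed `Router` sees the populated routes, provided `this` does not escape the constructor.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical immutable route table: path pattern -> target, in registration order. */
final class Routes<T> {
    private final Map<String, T> routes;

    Routes(Map<String, T> routes) {
        // Defensive copy + unmodifiable view: the table cannot change after construction.
        this.routes = Collections.unmodifiableMap(new LinkedHashMap<>(routes));
    }

    Map<String, T> asMap() {
        return routes;
    }
}

/** Hypothetical builder, meant to be used by a single thread; only build() output is shared. */
final class RoutesBuilder<T> {
    private final Map<String, T> routes = new LinkedHashMap<>();

    RoutesBuilder<T> add(String pathPattern, T target) {
        // A path pattern can only point to one target.
        if (routes.putIfAbsent(pathPattern, target) != null) {
            throw new IllegalArgumentException("Duplicate route: " + pathPattern);
        }
        return this;
    }

    Routes<T> build() {
        return new Routes<>(routes);
    }
}

/** Hypothetical read-only router; it never mutates its routes after construction. */
final class Router<T> {
    // final field: the built Routes is visible to any thread that sees this Router.
    private final Routes<T> routes;

    Router(Routes<T> routes) {
        this.routes = routes;
    }

    /** Exact-match lookup only; returns null if no pattern equals the path. */
    T route(String path) {
        return routes.asMap().get(path);
    }
}
```

Because mutation is only possible on the builder, the "routes added after the router was handed to the `RouterHandler`" case can no longer be expressed.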

---

Since we currently rely on the correct registration order (e.g. `/jobs/overview` before `/jobs/:id` for correct matching), the immutable approach would allow us to include a utility in `Routes` that sorts the patterns as done in `RestServerEndpoint:143`:

```java
Collections.sort(handlers, RestHandlerUrlComparator.INSTANCE);
```
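To illustrate the kind of ordering such a utility could enforce, here is a hypothetical comparator (`PatternOrder` is not Flink's `RestHandlerUrlComparator`, whose exact rules may differ). It compares patterns segment by segment and, at the first differing segment, ranks constants before `:name` placeholders and those before the `:*` catch-all, so that a first-match router tries `/jobs/overview` before `/jobs/:id`.

```java
import java.util.Comparator;

/** Hypothetical comparator: more specific path patterns sort first. */
final class PatternOrder implements Comparator<String> {
    static final PatternOrder INSTANCE = new PatternOrder();

    private PatternOrder() {
    }

    /** 0 = constant segment, 1 = named placeholder, 2 = catch-all (most generic). */
    private static int rank(String segment) {
        if (segment.equals(":*")) {
            return 2;
        }
        if (segment.startsWith(":")) {
            return 1;
        }
        return 0;
    }

    @Override
    public int compare(String a, String b) {
        String[] sa = a.split("/");
        String[] sb = b.split("/");
        int n = Math.min(sa.length, sb.length);
        for (int i = 0; i < n; i++) {
            // At the first differing segment, the more specific kind wins...
            int byRank = Integer.compare(rank(sa[i]), rank(sb[i]));
            if (byRank != 0) {
                return byRank;
            }
            // ...and equally specific segments fall back to lexicographic order.
            int byName = sa[i].compareTo(sb[i]);
            if (byName != 0) {
                return byName;
            }
        }
        // One pattern is a prefix of the other: the shorter one first.
        return Integer.compare(sa.length, sb.length);
    }
}
```

Since every segment is compared by a total order (rank, then name) and ties are broken by length, this is a consistent total order that is safe to use with `List.sort` or `Collections.sort`.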

---

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190191066

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/RouterHandler.java

---

@@ -0,0 +1,109 @@


+

+package org.apache.flink.runtime.rest.handler.router;

+

+import org.apache.flink.runtime.rest.handler.AbstractRestHandler;

+import org.apache.flink.runtime.rest.handler.util.HandlerUtils;

+import org.apache.flink.runtime.rest.messages.ErrorResponseBody;

+

+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelHandler;

+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelHandlerContext;
+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelInboundHandler;
+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelPipeline;
+import org.apache.flink.shaded.netty4.io.netty.channel.SimpleChannelInboundHandler;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.DefaultFullHttpResponse;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpHeaders;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpMethod;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpRequest;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpResponseStatus;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpVersion;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.QueryStringDecoder;

+

+import java.util.Map;

+

+import static java.util.Objects.requireNonNull;

+

+/**

+ * This class replaces the standard error response to be identical with those sent by the {@link AbstractRestHandler}.
+ */
+public class RouterHandler extends SimpleChannelInboundHandler<HttpRequest> {

--- End diff --

I've verified that this class merges the behaviour of `Handler` and `AbstractHandler`. 👍

Since we copied the code, we might leave it as is, but I noticed the following minor things (there are similar warnings in the other copied classes):

- `L46`, `L47`: fields can be private
- `L62`: `throws Exception` can be removed
- `L98`: I get an unchecked call warning for `RouteResult`. We could use `` to get rid of it I think

---

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190246117

--- Diff:

flink-runtime-web/src/main/java/org/apache/flink/runtime/webmonitor/RuntimeMonitorHandler.java

---

@@ -89,19 +91,20 @@ public RuntimeMonitorHandler(

}

@Override

- protected void respondAsLeader(ChannelHandlerContext ctx, Routed routed, JobManagerGateway jobManagerGateway) {
+ protected void respondAsLeader(ChannelHandlerContext ctx, RoutedRequest routedRequest, JobManagerGateway jobManagerGateway) {

CompletableFuture responseFuture;

+ RouteResult result = routedRequest.getRouteResult();

--- End diff --

I think if you do `RouteResult result = routedRequest.getRouteResult();` you don't need the cast to `Set` in lines 101 and 106.

---

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190248637

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/Router.java

---

@@ -0,0 +1,398 @@

+package org.apache.flink.runtime.rest.handler.router;
+
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpMethod;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.QueryStringDecoder;
+
+import java.util.ArrayList;
+import java.util.Collections;
+import java.util.HashMap;
+import java.util.HashSet;
+import java.util.List;
+import java.util.Map;
+import java.util.Map.Entry;
+import java.util.Set;
+
+/**
+ * This is adopted and simplified code from tv.cntt:netty-router library. Compared to original version this one

--- End diff --

- Maybe add a link to https://github.com/sinetja/netty-router/blob/2.2.0/src/main/java/io/netty/handler/codec/http/router/Router.java

---

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190213387

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/MethodlessRouter.java

---

@@ -0,0 +1,121 @@


+

+package org.apache.flink.runtime.rest.handler.router;

+

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.LinkedHashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.Map.Entry;

+

+/**

+ * This is adopted and simplified code from tv.cntt:netty-router library. For more information check {@link Router}.
+ *
+ * Router that doesn't contain information about HTTP request methods and route
+ * matching orders.
+ */
+final class MethodlessRouter<T> {
+ private static final Logger log = LoggerFactory.getLogger(MethodlessRouter.class);

+

+ // A path pattern can only point to one target

+ private final Map<PathPattern, T> routes = new LinkedHashMap<>();

--- End diff --

Using the `LinkedHashMap` is what preserves the order of adding handlers, right? Or is there something else that I've missed?
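For reference, `LinkedHashMap` iterates in insertion order (re-inserting an existing key does not change that order), while `HashMap` makes no ordering guarantee. The sketch below uses a hypothetical, simplified first-match lookup (`FirstMatchRouter`, with `:name` placeholders only and no `:*`; it is not the PR's `MethodlessRouter` code) to show why that iteration order decides which pattern wins.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical first-match lookup over patterns kept in registration order. */
final class FirstMatchRouter {

    /** True if both have the same number of segments and every constant segment matches. */
    static boolean matches(String pattern, String path) {
        String[] p = pattern.split("/");
        String[] s = path.split("/");
        if (p.length != s.length) {
            return false;
        }
        for (int i = 0; i < p.length; i++) {
            // ":name" segments match any single path segment.
            if (!p[i].startsWith(":") && !p[i].equals(s[i])) {
                return false;
            }
        }
        return true;
    }

    /** Returns the target of the first registered pattern that matches, or null. */
    static <T> T route(LinkedHashMap<String, T> routes, String path) {
        for (Map.Entry<String, T> e : routes.entrySet()) {
            if (matches(e.getKey(), path)) {
                return e.getValue();
            }
        }
        return null;
    }
}
```

With `/jobs/overview` registered before `/jobs/:jobid`, a request for `/jobs/overview` reaches the overview target; with the opposite registration order, the placeholder pattern shadows it.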

---

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190248244

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/PathPattern.java

---

@@ -0,0 +1,179 @@


+

+package org.apache.flink.runtime.rest.handler.router;

+

+import java.util.Map;

+

+import static org.apache.flink.util.Preconditions.checkNotNull;

+

+/**

+ * This is adopted and simplified code from tv.cntt:netty-router library. For more information check {@link Router}.

+ *

+ * The pattern can contain constants or placeholders, example:

+ * {@code constant1/:placeholder1/constant2/:*}.

+ *

+ * {@code :*} is a special placeholder to catch the rest of the path

+ * (may include slashes). If exists, it must appear at the end of the path.

+ *

+ * The pattern must not contain query, example:

+ * {@code constant1/constant2?foo=bar}.

+ *

+ * The pattern will be broken to tokens, example:

+ * {@code ["constant1", ":variable", "constant2", ":*"]}

+ */

+final class PathPattern {

--- End diff --

Maybe add a reference to https://github.com/sinetja/netty-router/blob/2.2.0/src/main/java/io/netty/handler/codec/http/router/PathPattern.java
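The semantics described in the `PathPattern` Javadoc quoted above (constant tokens, `:name` placeholders, a trailing `:*` catch-all that may span slashes, no query part) can be sketched as follows. `SimplePathPattern` and its `match` method are hypothetical; the netty-router `PathPattern` may differ in details such as how it treats leading/trailing slashes or an empty catch-all.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical, simplified path pattern: constants, ":name" placeholders, trailing ":*". */
final class SimplePathPattern {
    private final String[] tokens;

    SimplePathPattern(String pattern) {
        if (pattern.indexOf('?') >= 0) {
            throw new IllegalArgumentException("Pattern must not contain a query: " + pattern);
        }
        // "/constant1/:placeholder1/constant2/:*" -> ["constant1", ":placeholder1", "constant2", ":*"]
        this.tokens = stripSlashes(pattern).split("/");
        for (int i = 0; i < tokens.length - 1; i++) {
            if (tokens[i].equals(":*")) {
                throw new IllegalArgumentException(":* must be the last token: " + pattern);
            }
        }
    }

    private static String stripSlashes(String path) {
        int start = 0;
        int end = path.length();
        while (start < end && path.charAt(start) == '/') {
            start++;
        }
        while (end > start && path.charAt(end - 1) == '/') {
            end--;
        }
        return path.substring(start, end);
    }

    /** Returns the extracted parameters if the path matches, or null otherwise. */
    Map<String, String> match(String path) {
        String[] parts = stripSlashes(path).split("/");
        Map<String, String> params = new HashMap<>();
        for (int i = 0; i < tokens.length; i++) {
            String token = tokens[i];
            if (token.equals(":*")) {
                // Catch-all: the remaining segments, slashes included (possibly empty).
                params.put("*", String.join("/", Arrays.copyOfRange(parts, i, parts.length)));
                return params;
            }
            if (i >= parts.length) {
                return null; // path is shorter than the pattern
            }
            if (token.startsWith(":")) {
                params.put(token.substring(1), parts[i]);
            } else if (!token.equals(parts[i])) {
                return null; // constant mismatch
            }
        }
        // Without a catch-all, the path must not have extra segments.
        return parts.length == tokens.length ? params : null;
    }
}
```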

---

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190186599

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/RouterHandler.java

---

@@ -0,0 +1,109 @@


+

+package org.apache.flink.runtime.rest.handler.router;

+

+import org.apache.flink.runtime.rest.handler.AbstractRestHandler;

+import org.apache.flink.runtime.rest.handler.util.HandlerUtils;

+import org.apache.flink.runtime.rest.messages.ErrorResponseBody;

+

+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelHandler;

+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelHandlerContext;
+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelInboundHandler;
+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelPipeline;
+import org.apache.flink.shaded.netty4.io.netty.channel.SimpleChannelInboundHandler;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.DefaultFullHttpResponse;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpHeaders;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpMethod;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpRequest;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpResponseStatus;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.HttpVersion;
+import org.apache.flink.shaded.netty4.io.netty.handler.codec.http.QueryStringDecoder;

+

+import java.util.Map;

+

+import static java.util.Objects.requireNonNull;

+

+/**

+ * This class replaces the standard error response to be identical with those sent by the {@link AbstractRestHandler}.

--- End diff --

- I would add a comment that this was copied and simplified from https://github.com/sinetja/netty-router/blob/1.10/src/main/java/io/netty/handler/codec/http/router/Handler.java and https://github.com/sinetja/netty-router/blob/1.10/src/main/java/io/netty/handler/codec/http/router/AbstractHandler.java for future reference.
- I would also copy the high-level comment from the original `Handler` class: `Inbound handler that converts HttpRequest to RoutedRequest and passes RoutedRequest to the matched handler`.
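The quoted high-level behaviour (convert the request into a routed request, pass it to the matched handler, otherwise answer with 404) can be sketched without Netty. Everything here is hypothetical: `DispatchSketch` stands in for `RouterHandler`, the hand-rolled `decodeQuery` stands in for Netty's `QueryStringDecoder`, path matching is exact, and plain strings stand in for HTTP responses.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLDecoder;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical, Netty-free sketch of "route the request, else 404"; exact path matching only. */
final class DispatchSketch {

    /** Decodes "a=1&b=2&a=3" into {a=[1, 3], b=[2]}; repeated keys keep all values. */
    static Map<String, List<String>> decodeQuery(String query) {
        Map<String, List<String>> params = new LinkedHashMap<>();
        if (query.isEmpty()) {
            return params;
        }
        for (String pair : query.split("&")) {
            int eq = pair.indexOf('=');
            String key = decode(eq < 0 ? pair : pair.substring(0, eq));
            String value = eq < 0 ? "" : decode(pair.substring(eq + 1));
            params.computeIfAbsent(key, k -> new ArrayList<>()).add(value);
        }
        return params;
    }

    private static String decode(String s) {
        try {
            return URLDecoder.decode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e); // UTF-8 is always supported by the JVM
        }
    }

    /** Splits the URI into path and query, then hands the decoded parameters to the matched handler. */
    static String dispatch(String uri, Map<String, Function<Map<String, List<String>>, String>> handlers) {
        int q = uri.indexOf('?');
        String path = q < 0 ? uri : uri.substring(0, q);
        String query = q < 0 ? "" : uri.substring(q + 1);
        Function<Map<String, List<String>>, String> handler = handlers.get(path);
        if (handler == null) {
            return "404 Not Found"; // stands in for the error response RouterHandler sends
        }
        return handler.apply(decodeQuery(query));
    }
}
```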

---

[GitHub] flink pull request #6031: [FLINK-9386] Embed netty router

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/6031#discussion_r190197601

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/handler/router/MethodlessRouter.java

---

@@ -0,0 +1,121 @@


+

+package org.apache.flink.runtime.rest.handler.router;

+

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.LinkedHashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.Map.Entry;

+

+/**

+ * This is adopted and simplified code from tv.cntt:netty-router library. For more information check {@link Router}.

--- End diff --

- Maybe add links to https://github.com/sinetja/netty-router/blob/2.2.0/src/main/java/io/netty/handler/codec/http/router/MethodlessRouter.java and https://github.com/sinetja/netty-router/blob/2.2.0/src/main/java/io/netty/handler/codec/http/router/OrderlessRouter.java as references.

---

[GitHub] flink pull request #:

Github user uce commented on the pull request: https://github.com/apache/flink/commit/3a61ea47922280e15f462ca3cdc0c367047bde24#commitcomment-28293221

@twalthr I think this broke the build. At least locally, I get a RAT license failure:

```
1 Unknown Licenses
*
Files with unapproved licenses:
  docs/page/js/jquery.min.js
*
```

---

[GitHub] flink issue #5395: [FLINK-8308] Remove explicit yajl-ruby dependency, update...

Github user uce commented on the issue: https://github.com/apache/flink/pull/5395 Didn't merge yet, because there is an issue with the buildbot environment. ---

[GitHub] flink issue #5395: [FLINK-8308] Remove explicit yajl-ruby dependency, update...

Github user uce commented on the issue: https://github.com/apache/flink/pull/5395 I've built this locally and everything looks good to me (linebreaks and code highlighting). I will merge this to `master`, but keep this PR open for a while. If everything works in the `buildbot` environment I will also merge it to 1.4. Then we can close this PR. :-) ---

[GitHub] flink pull request #4809: [FLINK-7803][Documentation] Add missing savepoint ...

Github user uce commented on a diff in the pull request: https://github.com/apache/flink/pull/4809#discussion_r166008336 --- Diff: docs/ops/state/savepoints.md --- @@ -120,6 +120,10 @@ This will atomically trigger a savepoint for the job with ID `:jobid` and cancel If you don't specify a target directory, you need to have [configured a default directory](#configuration). Otherwise, cancelling the job with a savepoint will fail. + --- End diff -- 👍 ---

[GitHub] flink issue #5395: [FLINK-8308] Remove explicit yajl-ruby dependency, update...

Github user uce commented on the issue: https://github.com/apache/flink/pull/5395 https://issues.apache.org/jira/browse/INFRA-15959 `ruby2.3.1` has been installed on the buildbot. I will check whether the build works as expected and report back here (probably only by next week). ---

[GitHub] flink pull request #5395: [FLINK-8308] Remove explicit yajl-ruby dependency,...

Github user uce commented on a diff in the pull request: https://github.com/apache/flink/pull/5395#discussion_r165295535 --- Diff: docs/_config.yml --- @@ -77,12 +77,7 @@ defaults: layout: plain nav-pos: 9 # Move to end if no pos specified -markdown: KramdownPygments --- End diff -- Some questions regarding the removed lines: - What is used for markdown and code highlighting now? - Do we still support GitHub-flavored Markdown? - What happens to new lines? I didn't have time to build the docs again to check yet. ---

[GitHub] flink issue #5395: [FLINK-8308] Remove explicit yajl-ruby dependency, update...

Github user uce commented on the issue: https://github.com/apache/flink/pull/5395 https://ci.apache.org/builders/flink-docs-master/builds/977/steps/Flink%20docs/logs/stdio says ``` Ruby version: ruby 2.0.0p384 (2014-01-12) [x86_64-linux-gnu] ``` I can ask INFRA whether it is possible to run a more recent Ruby version. ---

[GitHub] flink issue #5331: [FLINK-8473][webUI] Improve error behavior of JarListHand...

Github user uce commented on the issue: https://github.com/apache/flink/pull/5331 I just tried it: re-uploading after deleting the directory **does not work**. Good catch, Stephan. :-) @zentol: I found `HttpRequestHandler`, which handles the uploads. The handler assumes that the directory exists, and the logic for creating the temp directory is in `WebRuntimeMonitor`. We should consolidate this in a single place (if no one else needs this directory). ---

[GitHub] flink issue #5331: [FLINK-8473][webUI] Improve error behavior of JarListHand...

Github user uce commented on the issue: https://github.com/apache/flink/pull/5331 👍 to merge. ---

[GitHub] flink pull request #5331: [FLINK-8473][webUI] Improve error behavior of JarL...

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/5331#discussion_r162935682

--- Diff:

flink-runtime-web/src/main/java/org/apache/flink/runtime/webmonitor/handlers/JarListHandler.java

---

@@ -77,6 +82,11 @@ public boolean accept(File dir, String name) {
}
});
+ if (list == null) {
+ LOG.warn("Jar storage directory {} has been deleted.", jarDir.getAbsolutePath());

--- End diff --

Somehow this comment got lost before: Should we make the error message more explicit and say that it was `deleted externally` (i.e. not by Flink)?
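A minimal sketch of why the `list == null` check is needed, assuming a hypothetical helper `JarDirectoryLister.listJars` (not Flink's actual `JarListHandler` code). `File.listFiles(FilenameFilter)` returns `null` instead of an empty array when the directory does not exist. The sketch also recreates the directory so that a later upload can succeed, which is one possible answer to the re-upload problem mentioned in the issue comments; `System.err` stands in for the handler's logger.

```java
import java.io.File;
import java.io.IOException;

// Hypothetical helper, not Flink's JarListHandler: lists *.jar files and
// recreates the storage directory if it was deleted externally.
final class JarDirectoryLister {

	static File[] listJars(File jarDir) throws IOException {
		File[] jars = jarDir.listFiles((dir, name) -> name.endsWith(".jar"));
		if (jars == null) {
			// listFiles returns null if jarDir does not exist or is not a directory.
			System.err.println("Jar storage directory " + jarDir.getAbsolutePath()
				+ " has been deleted externally (not by Flink). Recreating it.");
			if (!jarDir.mkdirs() && !jarDir.isDirectory()) {
				throw new IOException("Could not recreate jar directory " + jarDir);
			}
			return new File[0];
		}
		return jars;
	}
}
```

Recreating the directory inside a listing call is a design choice; consolidating directory creation in a single place, as suggested in the issue thread, may be the cleaner option.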

---

[GitHub] flink pull request #5331: [FLINK-8473][webUI] Improve error behavior of JarL...

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/5331#discussion_r162932050

--- Diff:

flink-runtime-web/src/main/java/org/apache/flink/runtime/webmonitor/handlers/JarListHandler.java

---

@@ -145,6 +155,7 @@ public boolean accept(File dir, String name) {
return writer.toString();
}
catch (Exception e) {
+ LOG.warn("Failed to fetch jar list.", e);

--- End diff --

Aren't failed completions logged anyway?

---

[GitHub] flink issue #5264: [FLINK-8352][web-dashboard] Flink UI Reports No Error on ...

Github user uce commented on the issue: https://github.com/apache/flink/pull/5264 Build failure is unrelated (YARN test). Merging this. Thanks! ---

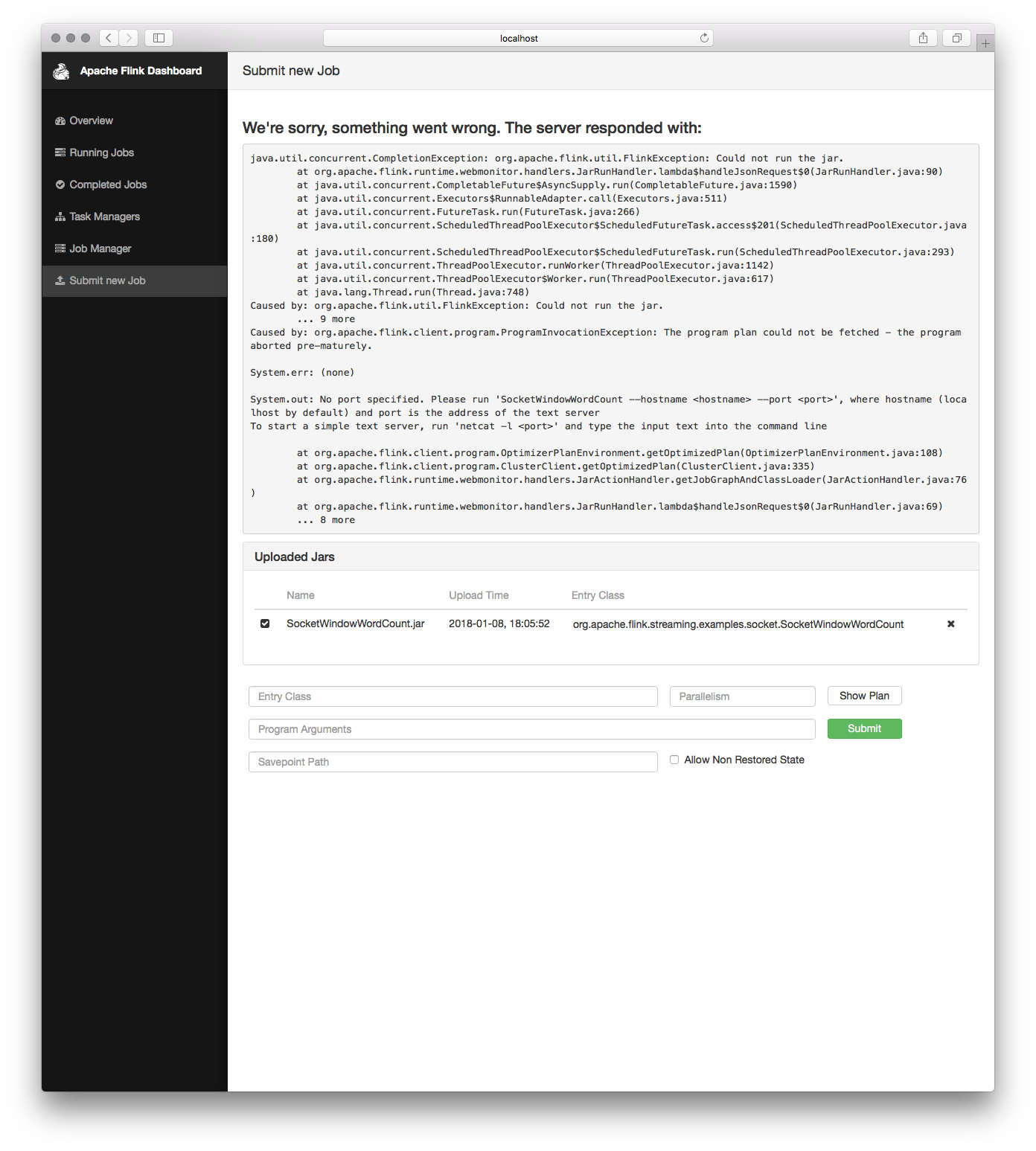

[GitHub] flink issue #5264: [FLINK-8352][web-dashboard] Flink UI Reports No Error on ...

Github user uce commented on the issue: https://github.com/apache/flink/pull/5264 Looks good to me. I've tried this out locally with both a working and a non-working JAR (see screenshot). I will merge this as soon as Travis gives a green light. ---

[GitHub] flink issue #5263: [FLINK-7991][docs] Fix baseUrl for master branch

Github user uce commented on the issue: https://github.com/apache/flink/pull/5263 Looks good to me. Good catch! +1 to merge 👍 ---

[GitHub] flink pull request #:

Github user uce commented on the pull request: https://github.com/apache/flink/commit/8086e3bee8be4614359041c14786140edff19666#commitcomment-26179876 I know that the project historically did not consider the REST API a public API, but I would vote to document all of these breaking REST API changes in the upcoming 1.5 release notes in order to give users a good migration path. I just ran into this when pointing a tool that used the `jobsoverview` endpoint of 1.4 at the latest master, and I had to investigate what happened when I got a 404. It might also be worth adding redirects in 1.5 and only removing them in the release after that (cc @zentol). ---

[GitHub] flink issue #5102: [FLINK-7762, FLINK-8167] Clean up and harden WikipediaEdi...

Github user uce commented on the issue: https://github.com/apache/flink/pull/5102 I would be in favour of removing this or moving it to Bahir, but it is currently used in a [doc example](https://ci.apache.org/projects/flink/flink-docs-release-1.4/quickstart/run_example_quickstart.html). Besides that, I doubt that this is of much value to users. If you're OK with it, let's merge this for now and think about whether the example really needs it. ---

[GitHub] flink pull request #5102: [FLINK-7762, FLINK-8167] Clean up and harden Wikip...

GitHub user uce opened a pull request: https://github.com/apache/flink/pull/5102 [FLINK-7762, FLINK-8167] Clean up and harden WikipediaEditsSource ## What is the purpose of the change This pull request addresses two related issues with the WikipediaEditsSource. It makes the WikipediaEditsSourceTest a proper test instead of unnecessarily starting a FlinkMiniCluster and addresses a potential test instability. In general, the WikipediaEditsSource is not in good shape and could benefit from further refactoring. One potential area for improvement is integration with the asynchronous channel listener that reports events like errors or being kicked out of a channel, etc. I did not do this due to time constraints and the fact that this is not a production source. In general, it is questionable whether we should keep the test as is or remove it, since it depends on connectivity to an IRC channel. ## Brief change log - Harden WikipediaEditsSource with eager sanity checks - Make WikipediaEditsSourceTest a proper test ## Verifying this change This change is a rework/code cleanup without any new test coverage. ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): yes, but only to `flink-test-utils-junit` - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no - The serializers: no - The runtime per-record code paths (performance sensitive): no - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: no - The S3 file system connector: no ## Documentation - Does this pull request introduce a new feature? no - If yes, how is the feature documented? 
not applicable You can merge this pull request into a Git repository by running: $ git pull https://github.com/uce/flink 7762-8167-wikiedits Alternatively you can review and apply these changes as the patch at: https://github.com/apache/flink/pull/5102.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #5102 commit b2ab66f05ce545214a8132dc2d46b3143939b015 Author: Ufuk Celebi <u...@apache.org> Date: 2017-11-29T15:28:18Z [FLINK-8167] [connector-wikiedits] Harden WikipediaEditsSource - Minor eager sanity checks - Use UUID suffix for nickname. As reported in FLINK-8167, the current nickname suffix can result in nickname clashes which lead to test failures. commit 06ec1542963bbe2afaf1ad1fd55a54d13f855304 Author: Ufuk Celebi <u...@apache.org> Date: 2017-11-29T15:36:29Z [FLINK-7762] [connector-wikiedits] Make WikipediaEditsSourceTest proper test The WikipediaEditsSourceTest unnecessarily implements an integration test that starts a FlinkMiniCluster and executes a small Flink program. This simply creates a source and executes run in a separate thread until a single WikipediaEditEvent is received. ---

[GitHub] flink pull request #4533: [FLINK-7416][network] Implement Netty receiver out...

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/4533#discussion_r151946320

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/io/network/netty/CreditBasedClientHandler.java

---

@@ -0,0 +1,364 @@
+/*
+ * Licensed to the Apache Software Foundation (ASF) under one
+ * or more contributor license agreements. See the NOTICE file
+ * distributed with this work for additional information
+ * regarding copyright ownership. The ASF licenses this file
+ * to you under the Apache License, Version 2.0 (the
+ * "License"); you may not use this file except in compliance
+ * with the License. You may obtain a copy of the License at
+ *
+ * http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software
+ * distributed under the License is distributed on an "AS IS" BASIS,
+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ * See the License for the specific language governing permissions and
+ * limitations under the License.
+ */
+
+package org.apache.flink.runtime.io.network.netty;
+
+import org.apache.flink.core.memory.MemorySegment;
+import org.apache.flink.core.memory.MemorySegmentFactory;
+import org.apache.flink.runtime.io.network.buffer.Buffer;
+import org.apache.flink.runtime.io.network.buffer.FreeingBufferRecycler;
+import org.apache.flink.runtime.io.network.netty.exception.LocalTransportException;
+import org.apache.flink.runtime.io.network.netty.exception.RemoteTransportException;
+import org.apache.flink.runtime.io.network.netty.exception.TransportException;
+import org.apache.flink.runtime.io.network.netty.NettyMessage.AddCredit;
+import org.apache.flink.runtime.io.network.partition.PartitionNotFoundException;
+import org.apache.flink.runtime.io.network.partition.consumer.InputChannelID;
+import org.apache.flink.runtime.io.network.partition.consumer.RemoteInputChannel;
+
+import org.apache.flink.shaded.netty4.io.netty.channel.Channel;
+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelFuture;
+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelFutureListener;
+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelHandlerContext;
+import org.apache.flink.shaded.netty4.io.netty.channel.ChannelInboundHandlerAdapter;
+
+import org.slf4j.Logger;
+import org.slf4j.LoggerFactory;
+
+import java.io.IOException;
+import java.net.SocketAddress;
+import java.util.ArrayDeque;
+import java.util.concurrent.ConcurrentHashMap;
+import java.util.concurrent.ConcurrentMap;
+import java.util.concurrent.atomic.AtomicReference;
+
+/**
+ * Channel handler to read the messages of buffer response or error response from the
+ * producer, to write and flush the unannounced credits for the producer.
+ */
+class CreditBasedClientHandler extends ChannelInboundHandlerAdapter {
+
+ private static final Logger LOG = LoggerFactory.getLogger(CreditBasedClientHandler.class);
+
+ /** Channels, which already requested partitions from the producers. */
+ private final ConcurrentMap<InputChannelID, RemoteInputChannel> inputChannels = new ConcurrentHashMap<>();
+
+ /** Channels, which will notify the producers about unannounced credit. */
+ private final ArrayDeque inputChannelsWithCredit = new ArrayDeque<>();
+
+ private final AtomicReference channelError = new AtomicReference<>();
+
+ private final ChannelFutureListener writeListener = new WriteAndFlushNextMessageIfPossibleListener();
+
+ /**
+ * Set of cancelled partition requests. A request is cancelled iff an input channel is cleared
+ * while data is still coming in for this channel.
+ */
+ private final ConcurrentMap<InputChannelID, InputChannelID> cancelled = new ConcurrentHashMap<>();
+
+ private volatile ChannelHandlerContext ctx;

--- End diff --

👍
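The two fields `inputChannelsWithCredit` and `writeListener` in the diff suggest a queue of channels with unannounced credit that is drained one write at a time. The following is a deliberately simplified, single-threaded model of that idea, not the handler's actual logic: the class and method names are hypothetical, strings stand in for input channel IDs, a boolean `writeInProgress` stands in for Netty's channel writability check, and an explicit `onWriteComplete()` call plays the role of the `ChannelFutureListener`.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

// Hypothetical, single-threaded model of a credit announcement queue; it is
// not Flink's CreditBasedClientHandler.
final class CreditAnnouncementQueue {

	/** Channels with credit that has not been announced to the producer yet. */
	private final ArrayDeque<String> channelsWithCredit = new ArrayDeque<>();

	/** Messages "written" so far, recorded instead of sending AddCredit over Netty. */
	private final List<String> written = new ArrayList<>();

	/** Stand-in for the writability check on the Netty channel. */
	private boolean writeInProgress;

	void notifyCreditAvailable(String channelId) {
		channelsWithCredit.add(channelId);
		writeNextIfPossible();
	}

	/** Plays the role of the write listener: called once the previous write is flushed. */
	void onWriteComplete() {
		writeInProgress = false;
		writeNextIfPossible();
	}

	private void writeNextIfPossible() {
		if (writeInProgress) {
			return;
		}
		String next = channelsWithCredit.poll();
		if (next != null) {
			writeInProgress = true;
			written.add("AddCredit(" + next + ")");
		}
	}

	List<String> written() {
		return written;
	}
}
```

Chaining the next write from the completion callback avoids looping over the whole queue on the event loop and naturally backs off when the channel is busy.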

---

[GitHub] flink issue #3442: [FLINK-5778] [savepoints] Add savepoint serializer with r...

Github user uce commented on the issue: https://github.com/apache/flink/pull/3442 I had a quick chat with Stephan about this. @StefanRRichter has an idea how to properly implement this. Closing this PR and unassigning the issue. ---

[GitHub] flink pull request #3442: [FLINK-5778] [savepoints] Add savepoint serializer...

Github user uce closed the pull request at: https://github.com/apache/flink/pull/3442 ---

[GitHub] flink issue #4888: [backport] [FLINK-7067] Resume checkpointing after failed...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4888 Merged in e8e2913. ---

[GitHub] flink pull request #4888: [backport] [FLINK-7067] Resume checkpointing after...

Github user uce closed the pull request at: https://github.com/apache/flink/pull/4888 ---

[GitHub] flink issue #4888: [backport] [FLINK-7067] Resume checkpointing after failed...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4888 All failed tests are due to a similar issue that seems not to be related to the tests. Merging... ---

[GitHub] flink issue #4888: [backport] [FLINK-7067] Resume checkpointing after failed...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4888 @aljoscha Getting the following message in two of the builds in https://travis-ci.org/apache/flink/builds/291523047: ``` == Compilation/test failure detected, skipping shaded dependency check. == ``` Ever seen this? ---

[GitHub] flink pull request #4888: [backport] [FLINK-7067] Resume checkpointing after...

GitHub user uce opened a pull request: https://github.com/apache/flink/pull/4888 [backport] [FLINK-7067] Resume checkpointing after failed cancel-job-with-savepoint This is a backport of #4254. I will merge this as soon as Travis gives the green light. You can merge this pull request into a Git repository by running: $ git pull https://github.com/uce/flink 7067-backport Alternatively you can review and apply these changes as the patch at: https://github.com/apache/flink/pull/4888.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #4888 commit 9226c3a15f8037851110fbdecf775cad99da771f Author: Ufuk Celebi <u...@apache.org> Date: 2017-07-04T14:39:02Z [hotfix] [tests] Reduce visibility of helper class methods There is no need to make the helper methods public. No other class should even use this inner test helper invokable. commit c571929ce476f17d02ee22df0b5170b0eb322c2d Author: Ufuk Celebi <u...@apache.org> Date: 2017-07-04T15:01:32Z [FLINK-7067] [jobmanager] Resume periodic checkpoints after failed cancel-job-with-savepoint Problem: If a cancel-job-with-savepoint request fails, this has an unintended side effect on the respective job if it has periodic checkpoints enabled. The periodic checkpoint scheduler is stopped before triggering the savepoint, but not restarted if a savepoint fails and the job is not cancelled. This commit makes sure that the periodic checkpoint scheduler is restarted iff periodic checkpoints were enabled before. This closes #4254. commit 074630a2fbd6dbdc7ff775ee9fb5d46c088dbc6d Author: Ufuk Celebi <u...@apache.org> Date: 2017-10-23T12:42:46Z [FLINK-7067] [jobmanager] Backport to 1.3 ---

[GitHub] flink issue #4254: [FLINK-7067] [jobmanager] Fix side effects after failed c...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4254 Travis gave the green light, merging this now. ---

[GitHub] flink issue #4254: [FLINK-7067] [jobmanager] Fix side effects after failed c...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4254 Cool! I'll rebase this and merge after Travis gives the green light. ---

[GitHub] flink issue #4254: [FLINK-7067] [jobmanager] Fix side effects after failed c...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4254 @tillrohrmann Thanks for looking over this. The `TestingCluster` is definitely preferable. I don't recall how I ended up with the custom setup instead of the `TestingCluster`. I changed the test to wait for another checkpoint after the failed savepoint. I also considered this for the initial PR, but went with mocking in order to test the case that periodic checkpoints were not activated before the cancellation [1]. I think the current variant is a good compromise between completeness and simplicity though. [1] As seen in the diff of `JobManager.scala`, we only activate the periodic scheduler after a failed cancellation iff it was activated before cancellation. This case can't be tested robustly with the current approach. We could wait for some time and if no checkpoint arrives in that time consider checkpoints as not accidentally activated, but that's not robust. I would therefore ignore this case if you don't have another idea. ---
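The invariant the two tests above check ("restart the periodic scheduler after a failed cancellation iff it was running before") can be captured in a small model. This is a hypothetical sketch (`CheckpointSchedulerModel` is not a Flink class, and the real logic lives in `JobManager.scala`); it only shows the remember-and-restore pattern.

```java
// Hypothetical, simplified model of the FLINK-7067 fix: the periodic
// checkpoint scheduler is stopped before a cancel-with-savepoint and, if the
// savepoint fails, restarted only if it was running before.
final class CheckpointSchedulerModel {

	private boolean periodicSchedulerRunning;

	CheckpointSchedulerModel(boolean periodicCheckpointsEnabled) {
		this.periodicSchedulerRunning = periodicCheckpointsEnabled;
	}

	boolean isPeriodicSchedulerRunning() {
		return periodicSchedulerRunning;
	}

	/** Returns true if the job was cancelled. */
	boolean cancelWithSavepoint(boolean savepointSucceeds) {
		// Remember the state before touching the scheduler.
		boolean wasRunning = periodicSchedulerRunning;
		// Stop periodic checkpoints so they don't interfere with the savepoint.
		periodicSchedulerRunning = false;
		if (savepointSucceeds) {
			// The job is cancelled, so there is nothing to restart.
			return true;
		}
		// The savepoint failed and the job keeps running: restore the previous
		// state instead of unconditionally enabling or disabling checkpoints.
		periodicSchedulerRunning = wasRunning;
		return false;
	}
}
```

In this model, both directions of the side effect (accidentally disabling and accidentally enabling) are covered by comparing against `wasRunning`, which is what the two test variants exercise.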

[GitHub] flink pull request #4254: [FLINK-7067] [jobmanager] Fix side effects after f...

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/4254#discussion_r145923787

--- Diff:

flink-runtime/src/test/java/org/apache/flink/runtime/jobmanager/JobManagerTest.java

---

@@ -940,6 +955,177 @@ public void testCancelWithSavepoint() throws Exception {
}

/**
+ * Tests that a failed cancel-job-with-savepoint request does not accidentally disable
+ * periodic checkpoints.
+ */
+ @Test
+ public void testCancelJobWithSavepointFailurePeriodicCheckpoints() throws Exception {
+ testCancelJobWithSavepointFailure(true);
+ }
+
+ /**
+ * Tests that a failed cancel-job-with-savepoint request does not accidentally enable
+ * periodic checkpoints.
+ */
+ @Test
+ public void testCancelJobWithSavepointFailureNoPeriodicCheckpoints() throws Exception {
+ testCancelJobWithSavepointFailure(false);
+ }
+
+ /**
+ * Tests that a failed savepoint does not cancel the job and that there are no
+ * unintended side effects.
+ *
+ * @param enablePeriodicCheckpoints Flag to indicate whether to enable periodic checkpoints. We
+ * need to test both here in order to verify that we don't accidentally disable or enable
+ * checkpoints after a failed cancel-job-with-savepoint request.
+ */
+ private void testCancelJobWithSavepointFailure(
+ boolean enablePeriodicCheckpoints) throws Exception {
+
+ long checkpointInterval = enablePeriodicCheckpoints ? 360 : Long.MAX_VALUE;
+
+ // Savepoint target
+ File savepointTarget = tmpFolder.newFolder();
+ savepointTarget.deleteOnExit();
+
+ // Timeout for Akka messages
+ FiniteDuration askTimeout = new FiniteDuration(30, TimeUnit.SECONDS);
+
+ // A source that declines savepoints, simulating the behaviour
+ // of a failed savepoint.
+ JobVertex sourceVertex = new JobVertex("Source");
+ sourceVertex.setInvokableClass(FailOnSavepointStatefulTask.class);
+ sourceVertex.setParallelism(1);
+ JobGraph jobGraph = new JobGraph("TestingJob", sourceVertex);
+
+ final ActorSystem actorSystem = AkkaUtils.createLocalActorSystem(new Configuration());
+
+ try {
+ Tuple2<ActorRef, ActorRef> master = JobManager.startJobManagerActors(
+ new Configuration(),
+ actorSystem,
+ TestingUtils.defaultExecutor(),
+ TestingUtils.defaultExecutor(),
+ highAvailabilityServices,
+ Option.apply("jm"),
+ Option.apply("arch"),
+ TestingJobManager.class,
+ TestingMemoryArchivist.class);
+
+ UUID leaderId = LeaderRetrievalUtils.retrieveLeaderSessionId(
+ highAvailabilityServices.getJobManagerLeaderRetriever(HighAvailabilityServices.DEFAULT_JOB_ID),
+ TestingUtils.TESTING_TIMEOUT());
+
+ ActorGateway jobManager = new AkkaActorGateway(master._1(), leaderId);
+
+ ActorRef taskManagerRef = TaskManager.startTaskManagerComponentsAndActor(
+ new Configuration(),
+ ResourceID.generate(),
+ actorSystem,
+ highAvailabilityServices,
+ "localhost",
+ Option.apply("tm"),
+ true,
+ TestingTaskManager.class);
+
+ ActorGateway taskManager = new AkkaActorGateway(taskManagerRef, leaderId);

--- End diff --

Definitely +1

---

[GitHub] flink issue #4693: [FLINK-7645][docs] Modify system-metrics part show in the...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4693 Hey @yew1eb, I like this 👍. I think it really makes sense to make this browsable from the table of contents. @zentol What do you think? I would be in favour of merging this if you don't have any objections. ---

[GitHub] flink pull request #4645: [FLINK-7582] [REST] Netty thread immediately parse...

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/4645#discussion_r137269070

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/RestClient.java ---

@@ -159,24 +155,17 @@ public void shutdown(Time timeout) {
}

private CompletableFuture submitRequest(String targetAddress, int targetPort, FullHttpRequest httpRequest, Class responseClass) {
- return CompletableFuture.supplyAsync(() -> bootstrap.connect(targetAddress, targetPort), executor)
- .thenApply((channel) -> {
- try {
- return channel.sync();
- } catch (InterruptedException e) {
- throw new FlinkRuntimeException(e);
- }
- })
- .thenApply((ChannelFuture::channel))
- .thenCompose(channel -> {
- ClientHandler handler = channel.pipeline().get(ClientHandler.class);
- CompletableFuture future = handler.getJsonFuture();
- channel.writeAndFlush(httpRequest);
- return future;
- }).thenComposeAsync(
- (JsonResponse rawResponse) -> parseResponse(rawResponse, responseClass),
- executor
- );
+ ChannelFuture connect = bootstrap.connect(targetAddress, targetPort);
+ Channel channel;

--- End diff --

I understand, but it can be confusing that a method which returns a future actually has a (potentially long) blocking operation.

---

[GitHub] flink issue #4647: [FLINK-7575] [WEB-DASHBOARD] Display "Fetching..." instea...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4647 Thanks @zentol for the review. @jameslafa - Regarding the first point with checkstyle: It's confusing that our checkstyle settings don't catch this, but Chesnay is right here. Seems nitpicky but we try to avoid unnecessary formatting changes. - Regarding the changes to the *.js files: Since you didn't change any of the coffee scripts, there should be no need to commit those files and I would also suggest to remove those changes. The changes are probably due to different versions on your machine and the previous contributor who changed the files. I think this only reinforces the argument we had about committing the *.lock file too. Could you create a new JIRA for this? @zentol - I didn't understand your follow up comments regarding the `metricsFetched` flag. Could you please elaborate on what you mean? Is the flag ok to keep after #4472 is merged? ---

[GitHub] flink pull request #4645: [FLINK-7582] [REST] Netty thread immediately parse...

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/4645#discussion_r137038459

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/rest/RestClient.java ---

@@ -159,24 +155,17 @@ public void shutdown(Time timeout) {
}

private CompletableFuture submitRequest(String targetAddress, int targetPort, FullHttpRequest httpRequest, Class responseClass) {
- return CompletableFuture.supplyAsync(() -> bootstrap.connect(targetAddress, targetPort), executor)
- .thenApply((channel) -> {
- try {
- return channel.sync();
- } catch (InterruptedException e) {
- throw new FlinkRuntimeException(e);
- }
- })
- .thenApply((ChannelFuture::channel))
- .thenCompose(channel -> {
- ClientHandler handler = channel.pipeline().get(ClientHandler.class);
- CompletableFuture future = handler.getJsonFuture();
- channel.writeAndFlush(httpRequest);
- return future;
- }).thenComposeAsync(
- (JsonResponse rawResponse) -> parseResponse(rawResponse, responseClass),
- executor
- );
+ ChannelFuture connect = bootstrap.connect(targetAddress, targetPort);
+ Channel channel;

--- End diff --

Couldn't you keep the async connect and only change the last `thenComposeAsync` to `thenCompose`?
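A minimal sketch of the suggestion, using only `java.util.concurrent.CompletableFuture`: the connect stays asynchronous, nothing in the method blocks, and the last stage uses `thenCompose` so that parsing runs on whichever thread completes the response future (in the REST client that would be a Netty I/O thread). `AsyncRequestSketch`, `sendRequest`, and `parseResponse` are hypothetical stand-ins; bridging Netty's `ChannelFuture` into the `CompletableFuture` passed in as `connect` would be done with a `ChannelFutureListener` and is not shown.

```java
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;

// Hypothetical sketch: chain connect -> send -> parse without blocking the
// calling thread. The functions stand in for the RestClient's Netty calls.
final class AsyncRequestSketch {

	static <C, R, P> CompletableFuture<P> submit(
			CompletableFuture<C> connect,
			Function<C, CompletableFuture<R>> sendRequest,
			Function<R, CompletableFuture<P>> parseResponse) {
		// Each stage runs when its predecessor completes; nothing here calls sync().
		return connect
			.thenCompose(sendRequest)
			// thenCompose (not thenComposeAsync): parse on the completing thread.
			.thenCompose(parseResponse);
	}
}
```

Usage: a caller gets a future back immediately and the work happens once the connection future is completed, so the method no longer hides a blocking `sync()` behind a future-returning signature.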

---

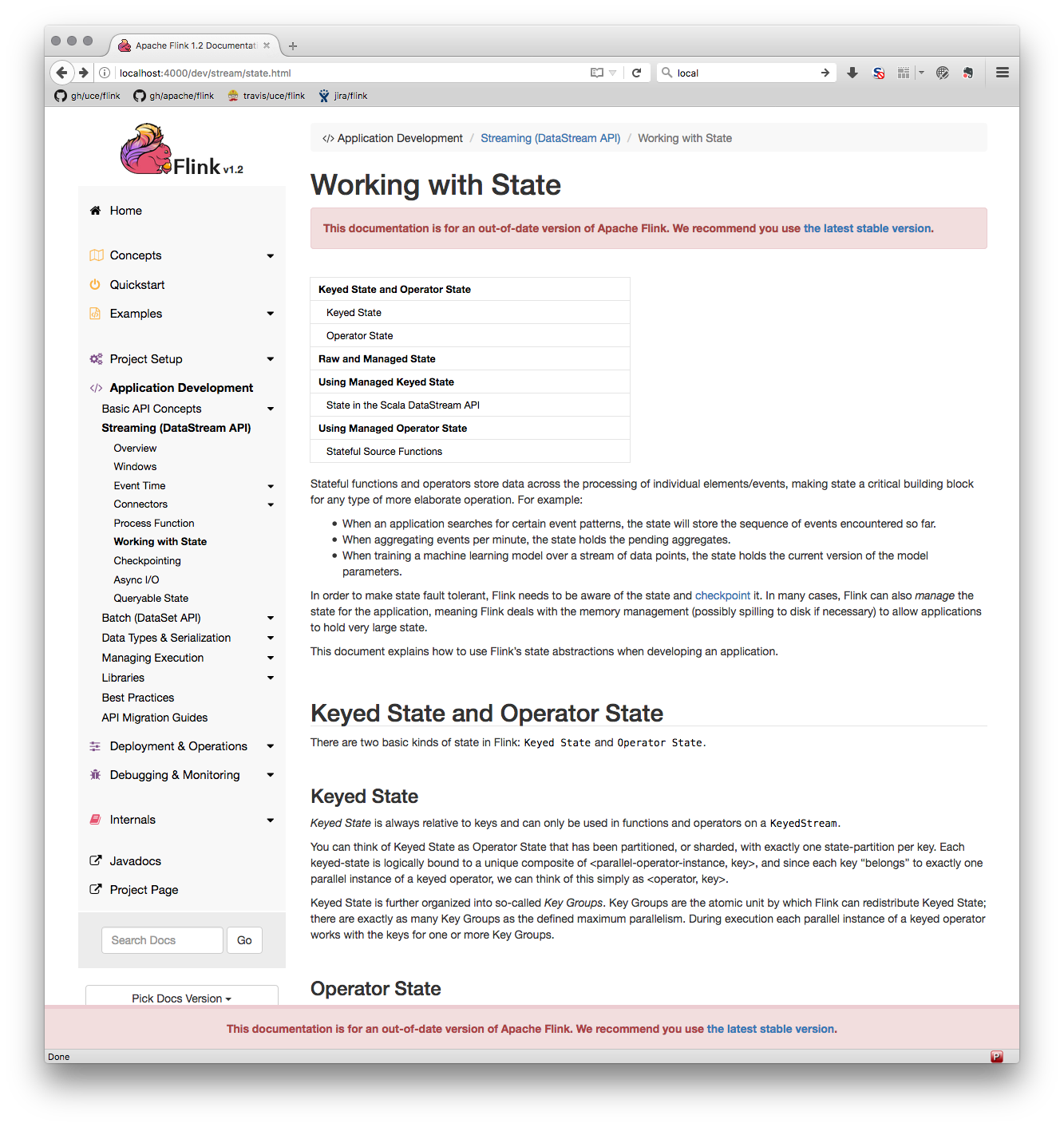

[GitHub] flink issue #4553: [FLINK-7642] [docs] Add very obvious warning about outdat...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4553 @twalthr Thanks for doing the work of merging this to the other branches as well. I've triggered a build for all branches. While 1.2 worked and the warning is now available online (https://ci.apache.org/projects/flink/flink-docs-release-1.2/), older branches don't build properly: https://ci.apache.org/builders/flink-docs-release-1.0/builds/545 https://ci.apache.org/builders/flink-docs-release-1.1/builds/391 Do you have a clue what we can do there? Also pinging @rmetzger who has some experience with the Apache infrastructure... --- If your project is set up for it, you can reply to this email and have your reply appear on GitHub as well. If your project does not have this feature enabled and wishes so, or if the feature is enabled but not working, please contact infrastructure at infrastruct...@apache.org or file a JIRA ticket with INFRA. ---

[GitHub] flink pull request #4553: [FLINK-7642] [docs] Add very obvious warning about...

GitHub user uce opened a pull request: https://github.com/apache/flink/pull/4553 [FLINK-7642] [docs] Add very obvious warning about outdated docs ## What is the purpose of the change This pull request makes the warning about outdated docs more obvious. Please compare the screenshot to our current state: https://ci.apache.org/projects/flink/flink-docs-release-1.1/ If you like this, I would back/forward port this to all versions >= 1.0 and update the releasing Wiki page to add a note about updating the configuration of the docs. ## Brief change log - Change the color of the outdated warning footer to red - Rename the config key from `is_latest` to `show_outdated_warning` - Add an outdated warning to every page before the actual content ## Verifying this change - We don't have any tests for the docs - You can manually check out my branch and build the docs You can merge this pull request into a Git repository by running: $ git pull https://github.com/uce/flink 7462-outdated_docs_warning Alternatively you can review and apply these changes as the patch at: https://github.com/apache/flink/pull/4553.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #4553 commit e140634687a56fb5fdd0e32a1dce3d5ac41fd123 Author: Ufuk Celebi <u...@apache.org> Date: 2017-08-16T16:21:49Z [FLINK-7462] [docs] Make outdated docs footer background light red commit 15fe71e0402d2e2d931485497338973e12cce9db Author: Ufuk Celebi <u...@apache.org> Date: 2017-08-16T16:34:51Z [FLINK-7462] [docs] Rename is_latest flag to show_outdated_warning commit 9bdeeae0cb88b59f0dfaee547fbf9b54d125645e Author: Ufuk Celebi <u...@apache.org> Date: 2017-08-16T16:35:03Z [FLINK-7462] [docs] Add outdated warning to content ---

[GitHub] flink issue #4360: [FLINK-7220] [checkpoints] Update RocksDB dependency to 5...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4360 Thanks for the pointer Greg. I didn't look at the JIRA issue. I like the idea of automating this but I would go with a pre-push hook instead of a pre-commit hook. It could be annoying during local dev if we force every commit to have the format already. I'm not sure whether INFRA allows this or not though. @StefanRRichter Sure, can happen. ---

[GitHub] flink issue #4360: [FLINK-7220] [checkpoints] Update RocksDB dependency to 5...

Github user uce commented on the issue: https://github.com/apache/flink/pull/4360 @StefanRRichter As mentioned by Greg, we should either squash follow up commits like `Stephan's comments` into their parent or tag them similar to the main commit with a more descriptive message. ---
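The check a pre-push hook would run could look roughly like the sketch below. It assumes the subject convention visible in the commits in this thread (`[FLINK-1234] [component] Description` or `[hotfix] ...`, with space-separated tags); `CommitSubjectCheck` and the exact pattern are hypothetical, and the hook script that feeds subjects into it is not shown.

```java
import java.util.regex.Pattern;

// Hypothetical commit subject check: the subject must start with
// "[FLINK-<number>]" or "[hotfix]", optionally followed by "[component]" tags,
// and then a description.
final class CommitSubjectCheck {

	private static final Pattern SUBJECT =
		Pattern.compile("^\\[(FLINK-\\d+|hotfix)\\]( \\[[\\w-]+\\])* \\S.*");

	static boolean isValid(String subject) {
		return SUBJECT.matcher(subject).matches();
	}
}
```

A subject like `Stephan's comments` would be rejected, which is exactly the kind of follow-up commit that should be squashed or retagged before pushing.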

[GitHub] flink issue #4391: [FLINK-7258] [network] Fix watermark configuration order

Github user uce commented on the issue: https://github.com/apache/flink/pull/4391 Thanks for the review @zhijiangW and @zentol. Merging this for `1.3` and `master`. ---

[GitHub] flink pull request #4391: [FLINK-7258] [network] Fix watermark configuration...

GitHub user uce opened a pull request: https://github.com/apache/flink/pull/4391

[FLINK-7258] [network] Fix watermark configuration order

## Purpose

This PR changes the order in which the low and high watermarks are configured for Netty server child connections (high first). That way we avoid running into an `IllegalArgumentException` when the new low watermark is larger than the current high watermark (relevant if the configured memory segment size is larger than the default). Until now this situation surfaced only as a logged warning, and the low watermark configuration was ignored.

## Changelog

- Configure high watermark before low watermark in `NettyServer`
- Configure high watermark before low watermark in `KvStateServer`

## Verifying this change

- The change is trivial; the extended `NettyServerLowAndHighWatermarkTest` now checks the expected watermarks.
- I didn't add a test for `KvStateServer`, because the watermarks can't be configured there manually.
- To verify, you can run `NettyServerLowAndHighWatermarkTest` with logging enabled before and after this change and check that the warning is no longer logged.

## Does this pull request potentially affect one of the following parts?

- Dependencies (does it add or upgrade a dependency): **no**
- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: **no**
- The serializers: **no**
- The runtime per-record code paths (performance sensitive): **no**
- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: **no**

## Documentation

- Does this pull request introduce a new feature? **no**
- If yes, how is the feature documented? **not applicable**

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/uce/flink 7258-watermark_config

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/flink/pull/4391.patch

To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message:

This closes #4391

commit 73998ba1328d4bf61ee979ed327b0a684ed03aa7
Author: Ufuk Celebi <u...@apache.org>
Date: 2017-07-24T16:47:23Z

[FLINK-7258] [network] Fix watermark configuration order

When configuring larger memory segment sizes, configuring the low watermark before the high watermark may lead to an IllegalArgumentException, because the low watermark will temporarily be higher than the high watermark. It's necessary to configure the high watermark before the low watermark.

For the queryable state server in KvStateServer I didn't add an extra test, as the watermarks cannot be configured there.

---
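To make the ordering problem concrete, here is a minimal, self-contained sketch that does not use Netty. `ChannelConfigModel` is a hypothetical stand-in that validates each watermark update on its own, similar to how Netty 4's channel config rejects a low watermark above the current high watermark (and vice versa). The starting values (32 KiB low, 64 KiB high), the 64 KiB segment size, and the derivation low = segment size + 1, high = 2 * segment size are assumptions chosen for illustration, not values taken from this PR.

```java
/**
 * Hypothetical, simplified model of a channel config that validates watermark
 * updates one at a time. Not Netty code; only the validation rule is mimicked.
 */
public class WatermarkOrderDemo {

    static final class ChannelConfigModel {
        // Assumed starting values, mirroring Netty 4 defaults: low = 32 KiB, high = 64 KiB.
        private int low = 32 * 1024;
        private int high = 64 * 1024;

        void setHighWaterMark(int newHigh) {
            // A new high watermark must not drop below the *current* low watermark.
            if (newHigh < low) {
                throw new IllegalArgumentException("high (" + newHigh + ") < low (" + low + ")");
            }
            high = newHigh;
        }

        void setLowWaterMark(int newLow) {
            // A new low watermark must not exceed the *current* high watermark.
            if (newLow > high) {
                throw new IllegalArgumentException("low (" + newLow + ") > high (" + high + ")");
            }
            low = newLow;
        }

        int low() { return low; }
        int high() { return high; }
    }

    /** Applies the watermarks low-first (the order before the fix). */
    static ChannelConfigModel configureLowFirst(int low, int high) {
        ChannelConfigModel config = new ChannelConfigModel();
        config.setLowWaterMark(low);
        config.setHighWaterMark(high);
        return config;
    }

    /** Applies the watermarks high-first (the order the PR switches to). */
    static ChannelConfigModel configureHighFirst(int low, int high) {
        ChannelConfigModel config = new ChannelConfigModel();
        config.setHighWaterMark(high);
        config.setLowWaterMark(low);
        return config;
    }

    public static void main(String[] args) {
        int segmentSize = 64 * 1024; // hypothetical segment size, larger than the assumed default
        int low = segmentSize + 1;   // hypothetical derivation
        int high = 2 * segmentSize;  // hypothetical derivation

        ChannelConfigModel ok = configureHighFirst(low, high);
        System.out.println("high-first: low=" + ok.low() + ", high=" + ok.high());

        try {
            configureLowFirst(low, high);
        } catch (IllegalArgumentException e) {
            // The new low (65537) exceeds the current high (65536), so the update is rejected.
            System.out.println("low-first rejected: " + e.getMessage());
        }
    }
}
```

Note that in this model high-first is only safe because both watermarks grow. If a configuration ever shrank them below the current low watermark, the low watermark would have to be set first, so a fully general setter would pick the order based on the direction of the change.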

[GitHub] flink pull request #4112: [FLINK-6901] Flip checkstyle configuration files

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/4112#discussion_r126464199

--- Diff:

flink-runtime/src/main/java/org/apache/flink/runtime/io/network/netty/NettyBufferPool.java

---

@@ -61,7 +60,9 @@ public NettyBufferPool(int numberOfArenas) {
 		checkArgument(numberOfArenas >= 1, "Number of arenas");
 		this.numberOfArenas = numberOfArenas;
-		if (!PlatformDependent.hasUnsafe()) {
+		try {
+			Class.forName("sun.misc.Unsafe");
+		} catch (ClassNotFoundException e) {

--- End diff --

It's ok with me to remove this check.
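The diff swaps Netty's `PlatformDependent.hasUnsafe()` for a plain `Class.forName("sun.misc.Unsafe")` lookup. The sketch below contrasts that class-presence check with a stronger check that actually obtains the singleton through the private `theUnsafe` field. `UnsafeProbe` and both method names are hypothetical, and the stronger check is only a rough approximation; Netty's own `hasUnsafe()` is, as far as we know, more thorough and is not reproduced here.

```java
import java.lang.reflect.Field;

public class UnsafeProbe {

    /** Weaker check, as in the diff: is the class visible on the classpath at all? */
    static boolean unsafeClassPresent() {
        try {
            Class.forName("sun.misc.Unsafe");
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    /**
     * Stronger check (an approximation, not Netty's code): can the singleton
     * instance actually be obtained through the private "theUnsafe" field?
     */
    static boolean unsafeInstanceAccessible() {
        try {
            Class<?> unsafeClass = Class.forName("sun.misc.Unsafe");
            Field field = unsafeClass.getDeclaredField("theUnsafe");
            field.setAccessible(true);
            return field.get(null) != null;
        } catch (ReflectiveOperationException | RuntimeException e) {
            // ClassNotFoundException, NoSuchFieldException, IllegalAccessException,
            // SecurityException or InaccessibleObjectException all mean "not usable".
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("class present:   " + unsafeClassPresent());
        System.out.println("instance usable: " + unsafeInstanceAccessible());
    }
}
```

On a standard OpenJDK both checks typically return `true`; they can diverge on restricted runtimes where the class exists but reflective access is denied, which is the case the simpler check does not detect.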

---


[GitHub] flink pull request #4254: [FLINK-7067] [jobmanager] Fix side effects after f...

Github user uce commented on a diff in the pull request:

https://github.com/apache/flink/pull/4254#discussion_r125606557

--- Diff:

flink-runtime/src/test/java/org/apache/flink/runtime/jobmanager/JobManagerTest.java

---

@@ -940,6 +955,177 @@ public void testCancelWithSavepoint() throws Exception {
 	}
 
 	/**
+	 * Tests that a failed cancel-job-with-savepoint request does not accidentally disable
+	 * periodic checkpoints.
+	 */
+	@Test
+	public void testCancelJobWithSavepointFailurePeriodicCheckpoints() throws Exception {
+		testCancelJobWithSavepointFailure(true);
+	}
+
+	/**
+	 * Tests that a failed cancel-job-with-savepoint request does not accidentally enable
+	 * periodic checkpoints.
+	 */
+	@Test
+	public void testCancelJobWithSavepointFailureNoPeriodicCheckpoints() throws Exception {
+		testCancelJobWithSavepointFailure(false);
+	}
+
+	/**
+	 * Tests that a failed savepoint does not cancel the job and that there are no
+	 * unintended side effects.
+	 *
+	 * @param enablePeriodicCheckpoints Flag to indicate whether to enable periodic checkpoints. We
+	 * need to test both here in order to verify that we don't accidentally disable or enable
+	 * checkpoints after a failed cancel-job-with-savepoint request.
+	 */
+	private void testCancelJobWithSavepointFailure(
+		boolean enablePeriodicCheckpoints) throws Exception {
+
+		long checkpointInterval = enablePeriodicCheckpoints ? 360 : Long.MAX_VALUE;
+
+		// Savepoint target
+		File savepointTarget = tmpFolder.newFolder();
+		savepointTarget.deleteOnExit();
+
+		// Timeout for Akka messages
+		FiniteDuration askTimeout = new FiniteDuration(30, TimeUnit.SECONDS);
+
+		// A source that declines savepoints, simulating the behaviour
+		// of a failed savepoint.
+		JobVertex sourceVertex = new JobVertex("Source");
+		sourceVertex.setInvokableClass(FailOnSavepointStatefulTask.class);
+		sourceVertex.setParallelism(1);
+		JobGraph jobGraph = new JobGraph("TestingJob", sourceVertex);
+
+		final ActorSystem actorSystem = AkkaUtils.createLocalActorSystem(new Configuration());
+
+		try {
+			Tuple2<ActorRef, ActorRef> master = JobManager.startJobManagerActors(
+				new Configuration(),
+				actorSystem,
+				TestingUtils.defaultExecutor(),
+				TestingUtils.defaultExecutor(),
+				highAvailabilityServices,
+				Option.apply("jm"),
+				Option.apply("arch"),
+				TestingJobManager.class,
+				TestingMemoryArchivist.class);
+
+			UUID leaderId = LeaderRetrievalUtils.retrieveLeaderSessionId(
+				highAvailabilityServices.getJobManagerLeaderRetriever(HighAvailabilityServices.DEFAULT_JOB_ID),
+				TestingUtils.TESTING_TIMEOUT());
+
+			ActorGateway jobManager = new AkkaActorGateway(master._1(), leaderId);
+
+			ActorRef taskManagerRef = TaskManager.startTaskManagerComponentsAndActor(
+				new Configuration(),
+				ResourceID.generate(),
+				actorSystem,
+				highAvailabilityServices,
+				"localhost",
+				Option.apply("tm"),
+				true,
+				TestingTaskManager.class);
+
+			ActorGateway taskManager = new AkkaActorGateway(taskManagerRef, leaderId);
+
+			// Wait until connected
+			Object msg = new TestingTaskManagerMessages.NotifyWhenRegisteredAtJobManager(jobManager.actor());
+			Await.ready(taskManager.ask(msg, askTimeout), askTimeout);
+
+			JobCheckpointingSettings snapshottingSettings = new JobCheckpointingSettings(
+				Collections.singletonList(sourceVertex.getID()),
+				Collections.singletonList(sourceVertex.getID()),
+				Collections.singletonList(sourceVertex.getID()),
+				checkpointInterval,
+				360,
+				0,
+				Integer.MAX_VALUE,
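The helper in this test runs the same failure scenario twice, with `checkpointInterval` either 360 or `Long.MAX_VALUE` (the test's way of saying "no periodic checkpoints"), to catch side effects in both directions. The invariant it pins down can be sketched as a tiny model. Everything below is hypothetical: `CancelWithSavepointModel`, `CheckpointSchedulerModel`, and the assumption that the job manager stops the periodic scheduler before triggering the savepoint are illustrations, not Flink's actual classes or control flow.

```java
/**
 * Hypothetical model of the behaviour the FLINK-7067 tests check: a failed
 * cancel-job-with-savepoint must leave periodic checkpointing exactly as configured.
 */
public class CancelWithSavepointModel {

    // Mirrors the test's convention: an interval of Long.MAX_VALUE means "periodic checkpoints off".
    static final long DISABLED = Long.MAX_VALUE;

    static final class CheckpointSchedulerModel {
        private final long interval;
        private boolean running;

        CheckpointSchedulerModel(long interval) {
            this.interval = interval;
            this.running = isPeriodicCheckpointingConfigured();
        }

        boolean isPeriodicCheckpointingConfigured() {
            return interval != DISABLED;
        }

        boolean isRunning() { return running; }

        void stop() { running = false; }

        void start() { running = true; }
    }

    /**
     * Assumed flow: stop periodic checkpoints, trigger the savepoint, and on failure
     * restart the scheduler only if periodic checkpointing was configured.
     *
     * @return true if the job would be cancelled
     */
    static boolean cancelWithSavepoint(CheckpointSchedulerModel scheduler, boolean savepointSucceeds) {
        scheduler.stop();
        if (savepointSucceeds) {
            return true; // savepoint done, job gets cancelled
        }
        // Failure path: undo our own side effect, but never enable checkpoints that were off.
        if (scheduler.isPeriodicCheckpointingConfigured()) {
            scheduler.start();
        }
        return false; // job keeps running
    }

    public static void main(String[] args) {
        for (boolean enablePeriodic : new boolean[] {true, false}) {
            long interval = enablePeriodic ? 360 : DISABLED;
            CheckpointSchedulerModel scheduler = new CheckpointSchedulerModel(interval);
            boolean cancelled = cancelWithSavepoint(scheduler, false);
            System.out.println("periodic=" + enablePeriodic
                + " cancelled=" + cancelled
                + " schedulerRunning=" + scheduler.isRunning());
        }
    }
}
```

Unconditionally restarting the scheduler on failure would break the "no periodic checkpoints" case, and never restarting it would break the periodic case; testing only one of the two would let either bug through.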

[GitHub] flink pull request #4254: [FLINK-7067] [jobmanager] Fix side effects after f...