[GitHub] [kafka] showuon commented on pull request #10987: MINOR: improve the partitioner.class doc

showuon commented on pull request #10987: URL: https://github.com/apache/kafka/pull/10987#issuecomment-876198201 @jolshan , I've updated. Also, thanks for pointing to the JIRA issue, I'll take a look when available. Please let me know if you have other suggestion. Thank you.  -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: jira-unsubscr...@kafka.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] showuon commented on pull request #10794: KAFKA-12677: return not_controller error in envelope response itself

showuon commented on pull request #10794:
URL: https://github.com/apache/kafka/pull/10794#issuecomment-876252977

Failed tests are unrelated. Thanks.
```
Build / ARM / kafka.raft.KafkaMetadataLogTest.testDeleteSnapshots()
Build / ARM / kafka.raft.KafkaMetadataLogTest.testDeleteSnapshots()
Build / JDK 8 and Scala 2.12 / org.apache.kafka.connect.integration.BlockingConnectorTest.testBlockInConnectorInitialize
Build / JDK 8 and Scala 2.12 / org.apache.kafka.connect.integration.ExampleConnectIntegrationTest.testSinkConnector
Build / JDK 8 and Scala 2.12 / kafka.raft.KafkaMetadataLogTest.testDeleteSnapshots()
Build / JDK 8 and Scala 2.12 / kafka.raft.KafkaMetadataLogTest.testDeleteSnapshots()
Build / JDK 11 and Scala 2.13 / kafka.api.ConsumerBounceTest.testCloseDuringRebalance()
Build / JDK 11 and Scala 2.13 / org.apache.kafka.streams.integration.EOSUncleanShutdownIntegrationTest.shouldWorkWithUncleanShutdownWipeOutStateStore[exactly_once_v2]
Build / JDK 11 and Scala 2.13 / org.apache.kafka.streams.integration.EosV2UpgradeIntegrationTest.shouldUpgradeFromEosAlphaToEosV2[true]
Build / JDK 16 and Scala 2.13 / org.apache.kafka.connect.integration.ConnectWorkerIntegrationTest.testBrokerCoordinator
Build / JDK 16 and Scala 2.13 / org.apache.kafka.connect.integration.ConnectorClientPolicyIntegrationTest.testCreateWithAllowedOverridesForPrincipalPolicy
Build / JDK 16 and Scala 2.13 / kafka.api.ConsumerBounceTest.testCloseDuringRebalance()
Build / JDK 16 and Scala 2.13 / kafka.api.ConsumerBounceTest.testCloseDuringRebalance()
Build / JDK 16 and Scala 2.13 / kafka.api.TransactionsTest.testAbortTransactionTimeout()
Build / JDK 16 and Scala 2.13 / kafka.api.TransactionsTest.testAbortTransactionTimeout()
```

[GitHub] [kafka] dongjinleekr commented on pull request #10678: TRIVIAL: Fix type inconsistencies, unthrown exceptions, etc

dongjinleekr commented on pull request #10678: URL: https://github.com/apache/kafka/pull/10678#issuecomment-876256809 Rebased onto the latest trunk. cc/ @ijuma

[GitHub] [kafka] dongjinleekr removed a comment on pull request #10678: TRIVIAL: Fix type inconsistencies, unthrown exceptions, etc

dongjinleekr removed a comment on pull request #10678: URL: https://github.com/apache/kafka/pull/10678#issuecomment-864781801 Rebased onto the latest trunk. cc/ @ijuma @cadonna

[GitHub] [kafka] dongjinleekr commented on pull request #10472: KAFKA-12613: Inconsistencies between Kafka Config and Log Config

dongjinleekr commented on pull request #10472: URL: https://github.com/apache/kafka/pull/10472#issuecomment-876258437 @kowshik @rajinisivaram Could you kindly have a look? :bowing_man:

[GitHub] [kafka] dongjinleekr commented on pull request #10826: KAFKA-7632: Support Compression Level

dongjinleekr commented on pull request #10826: URL: https://github.com/apache/kafka/pull/10826#issuecomment-876259060 @kkonstantine Here it is - I rebased it onto the latest trunk. Could anyone review this PR? :pray:

[GitHub] [kafka] dongjinleekr commented on pull request #7898: KAFKA-9366: Change log4j dependency into log4j2

dongjinleekr commented on pull request #7898: URL: https://github.com/apache/kafka/pull/7898#issuecomment-876265899 Rebased onto the latest trunk. Could anyone review this PR? :bow: cc/ @kkonstantine

[GitHub] [kafka] kamalcph commented on a change in pull request #10602: KAFKA-12724: Add 2.8.0 to system tests and streams upgrade tests.

kamalcph commented on a change in pull request #10602:
URL: https://github.com/apache/kafka/pull/10602#discussion_r666016083

## File path: tests/kafkatest/tests/streams/streams_upgrade_test.py ##
@@ -25,12 +25,12 @@ from kafkatest.services.zookeeper import ZookeeperService
 from kafkatest.tests.streams.utils import extract_generation_from_logs, extract_generation_id
 from kafkatest.version import LATEST_0_10_0, LATEST_0_10_1, LATEST_0_10_2, LATEST_0_11_0, LATEST_1_0, LATEST_1_1, \
-LATEST_2_0, LATEST_2_1, LATEST_2_2, LATEST_2_3, LATEST_2_4, LATEST_2_5, LATEST_2_6, LATEST_2_7, DEV_BRANCH, DEV_VERSION, KafkaVersion
+LATEST_2_0, LATEST_2_1, LATEST_2_2, LATEST_2_3, LATEST_2_4, LATEST_2_5, LATEST_2_6, LATEST_2_7, LATEST_2_8, DEV_BRANCH, DEV_VERSION, KafkaVersion

 # broker 0.10.0 is not compatible with newer Kafka Streams versions
 broker_upgrade_versions = [str(LATEST_0_10_1), str(LATEST_0_10_2), str(LATEST_0_11_0), str(LATEST_1_0), str(LATEST_1_1), \
     str(LATEST_2_0), str(LATEST_2_1), str(LATEST_2_2), str(LATEST_2_3), \
-    str(LATEST_2_4), str(LATEST_2_5), str(LATEST_2_6), str(LATEST_2_7), str(DEV_BRANCH)]
+    str(LATEST_2_4), str(LATEST_2_5), str(LATEST_2_6), str(LATEST_2_7), str(LATEST_2_8), str(DEV_BRANCH)]

Review comment:
Added v2.0 and above to the metadata_upgrade test. As per [KIP-268](https://cwiki.apache.org/confluence/display/KAFKA/KIP-268%3A+Simplify+Kafka+Streams+Rebalance+Metadata+Upgrade), one rolling restart is enough for apps upgrading from 2.x to the latest version. I kept the test behavior unchanged, we can fix this later if required in a follow-up PR.

Yeah, the upgrade_downgrade_brokers test was already disabled, so adding versions to this test won't help. Sorry for late reply.

[GitHub] [kafka] kamalcph commented on pull request #10602: KAFKA-12724: Add 2.8.0 to system tests and streams upgrade tests.

kamalcph commented on pull request #10602:
URL: https://github.com/apache/kafka/pull/10602#issuecomment-876277111

@vvcephei Resolved the conflicts. Ran the below tests:
```
./gradlew :streams:testAll
TC_PATHS="tests/kafkatest/tests/streams/streams_upgrade_test.py" bash tests/docker/run_tests.sh
```
Please take a look when you get a chance.

[jira] [Created] (KAFKA-13047) KafkaConsumer is unable to consume messages from a topic created by confluent-kafka-python client

Prashanto created KAFKA-13047:

Summary: KafkaConsumer is unable to consume messages from a topic created by confluent-kafka-python client
Key: KAFKA-13047
URL: https://issues.apache.org/jira/browse/KAFKA-13047
Project: Kafka
Issue Type: Bug
Components: clients
Affects Versions: 2.8.0
Reporter: Prashanto
Attachments: ApacheKafkaConsumer.txt

I have a Python microservice that creates a Kafka topic using confluent-kafka-python client. I am writing a Java Kafka Streams client that is unable to retrieve any messages from this topic. To debug this issue, I wrote a simple KafkaConsumer client that is also unable to consume messages from the topic. I have attached the debug logs from the run. Any pointers will be sincerely appreciated.

If I create a topic using kafka-topics.sh script, the consumer is able to successfully consume messages.

Kafka broker version: 2.2.2

--
This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] kamalcph edited a comment on pull request #10602: KAFKA-12724: Add 2.8.0 to system tests and streams upgrade tests.

kamalcph edited a comment on pull request #10602:
URL: https://github.com/apache/kafka/pull/10602#issuecomment-876277111

@vvcephei Resolved the conflicts. Ran the below tests:
```
./gradlew :streams:testAll
TC_PATHS="tests/kafkatest/tests/streams/streams_upgrade_test.py" bash tests/docker/run_tests.sh
```
The metadata_upgrade test fails for versions above 2.4.1 with `Could not detect Kafka Streams version 3.1.0-SNAPSHOT`. Please take a look when you get a chance.

[jira] [Created] (KAFKA-13048) Update vulnerable dependencies in 2.8.0

Pavel Kuznetsov created KAFKA-13048:

Summary: Update vulnerable dependencies in 2.8.0
Key: KAFKA-13048
URL: https://issues.apache.org/jira/browse/KAFKA-13048
Project: Kafka
Issue Type: Bug
Components: core, KafkaConnect
Affects Versions: 2.8.0
Reporter: Pavel Kuznetsov

**Describe the bug**
I checked kafka_2.13-2.8.0.tgz distribution with WhiteSource and find out that some libraries have vulnerabilities.
Here they are:
- jetty-http-9.4.40.v20210413.jar has CVE-2021-28169 vulnerability. The way to fix it is to upgrade to org.eclipse.jetty:jetty-http:9.4.41.v20210516
- jetty-server-9.4.40.v20210413.jar has CVE-2021-28169 and CVE-2021-34428 vulnerabilities. The way to fix it is to upgrade to org.eclipse.jetty:jetty-server:9.4.41.v20210516
- jetty-servlets-9.4.40.v20210413.jar has CVE-2021-28169 vulnerability. The way to fix it is to upgrade to org.eclipse.jetty:jetty-servlets:9.4.41.v20210516

**To Reproduce**
Download kafka_2.13-2.8.0.tgz and find jars, listed above.
Check that these jars with corresponding versions are mentioned in corresponding vulnerability description.

**Expected behavior**
- jetty-http upgraded to 9.4.41.v20210516 or higher
- jetty-server upgraded to 9.4.41.v20210516 or higher
- jetty-servlets upgraded to 9.4.41.v20210516 or higher

**Actual behaviour**
- jetty-http is 9.4.40.v20210413
- jetty-server is 9.4.40.v20210413
- jetty-servlets is 9.4.40.v20210413
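The "9.4.41.v20210516 or higher" expectation in the report can be checked mechanically. The following is only a minimal sketch, assuming that comparing the numeric `major.minor.patch` prefix is enough and that the `.vYYYYMMDD` qualifier can be ignored; the class and method names are hypothetical and are not part of Kafka or Jetty.

```java
final class JettyVersionCheckSketch {
    // Minimum version quoted in the report.
    static final String MINIMUM = "9.4.41.v20210516";

    /** Parses the leading "major.minor.patch"; the ".vYYYYMMDD" qualifier is ignored (assumption). */
    static int[] numericPart(String version) {
        String[] parts = version.split("\\.");
        return new int[] {
            Integer.parseInt(parts[0]),
            Integer.parseInt(parts[1]),
            Integer.parseInt(parts[2])
        };
    }

    /** Returns true when 'actual' is the same as or newer than 'minimum', component by component. */
    static boolean atLeast(String actual, String minimum) {
        int[] a = numericPart(actual);
        int[] m = numericPart(minimum);
        for (int i = 0; i < 3; i++) {
            if (a[i] != m[i]) {
                return a[i] > m[i];
            }
        }
        return true; // all three components are equal
    }

    public static void main(String[] args) {
        // Version shipped in kafka_2.13-2.8.0.tgz according to the report.
        System.out.println(atLeast("9.4.40.v20210413", MINIMUM)); // false
        // Version the report asks for.
        System.out.println(atLeast("9.4.41.v20210516", MINIMUM)); // true
    }
}
```

A stricter check would also compare the date qualifier; it is left out here to keep the sketch short.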

[jira] [Created] (KAFKA-13049) Log recovery threads use default thread pool naming

Tom Bentley created KAFKA-13049:

Summary: Log recovery threads use default thread pool naming
Key: KAFKA-13049
URL: https://issues.apache.org/jira/browse/KAFKA-13049
Project: Kafka
Issue Type: Bug
Components: core
Reporter: Tom Bentley
Assignee: Tom Bentley

The threads used for log recovery use a pool {{Executors.newFixedThreadPool(int)}} and hence pick up the naming scheme from {{Executors.defaultThreadFactory()}}. It's not so clear in a thread dump taken during log recovery what those threads are. They should have clearer names.
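To illustrate the naming difference described in the issue, here is a minimal sketch using only `java.util.concurrent`. It is not the change made for KAFKA-13049: the `log-recovery-` prefix and the class and method names are hypothetical, picked for illustration. It contrasts `Executors.newFixedThreadPool(int)` with the overload that accepts a `ThreadFactory`.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class NamedPoolSketch {
    /** Returns a factory that names threads "<prefix><n>", with n starting at 0. */
    static ThreadFactory namedFactory(String prefix) {
        AtomicInteger counter = new AtomicInteger();
        return runnable -> new Thread(runnable, prefix + counter.getAndIncrement());
    }

    /** Runs one task on the pool, returns the worker thread's name, then shuts the pool down. */
    static String workerName(ExecutorService pool) {
        Callable<String> task = () -> Thread.currentThread().getName();
        try {
            return pool.submit(task).get(5, TimeUnit.SECONDS);
        } catch (Exception e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();
        }
    }

    /** Name given by Executors.defaultThreadFactory(), of the form "pool-N-thread-M". */
    static String defaultWorkerName() {
        return workerName(Executors.newFixedThreadPool(1));
    }

    /** Name given by the custom factory, e.g. "log-recovery-0" for the first thread. */
    static String namedWorkerName(String prefix) {
        return workerName(Executors.newFixedThreadPool(1, namedFactory(prefix)));
    }

    public static void main(String[] args) {
        System.out.println(defaultWorkerName());
        System.out.println(namedWorkerName("log-recovery-")); // hypothetical prefix
    }
}
```

In a thread dump, the second form makes the purpose of each thread visible from its name alone.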


[GitHub] [kafka] edoardocomar commented on a change in pull request #10649: KAFKA-12762: Use connection timeout when polling the network for new …

edoardocomar commented on a change in pull request #10649:
URL: https://github.com/apache/kafka/pull/10649#discussion_r666133904

## File path: clients/src/main/java/org/apache/kafka/clients/ClusterConnectionStates.java ##
@@ -273,6 +276,7 @@ public void authenticationFailed(String id, long now, AuthenticationException ex
     nodeState.state = ConnectionState.AUTHENTICATION_FAILED;
     nodeState.lastConnectAttemptMs = now;
     updateReconnectBackoff(nodeState);
+    connectingNodes.remove(id);

Review comment:
looks like the line is no longer needed to pass all the tests!

[GitHub] [kafka] edoardocomar commented on a change in pull request #10649: KAFKA-12762: Use connection timeout when polling the network for new …

edoardocomar commented on a change in pull request #10649:
URL: https://github.com/apache/kafka/pull/10649#discussion_r666134747

## File path: clients/src/main/java/org/apache/kafka/clients/ClusterConnectionStates.java ##
@@ -105,11 +105,13 @@ public boolean isBlackedOut(String id, long now) {
 public long connectionDelay(String id, long now) {
     NodeConnectionState state = nodeState.get(id);
     if (state == null) return 0;

Review comment:
right, updated to match the inline comment!


[jira] [Resolved] (KAFKA-13048) Update vulnerable dependencies in 2.8.0

[ https://issues.apache.org/jira/browse/KAFKA-13048?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Dongjin Lee resolved KAFKA-13048.

Resolution: Duplicate

> Update vulnerable dependencies in 2.8.0
>
> Key: KAFKA-13048
> URL: https://issues.apache.org/jira/browse/KAFKA-13048
> Project: Kafka
> Issue Type: Bug
> Components: core, KafkaConnect
> Affects Versions: 2.8.0
> Reporter: Pavel Kuznetsov
> Priority: Major
> Labels: security
>
> **Describe the bug**
> I checked kafka_2.13-2.8.0.tgz distribution with WhiteSource and find out that some libraries have vulnerabilities.
> Here they are:
> - jetty-http-9.4.40.v20210413.jar has CVE-2021-28169 vulnerability. The way to fix it is to upgrade to org.eclipse.jetty:jetty-http:9.4.41.v20210516
> - jetty-server-9.4.40.v20210413.jar has CVE-2021-28169 and CVE-2021-34428 vulnerabilities. The way to fix it is to upgrade to org.eclipse.jetty:jetty-server:9.4.41.v20210516
> - jetty-servlets-9.4.40.v20210413.jar has CVE-2021-28169 vulnerability. The way to fix it is to upgrade to org.eclipse.jetty:jetty-servlets:9.4.41.v20210516
> **To Reproduce**
> Download kafka_2.13-2.8.0.tgz and find jars, listed above.
> Check that these jars with corresponding versions are mentioned in corresponding vulnerability description.
> **Expected behavior**
> - jetty-http upgraded to 9.4.41.v20210516 or higher
> - jetty-server upgraded to 9.4.41.v20210516 or higher
> - jetty-servlets upgraded to 9.4.41.v20210516 or higher
> **Actual behaviour**
> - jetty-http is 9.4.40.v20210413
> - jetty-server is 9.4.40.v20210413
> - jetty-servlets is 9.4.40.v20210413

[GitHub] [kafka] edoardocomar commented on pull request #10649: KAFKA-12762: Use connection timeout when polling the network for new …

edoardocomar commented on pull request #10649: URL: https://github.com/apache/kafka/pull/10649#issuecomment-876392990 Thanks @rajinisivaram made minor changes and rebased

[GitHub] [kafka] showuon commented on pull request #10338: KAFKA-10251: wait for consumer rebalance completed before consuming records

showuon commented on pull request #10338: URL: https://github.com/apache/kafka/pull/10338#issuecomment-876394407 @mumrah , it failed frequently these days. I think we should merge this fix soon. Please help have a 2nd review when available. Thank you.

[GitHub] [kafka] dongjinleekr commented on pull request #10428: KAFKA-12572: Add import ordering checkstyle rule and configure an automatic formatter

dongjinleekr commented on pull request #10428: URL: https://github.com/apache/kafka/pull/10428#issuecomment-876395308 Rebased onto the latest trunk. Also, I reflected the recent project structure change (i.e., `storage` → `storage/api`) by updating `suppressions.xml`. cc/ @cadonna @ableegoldman

[GitHub] [kafka] dongjinleekr commented on pull request #10428: KAFKA-12572: Add import ordering checkstyle rule and configure an automatic formatter

dongjinleekr commented on pull request #10428: URL: https://github.com/apache/kafka/pull/10428#issuecomment-876395486 Retest this please.

[GitHub] [kafka] dongjinleekr removed a comment on pull request #10428: KAFKA-12572: Add import ordering checkstyle rule and configure an automatic formatter

dongjinleekr removed a comment on pull request #10428: URL: https://github.com/apache/kafka/pull/10428#issuecomment-876395486 Retest this please.

[GitHub] [kafka] showuon commented on a change in pull request #10952: KAFKA-12257: Consumer mishandles topics deleted and recreated with the same name

showuon commented on a change in pull request #10952:
URL: https://github.com/apache/kafka/pull/10952#discussion_r666157068

## File path: clients/src/main/java/org/apache/kafka/clients/Metadata.java ##
@@ -367,7 +386,8 @@ else if (metadata.error() == Errors.TOPIC_AUTHORIZATION_FAILED)
      */
     private Optional updateLatestMetadata(

Review comment:
We should also update the java doc to mention the TopicId changed case

## File path: clients/src/main/java/org/apache/kafka/clients/MetadataCache.java ##
@@ -130,13 +149,30 @@ MetadataCache mergeWith(String newClusterId,
                             Set addInvalidTopics,
                             Set addInternalTopics,
                             Node newController,
+                            Map topicIds,
                             BiPredicate retainTopic) {

         Predicate shouldRetainTopic = topic -> retainTopic.test(topic, internalTopics.contains(topic));

         Map newMetadataByPartition = new HashMap<>(addPartitions.size());
+        Map newTopicIds = new HashMap<>(topicIds.size());
+
+        // We want the most recent topic ID. We add the old one here for retained topics and then update with newest information in the MetadataResponse
+        // we add if a new topic ID is added or remove if the request did not support topic IDs for this topic.

Review comment:
Can't get what you mean here. Could you rephrase this sentence?

## File path: clients/src/main/java/org/apache/kafka/clients/Metadata.java ##
@@ -216,6 +217,14 @@ public synchronized boolean updateRequested() {
         }
     }

+    public synchronized Uuid topicId(String topicName) {
+        return cache.topicId(topicName);
+    }
+
+    public synchronized String topicName(Uuid topicId) {

Review comment:
nit: 2 spaces between `synchronized` and `String`
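One possible reading of the topic ID comment questioned in the review above, sketched with plain collections. This is an illustration and not the code from the pull request: `java.util.UUID` stands in for Kafka's `Uuid`, and the class, method, and parameter names are hypothetical.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.UUID;
import java.util.function.Predicate;

final class TopicIdMergeSketch {
    /**
     * Step 1: keep the previously known ID of every retained topic.
     * Step 2: for each topic named in the latest response, store its new ID,
     *         or drop the old ID when the response carries no ID for that topic.
     */
    static Map<String, UUID> mergeTopicIds(Map<String, UUID> oldIds,
                                           Set<String> responseTopics,
                                           Map<String, UUID> responseIds,
                                           Predicate<String> shouldRetain) {
        Map<String, UUID> merged = new HashMap<>();
        for (Map.Entry<String, UUID> entry : oldIds.entrySet()) {
            if (shouldRetain.test(entry.getKey())) {
                merged.put(entry.getKey(), entry.getValue());
            }
        }
        for (String topic : responseTopics) {
            UUID id = responseIds.get(topic);
            if (id != null) {
                merged.put(topic, id);   // newest ID wins
            } else {
                merged.remove(topic);    // response had the topic but no ID
            }
        }
        return merged;
    }

    /** Fixed example input covering each case. */
    static Map<String, UUID> demo() {
        Map<String, UUID> oldIds = new HashMap<>();
        oldIds.put("a", new UUID(0L, 1L)); // in response with a new ID: replaced
        oldIds.put("b", new UUID(0L, 2L)); // in response without an ID: removed
        oldIds.put("c", new UUID(0L, 3L)); // not retained: dropped
        oldIds.put("e", new UUID(0L, 5L)); // retained, absent from response: kept
        Map<String, UUID> responseIds = new HashMap<>();
        responseIds.put("a", new UUID(0L, 10L));
        responseIds.put("d", new UUID(0L, 4L)); // new topic with an ID: added
        Set<String> responseTopics = new HashSet<>(Arrays.asList("a", "b", "d"));
        return mergeTopicIds(oldIds, responseTopics, responseIds, topic -> !topic.equals("c"));
    }

    public static void main(String[] args) {
        System.out.println(demo()); // contains a, d and e only
    }
}
```

Under this reading, an old ID survives only for a retained topic that the latest response does not mention at all.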


[GitHub] [kafka] showuon commented on pull request #10952: KAFKA-12257: Consumer mishandles topics deleted and recreated with the same name

showuon commented on pull request #10952: URL: https://github.com/apache/kafka/pull/10952#issuecomment-876418475 Also, please remember to rebase with the latest `trunk` branch. Thanks.

[GitHub] [kafka] lct45 commented on a change in pull request #10988: [KAFKA-9559] add docs for changing default serde to null

lct45 commented on a change in pull request #10988:
URL: https://github.com/apache/kafka/pull/10988#discussion_r666177495

## File path: docs/streams/developer-guide/datatypes.html ##
@@ -34,7 +34,7 @@ Data Types and Serialization
 Every Kafka Streams application must provide SerDes (Serializer/Deserializer) for the data types of record keys and record values (e.g. java.lang.String) to materialize the data when necessary. Operations that require such SerDes information include: stream(), table(), to(), repartition(), groupByKey(), groupBy().
-You can provide SerDes by using either of these methods:
+You can provide SerDes by using either of these methods, but you must use at least one of these methods:
 By setting default SerDes in the java.util.Properties config instance.
 By specifying explicit SerDes when calling the appropriate API methods, thus overriding the defaults.

Review comment:
Sure, just did it for the `datatypes` page. Do you think we should do it for all uses in `dsl-api`?
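The first method in the quoted docs, setting default SerDes in a `java.util.Properties` instance, might look like the sketch below. It uses plain strings so that it runs without the Kafka Streams jars; the keys `default.key.serde` and `default.value.serde` and the class name `org.apache.kafka.common.serialization.Serdes$StringSerde` are written out as literals on the assumption that they match the constants an application would normally take from `StreamsConfig` and `Serdes`. The helper names are hypothetical.

```java
import java.util.Properties;

final class DefaultSerdeConfigSketch {
    // Config keys written as literals (assumed to match the StreamsConfig constants).
    static final String KEY_SERDE = "default.key.serde";
    static final String VALUE_SERDE = "default.value.serde";
    // Binary name of the String SerDe class, given as a string to avoid a Kafka dependency.
    static final String STRING_SERDE = "org.apache.kafka.common.serialization.Serdes$StringSerde";

    /** Builds a config that declares String SerDes as the default for keys and values. */
    static Properties withDefaultStringSerdes() {
        Properties props = new Properties();
        props.setProperty(KEY_SERDE, STRING_SERDE);
        props.setProperty(VALUE_SERDE, STRING_SERDE);
        return props;
    }

    /** True when both defaults are present; otherwise explicit SerDes are needed at each call site. */
    static boolean hasDefaultSerdes(Properties props) {
        return props.getProperty(KEY_SERDE) != null && props.getProperty(VALUE_SERDE) != null;
    }

    public static void main(String[] args) {
        System.out.println(hasDefaultSerdes(new Properties()));        // false
        System.out.println(hasDefaultSerdes(withDefaultStringSerdes())); // true
    }
}
```

When `hasDefaultSerdes` is false, the second method from the docs applies: pass explicit SerDes to the API calls that need them.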

[GitHub] [kafka] lbradstreet commented on pull request #10661: MINOR: upgrade pip from 20.2.2 to 21.1.1

lbradstreet commented on pull request #10661:
URL: https://github.com/apache/kafka/pull/10661#issuecomment-876496847

This is failing for me with:
```
Step 10/68 : RUN python3 -m pip install -U pip==21.1.1;
 ---> [Warning] Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap.
 ---> Running in 23f5e4d95ed4
Collecting pip==21.1.1
  Could not find a version that satisfies the requirement pip==21.1.1 (from versions: 0.2, 0.2.1, 0.3, 0.3.1, 0.4, 0.5, 0.5.1, 0.6, 0.6.1, 0.6.2, 0.6.3, 0.7, 0.7.1, 0.7.2, 0.8, 0.8.1, 0.8.2, 0.8.3, 1.0, 1.0.1, 1.0.2, 1.1, 1.2, 1.2.1, 1.3, 1.3.1, 1.4, 1.4.1, 1.5, 1.5.1, 1.5.2, 1.5.3, 1.5.4, 1.5.5, 1.5.6, 6.0, 6.0.1, 6.0.2, 6.0.3, 6.0.4, 6.0.5, 6.0.6, 6.0.7, 6.0.8, 6.1.0, 6.1.1, 7.0.0, 7.0.1, 7.0.2, 7.0.3, 7.1.0, 7.1.1, 7.1.2, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.1.0, 8.1.1, 8.1.2, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 10.0.0b1, 10.0.0b2, 10.0.0, 10.0.1, 18.0, 18.1, 19.0, 19.0.1, 19.0.2, 19.0.3, 19.1, 19.1.1, 19.2, 19.2.1, 19.2.2, 19.2.3, 19.3, 19.3.1, 20.0, 20.0.1, 20.0.2, 20.1b1, 20.1, 20.1.1, 20.2b1, 20.2, 20.2.1, 20.2.2, 20.2.3, 20.2.4, 20.3b1, 20.3, 20.3.1, 20.3.2, 20.3.3, 20.3.4)
No matching distribution found for pip==21.1.1
```
@chia7712 are you using a different jdk base docker image version on your m1?

[GitHub] [kafka] dongjinleekr commented on pull request #10827: [WIP] KAFKA-12899: Support --bootstrap-server in ReplicaVerificationTool

dongjinleekr commented on pull request #10827: URL: https://github.com/apache/kafka/pull/10827#issuecomment-876498696 Hi @tombentley, Sorry for missing your comment on [KIP-629](https://cwiki.apache.org/confluence/display/KAFKA/KIP-629%3A+Use+racially+neutral+terms+in+our+codebase?src=contextnavchildmode). Reviewing [KAFKA-10201](https://issues.apache.org/jira/browse/KAFKA-10201), I found that it is already under progress with [KAFKA-10589](https://issues.apache.org/jira/browse/KAFKA-10589). So, it would be better to address this issue in [this PR](https://github.com/apache/kafka/pull/9404). I also rebased onto the latest trunk. Thank you for reviewing! :bowing_man:

[GitHub] [kafka] dongjinleekr commented on pull request #9404: KAFKA-10589 replica verification tool changes for KIP-629

dongjinleekr commented on pull request #9404: URL: https://github.com/apache/kafka/pull/9404#issuecomment-876498811 Hi @xvrl, How it is going?

[GitHub] [kafka] chia7712 commented on pull request #10661: MINOR: upgrade pip from 20.2.2 to 21.1.1

chia7712 commented on pull request #10661:
URL: https://github.com/apache/kafka/pull/10661#issuecomment-876500588

> are you using a different jdk base docker image version on your m1?

IIRC, I was using `openjdk:8`. Will retry it later :(

[GitHub] [kafka] chia7712 commented on pull request #10661: MINOR: upgrade pip from 20.2.2 to 21.1.1

chia7712 commented on pull request #10661:
URL: https://github.com/apache/kafka/pull/10661#issuecomment-876514001

## build image on MacBook Air M1
https://user-images.githubusercontent.com/6234750/124944605-09b02680-e040-11eb-9a97-14daa986e397.png

## build image on iMac 27 intel
https://user-images.githubusercontent.com/6234750/124945142-77f4e900-e040-11eb-92f4-25906def19c9.png

@lbradstreet Please take a look at above screenshot. I don't observe the error you mentioned. BTW, the step `Step 10/68` is different from repo's docker file. Did you build image by other docker file?

[GitHub] [kafka] omkreddy merged pull request #10915: KAFKA-13041: Enable connecting VS Code remote debugger

omkreddy merged pull request #10915: URL: https://github.com/apache/kafka/pull/10915

[jira] [Resolved] (KAFKA-13041) Support debugging system tests with ducker-ak

[ https://issues.apache.org/jira/browse/KAFKA-13041?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Manikumar resolved KAFKA-13041.

Fix Version/s: 3.0.0
Resolution: Fixed

> Support debugging system tests with ducker-ak
>
> Key: KAFKA-13041
> URL: https://issues.apache.org/jira/browse/KAFKA-13041
> Project: Kafka
> Issue Type: Improvement
> Components: system tests
> Reporter: Stanislav Vodetskyi
> Priority: Major
> Fix For: 3.0.0
>
> Currently when you're using ducker-ak to run system tests locally, your only debug option is to add print/log messages.
> It should be possible to connect to a ducker-ak test with a remote debugger.

[GitHub] [kafka] jolshan commented on pull request #10952: KAFKA-12257: Consumer mishandles topics deleted and recreated with the same name

jolshan commented on pull request #10952: URL: https://github.com/apache/kafka/pull/10952#issuecomment-876521556 Yup. This merge conflict was caused by my other PR 😅

[GitHub] [kafka] mumrah opened a new pull request #10997: MINOR: Fix flaky deleteSnapshots test

mumrah opened a new pull request #10997: URL: https://github.com/apache/kafka/pull/10997

[GitHub] [kafka] lbradstreet commented on pull request #10661: MINOR: upgrade pip from 20.2.2 to 21.1.1

lbradstreet commented on pull request #10661:
URL: https://github.com/apache/kafka/pull/10661#issuecomment-876523777

@chia7712 thanks for checking. Yes, my dockerfile was a bit modified. The unmodified run is:
```
Sending build context to Docker daemon 43.52kB
Step 1/54 : ARG jdk_version=openjdk:8
Step 2/54 : FROM $jdk_version
 ---> 09df0563bdfc
Step 3/54 : MAINTAINER Apache Kafka d...@kafka.apache.org
 ---> Using cache
 ---> abfbf85c6575
Step 4/54 : VOLUME ["/opt/kafka-dev"]
 ---> Using cache
 ---> d9178c554c16
Step 5/54 : ENV TZ="/usr/share/zoneinfo/America/Los_Angeles"
 ---> Using cache
 ---> 8245a50f89b4
Step 6/54 : ENV DEBIAN_FRONTEND noninteractive
 ---> Using cache
 ---> 6e41f5a58d78
Step 7/54 : ARG ducker_creator=default
 ---> Using cache
 ---> 9d39a84cd18d
Step 8/54 : LABEL ducker.creator=$ducker_creator
 ---> Using cache
 ---> 25fe89abca0c
Step 9/54 : RUN apt update && apt install -y sudo git netcat iptables rsync unzip wget curl jq coreutils openssh-server net-tools vim python3-pip python3-dev libffi-dev libssl-dev cmake pkg-config libfuse-dev iperf traceroute && apt-get -y clean
 ---> Using cache
 ---> 0b5597132e07
Step 10/54 : RUN python3 -m pip install -U pip==21.1.1;
 ---> [Warning] Your kernel does not support swap limit capabilities or the cgroup is not mounted. Memory limited without swap.
 ---> Running in 0bae66304b96
Collecting pip==21.1.1
  Could not find a version that satisfies the requirement pip==21.1.1 (from versions: 0.2, 0.2.1, 0.3, 0.3.1, 0.4, 0.5, 0.5.1, 0.6, 0.6.1, 0.6.2, 0.6.3, 0.7, 0.7.1, 0.7.2, 0.8, 0.8.1, 0.8.2, 0.8.3, 1.0, 1.0.1, 1.0.2, 1.1, 1.2, 1.2.1, 1.3, 1.3.1, 1.4, 1.4.1, 1.5, 1.5.1, 1.5.2, 1.5.3, 1.5.4, 1.5.5, 1.5.6, 6.0, 6.0.1, 6.0.2, 6.0.3, 6.0.4, 6.0.5, 6.0.6, 6.0.7, 6.0.8, 6.1.0, 6.1.1, 7.0.0, 7.0.1, 7.0.2, 7.0.3, 7.1.0, 7.1.1, 7.1.2, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.1.0, 8.1.1, 8.1.2, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 10.0.0b1, 10.0.0b2, 10.0.0, 10.0.1, 18.0, 18.1, 19.0, 19.0.1, 19.0.2, 19.0.3, 19.1, 19.1.1, 19.2, 19.2.1, 19.2.2, 19.2.3, 19.3, 19.3.1, 20.0, 20.0.1, 20.0.2, 20.1b1, 20.1, 20.1.1, 20.2b1, 20.2, 20.2.1, 20.2.2, 20.2.3, 20.2.4, 20.3b1, 20.3, 20.3.1, 20.3.2, 20.3.3, 20.3.4)
No matching distribution found for pip==21.1.1
The command '/bin/sh -c python3 -m pip install -U pip==21.1.1;' returned a non-zero code: 1
```

[GitHub] [kafka] chia7712 commented on pull request #10661: MINOR: upgrade pip from 20.2.2 to 21.1.1

chia7712 commented on pull request #10661: URL: https://github.com/apache/kafka/pull/10661#issuecomment-876529435 @lbradstreet Could you share the ID of `openjdk:8` to me? the image id of `openjdk:8` in my env is `d61c96e2d100`

[GitHub] [kafka] lbradstreet commented on pull request #10661: MINOR: upgrade pip from 20.2.2 to 21.1.1

lbradstreet commented on pull request #10661:

URL: https://github.com/apache/kafka/pull/10661#issuecomment-876532382

> @lbradstreet Could you share the ID of `openjdk:8` to me? the image id of `openjdk:8` in my env is `d61c96e2d100`

I ran `docker system prune` before reporting this in case something was happening there.

```

"Id":

"sha256:09df0563bdfc760be1ce6f153f4fdb1950e8ccc9dbb298f8c76da38dc0cecfd6",

"RepoTags": [

"openjdk:8"

],

"RepoDigests": [

"openjdk@sha256:c58264860b32c8603ac125862d17b1d73896bceaa33c843c9a7163904786eb82"

],

"Parent": "",

"Comment": "",

"Created": "2019-11-23T14:34:16.005709367Z",

"Container":

"1f98649c600b636393cc3d3cd8b594134fe979b85d122dc5fa41d4d8311de8b3",

"ContainerConfig": {

"Hostname": "",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/openjdk-8/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"LANG=C.UTF-8",

"JAVA_HOME=/usr/local/openjdk-8",

"JAVA_VERSION=8u232",

[GitHub] [kafka] lbradstreet commented on pull request #10661: MINOR: upgrade pip from 20.2.2 to 21.1.1

lbradstreet commented on pull request #10661:

URL: https://github.com/apache/kafka/pull/10661#issuecomment-876533410

> > @lbradstreet Could you share the ID of `openjdk:8` to me? the image id of `openjdk:8` in my env is `d61c96e2d100`
>
> I ran docker system prune before reporting this in case something was happening there.
>

> ```

>"Id":

"sha256:09df0563bdfc760be1ce6f153f4fdb1950e8ccc9dbb298f8c76da38dc0cecfd6",

>"RepoTags": [

>"openjdk:8"

>],

>"RepoDigests": [

>

"openjdk@sha256:c58264860b32c8603ac125862d17b1d73896bceaa33c843c9a7163904786eb82"

>],

>"Parent": "",

>"Comment": "",

>"Created": "2019-11-23T14:34:16.005709367Z",

>"Container":

"1f98649c600b636393cc3d3cd8b594134fe979b85d122dc5fa41d4d8311de8b3",

>"ContainerConfig": {

>"Hostname": "",

>"Domainname": "",

>"User": "",

>"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

>"Tty": false,

>"OpenStdin": false,

>"StdinOnce": false,

>"Env": [

>

"PATH=/usr/local/openjdk-8/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

>"LANG=C.UTF-8",

>"JAVA_HOME=/usr/local/openjdk-8",

>"JAVA_VERSION=8u232",

>

"JAVA_BAS

[GitHub] [kafka] lbradstreet commented on pull request #10661: MINOR: upgrade pip from 20.2.2 to 21.1.1

lbradstreet commented on pull request #10661: URL: https://github.com/apache/kafka/pull/10661#issuecomment-876535817 @chia7712 sorry for the false alarm and thanks for checking that it worked on your side. The problem was that I should have run `docker system prune -a` rather than `docker system prune`. It's now working.

[GitHub] [kafka] lbradstreet commented on pull request #10995: MINOR: fix ducker-ak Dockerfile issue

lbradstreet commented on pull request #10995: URL: https://github.com/apache/kafka/pull/10995#issuecomment-876549710 I hit this same problem and was able to resolve it with `docker system prune -a` to make sure it grabbed a later openjdk image where this is not a problem. See the discussion in https://github.com/apache/kafka/pull/10661. Maybe we can modify this PR to instead provide a more helpful error message?
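The stale-image diagnosis above can be checked mechanically. The following is a minimal sketch, assuming a cached `openjdk:8` image counts as stale when its `Created` timestamp predates some cutoff; the helper name `image_older_than` and the cutoff date are hypothetical and are not part of ducker-ak.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of ducker-ak): succeeds when an image's
# "Created" timestamp is strictly older than a cutoff. Both arguments are
# ISO-8601 UTC strings, which sort chronologically as plain text.
image_older_than() {
    local created="$1" cutoff="$2"
    [ "${created}" != "${cutoff}" ] &&
        [ "$(printf '%s\n%s\n' "${created}" "${cutoff}" | LC_ALL=C sort | head -n 1)" = "${created}" ]
}

# The timestamp would normally come from:
#   docker image inspect --format '{{.Created}}' openjdk:8
# Here the value reported in the thread is used directly.
created="2019-11-23T14:34:16.005709367Z"
cutoff="2021-01-01T00:00:00Z"   # assumed cutoff, chosen only for illustration

if image_older_than "${created}" "${cutoff}"; then
    echo "openjdk:8 is stale; try: docker system prune -a"
else
    echo "openjdk:8 looks recent"
fi
```

Without `-a`, `docker system prune` removes only dangling images, so a tagged but outdated `openjdk:8` stays in the local cache; `-a` also removes tagged images that no container uses, which forces a fresh pull on the next build.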

[GitHub] [kafka] cmccabe commented on pull request #10990: MINOR: the broker should use metadata.log.max.record.bytes.between.snapshots

cmccabe commented on pull request #10990: URL: https://github.com/apache/kafka/pull/10990#issuecomment-876562610 That one build failure looks like some kind of Jenkins weirdness. ``` [2021-07-08T00:12:38.900Z] > requirement failed: Source file '/tmp/sbt_8c62b7b4/META-INF/MANIFEST.MF' does not exist. ```

[GitHub] [kafka] junrao commented on a change in pull request #10753: KAFKA-12803: Support reassigning partitions when in KRaft mode

junrao commented on a change in pull request #10753:

URL: https://github.com/apache/kafka/pull/10753#discussion_r666339593

##

File path:

metadata/src/main/java/org/apache/kafka/controller/ReplicationControlManager.java

##

@@ -1204,6 +1317,298 @@ void generateLeaderAndIsrUpdates(String context,

}

}

+PartitionChangeRecord generateLeaderAndIsrUpdate(TopicControlInfo topic,

+ int partitionId,

+ PartitionControlInfo

partition,

+ int[] newIsr,

+ Function isAcceptableLeader) {

+PartitionChangeRecord record = new PartitionChangeRecord().

+setTopicId(topic.id).

+setPartitionId(partitionId);

+int[] newReplicas = partition.replicas;

+if (partition.isrChangeCompletesReassignment(newIsr)) {

+if (partition.addingReplicas.length > 0) {

+record.setAddingReplicas(Collections.emptyList());

+}

+if (partition.removingReplicas.length > 0) {

+record.setRemovingReplicas(Collections.emptyList());

+newIsr = Replicas.copyWithout(newIsr,

partition.removingReplicas);

+newReplicas = Replicas.copyWithout(partition.replicas,

partition.removingReplicas);

+}

+}

+int newLeader;

+if (Replicas.contains(newIsr, partition.leader)) {

+// If the current leader is good, don't change.

+newLeader = partition.leader;

+} else {

+// Choose a new leader.

+boolean uncleanOk =

configurationControl.uncleanLeaderElectionEnabledForTopic(topic.name);

+newLeader = bestLeader(newReplicas, newIsr, uncleanOk,

isAcceptableLeader);

+}

+if (!electionWasClean(newLeader, newIsr)) {

+// After an unclean leader election, the ISR is reset to just the

new leader.

+newIsr = new int[] {newLeader};

+} else if (newIsr.length == 0) {

+// We never want to shrink the ISR to size 0.

+newIsr = partition.isr;

+}

+if (newLeader != partition.leader) record.setLeader(newLeader);

+if (!Arrays.equals(newIsr, partition.isr)) {

+record.setIsr(Replicas.toList(newIsr));

+}

+if (!Arrays.equals(newReplicas, partition.replicas)) {

+record.setReplicas(Replicas.toList(newReplicas));

+}

+return record;

+}

+

+ControllerResult

+alterPartitionReassignments(AlterPartitionReassignmentsRequestData

request) {

+List records = new ArrayList<>();

+AlterPartitionReassignmentsResponseData result =

+new

AlterPartitionReassignmentsResponseData().setErrorMessage(null);

+int successfulAlterations = 0, totalAlterations = 0;

+for (ReassignableTopic topic : request.topics()) {

+ReassignableTopicResponse topicResponse = new

ReassignableTopicResponse().

+setName(topic.name());

+for (ReassignablePartition partition : topic.partitions()) {

+ApiError error = ApiError.NONE;

+try {

+alterPartitionReassignment(topic.name(), partition,

records);

+successfulAlterations++;

+} catch (Throwable e) {

+log.info("Unable to alter partition reassignment for " +

+topic.name() + ":" + partition.partitionIndex() + "

because " +

+"of an " + e.getClass().getSimpleName() + " error: " +

e.getMessage());

+error = ApiError.fromThrowable(e);

+}

+totalAlterations++;

+topicResponse.partitions().add(new

ReassignablePartitionResponse().

+setPartitionIndex(partition.partitionIndex()).

+setErrorCode(error.error().code()).

+setErrorMessage(error.message()));

+}

+result.responses().add(topicResponse);

+}

+log.info("Successfully altered {} out of {} partition

reassignment(s).",

+successfulAlterations, totalAlterations);

+return ControllerResult.atomicOf(records, result);

+}

+

+void alterPartitionReassignment(String topicName,

+ReassignablePartition partition,

+List records) {

+Uuid topicId = topicsByName.get(topicName);

+if (topicId == null) {

+throw new UnknownTopicOrPartitionException("Unable to find a topic

" +

+"named " + topicName + ".");

+}

+TopicControlInfo topicInfo = topics.get(topicId);

+if (topicInfo == null) {

+throw new UnknownTopicOrPartitionException("Unable to find a topic

" +

[GitHub] [kafka] hachikuji commented on a change in pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

hachikuji commented on a change in pull request #10995:

URL: https://github.com/apache/kafka/pull/10995#discussion_r666348755

##

File path: tests/docker/ducker-ak

##

@@ -185,6 +185,12 @@ must_popd() {

popd &> /dev/null || die "failed to popd"

}

+echo_and_do() {

+local cmd="${@}"

+echo "${cmd}"

+${cmd}

Review comment:

The logic through `must_do`, which we were using previously, also redirects output and checks the command's return status. Should we do something similar?


[GitHub] [kafka] kirktrue commented on pull request #10951: KAFKA-12841: NPE from the provided metadata in client callback in case of ApiException

kirktrue commented on pull request #10951: URL: https://github.com/apache/kafka/pull/10951#issuecomment-876583460 @cmccabe - could you take a look at this and/or assign a reviewer for this? Thanks!

[GitHub] [kafka] kirktrue commented on pull request #10980: KAFKA-12989: MockClient should respect the request matcher passed to prepareUnsupportedVersionResponse

kirktrue commented on pull request #10980: URL: https://github.com/apache/kafka/pull/10980#issuecomment-876583510 @cmccabe - could you take a look at this and/or assign a reviewer for this? Thanks!

[jira] [Created] (KAFKA-13050) Race between controller creating snapshot and snapshot cleaning

David Arthur created KAFKA-13050:

Summary: Race between controller creating snapshot and snapshot

cleaning

Key: KAFKA-13050

URL: https://issues.apache.org/jira/browse/KAFKA-13050

Project: Kafka

Issue Type: Bug

Components: controller, kraft

Affects Versions: 3.0.0

Reporter: David Arthur

If the controller attempts to take a snapshot with its cached OffsetAndEpoch

while snapshot cleaning is happening, it is possible for the OffsetAndEpoch to

be invalidated due to truncation.

{code}
[2021-07-08 12:12:41,938] WARN [Controller 1] org.apache.kafka.controller.QuorumController@67e0d836: failed with unknown server exception IllegalArgumentException at epoch -1 in 3207460 us. Reverting to last committed offset 98. (org.apache.kafka.controller.QuorumController)
java.lang.IllegalArgumentException: Snapshot id (OffsetAndEpoch(offset=99, epoch=5)) is not valid according to the log: ValidOffsetAndEpoch(kind=SNAPSHOT, offsetAndEpoch=OffsetAndEpoch(offset=180, epoch=8))
    at kafka.raft.KafkaMetadataLog.createNewSnapshot(KafkaMetadataLog.scala:252)
    at org.apache.kafka.raft.KafkaRaftClient.lambda$createSnapshot$30(KafkaRaftClient.java:2334)
    at org.apache.kafka.snapshot.SnapshotWriter.createWithHeader(SnapshotWriter.java:134)
    at org.apache.kafka.raft.KafkaRaftClient.createSnapshot(KafkaRaftClient.java:2333)
    at org.apache.kafka.controller.QuorumController$SnapshotGeneratorManager.createSnapshotGenerator(QuorumController.java:351)
    at org.apache.kafka.controller.QuorumController.checkSnapshotGeneration(QuorumController.java:904)
    at org.apache.kafka.controller.QuorumController.access$3000(QuorumController.java:121)
    at org.apache.kafka.controller.QuorumController$QuorumMetaLogListener.lambda$handleCommit$0(QuorumController.java:681)
    at org.apache.kafka.controller.QuorumController$ControlEvent.run(QuorumController.java:311)
    at org.apache.kafka.queue.KafkaEventQueue$EventContext.run(KafkaEventQueue.java:121)
    at org.apache.kafka.queue.KafkaEventQueue$EventHandler.handleEvents(KafkaEventQueue.java:200)
    at org.apache.kafka.queue.KafkaEventQueue$EventHandler.run(KafkaEventQueue.java:173)
    at java.lang.Thread.run(Thread.java:748)
[2021-07-08 12:12:41,941] INFO [BrokerMetadataListener id=1] Loading snapshot 180-8. (kafka.server.metadata.BrokerMetadataListener)
{code}

This was observed while running a broker in combined mode with artificially low

values for snapshot generation and cleaning.

{code}

metadata.log.max.record.bytes.between.snapshots=100

metadata.log.segment.bytes=1024

metadata.max.retention.bytes=4096

{code}

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

[GitHub] [kafka] kamalcph commented on pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

kamalcph commented on pull request #10995: URL: https://github.com/apache/kafka/pull/10995#issuecomment-876588345 There is also a tip to run the command with `--no-cache` flag on error. Why isn't it exposed?

[GitHub] [kafka] cmccabe commented on a change in pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

cmccabe commented on a change in pull request #10995:

URL: https://github.com/apache/kafka/pull/10995#discussion_r666358707

##

File path: tests/docker/ducker-ak

##

@@ -185,6 +185,12 @@ must_popd() {

popd &> /dev/null || die "failed to popd"

}

+echo_and_do() {

+local cmd="${@}"

+echo "${cmd}"

+${cmd}

Review comment:

Well... I made this new function because the other one was checking the exit status and exiting if it was nonzero. That didn't allow me to print the error message I wanted. So doing the same thing here would defeat the point :)

If bash were a better language, I could add a lambda argument to the existing function and have the lambda return what to print on failure. But shell doesn't really do closures, so it would turn into kind of a mess.
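The behaviour described above can be sketched as follows, under stated assumptions: `echo_and_do` prints and runs a command and hands the exit status back, so the caller decides what to print on failure. This variant keeps the arguments as an array (`"$@"`) instead of flattening them into one string as the patch does, so arguments containing spaces survive. `run_with_hint` is a hypothetical wrapper for illustration, not a ducker-ak function.

```shell
#!/usr/bin/env bash
# Print a command, then run it. The exit status of the command is the
# function's return value, so callers can react to failures themselves.
echo_and_do() {
    echo "$*"
    "$@"
}

# Hypothetical wrapper: the first argument is the hint to print on failure,
# the remaining arguments are the command. The hint string stands in for
# the "lambda" that a richer language would pass to the existing helper.
run_with_hint() {
    local hint="$1"
    shift
    local status=0
    echo_and_do "$@" || status=$?
    if [ "${status}" -ne 0 ]; then
        echo "${hint}" >&2
    fi
    return "${status}"
}

# Example: the command fails, the hint goes to stderr, the status survives.
run_with_hint "Hint: try 'docker system prune -a'." false || echo "exit status: $?"
```

Passing the hint as plain data avoids the need for closures; the trade-off is that the message cannot depend on anything computed after the command has run.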


[GitHub] [kafka] kamalcph commented on pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

kamalcph commented on pull request #10995: URL: https://github.com/apache/kafka/pull/10995#issuecomment-876589345 Also, can you update the label from `ducker.type` to `ducker.creator` [here](https://github.com/apache/kafka/blob/a657d735cf3ca1fafd4bd255137713a28509ed8a/tests/docker/ducker-ak#L339).

[GitHub] [kafka] kamalcph edited a comment on pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

kamalcph edited a comment on pull request #10995: URL: https://github.com/apache/kafka/pull/10995#issuecomment-876589345 Also, can you update the label from `ducker.type` to `ducker.creator` [here](https://github.com/apache/kafka/blob/a657d735cf3ca1fafd4bd255137713a28509ed8a/tests/docker/ducker-ak#L339)?

[GitHub] [kafka] hachikuji commented on a change in pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

hachikuji commented on a change in pull request #10995:

URL: https://github.com/apache/kafka/pull/10995#discussion_r666360055

##

File path: tests/docker/ducker-ak

##

@@ -185,6 +185,12 @@ must_popd() {

popd &> /dev/null || die "failed to popd"

}

+echo_and_do() {

+local cmd="${@}"

+echo "${cmd}"

+${cmd}

Review comment:

Fair enough.


[GitHub] [kafka] cmccabe commented on pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

cmccabe commented on pull request #10995: URL: https://github.com/apache/kafka/pull/10995#issuecomment-876590217 @kamalcph : Thanks, that is a good find. I agree we should update the label from ducker.type to ducker.creator. Can you make a separate PR for that? I can review it quickly.

[GitHub] [kafka] kamalcph commented on pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

kamalcph commented on pull request #10995: URL: https://github.com/apache/kafka/pull/10995#issuecomment-876590746 > @kamalcph : Thanks, that is a good find. I agree we should update the label from ducker.type to ducker.creator. Can you make a separate PR for that? I can review it quickly. PR: #10499

[GitHub] [kafka] cmccabe commented on a change in pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

cmccabe commented on a change in pull request #10995:

URL: https://github.com/apache/kafka/pull/10995#discussion_r666362458

##

File path: tests/docker/ducker-ak

##

@@ -185,6 +185,12 @@ must_popd() {

popd &> /dev/null || die "failed to popd"

}

+echo_and_do() {

+local cmd="${@}"

+echo "${cmd}"

+${cmd}

Review comment:

Also I guess some people would say that I'm doing things too much the Java way here, and that the kewl bash way of doing this would be

```
set -x
set +x
```

But it still feels kind of like a mess since, does `set +x` change `$?`?

Sigh.
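On the question above: `set +x` is itself a command that completes successfully, so `$?` read after it reflects `set` rather than the traced command. A minimal sketch of the usual workaround, saving the status before tracing is switched off, follows; `run_traced` is a hypothetical name and not something in ducker-ak.

```shell
#!/usr/bin/env bash
# Run a command with tracing enabled and return the command's own exit
# status. The status is saved before `set +x` runs, because `set +x`
# succeeds and would otherwise leave 0 in `$?`.
run_traced() {
    local status
    set -x
    "$@"
    status=$?
    # The braces plus redirection keep the `set +x` line out of the trace.
    { set +x; } 2>/dev/null
    return "${status}"
}

status=0
run_traced false || status=$?
echo "exit status: ${status}"
```

The trace lines go to stderr, so stdout stays clean. One caveat of this sketch: it always turns tracing off at the end, even if the caller had it enabled beforehand.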


[GitHub] [kafka] 0x1991babe opened a new pull request #10998: MINOR: fix quoted boolean in FetchRequest.json

0x1991babe opened a new pull request #10998: URL: https://github.com/apache/kafka/pull/10998 Hi, I'm working on maintaining an implementation of the protocol written in Rust: https://github.com/0x1991babe/kafka-protocol-rs. A recent change in 2b8aff58b5 breaks my deserialization logic for the `ignorable` field. I could work around this, but hope this minor change is acceptable. :)
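A quoted boolean of the kind described above is easy to miss by eye. The following is a rough sketch of a text-level check, assuming the problem is a value written as the string `"true"` or `"false"` instead of a bare boolean; the function name `find_quoted_booleans` and the sample field definitions are illustrative only and are not taken from the Kafka build or from `FetchRequest.json`.

```shell
#!/usr/bin/env bash
# Hypothetical check (not part of the Kafka build): print, with line
# numbers, every line read from stdin where a value is the string "true"
# or "false". Returns non-zero when nothing matches, as grep does.
find_quoted_booleans() {
    grep -nE ':[[:space:]]*"(true|false)"'
}

# Illustrative field definitions; the second one quotes its boolean.
sample='{ "name": "A", "type": "int32", "versions": "0+", "ignorable": true },
{ "name": "B", "type": "int32", "versions": "0+", "ignorable": "false" }'

printf '%s\n' "${sample}" | find_quoted_booleans
```

This is a line-based heuristic, not a JSON parser: it would also flag a legitimate string field whose value happens to be `"true"`, so matches need a manual look.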

[GitHub] [kafka] cmccabe commented on pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

cmccabe commented on pull request #10995: URL: https://github.com/apache/kafka/pull/10995#issuecomment-876593643 > PR: #10499 @kamalcph: Thanks for that. Can you make a more minimal PR that just changes `ducker.type` to `ducker.creator`? We're getting so close to the release, and I just don't have time to review a lot of bash changes (to be 100% honest)

[GitHub] [kafka] cmccabe commented on pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

cmccabe commented on pull request #10995: URL: https://github.com/apache/kafka/pull/10995#issuecomment-876596528 > There is also a tip to run the command with --no-cache flag on error. Why isn't it exposed? I agree `--no-cache` should probably appear in the help output somewhere. Let's tackle this in a follow-on. I don't know if it would help with the current issue that many people are seeing with their cache.

[GitHub] [kafka] cmccabe merged pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

cmccabe merged pull request #10995: URL: https://github.com/apache/kafka/pull/10995

[GitHub] [kafka] hachikuji commented on a change in pull request #10794: KAFKA-12677: return not_controller error in envelope response itself

hachikuji commented on a change in pull request #10794:

URL: https://github.com/apache/kafka/pull/10794#discussion_r666364712

##

File path: clients/src/main/java/org/apache/kafka/common/requests/ApiError.java

##

@@ -36,10 +38,21 @@

private final String message;

public static ApiError fromThrowable(Throwable t) {

+Throwable throwableToBeEncode = t;

Review comment:

nit: `throwableToBeEncoded`?

##

File path: core/src/main/scala/kafka/network/RequestChannel.scala

##

@@ -124,8 +124,15 @@ object RequestChannel extends Logging {

def buildResponseSend(abstractResponse: AbstractResponse): Send = {

envelope match {

case Some(request) =>

- val responseBytes =

context.buildResponseEnvelopePayload(abstractResponse)

- val envelopeResponse = new EnvelopeResponse(responseBytes,

Errors.NONE)

+ val envelopeResponse = if

(abstractResponse.errorCounts().containsKey(Errors.NOT_CONTROLLER)) {

+// Since it's a Not Controller error response, we need to make

envelope response with Not Controller error

Review comment:

Do we have a test case which fails without this fix?


[GitHub] [kafka] junrao commented on a change in pull request #10579: KAFKA-9555 Added default RLMM implementation based on internal topic storage.

junrao commented on a change in pull request #10579:

URL: https://github.com/apache/kafka/pull/10579#discussion_r666372073

##

File path:

storage/src/main/java/org/apache/kafka/server/log/remote/metadata/storage/TopicBasedRemoteLogMetadataManager.java

##

@@ -0,0 +1,432 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.server.log.remote.metadata.storage;

+

+import org.apache.kafka.clients.admin.AdminClient;

+import org.apache.kafka.clients.admin.NewTopic;

+import org.apache.kafka.clients.producer.RecordMetadata;

+import org.apache.kafka.common.KafkaException;

+import org.apache.kafka.common.TopicIdPartition;

+import org.apache.kafka.common.config.TopicConfig;

+import org.apache.kafka.common.errors.RetriableException;

+import org.apache.kafka.common.errors.TopicExistsException;

+import org.apache.kafka.common.utils.KafkaThread;

+import org.apache.kafka.common.utils.Time;

+import org.apache.kafka.common.utils.Utils;

+import org.apache.kafka.server.log.remote.storage.RemoteLogMetadata;

+import org.apache.kafka.server.log.remote.storage.RemoteLogMetadataManager;

+import org.apache.kafka.server.log.remote.storage.RemoteLogSegmentMetadata;

+import

org.apache.kafka.server.log.remote.storage.RemoteLogSegmentMetadataUpdate;

+import org.apache.kafka.server.log.remote.storage.RemoteLogSegmentState;

+import

org.apache.kafka.server.log.remote.storage.RemotePartitionDeleteMetadata;

+import org.apache.kafka.server.log.remote.storage.RemoteStorageException;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.time.Duration;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.Iterator;

+import java.util.Map;

+import java.util.Objects;

+import java.util.Optional;

+import java.util.Set;

+import java.util.concurrent.atomic.AtomicBoolean;

+import java.util.concurrent.locks.ReentrantReadWriteLock;

+

+/**

+ * This is the {@link RemoteLogMetadataManager} implementation with storage as

an internal topic with name {@link

TopicBasedRemoteLogMetadataManagerConfig#REMOTE_LOG_METADATA_TOPIC_NAME}.

+ * This is used to publish and fetch {@link RemoteLogMetadata} for the registered user topic partitions with
+ * {@link #onPartitionLeadershipChanges(Set, Set)}. Each broker will have an instance of this class and it subscribes
+ * to metadata updates for the registered user topic partitions.
+ */
+public class TopicBasedRemoteLogMetadataManager implements RemoteLogMetadataManager {
+    private static final Logger log = LoggerFactory.getLogger(TopicBasedRemoteLogMetadataManager.class);
+    private static final long INITIALIZATION_RETRY_INTERVAL_MS = 3L;
+
+    private volatile boolean configured = false;
+
+    // It indicates whether the close process of this instance is started or not via #close() method.
+    // Using AtomicBoolean instead of volatile as it may encounter http://findbugs.sourceforge.net/bugDescriptions.html#SP_SPIN_ON_FIELD
+    // if the field is read but not updated in a spin loop like in #initializeResources() method.
+    private final AtomicBoolean closing = new AtomicBoolean(false);
+    private final AtomicBoolean initialized = new AtomicBoolean(false);
+    private final Time time = Time.SYSTEM;
+
+    private Thread initializationThread;
+    private volatile ProducerManager producerManager;
+    private volatile ConsumerManager consumerManager;
+
+    // This allows to gracefully close this instance using {@link #close()} method while there are some pending or new
+    // requests calling different methods which use the resources like producer/consumer managers.
+    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
+
+    private final RemotePartitionMetadataStore remotePartitionMetadataStore = new RemotePartitionMetadataStore();
+    private volatile TopicBasedRemoteLogMetadataManagerConfig rlmmConfig;
+    private volatile RemoteLogMetadataTopicPartitioner rlmmTopicPartitioner;
+    private volatile Set pendingAssignPartitions = Collections.synchronizedSet(new HashSet<>());
+
+    @Override
+    public void addRemoteLogSegmentMetadata(RemoteLogSegmentMetadata remoteLo
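
The comments in the quoted diff describe two ideas: a `closing` flag held in an AtomicBoolean, and a ReentrantReadWriteLock that lets close() wait for in-flight requests. The following is a minimal sketch of that pattern only. The class name `GracefulCloseSketch`, the `publish` method, and the counter are hypothetical stand-ins that do not appear in the pull request, and the real manager may order these steps differently.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical illustration; not the TopicBasedRemoteLogMetadataManager code.
public class GracefulCloseSketch implements AutoCloseable {
    private final AtomicBoolean closing = new AtomicBoolean(false);
    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
    // Stand-in for work done through a producer/consumer manager.
    private final AtomicInteger published = new AtomicInteger();

    // Requests share the read lock, so many of them can run concurrently.
    public boolean publish(String event) {
        lock.readLock().lock();
        try {
            if (closing.get()) {
                return false; // close() has started; reject new work
            }
            published.incrementAndGet();
            return true;
        } finally {
            lock.readLock().unlock();
        }
    }

    public int publishedCount() {
        return published.get();
    }

    @Override
    public void close() {
        // Only the first caller proceeds past this point.
        if (!closing.compareAndSet(false, true)) {
            return;
        }
        // The write lock is granted only after in-flight readers have finished.
        lock.writeLock().lock();
        try {
            // Resources (producers, consumers) would be released here.
        } finally {
            lock.writeLock().unlock();
        }
    }

    public static void main(String[] args) {
        GracefulCloseSketch manager = new GracefulCloseSketch();
        System.out.println(manager.publish("segment-1")); // accepted before close
        manager.close();
        System.out.println(manager.publish("segment-2")); // rejected after close
    }
}
```

Setting the flag before taking the write lock means requests that arrive during shutdown fail fast instead of queueing behind the writer.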

[GitHub] [kafka] kamalcph commented on pull request #10995: MINOR: Hint about "docker system prune" when ducker-ak build fails

kamalcph commented on pull request #10995: URL: https://github.com/apache/kafka/pull/10995#issuecomment-876604684 @cmccabe Updated #10499, can you take another look?

[GitHub] [kafka] hachikuji merged pull request #10560: KAFKA-12660: Do not update offset commit sensor after append failure

hachikuji merged pull request #10560: URL: https://github.com/apache/kafka/pull/10560

[jira] [Resolved] (KAFKA-12660) Do not update offset commit sensor after append failure

[ https://issues.apache.org/jira/browse/KAFKA-12660?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jason Gustafson resolved KAFKA-12660.
-
Fix Version/s: 3.0.0
Resolution: Fixed

> Do not update offset commit sensor after append failure
> ---
>
> Key: KAFKA-12660
> URL: https://issues.apache.org/jira/browse/KAFKA-12660
> Project: Kafka
> Issue Type: Bug
> Reporter: Jason Gustafson
> Assignee: dengziming
> Priority: Major
> Fix For: 3.0.0
>
>
> In the append callback after writing an offset to the log in
> `GroupMetadataManager`, it seems wrong to update the offset commit sensor
> prior to checking for errors:
> https://github.com/apache/kafka/blob/trunk/core/src/main/scala/kafka/coordinator/group/GroupMetadataManager.scala#L394.

--
This message was sent by Atlassian Jira
(v8.3.4#803005)
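
The ordering concern in this issue can be illustrated with a small sketch: check the append result first, and record the metric only on success. This is an illustration under stated assumptions, not the `GroupMetadataManager` code (which is Scala). The enum `AppendError`, the method `onAppendComplete`, and the counter standing in for the offset commit sensor are all hypothetical names.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical illustration of "check for errors before updating the sensor".
public class OffsetCommitCallbackSketch {
    // Assumed, simplified error model; the real callback sees broker error codes.
    enum AppendError { NONE, NOT_LEADER_OR_FOLLOWER }

    // Stand-in for the offset commit sensor.
    private final AtomicLong offsetCommits = new AtomicLong();

    // Called once the log append has completed.
    void onAppendComplete(AppendError error, int committedOffsetCount) {
        if (error != AppendError.NONE) {
            return; // failed append: leave the metric untouched
        }
        offsetCommits.addAndGet(committedOffsetCount);
    }

    long recordedCommits() {
        return offsetCommits.get();
    }

    public static void main(String[] args) {
        OffsetCommitCallbackSketch callback = new OffsetCommitCallbackSketch();
        callback.onAppendComplete(AppendError.NOT_LEADER_OR_FOLLOWER, 3);
        callback.onAppendComplete(AppendError.NONE, 2);
        System.out.println(callback.recordedCommits()); // only the successful append counts
    }
}
```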

[GitHub] [kafka] jolshan commented on pull request #10998: MINOR: fix quoted boolean in FetchRequest.json

jolshan commented on pull request #10998: URL: https://github.com/apache/kafka/pull/10998#issuecomment-876625362 Ah this should be fine. I don't think I should have put that in quotes in the first place.

[GitHub] [kafka] jolshan opened a new pull request #10999: KAFKA-12257: Consumer mishandles topics deleted and recreated with the same name (for 3.0)

jolshan opened a new pull request #10999:
URL: https://github.com/apache/kafka/pull/10999

Opening a version of https://github.com/apache/kafka/pull/10952 for the 3.0 branch

Store topic ID info in consumer metadata. We will always take the topic ID from the latest metadata response and remove any topic IDs from the cache if the metadata response did not return a topic ID for the topic.

With the addition of topic IDs, when we encounter a new topic ID (recreated topic) we can choose to get the topic's metadata even if the epoch is lower than the deleted topic.

The idea is that when we update from no topic IDs to using topic IDs, we will not count the topic as new (It could be the same topic but with a new ID). We will only take the update if the topic ID changed.

Added tests for this scenario as well as some tests for storing the topic IDs. Also added tests for topic IDs in metadata cache.

### Committer Checklist (excluded from commit message)
- [ ] Verify design and implementation
- [ ] Verify test coverage and CI build status
- [ ] Verify documentation (including upgrade notes)
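
The rules in this description can be sketched as a small cache. This is a simplified illustration, not the consumer metadata code from the pull request: the class `TopicIdCacheSketch` and its `update` method are hypothetical, `java.util.UUID` stands in for Kafka's topic ID type, and the epoch is tracked per topic rather than per partition to keep the example short.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical illustration of the topic ID rules described above.
public class TopicIdCacheSketch {
    private final Map<String, UUID> topicIds = new HashMap<>();
    private final Map<String, Integer> lastSeenEpochs = new HashMap<>();

    /**
     * Applies one metadata response entry.
     * newId may be null when the response carries no topic ID.
     * Returns true if the metadata update is accepted.
     */
    public boolean update(String topic, UUID newId, int epoch) {
        UUID oldId = topicIds.get(topic);

        // Always take the ID from the latest response; drop it if absent.
        if (newId == null) {
            topicIds.remove(topic);
        } else {
            topicIds.put(topic, newId);
        }

        // Going from "no ID" to "some ID" does not count as a new topic.
        boolean idChanged = oldId != null && newId != null && !oldId.equals(newId);

        Integer lastEpoch = lastSeenEpochs.get(topic);
        if (idChanged || lastEpoch == null || epoch >= lastEpoch) {
            // A changed ID means a recreated topic, so a lower epoch is acceptable.
            lastSeenEpochs.put(topic, epoch);
            return true;
        }
        return false; // stale metadata for the same topic
    }

    public UUID topicId(String topic) {
        return topicIds.get(topic);
    }

    public static void main(String[] args) {
        TopicIdCacheSketch cache = new TopicIdCacheSketch();
        UUID first = UUID.randomUUID();
        UUID recreated = UUID.randomUUID();
        System.out.println(cache.update("orders", first, 10));    // first sighting
        System.out.println(cache.update("orders", first, 4));     // same ID, older epoch
        System.out.println(cache.update("orders", recreated, 0)); // new ID, lower epoch
    }
}
```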

[GitHub] [kafka] jolshan closed pull request #10999: KAFKA-12257: Consumer mishandles topics deleted and recreated with the same name (for 3.0)

jolshan closed pull request #10999: URL: https://github.com/apache/kafka/pull/10999

[GitHub] [kafka] jolshan commented on pull request #10999: KAFKA-12257: Consumer mishandles topics deleted and recreated with the same name (for 3.0)

jolshan commented on pull request #10999: URL: https://github.com/apache/kafka/pull/10999#issuecomment-876629791 I realized I can change the target of the original. I'll open a new PR for the revised trunk version.

[GitHub] [kafka] mattwong949 commented on a change in pull request #10914: [KAFKA-8522] Streamline tombstone and transaction marker removal

mattwong949 commented on a change in pull request #10914:

URL: https://github.com/apache/kafka/pull/10914#discussion_r666406571

##
File path: clients/src/main/java/org/apache/kafka/common/record/DefaultRecordBatch.java
##
@@ -156,13 +161,27 @@ public void ensureValid() {
     }

     /**
-     * Get the timestamp of the first record in this batch. It is always the create time of the record even if the
+     * Gets the base timestamp of the batch which is used to calculate the timestamp deltas.
+     *
+     * @return The base timestamp or
+     * {@link RecordBatch#NO_TIMESTAMP} if the batch is empty
+     */
+    public long baseTimestamp() {
+        return buffer.getLong(FIRST_TIMESTAMP_OFFSET);
+    }
+
+    /**
+     * Get the timestamp of the first record in this batch. It is usually the create time of the record even if the
      * timestamp type of the batch is log append time.
-     *
-     * @return The first timestamp or {@link RecordBatch#NO_TIMESTAMP} if the batch is empty
+     *
+     * @return The first timestamp if a record has been appended, unless the delete horizon has been set
+     * {@link RecordBatch#NO_TIMESTAMP} if the batch is empty or if the delete horizon is set
      */
     public long firstTimestamp() {

Review comment:
I've removed the firstTimestamp() method, which included some modifications to the baseTimestamp() method and those test changes.
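
The Javadoc in the hunk says the base timestamp is used to calculate timestamp deltas. A rough sketch of that arithmetic follows: each record stores its timestamp as an offset from the batch's base timestamp. The class `TimestampDeltaSketch` and its helper methods are hypothetical and are not part of `DefaultRecordBatch`. The value -1 for `NO_TIMESTAMP` is an assumption made for the example.

```java
import java.util.Arrays;

// Hypothetical illustration of base timestamp plus per-record deltas.
public class TimestampDeltaSketch {
    // Assumed sentinel for "no timestamp" (empty batch).
    static final long NO_TIMESTAMP = -1L;

    // The base is taken from the first record, or NO_TIMESTAMP when there are none.
    static long baseTimestamp(long[] timestamps) {
        return timestamps.length == 0 ? NO_TIMESTAMP : timestamps[0];
    }

    // Encode each record timestamp relative to the base.
    static long[] toDeltas(long base, long[] timestamps) {
        long[] deltas = new long[timestamps.length];
        for (int i = 0; i < timestamps.length; i++) {
            deltas[i] = timestamps[i] - base;
        }
        return deltas;
    }

    // Decode a record timestamp from the base and its delta.
    static long fromDelta(long base, long delta) {
        return base + delta;
    }

    public static void main(String[] args) {
        long[] timestamps = {1000L, 1005L, 1012L};
        long base = baseTimestamp(timestamps);
        long[] deltas = toDeltas(base, timestamps);
        System.out.println(Arrays.toString(deltas)); // prints [0, 5, 12]
        System.out.println(fromDelta(base, deltas[2])); // prints 1012
    }
}
```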


[GitHub] [kafka] cmccabe merged pull request #10982: MINOR: Update dropwizard metrics to 4.1.12.1

cmccabe merged pull request #10982: URL: https://github.com/apache/kafka/pull/10982

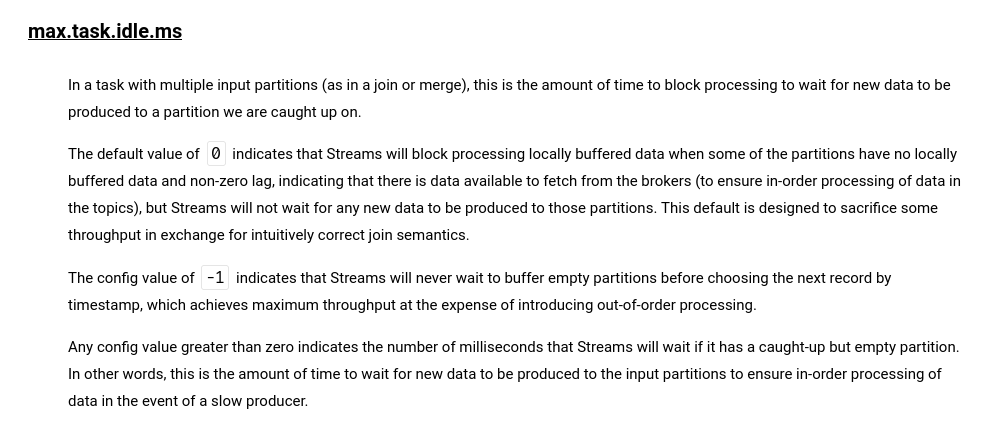

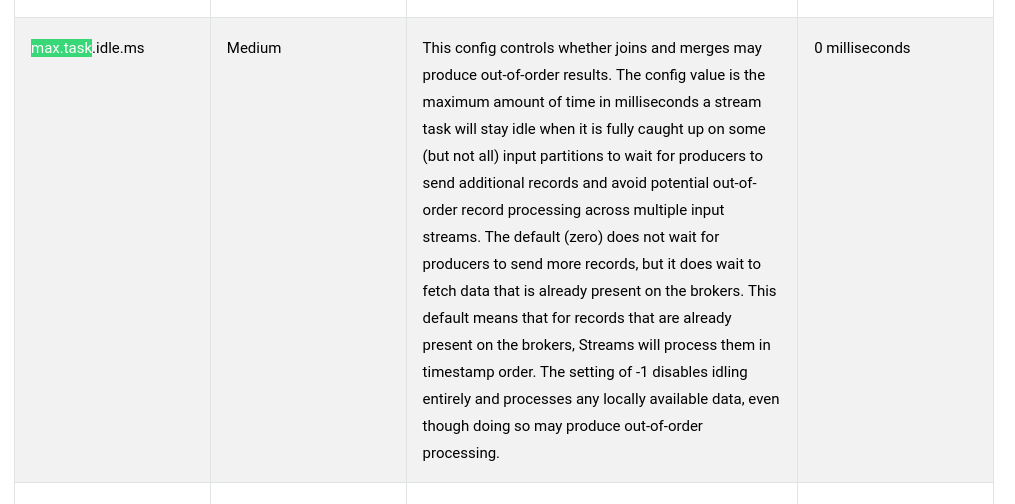

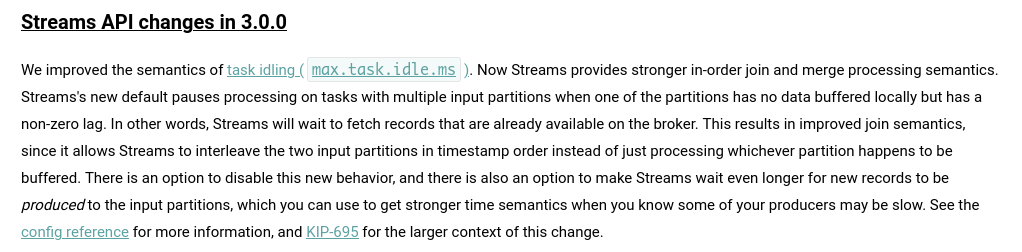

[jira] [Commented] (KAFKA-8315) Historical join issues

[ https://issues.apache.org/jira/browse/KAFKA-8315?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17377546#comment-17377546 ]

John Roesler commented on KAFKA-8315:

-

Hello again, all, this issue should be resolved in 3.0, via KAFKA-10091.

> Historical join issues

> --

>

> Key: KAFKA-8315

> URL: https://issues.apache.org/jira/browse/KAFKA-8315

> Project: Kafka

> Issue Type: Bug

> Components: streams

>Reporter: Andrew

>Assignee: John Roesler

>Priority: Major

> Fix For: 3.0.0

>

> Attachments: code.java

>

>

> The problem we are experiencing is that we cannot reliably perform simple

> joins over pre-populated kafka topics. This seems more apparent where one

> topic has records at less frequent record timestamp intervals than the other.

> An example of the issue is provided in this repository :

> [https://github.com/the4thamigo-uk/join-example]

>

> The only way to increase the period of historically joined records is to

> increase the grace period for the join windows, and this has repercussions

> when you extend it to a large period e.g. 2 years of minute-by-minute records.

> Related slack conversations :

> [https://confluentcommunity.slack.com/archives/C48AHTCUQ/p1556799561287300]

> [https://confluentcommunity.slack.com/archives/C48AHTCUQ/p1557733979453900]

>

> Research on this issue has gone through a few phases :

> 1) This issue was initially thought to be due to the inability to set the

> retention period for a join window via {{Materialized}}, i.e.:

> The documentation says to use `Materialized` not `JoinWindows.until()`

> ([https://kafka.apache.org/22/javadoc/org/apache/kafka/streams/kstream/JoinWindows.html#until-long-]),

> but there is nowhere to pass a `Materialized` instance to the join

> operation; it seems only the group operation supports this.

> This was considered to be a problem with the documentation not with the API

> and is addressed in [https://github.com/apache/kafka/pull/6664]

> 2) We then found an apparent issue in the code which would affect the

> partition that is selected to deliver the next record to the join. This would

> only be a problem for data that is out-of-order, and join-example uses data

> that is in order of timestamp in both topics. So this fix is thought not to

> affect join-example.

> This was considered to be an issue and is being addressed in

> [https://github.com/apache/kafka/pull/6719]

> 3) Further investigation using a crafted unit test seems to show that the

> partition-selection and ordering (PartitionGroup/RecordQueue) seems to work ok

> [https://github.com/the4thamigo-uk/kafka/commit/5121851491f2fd0471d8f3c49940175e23a26f1b]

> 4) The current assumption is that the issue is rooted in the way records are

> consumed from the topics :

> We have tried to set various options to suppress reads from the source topics

> but it doesn't seem to make any difference :