[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/22326 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220777535

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/execution/python/BatchEvalPythonExecSuite.scala

---

@@ -100,6 +105,29 @@ class BatchEvalPythonExecSuite extends SparkPlanTest

with SharedSQLContext {

}

assert(qualifiedPlanNodes.size == 1)

}

+

+ test("SPARK-25314: Python UDF refers to the attributes from more than

one child " +

--- End diff --

Got it, I use this for IDE mock python UDF, will do this in a follow up PR

with a new test suites in `org.apache.spark.sql.catalyst.optimizer`, revert in

2b6977d.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220777383

--- Diff: python/pyspark/sql/tests.py ---

@@ -552,6 +552,96 @@ def test_udf_in_filter_on_top_of_join(self):

df = left.crossJoin(right).filter(f("a", "b"))

self.assertEqual(df.collect(), [Row(a=1, b=1)])

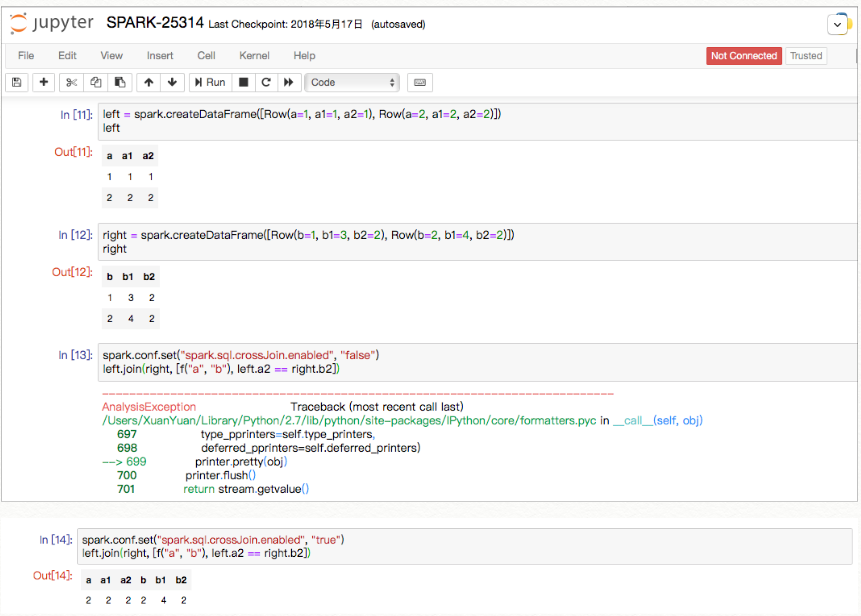

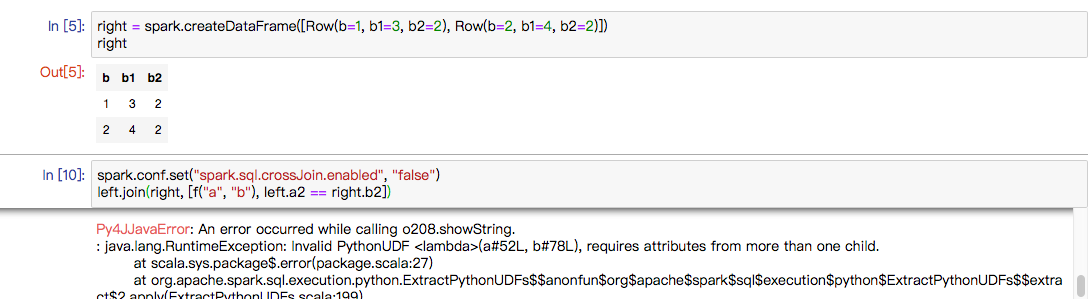

+def test_udf_in_join_condition(self):

+# regression test for SPARK-25314

+from pyspark.sql.functions import udf

+left = self.spark.createDataFrame([Row(a=1)])

+right = self.spark.createDataFrame([Row(b=1)])

+f = udf(lambda a, b: a == b, BooleanType())

+df = left.join(right, f("a", "b"))

+with self.assertRaisesRegexp(AnalysisException, 'Detected implicit

cartesian product'):

+df.collect()

+with self.sql_conf({"spark.sql.crossJoin.enabled": True}):

+self.assertEqual(df.collect(), [Row(a=1, b=1)])

+

+def test_udf_in_left_semi_join_condition(self):

+# regression test for SPARK-25314

+from pyspark.sql.functions import udf

+left = self.spark.createDataFrame([Row(a=1, a1=1, a2=1), Row(a=2,

a1=2, a2=2)])

+right = self.spark.createDataFrame([Row(b=1, b1=1, b2=1)])

+f = udf(lambda a, b: a == b, BooleanType())

+df = left.join(right, f("a", "b"), "leftsemi")

+with self.assertRaisesRegexp(AnalysisException, 'Detected implicit

cartesian product'):

+df.collect()

+with self.sql_conf({"spark.sql.crossJoin.enabled": True}):

+self.assertEqual(df.collect(), [Row(a=1, a1=1, a2=1)])

+

+def test_udf_and_filter_in_join_condition(self):

--- End diff --

Make sense, just for checking during implement, delete both in 2b6977d.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220770012

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/execution/python/BatchEvalPythonExecSuite.scala

---

@@ -100,6 +105,29 @@ class BatchEvalPythonExecSuite extends SparkPlanTest

with SharedSQLContext {

}

assert(qualifiedPlanNodes.size == 1)

}

+

+ test("SPARK-25314: Python UDF refers to the attributes from more than

one child " +

--- End diff --

This is still an end-to-end test, I don't think we need it

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220769336

--- Diff: python/pyspark/sql/tests.py ---

@@ -552,6 +552,96 @@ def test_udf_in_filter_on_top_of_join(self):

df = left.crossJoin(right).filter(f("a", "b"))

self.assertEqual(df.collect(), [Row(a=1, b=1)])

+def test_udf_in_join_condition(self):

+# regression test for SPARK-25314

+from pyspark.sql.functions import udf

+left = self.spark.createDataFrame([Row(a=1)])

+right = self.spark.createDataFrame([Row(b=1)])

+f = udf(lambda a, b: a == b, BooleanType())

+df = left.join(right, f("a", "b"))

+with self.assertRaisesRegexp(AnalysisException, 'Detected implicit

cartesian product'):

+df.collect()

+with self.sql_conf({"spark.sql.crossJoin.enabled": True}):

+self.assertEqual(df.collect(), [Row(a=1, b=1)])

+

+def test_udf_in_left_semi_join_condition(self):

+# regression test for SPARK-25314

+from pyspark.sql.functions import udf

+left = self.spark.createDataFrame([Row(a=1, a1=1, a2=1), Row(a=2,

a1=2, a2=2)])

+right = self.spark.createDataFrame([Row(b=1, b1=1, b2=1)])

+f = udf(lambda a, b: a == b, BooleanType())

+df = left.join(right, f("a", "b"), "leftsemi")

+with self.assertRaisesRegexp(AnalysisException, 'Detected implicit

cartesian product'):

+df.collect()

+with self.sql_conf({"spark.sql.crossJoin.enabled": True}):

+self.assertEqual(df.collect(), [Row(a=1, a1=1, a2=1)])

+

+def test_udf_and_filter_in_join_condition(self):

--- End diff --

This test (and the corresponding one for left semi join) is not very

useful. The filter in join condition will be pushed down so this test is

basically same as the `test_udf_in_join_condition`.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220628624

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/execution/python/BatchEvalPythonExecSuite.scala

---

@@ -100,6 +104,28 @@ class BatchEvalPythonExecSuite extends SparkPlanTest

with SharedSQLContext {

}

assert(qualifiedPlanNodes.size == 1)

}

+

+ test("SPARK-25314: Python UDF refers to the attributes from more than

one child " +

+"in join condition") {

+def dummyPythonUDFTest(): Unit = {

+ val df = Seq(("Hello", 4)).toDF("a", "b")

+ val df2 = Seq(("Hello", 4)).toDF("c", "d")

+ val joinDF = df.join(df2,

+dummyPythonUDF(col("a"), col("c")) === dummyPythonUDF(col("d"),

col("c")))

+ val qualifiedPlanNodes = joinDF.queryExecution.executedPlan.collect {

+case b: BatchEvalPythonExec => b

+ }

+ assert(qualifiedPlanNodes.size == 1)

+}

+// Test without spark.sql.crossJoin.enabled set

+val errMsg = intercept[AnalysisException] {

+ dummyPythonUDFTest()

+}

+assert(errMsg.getMessage.startsWith("Detected implicit cartesian

product"))

+// Test with spark.sql.crossJoin.enabled=true

+spark.conf.set("spark.sql.crossJoin.enabled", "true")

--- End diff --

Thanks, done in 7f66954.

```

So I'd prefer having one or 2 end-to-end tests and create a new suite

testing only the rule and the plan transformation, both for having lower

testing time and finer grained tests checking that the output plan is indeed

the expected one (not only checking the result of the query).

```

Make sense, will add a plan test for this rule.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220586456

--- Diff:

sql/core/src/test/scala/org/apache/spark/sql/execution/python/BatchEvalPythonExecSuite.scala

---

@@ -100,6 +104,28 @@ class BatchEvalPythonExecSuite extends SparkPlanTest

with SharedSQLContext {

}

assert(qualifiedPlanNodes.size == 1)

}

+

+ test("SPARK-25314: Python UDF refers to the attributes from more than

one child " +

+"in join condition") {

+def dummyPythonUDFTest(): Unit = {

+ val df = Seq(("Hello", 4)).toDF("a", "b")

+ val df2 = Seq(("Hello", 4)).toDF("c", "d")

+ val joinDF = df.join(df2,

+dummyPythonUDF(col("a"), col("c")) === dummyPythonUDF(col("d"),

col("c")))

+ val qualifiedPlanNodes = joinDF.queryExecution.executedPlan.collect {

+case b: BatchEvalPythonExec => b

+ }

+ assert(qualifiedPlanNodes.size == 1)

+}

+// Test without spark.sql.crossJoin.enabled set

+val errMsg = intercept[AnalysisException] {

+ dummyPythonUDFTest()

+}

+assert(errMsg.getMessage.startsWith("Detected implicit cartesian

product"))

+// Test with spark.sql.crossJoin.enabled=true

+spark.conf.set("spark.sql.crossJoin.enabled", "true")

--- End diff --

please use `withSQLConf`

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220576239

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,53 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * PythonUDF in join condition can not be evaluated, this rule will detect

the PythonUDF

+ * and pull them out from join condition. For python udf accessing

attributes from only one side,

+ * they would be pushed down by operation push down rules. If not(e.g.

user disables filter push

+ * down rules), we need to pull them out in this rule too.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // If condition expression contains python udf, it will be moved out

from

+ // the new join conditions. If join condition has python udf only,

it will be turned

+ // to cross join and the crossJoinEnable will be checked in

CheckCartesianProducts.

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+logWarning(s"The join condition:$condition of the join plan

contains " +

+ "PythonUDF only, it will be moved out and the join plan will be

turned to cross " +

+ s"join. This plan shows below:\n $j")

+None

+ } else {

+Some(rest.reduceLeft(And))

+ }

+ val newJoin = j.copy(condition = newCondition)

+ joinType match {

+case _: InnerLike => Filter(udf.reduceLeft(And), newJoin)

+case LeftSemi =>

+ Project(

+j.left.output.map(_.toAttribute),

+ Filter(udf.reduceLeft(And), newJoin.copy(joinType = Inner)))

--- End diff --

Thanks, done in d2739af.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220576188

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,53 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * PythonUDF in join condition can not be evaluated, this rule will detect

the PythonUDF

+ * and pull them out from join condition. For python udf accessing

attributes from only one side,

+ * they would be pushed down by operation push down rules. If not(e.g.

user disables filter push

+ * down rules), we need to pull them out in this rule too.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // If condition expression contains python udf, it will be moved out

from

+ // the new join conditions. If join condition has python udf only,

it will be turned

+ // to cross join and the crossJoinEnable will be checked in

CheckCartesianProducts.

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+logWarning(s"The join condition:$condition of the join plan

contains " +

+ "PythonUDF only, it will be moved out and the join plan will be

turned to cross " +

+ s"join. This plan shows below:\n $j")

--- End diff --

Got it, done in d2739af.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220576115

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,53 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * PythonUDF in join condition can not be evaluated, this rule will detect

the PythonUDF

+ * and pull them out from join condition. For python udf accessing

attributes from only one side,

+ * they would be pushed down by operation push down rules. If not(e.g.

user disables filter push

+ * down rules), we need to pull them out in this rule too.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // If condition expression contains python udf, it will be moved out

from

+ // the new join conditions. If join condition has python udf only,

it will be turned

+ // to cross join and the crossJoinEnable will be checked in

CheckCartesianProducts.

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

--- End diff --

Thanks, done in d2739af.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220576062

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,53 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * PythonUDF in join condition can not be evaluated, this rule will detect

the PythonUDF

+ * and pull them out from join condition. For python udf accessing

attributes from only one side,

+ * they would be pushed down by operation push down rules. If not(e.g.

user disables filter push

+ * down rules), we need to pull them out in this rule too.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // If condition expression contains python udf, it will be moved out

from

+ // the new join conditions. If join condition has python udf only,

it will be turned

--- End diff --

Make sense, duplicate with log. Done in d2739af.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220575840

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,53 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * PythonUDF in join condition can not be evaluated, this rule will detect

the PythonUDF

+ * and pull them out from join condition. For python udf accessing

attributes from only one side,

+ * they would be pushed down by operation push down rules. If not(e.g.

user disables filter push

--- End diff --

Thanks, done in d2739af.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220569284

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,53 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * PythonUDF in join condition can not be evaluated, this rule will detect

the PythonUDF

+ * and pull them out from join condition. For python udf accessing

attributes from only one side,

+ * they would be pushed down by operation push down rules. If not(e.g.

user disables filter push

+ * down rules), we need to pull them out in this rule too.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // If condition expression contains python udf, it will be moved out

from

+ // the new join conditions. If join condition has python udf only,

it will be turned

--- End diff --

I think we don't need here the second sentence, ie. the one startng with

`If join condition ...`

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220570791

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,53 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * PythonUDF in join condition can not be evaluated, this rule will detect

the PythonUDF

+ * and pull them out from join condition. For python udf accessing

attributes from only one side,

+ * they would be pushed down by operation push down rules. If not(e.g.

user disables filter push

+ * down rules), we need to pull them out in this rule too.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // If condition expression contains python udf, it will be moved out

from

+ // the new join conditions. If join condition has python udf only,

it will be turned

+ // to cross join and the crossJoinEnable will be checked in

CheckCartesianProducts.

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+logWarning(s"The join condition:$condition of the join plan

contains " +

+ "PythonUDF only, it will be moved out and the join plan will be

turned to cross " +

+ s"join. This plan shows below:\n $j")

--- End diff --

can we at least remove the whole plan from the warning? Plans can be pretty

big...

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220568484

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,53 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * PythonUDF in join condition can not be evaluated, this rule will detect

the PythonUDF

+ * and pull them out from join condition. For python udf accessing

attributes from only one side,

+ * they would be pushed down by operation push down rules. If not(e.g.

user disables filter push

--- End diff --

nits:

- `they are`

- missing space before `(`

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220570975

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,53 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * PythonUDF in join condition can not be evaluated, this rule will detect

the PythonUDF

+ * and pull them out from join condition. For python udf accessing

attributes from only one side,

+ * they would be pushed down by operation push down rules. If not(e.g.

user disables filter push

+ * down rules), we need to pull them out in this rule too.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // If condition expression contains python udf, it will be moved out

from

+ // the new join conditions. If join condition has python udf only,

it will be turned

+ // to cross join and the crossJoinEnable will be checked in

CheckCartesianProducts.

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+logWarning(s"The join condition:$condition of the join plan

contains " +

+ "PythonUDF only, it will be moved out and the join plan will be

turned to cross " +

+ s"join. This plan shows below:\n $j")

+None

+ } else {

+Some(rest.reduceLeft(And))

+ }

+ val newJoin = j.copy(condition = newCondition)

+ joinType match {

+case _: InnerLike => Filter(udf.reduceLeft(And), newJoin)

+case LeftSemi =>

+ Project(

+j.left.output.map(_.toAttribute),

+ Filter(udf.reduceLeft(And), newJoin.copy(joinType = Inner)))

--- End diff --

nit: indentation

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220570021

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,53 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * PythonUDF in join condition can not be evaluated, this rule will detect

the PythonUDF

+ * and pull them out from join condition. For python udf accessing

attributes from only one side,

+ * they would be pushed down by operation push down rules. If not(e.g.

user disables filter push

+ * down rules), we need to pull them out in this rule too.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // If condition expression contains python udf, it will be moved out

from

+ // the new join conditions. If join condition has python udf only,

it will be turned

+ // to cross join and the crossJoinEnable will be checked in

CheckCartesianProducts.

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

--- End diff --

nit: -> `splitConjunctivePredicates(condition.get).partition(...)` seems

more clear to me

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220568332

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

--- End diff --

Thanks, as our discussion in

https://github.com/apache/spark/pull/22326/files#r220518094.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220567623

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

+"PythonUDF, it will be moved out and the join plan will be turned

to cross " +

+s"join when its the only condition. This plan shows below:\n $j")

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+Option.empty

+ } else {

+Some(rest.reduceLeft(And))

+ }

+ val newJoin = j.copy(condition = newCondition)

+ joinType match {

+case _: InnerLike =>

+ Filter(udf.reduceLeft(And), newJoin)

+case LeftSemi =>

+ Project(

--- End diff --

Got it, thanks :)

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220567381

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

--- End diff --

Thanks, done in 87f0f50.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220567301

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

+"PythonUDF, it will be moved out and the join plan will be turned

to cross " +

+s"join when its the only condition. This plan shows below:\n $j")

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+Option.empty

+ } else {

+Some(rest.reduceLeft(And))

+ }

+ val newJoin = j.copy(condition = newCondition)

+ joinType match {

+case _: InnerLike =>

+ Filter(udf.reduceLeft(And), newJoin)

--- End diff --

Thanks, done in 87f0f50.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220567216

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

+"PythonUDF, it will be moved out and the join plan will be turned

to cross " +

+s"join when its the only condition. This plan shows below:\n $j")

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+Option.empty

--- End diff --

Thanks, done in 87f0f50.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220564636

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

+"PythonUDF, it will be moved out and the join plan will be turned

to cross " +

+s"join when its the only condition. This plan shows below:\n $j")

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+Option.empty

+ } else {

+Some(rest.reduceLeft(And))

+ }

+ val newJoin = j.copy(condition = newCondition)

+ joinType match {

+case _: InnerLike =>

+ Filter(udf.reduceLeft(And), newJoin)

+case LeftSemi =>

+ Project(

--- End diff --

ah, let's leave left anti join then, thanks for trying!

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220562279

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

+"PythonUDF, it will be moved out and the join plan will be turned

to cross " +

+s"join when its the only condition. This plan shows below:\n $j")

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+Option.empty

+ } else {

+Some(rest.reduceLeft(And))

+ }

+ val newJoin = j.copy(condition = newCondition)

+ joinType match {

+case _: InnerLike =>

+ Filter(udf.reduceLeft(And), newJoin)

+case LeftSemi =>

+ Project(

--- End diff --

I tried two ways to implement LeftAnti here:

1. Use the Except(join.left, left semi result, isAll=false) to simulate, it

is banned by strategy and actually also no plan for

Except.https://github.com/apache/spark/blob/89671a27e783d77d4bfaec3d422cc8dd468ef04c/sql/core/src/main/scala/org/apache/spark/sql/execution/SparkStrategies.scala#L557-L559

2. Also use cross join and filter to simulate, but maybe it can't reached

when there's only udf in anti join condition. Because after cross join, it's

hard to roll back to original status. UDF+ normal common condition can be

simulated by

```

Project(

j.left.output.map(_.toAttribute),

Filter(Not(udf.reduceLeft(And)),

newJoin.copy(joinType = Inner, condition = not(rest.reduceLeft(And)

```

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220526661

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

--- End diff --

No problem, I'll change it.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220525992

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

--- End diff --

Then shall we make it better? e.g. only log warning if it really becomes a

cross join, i.e. the join condition is none.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220524866

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

+"PythonUDF, it will be moved out and the join plan will be turned

to cross " +

+s"join when its the only condition. This plan shows below:\n $j")

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+Option.empty

+ } else {

+Some(rest.reduceLeft(And))

+ }

+ val newJoin = j.copy(condition = newCondition)

+ joinType match {

+case _: InnerLike =>

+ Filter(udf.reduceLeft(And), newJoin)

+case LeftSemi =>

+ Project(

--- End diff --

Let me try.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220524840

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

--- End diff --

Let me try.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220523238

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

--- End diff --

Can we keep this? As we discuss before, there's a little strange for user

to get a implicit cartesian product exception during python udf in join

condition, maybe left this log can give some clue. WDYT?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220522504

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

--- End diff --

```

can we then update the comment of this class in order to reflect what it

actually does?

```

I'll update the comment soon.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220518094

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

+"PythonUDF, it will be moved out and the join plan will be turned

to cross " +

+s"join when its the only condition. This plan shows below:\n $j")

+ val (udf, rest) =

+

condition.map(splitConjunctivePredicates).get.partition(hasPythonUDF)

+ val newCondition = if (rest.isEmpty) {

+Option.empty

+ } else {

+Some(rest.reduceLeft(And))

+ }

+ val newJoin = j.copy(condition = newCondition)

+ joinType match {

+case _: InnerLike =>

+ Filter(udf.reduceLeft(And), newJoin)

+case LeftSemi =>

+ Project(

--- End diff --

so we are simulating a left semi join here. Seems we can do the same thing

for left anti join.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220518059

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

--- End diff --

thanks for the explanation @cloud-fan , makes sense, can we then update the

comment of this class in order to reflect what it actually does?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220517587

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

+ logWarning(s"The join condition:$condition of the join plan contains

" +

--- End diff --

do we really need this warning? If it becomes cross join, users will get an

error anyway, if cross join is disabled.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220517383

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter

after join. If we pass

+// the plan here, it'll still get a an invalid PythonUDF

RuntimeException with message

+// `requires attributes from more than one child`, we throw

firstly here for better

+// readable information.

+throw new AnalysisException("Using PythonUDF in join condition of

join type" +

+ s" $joinType is not supported.")

+ }

+ // if condition expression contains python udf, it will be moved out

from

+ // the new join conditions, and the join type will be changed to

CrossJoin.

--- End diff --

the comment is outdated

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220516732

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

--- End diff --

This doesn't matter. We can't evaluate python udf in the join condition,

and need to pull it out, that's all.

For python udf accessing attributes from only one side, these would be

pushed down by other rules. If they don't (e.g. user disables filter pushdown

rule), we need to pull them out here, too. Anyway it's orthogonal to this rule.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220482709

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

--- End diff --

I see no check that this requires attributes from both sides, shall we add

it? I see that if this is not true the predicate should have been already

pushed down, but an additional sanity check is worth IMHO

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22326: [SPARK-25314][SQL] Fix Python UDF accessing attri...

Github user mgaido91 commented on a diff in the pull request:

https://github.com/apache/spark/pull/22326#discussion_r220482278

--- Diff:

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/joins.scala

---

@@ -152,3 +153,51 @@ object EliminateOuterJoin extends Rule[LogicalPlan]

with PredicateHelper {

if (j.joinType == newJoinType) f else Filter(condition,

j.copy(joinType = newJoinType))

}

}

+

+/**

+ * Correctly handle PythonUDF which need access both side of join side by

changing the new join

+ * type to Cross.

+ */

+object PullOutPythonUDFInJoinCondition extends Rule[LogicalPlan] with

PredicateHelper {

+ def hasPythonUDF(expression: Expression): Boolean = {

+expression.collectFirst { case udf: PythonUDF => udf }.isDefined

+ }

+

+ override def apply(plan: LogicalPlan): LogicalPlan = plan transformUp {

+case j @ Join(_, _, joinType, condition)

+if condition.isDefined && hasPythonUDF(condition.get) =>

+ if (!joinType.isInstanceOf[InnerLike] && joinType != LeftSemi) {

+// The current strategy only support InnerLike and LeftSemi join

because for other type,

+// it breaks SQL semantic if we run the join condition as a filter