[GitHub] spark issue #22674: [SPARK-25680][SQL] SQL execution listener shouldn't happ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22674 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22674: [SPARK-25680][SQL] SQL execution listener shouldn't happ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22674 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/97140/ Test FAILed.

[GitHub] spark issue #22674: [SPARK-25680][SQL] SQL execution listener shouldn't happ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22674 **[Test build #97140 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97140/testReport)** for PR 22674 at commit [`a456226`](https://github.com/apache/spark/commit/a4562264c45c24a88f4d508c2d34d4e7aed50631). * This patch **fails Spark unit tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark pull request #22656: [SPARK-25669][SQL] Check CSV header only when it ...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22656#discussion_r223566522

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala ---

@@ -505,7 +505,8 @@ class DataFrameReader private[sql](sparkSession: SparkSession) extends Logging {

val actualSchema =

StructType(schema.filterNot(_.name == parsedOptions.columnNameOfCorruptRecord))

-val linesWithoutHeader: RDD[String] = maybeFirstLine.map { firstLine =>

+val linesWithoutHeader = if (parsedOptions.headerFlag && maybeFirstLine.isDefined) {

--- End diff --

LGTM, but it really needs some refactoring. Let me give it a shot.
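The change under review strips the header only when the `header` option is set and a first line exists. A minimal sketch of that control flow, using a plain `Seq[String]` in place of the `RDD[String]` so it runs without Spark. The function name `dropHeaderLines` is hypothetical, and filtering out every line equal to the header (not just the first one) is an assumption about how the surrounding code treats repeated header lines.

```scala
// Hypothetical, simplified model of the header handling in the diff above.
// A Seq[String] stands in for the RDD[String] used by DataFrameReader.
def dropHeaderLines(lines: Seq[String], headerFlag: Boolean): Seq[String] = {
  val maybeFirstLine = lines.headOption
  maybeFirstLine match {
    // Only treat the first line as a header when the header option is enabled.
    case Some(header) if headerFlag =>
      // Assumption: lines identical to the header (e.g. repeated at the start
      // of each input file) are filtered out as well.
      lines.filterNot(_ == header)
    case _ =>
      // header=false, or no input at all: keep every line unchanged.
      lines
  }
}

val csv = Seq("name,age", "alice,30", "bob,25")
println(dropHeaderLines(csv, headerFlag = true))  // List(alice,30, bob,25)
println(dropHeaderLines(csv, headerFlag = false)) // List(name,age, alice,30, bob,25)
```

A `match` with a guard keeps both paths explicit and avoids pairing `isDefined` with a later `get`.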

[GitHub] spark pull request #22675: [SPARK-25347][ML][DOC] Spark datasource for image...

Github user mengxr commented on a diff in the pull request:

https://github.com/apache/spark/pull/22675#discussion_r223566032

--- Diff: docs/ml-datasource.md ---

@@ -0,0 +1,51 @@

+---

+layout: global

+title: Data sources

+displayTitle: Data sources

+---

+

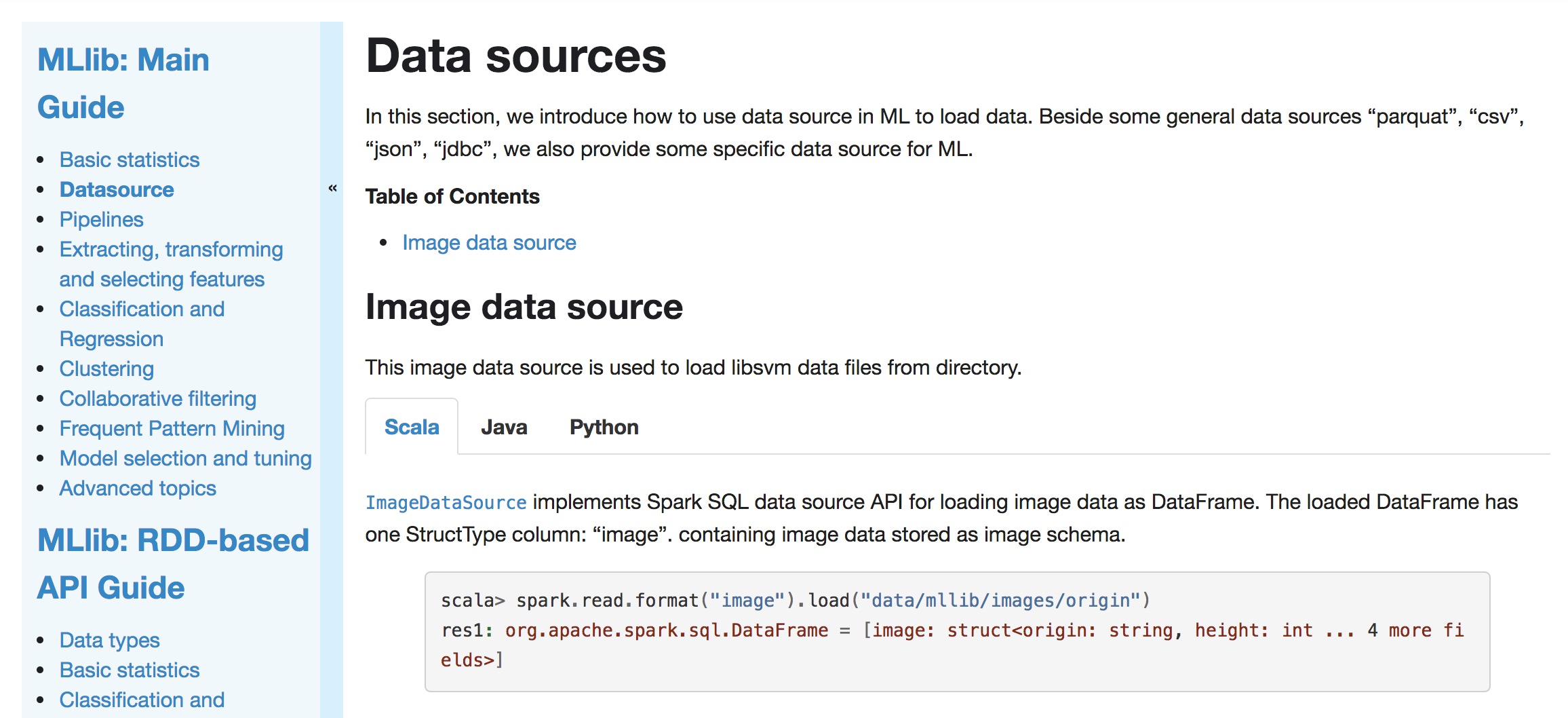

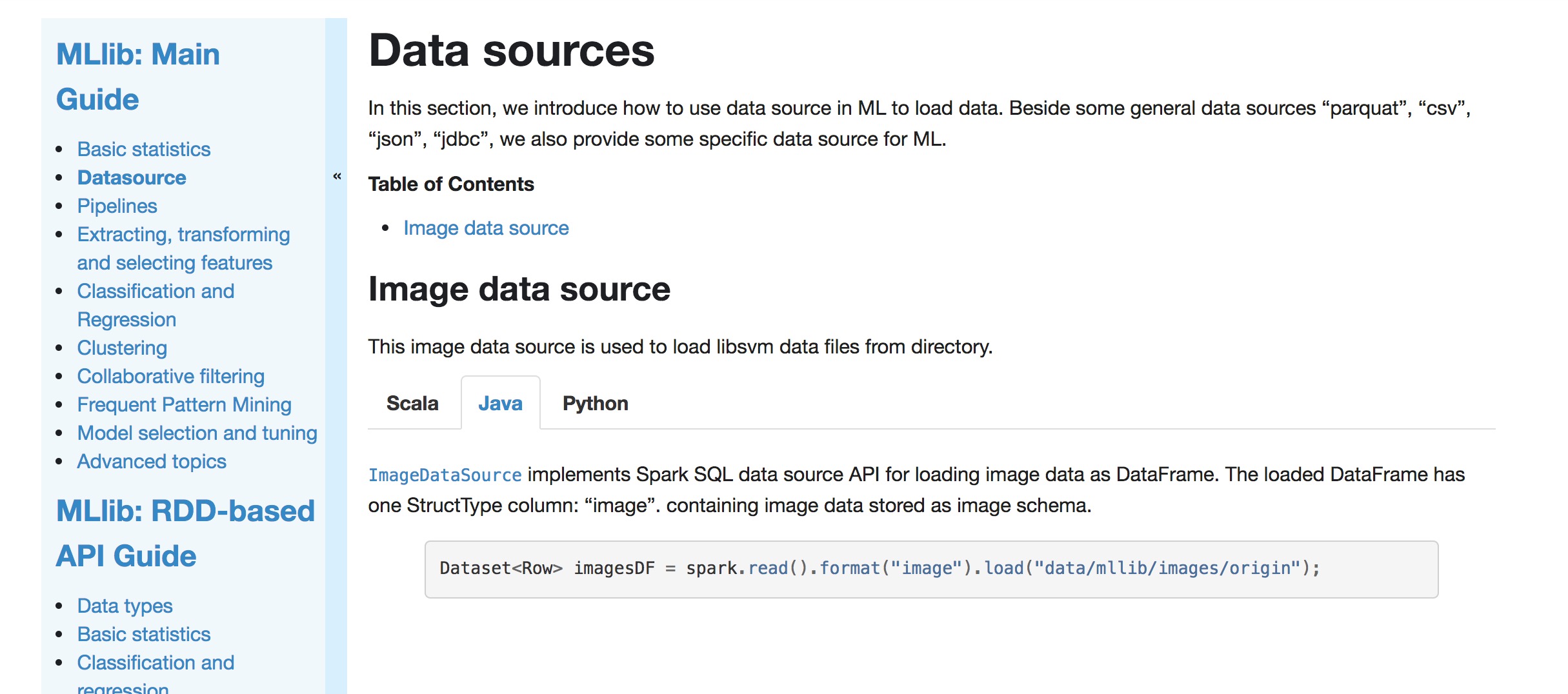

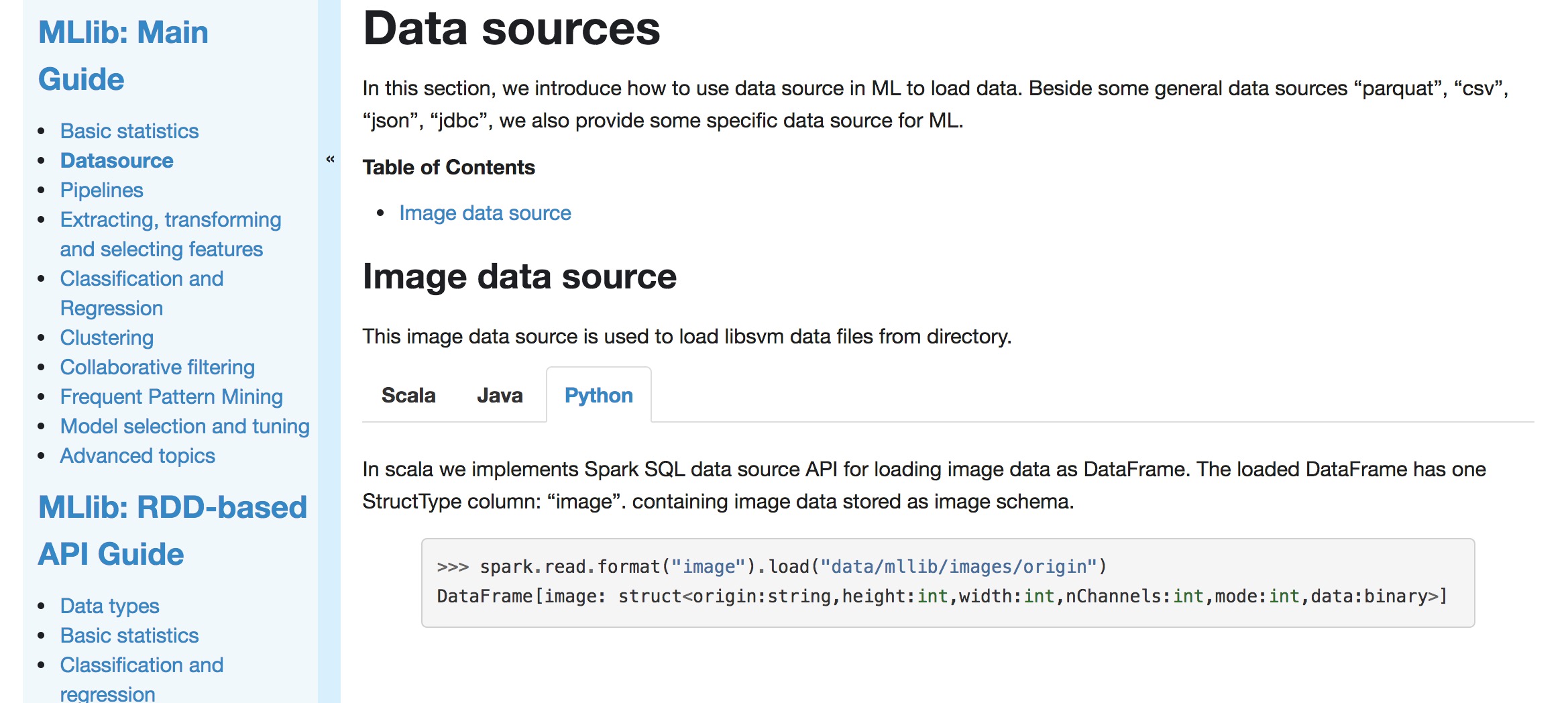

+In this section, we introduce how to use data source in ML to load data.

+Beside some general data sources "parquat", "csv", "json", "jdbc", we also provide some specific data source for ML.

+

+**Table of Contents**

+

+* This will become a table of contents (this text will be scraped).

+{:toc}

+

+## Image data source

+

+This image data source is used to load libsvm data files from directory.

+

+

+

+[`ImageDataSource`](api/scala/index.html#org.apache.spark.ml.source.image.ImageDataSource)

+implements Spark SQL data source API for loading image data as DataFrame.

+The loaded DataFrame has one StructType column: "image". containing image data stored as image schema.

+

+{% highlight scala %}

+scala> spark.read.format("image").load("data/mllib/images/origin")

+res1: org.apache.spark.sql.DataFrame = [image: struct]

+{% endhighlight %}

+

+

+

+[`ImageDataSource`](api/java/org/apache/spark/ml/source/image/ImageDataSource.html)

--- End diff --

Usually it depends on how important the use case is. For example, CSV was created as an external data source and later merged into Spark. See https://issues.apache.org/jira/browse/SPARK-21866?focusedCommentId=16148268=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-16148268.
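The quoted doc says the image source yields a single struct column, `image`. As a rough illustration only, the case class below models one value of that column in plain Scala. The field names assume the layout of Spark's `ImageSchema` (origin, height, width, nChannels, mode, data); `ImageRow` and `hasExpectedSize` are hypothetical names, not Spark API.

```scala
// Hypothetical plain-Scala model of one value in the "image" struct column.
// Field names follow Spark's ImageSchema as an assumption; this is not Spark API.
final case class ImageRow(
    origin: String,    // path or URI of the source file
    height: Int,       // number of rows
    width: Int,        // number of columns
    nChannels: Int,    // e.g. 1 for grayscale, 3 for color, 4 with alpha
    mode: Int,         // OpenCV-compatible type code
    data: Array[Byte]) // raw pixel bytes

// For 8-bit images the byte count should equal height * width * nChannels.
def hasExpectedSize(img: ImageRow): Boolean =
  img.data.length == img.height * img.width * img.nChannels

// mode = 16 is assumed to be OpenCV's CV_8UC3 (8-bit, 3 channels).
val tiny = ImageRow("file:/tmp/tiny.png", height = 2, width = 2, nChannels = 3,
  mode = 16, data = Array.fill[Byte](12)(0))
println(hasExpectedSize(tiny)) // true
```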

[GitHub] spark issue #22623: [SPARK-25636][CORE] spark-submit cuts off the failure re...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22623 **[Test build #97143 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97143/testReport)** for PR 22623 at commit [`131b3af`](https://github.com/apache/spark/commit/131b3af7ab286d2f001cbf0638cd5dbe7653a420).

[GitHub] spark issue #22661: [SPARK-25664][SQL][TEST] Refactor JoinBenchmark to use m...

Github user wangyum commented on the issue: https://github.com/apache/spark/pull/22661 cc @dongjoon-hyun

[GitHub] spark issue #22663: [SPARK-25490][SQL][TEST] Refactor KryoBenchmark to use m...

Github user gengliangwang commented on the issue: https://github.com/apache/spark/pull/22663 @dongjoon-hyun Are the changes OK with you?

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user liyinan926 commented on a diff in the pull request: https://github.com/apache/spark/pull/21669#discussion_r223560424 --- Diff: resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesConf.scala --- @@ -47,6 +50,13 @@ private[spark] case class KubernetesExecutorSpecificConf( driverPod: Option[Pod]) extends KubernetesRoleSpecificConf +/* + * Structure containing metadata for HADOOP_CONF_DIR customization + */ +private[spark] case class HadoopConfSpecConf( --- End diff -- Let's just call it `HadoopConfSpec`.
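The review asks for `HadoopConfSpecConf` to become `HadoopConfSpec`, and a later comment asks for the `KubernetesConf` field to be named `hadoopConfSpec`. A minimal sketch of the renamed type, assuming only the two fields the driver feature step reads in the diff (`hadoopConfDir` and `hadoopConfigMapName`). The factory `hadoopConfSpecFromSources` and its arguments are hypothetical.

```scala
// Sketch of the renamed case class; the two fields mirror what
// KerberosConfDriverFeatureStep reads in the diff.
final case class HadoopConfSpec(
    hadoopConfDir: Option[String],       // local HADOOP_CONF_DIR, if set
    hadoopConfigMapName: Option[String]) // pre-defined ConfigMap, if set

// Hypothetical factory: build a spec only when at least one source exists,
// so a caller can hold an Option[HadoopConfSpec] named hadoopConfSpec.
def hadoopConfSpecFromSources(
    env: Map[String, String],
    configMapName: Option[String]): Option[HadoopConfSpec] = {
  val confDir = env.get("HADOOP_CONF_DIR")
  if (confDir.isDefined || configMapName.isDefined) {
    Some(HadoopConfSpec(confDir, configMapName))
  } else {
    None
  }
}

val hadoopConfSpec =
  hadoopConfSpecFromSources(Map("HADOOP_CONF_DIR" -> "/opt/hadoop/conf"), None)
println(hadoopConfSpec) // Some(HadoopConfSpec(Some(/opt/hadoop/conf),None))
```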

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user liyinan926 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21669#discussion_r223560209

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/hadooputils/HadoopBootstrapUtil.scala

---

@@ -0,0 +1,260 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.spark.deploy.k8s.features.hadooputils

+

+import java.io.File

+import java.nio.charset.StandardCharsets

+

+import scala.collection.JavaConverters._

+

+import com.google.common.io.Files

+import io.fabric8.kubernetes.api.model._

+

+import org.apache.spark.deploy.k8s.Constants._

+import org.apache.spark.deploy.k8s.SparkPod

+

+private[spark] object HadoopBootstrapUtil {

+

+ /**

+* Mounting the DT secret for both the Driver and the executors

+*

+* @param dtSecretName Name of the secret that stores the Delegation Token

+* @param dtSecretItemKey Name of the Item Key storing the Delegation Token

+* @param userName Name of the SparkUser to set SPARK_USER

+* @param maybeFileLocation Optional Location of the krb5 file

+* @param newKrb5ConfName Optional location of the ConfigMap for Krb5

+* @param maybeKrb5ConfName Optional name of ConfigMap for Krb5

+* @param pod Input pod to be appended to

+* @return a modified SparkPod

+*/

+ def bootstrapKerberosPod(

+dtSecretName: String,

+dtSecretItemKey: String,

+userName: String,

+maybeFileLocation: Option[String],

+newKrb5ConfName: Option[String],

+maybeKrb5ConfName: Option[String],

--- End diff --

Rename this to `existingKrb5ConfName`.
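With the suggested rename, the parameter names make clear which krb5 ConfigMap already exists and which one the submission creates. The sketch below models only the volume-selection step of `bootstrapKerberosPod` in plain Scala. `Krb5Volume` and its subclasses are hypothetical stand-ins for the fabric8 `Volume` objects, and returning an `Option` (instead of calling `.get` on the fallback, which throws `NoSuchElementException` when neither source is set) is a suggested variation, not what the diff does.

```scala
import java.nio.file.Paths

// Hypothetical stand-ins for the fabric8 Volume objects built in the diff.
sealed trait Krb5Volume
final case class ExistingConfigMapVolume(configMapName: String) extends Krb5Volume
final case class NewConfigMapVolume(configMapName: String, itemKey: String) extends Krb5Volume

def krb5Volume(
    existingKrb5ConfName: Option[String],
    krb5FileLocation: Option[String],
    newKrb5ConfName: Option[String]): Option[Krb5Volume] = {
  // A pre-defined ConfigMap wins, matching the getOrElse order in the diff.
  val fromExisting: Option[Krb5Volume] =
    existingKrb5ConfName.map(name => ExistingConfigMapVolume(name))
  // Otherwise a new ConfigMap is keyed by the krb5 file's base name.
  val fromFile: Option[Krb5Volume] = for {
    location <- krb5FileLocation
    confName <- newKrb5ConfName
  } yield NewConfigMapVolume(confName, Paths.get(location).getFileName.toString)
  fromExisting.orElse(fromFile)
}

println(krb5Volume(None, Some("/etc/krb5.conf"), Some("spark-krb5")))
// Some(NewConfigMapVolume(spark-krb5,krb5.conf))
```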

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user liyinan926 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21669#discussion_r223557398

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesConf.scala

---

@@ -61,7 +71,16 @@ private[spark] case class KubernetesConf[T <: KubernetesRoleSpecificConf](

roleSecretEnvNamesToKeyRefs: Map[String, String],

roleEnvs: Map[String, String],

roleVolumes: Iterable[KubernetesVolumeSpec[_ <: KubernetesVolumeSpecificConf]],

-sparkFiles: Seq[String]) {

+sparkFiles: Seq[String],

+hadoopConfDir: Option[HadoopConfSpecConf]) {

--- End diff --

Can you rename this parameter to `hadoopConfSpec`?

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user liyinan926 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21669#discussion_r223561140

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/KerberosConfDriverFeatureStep.scala

---

@@ -0,0 +1,182 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.spark.deploy.k8s.features

+

+import io.fabric8.kubernetes.api.model.HasMetadata

+

+import org.apache.spark.deploy.SparkHadoopUtil

+import org.apache.spark.deploy.k8s.{KubernetesConf, KubernetesUtils, SparkPod}

+import org.apache.spark.deploy.k8s.Config._

+import org.apache.spark.deploy.k8s.Constants._

+import org.apache.spark.deploy.k8s.KubernetesDriverSpecificConf

+import org.apache.spark.deploy.k8s.features.hadooputils._

+import org.apache.spark.internal.Logging

+

+ /**

+ * Runs the necessary Hadoop-based logic based on Kerberos configs and the presence of the

+ * HADOOP_CONF_DIR. This runs various bootstrap methods defined in HadoopBootstrapUtil.

+ */

+private[spark] class KerberosConfDriverFeatureStep(

+ kubernetesConf: KubernetesConf[KubernetesDriverSpecificConf])

+ extends KubernetesFeatureConfigStep with Logging {

+

+ require(kubernetesConf.hadoopConfDir.isDefined,

+ "Ensure that HADOOP_CONF_DIR is defined either via env or a pre-defined ConfigMap")

+ private val hadoopConfDirSpec = kubernetesConf.hadoopConfDir.get

+ private val conf = kubernetesConf.sparkConf

+ private val maybePrincipal = conf.get(org.apache.spark.internal.config.PRINCIPAL)

+ private val maybeKeytab = conf.get(org.apache.spark.internal.config.KEYTAB)

+ private val maybeExistingSecretName = conf.get(KUBERNETES_KERBEROS_DT_SECRET_NAME)

+ private val maybeExistingSecretItemKey =

+ conf.get(KUBERNETES_KERBEROS_DT_SECRET_ITEM_KEY)

+ private val maybeKrb5File =

+ conf.get(KUBERNETES_KERBEROS_KRB5_FILE)

+ private val maybeKrb5CMap =

--- End diff --

Can we call this `existingKrb5ConfigMap`?

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user liyinan926 commented on a diff in the pull request: https://github.com/apache/spark/pull/21669#discussion_r223560748 --- Diff: resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/KubernetesConf.scala --- @@ -47,6 +50,13 @@ private[spark] case class KubernetesExecutorSpecificConf( driverPod: Option[Pod]) extends KubernetesRoleSpecificConf +/* + * Structure containing metadata for HADOOP_CONF_DIR customization + */ +private[spark] case class HadoopConfSpecConf( --- End diff -- Can you create a similar one for krb5 and call it `Krb5ConfSpec`, which contains an optional krb5 file location or the name of a pre-defined krb5 ConfigMap? Then you can use that `Krb5ConfSpec` in places where you use both the file location and ConfigMap as parameters.
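One possible shape for the suggested `Krb5ConfSpec`, sketched under the assumption that it simply pairs the two optional krb5 sources and rejects setting both (the rule the diff enforces with `requireNandDefined`). The `describe` helper is hypothetical and only shows how a single spec parameter can replace the separate file-location and ConfigMap parameters.

```scala
// Hypothetical shape for the Krb5ConfSpec suggested in the review.
final case class Krb5ConfSpec(
    krb5File: Option[String],            // local krb5.conf to upload as a new ConfigMap
    krb5ConfigMapName: Option[String]) { // pre-defined ConfigMap holding krb5.conf
  require(!(krb5File.isDefined && krb5ConfigMapName.isDefined),
    "Specify either a local krb5 file or an existing krb5 ConfigMap, not both")
}

// Callers take one Krb5ConfSpec instead of two Option[String] parameters.
def describe(spec: Krb5ConfSpec): String = spec match {
  case Krb5ConfSpec(Some(file), _) => s"create a ConfigMap from $file"
  case Krb5ConfSpec(_, Some(name)) => s"mount the existing ConfigMap $name"
  case _                           => "no krb5 configuration"
}

println(describe(Krb5ConfSpec(None, Some("krb5-cm")))) // mount the existing ConfigMap krb5-cm
```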

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user liyinan926 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21669#discussion_r223558019

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/hadooputils/HadoopBootstrapUtil.scala

---

@@ -0,0 +1,260 @@

+package org.apache.spark.deploy.k8s.features.hadooputils

+

+import java.io.File

+import java.nio.charset.StandardCharsets

+

+import scala.collection.JavaConverters._

+

+import com.google.common.io.Files

+import io.fabric8.kubernetes.api.model._

+

+import org.apache.spark.deploy.k8s.Constants._

+import org.apache.spark.deploy.k8s.SparkPod

+

+private[spark] object HadoopBootstrapUtil {

+

+ /**

+* Mounting the DT secret for both the Driver and the executors

+*

+* @param dtSecretName Name of the secret that stores the Delegation Token

+* @param dtSecretItemKey Name of the Item Key storing the Delegation Token

+* @param userName Name of the SparkUser to set SPARK_USER

+* @param maybeFileLocation Optional Location of the krb5 file

+* @param newKrb5ConfName Optional location of the ConfigMap for Krb5

+* @param maybeKrb5ConfName Optional name of ConfigMap for Krb5

+* @param pod Input pod to be appended to

+* @return a modified SparkPod

+*/

+ def bootstrapKerberosPod(

+dtSecretName: String,

+dtSecretItemKey: String,

+userName: String,

+maybeFileLocation: Option[String],

+newKrb5ConfName: Option[String],

+maybeKrb5ConfName: Option[String],

+pod: SparkPod) : SparkPod = {

+

+val maybePreConfigMapVolume = maybeKrb5ConfName.map { kconf =>

+ new VolumeBuilder()

+.withName(KRB_FILE_VOLUME)

+.withNewConfigMap()

+ .withName(kconf)

+ .endConfigMap()

+.build() }

+

+val maybeCreateConfigMapVolume = for {

+ fileLocation <- maybeFileLocation

+ krb5ConfName <- newKrb5ConfName

+ } yield {

+ val krb5File = new File(fileLocation)

+ val fileStringPath = krb5File.toPath.getFileName.toString

+ new VolumeBuilder()

+.withName(KRB_FILE_VOLUME)

+.withNewConfigMap()

+.withName(krb5ConfName)

+.withItems(new KeyToPathBuilder()

+ .withKey(fileStringPath)

+ .withPath(fileStringPath)

+ .build())

+.endConfigMap()

+.build()

+}

+

+ // Breaking up Volume Creation for clarity

+ val configMapVolume = maybePreConfigMapVolume.getOrElse(

+ maybeCreateConfigMapVolume.get)

+

+ val kerberizedPod = new PodBuilder(pod.pod)

+ .editOrNewSpec()

+.addNewVolume()

+ .withName(SPARK_APP_HADOOP_SECRET_VOLUME_NAME)

+ .withNewSecret()

+.withSecretName(dtSecretName)

+.endSecret()

+ .endVolume()

+.addNewVolumeLike(configMapVolume)

+ .endVolume()

+.endSpec()

+.build()

+

+ val kerberizedContainer = new ContainerBuilder(pod.container)

+ .addNewVolumeMount()

+.withName(SPARK_APP_HADOOP_SECRET_VOLUME_NAME)

+.withMountPath(SPARK_APP_HADOOP_CREDENTIALS_BASE_DIR)

+.endVolumeMount()

+ .addNewVolumeMount()

+.withName(KRB_FILE_VOLUME)

+.withMountPath(KRB_FILE_DIR_PATH + "/krb5.conf")

+.withSubPath("krb5.conf")

+.endVolumeMount()

+ .addNewEnv()

+.withName(ENV_HADOOP_TOKEN_FILE_LOCATION)

+.withValue(s"$SPARK_APP_HADOOP_CREDENTIALS_BASE_DIR/$dtSecretItemKey")

+.endEnv()

+ .addNewEnv()

+.withName(ENV_SPARK_USER)

+.withValue(userName)

+.endEnv()

+ .build()

+ SparkPod(kerberizedPod, kerberizedContainer)

+ }

+

+ /**

+* setting

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user liyinan926 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21669#discussion_r223558932

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/Constants.scala

---

@@ -78,4 +80,29 @@ private[spark] object Constants {

val KUBERNETES_MASTER_INTERNAL_URL = "https://kubernetes.default.svc"

val DRIVER_CONTAINER_NAME = "spark-kubernetes-driver"

val MEMORY_OVERHEAD_MIN_MIB = 384L

+

+ // Hadoop Configuration

+ val HADOOP_FILE_VOLUME = "hadoop-properties"

+ val KRB_FILE_VOLUME = "krb5-file"

+ val HADOOP_CONF_DIR_PATH = "/opt/hadoop/conf"

+ val KRB_FILE_DIR_PATH = "/etc"

+ val ENV_HADOOP_CONF_DIR = "HADOOP_CONF_DIR"

+ val HADOOP_CONFIG_MAP_NAME =

+"spark.kubernetes.executor.hadoopConfigMapName"

+ val KRB5_CONFIG_MAP_NAME =

+"spark.kubernetes.executor.krb5ConfigMapName"

+

+ // Kerberos Configuration

+ val KERBEROS_DELEGEGATION_TOKEN_SECRET_NAME = "delegation-tokens"

+ val KERBEROS_KEYTAB_SECRET_NAME =

--- End diff --

Looks like this is used as the name of the secret storing the DT, not the keytab. So please rename it.

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user liyinan926 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21669#discussion_r223561204

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/KerberosConfDriverFeatureStep.scala

---

@@ -0,0 +1,182 @@

+package org.apache.spark.deploy.k8s.features

+

+import io.fabric8.kubernetes.api.model.HasMetadata

+

+import org.apache.spark.deploy.SparkHadoopUtil

+import org.apache.spark.deploy.k8s.{KubernetesConf, KubernetesUtils, SparkPod}

+import org.apache.spark.deploy.k8s.Config._

+import org.apache.spark.deploy.k8s.Constants._

+import org.apache.spark.deploy.k8s.KubernetesDriverSpecificConf

+import org.apache.spark.deploy.k8s.features.hadooputils._

+import org.apache.spark.internal.Logging

+

+ /**

+ * Runs the necessary Hadoop-based logic based on Kerberos configs and the presence of the

+ * HADOOP_CONF_DIR. This runs various bootstrap methods defined in HadoopBootstrapUtil.

+ */

+private[spark] class KerberosConfDriverFeatureStep(

+ kubernetesConf: KubernetesConf[KubernetesDriverSpecificConf])

+ extends KubernetesFeatureConfigStep with Logging {

+

+ require(kubernetesConf.hadoopConfDir.isDefined,

+ "Ensure that HADOOP_CONF_DIR is defined either via env or a pre-defined ConfigMap")

+ private val hadoopConfDirSpec = kubernetesConf.hadoopConfDir.get

+ private val conf = kubernetesConf.sparkConf

+ private val maybePrincipal = conf.get(org.apache.spark.internal.config.PRINCIPAL)

--- End diff --

Can we avoid adding the prefix `maybe` to the variable names? They make the variables unnecessarily longer without really improving readability much.
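A sketch of how the validations in this file could read without the `maybe` prefix, since the `Option` type already signals that a value may be absent. `requireNandDefined` and `requireBothOrNeitherDefined` below are hypothetical local re-implementations of the semantics implied by the diff's `KubernetesUtils` calls (not copies of that code), and `KerberosOptions` is an illustrative name.

```scala
// Hypothetical helpers mirroring how the diff uses the KubernetesUtils checks.
def requireNandDefined(a: Option[_], b: Option[_], errMessage: String): Unit =
  require(!(a.isDefined && b.isDefined), errMessage)

def requireBothOrNeitherDefined(
    first: Option[_],
    second: Option[_],
    errMessageWhenFirstIsMissing: String,
    errMessageWhenSecondIsMissing: String): Unit = {
  require(first.isDefined || second.isEmpty, errMessageWhenFirstIsMissing)
  require(second.isDefined || first.isEmpty, errMessageWhenSecondIsMissing)
}

// Shorter names: Option already tells the reader the value may be absent.
final case class KerberosOptions(
    principal: Option[String],
    keytab: Option[String],
    krb5File: Option[String],
    krb5ConfigMap: Option[String]) {
  requireNandDefined(krb5File, krb5ConfigMap,
    "Do not specify both a krb5 local file and a krb5 ConfigMap")
  requireBothOrNeitherDefined(keytab, principal,
    "If a Kerberos principal is specified you must also specify a Kerberos keytab",
    "If a Kerberos keytab is specified you must also specify a Kerberos principal")
}

val missingKeytab = scala.util.Try(KerberosOptions(Some("alice@EXAMPLE.COM"), None, None, None))
println(missingKeytab.failed.get.getMessage)
// requirement failed: If a Kerberos principal is specified you must also specify a Kerberos keytab
```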

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user liyinan926 commented on a diff in the pull request:

https://github.com/apache/spark/pull/21669#discussion_r223561016

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/KerberosConfDriverFeatureStep.scala

---

@@ -0,0 +1,182 @@

+package org.apache.spark.deploy.k8s.features

+

+import io.fabric8.kubernetes.api.model.HasMetadata

+

+import org.apache.spark.deploy.SparkHadoopUtil

+import org.apache.spark.deploy.k8s.{KubernetesConf, KubernetesUtils, SparkPod}

+import org.apache.spark.deploy.k8s.Config._

+import org.apache.spark.deploy.k8s.Constants._

+import org.apache.spark.deploy.k8s.KubernetesDriverSpecificConf

+import org.apache.spark.deploy.k8s.features.hadooputils._

+import org.apache.spark.internal.Logging

+

+ /**

+ * Runs the necessary Hadoop-based logic based on Kerberos configs and the presence of the

+ * HADOOP_CONF_DIR. This runs various bootstrap methods defined in HadoopBootstrapUtil.

+ */

+private[spark] class KerberosConfDriverFeatureStep(

+ kubernetesConf: KubernetesConf[KubernetesDriverSpecificConf])

+ extends KubernetesFeatureConfigStep with Logging {

+

+ require(kubernetesConf.hadoopConfDir.isDefined,

+ "Ensure that HADOOP_CONF_DIR is defined either via env or a pre-defined ConfigMap")

+ private val hadoopConfDirSpec = kubernetesConf.hadoopConfDir.get

+ private val conf = kubernetesConf.sparkConf

+ private val maybePrincipal = conf.get(org.apache.spark.internal.config.PRINCIPAL)

+ private val maybeKeytab = conf.get(org.apache.spark.internal.config.KEYTAB)

+ private val maybeExistingSecretName = conf.get(KUBERNETES_KERBEROS_DT_SECRET_NAME)

+ private val maybeExistingSecretItemKey =

+ conf.get(KUBERNETES_KERBEROS_DT_SECRET_ITEM_KEY)

+ private val maybeKrb5File =

+ conf.get(KUBERNETES_KERBEROS_KRB5_FILE)

+ private val maybeKrb5CMap =

+ conf.get(KUBERNETES_KERBEROS_KRB5_CONFIG_MAP)

+ private val kubeTokenManager = kubernetesConf.tokenManager(conf,

+ SparkHadoopUtil.get.newConfiguration(conf))

+ private val isKerberosEnabled =

+ (hadoopConfDirSpec.hadoopConfDir.isDefined && kubeTokenManager.isSecurityEnabled) ||

+ (hadoopConfDirSpec.hadoopConfigMapName.isDefined &&

+ (maybeKrb5File.isDefined || maybeKrb5CMap.isDefined))

+

+ require(maybeKeytab.isEmpty || isKerberosEnabled,

+ "You must enable Kerberos support if you are specifying a Kerberos Keytab")

+

+ require(maybeExistingSecretName.isEmpty || isKerberosEnabled,

+ "You must enable Kerberos support if you are specifying a Kerberos Secret")

+

+ require((maybeKrb5File.isEmpty || maybeKrb5CMap.isEmpty) || isKerberosEnabled,

+ "You must specify either a krb5 file location or a ConfigMap with a krb5 file")

+

+ KubernetesUtils.requireNandDefined(

+ maybeKrb5File,

+ maybeKrb5CMap,

+ "Do not specify both a Krb5 local file and the ConfigMap as the creation " +

+ "of an additional ConfigMap, when one is already specified, is extraneous")

+

+ KubernetesUtils.requireBothOrNeitherDefined(

+ maybeKeytab,

+ maybePrincipal,

+ "If a Kerberos principal is specified you must also specify a Kerberos keytab",

+ "If a Kerberos keytab is specified you must also specify a Kerberos principal")

+

+ KubernetesUtils.requireBothOrNeitherDefined(

+ maybeExistingSecretName,

+ maybeExistingSecretItemKey,

+ "If a secret data item-key where the data of the Kerberos Delegation Token is specified" +

+" you must also specify the name of the secret",

+ "If a secret storing a Kerberos Delegation Token is specified you must also" +

+" specify the item-key where the data is stored")

+

+ private val hadoopConfigurationFiles = hadoopConfDirSpec.hadoopConfDir.map { hConfDir =>

+

[GitHub] spark issue #22615: [SPARK-25016][BUILD][CORE] Remove support for Hadoop 2.6

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22615 Merged build finished. Test PASSed.

[GitHub] spark issue #22615: [SPARK-25016][BUILD][CORE] Remove support for Hadoop 2.6

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22615 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/97137/ Test PASSed.

[GitHub] spark issue #22615: [SPARK-25016][BUILD][CORE] Remove support for Hadoop 2.6

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22615 **[Test build #97137 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97137/testReport)** for PR 22615 at commit [`7392cf0`](https://github.com/apache/spark/commit/7392cf00c31d48790d1235330c2fe18f7f850624). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark issue #22466: [SPARK-25464][SQL] Create Database to the location,only ...

Github user sandeep-katta commented on the issue: https://github.com/apache/spark/pull/22466 @cloud-fan @gatorsmile if everything is okay, can you please merge this PR?

[GitHub] spark issue #22583: [SPARK-10816][SS] SessionWindow support for Structure St...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22583 **[Test build #97142 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97142/testReport)** for PR 22583 at commit [`0a27efd`](https://github.com/apache/spark/commit/0a27efdbcd5d7612cdeb9fc2618e1c76e70a586c).

[GitHub] spark issue #22583: [SPARK-10816][SS] SessionWindow support for Structure St...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22583 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/3812/ Test PASSed.

[GitHub] spark issue #22583: [SPARK-10816][SS] SessionWindow support for Structure St...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22583 Merged build finished. Test PASSed.

[GitHub] spark pull request #22675: [SPARK-25347][ML][DOC] Spark datasource for image...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22675#discussion_r223552437

--- Diff: docs/ml-datasource.md ---

@@ -0,0 +1,51 @@

+---

+layout: global

+title: Data sources

+displayTitle: Data sources

+---

+

+In this section, we introduce how to use data source in ML to load data.

+Beside some general data sources "parquat", "csv", "json", "jdbc", we also provide some specific data source for ML.

+

+**Table of Contents**

+

+* This will become a table of contents (this text will be scraped).

+{:toc}

+

+## Image data source

+

+This image data source is used to load libsvm data files from directory.

+

+

+

+[`ImageDataSource`](api/scala/index.html#org.apache.spark.ml.source.image.ImageDataSource)

+implements Spark SQL data source API for loading image data as DataFrame.

+The loaded DataFrame has one StructType column: "image". containing image data stored as image schema.

+

+{% highlight scala %}

+scala> spark.read.format("image").load("data/mllib/images/origin")

+res1: org.apache.spark.sql.DataFrame = [image: struct]

+{% endhighlight %}

+

+

+

+[`ImageDataSource`](api/java/org/apache/spark/ml/source/image/ImageDataSource.html)

--- End diff --

cc @mengxr as well

[GitHub] spark pull request #22675: [SPARK-25347][ML][DOC] Spark datasource for image...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22675#discussion_r223552386

--- Diff: docs/ml-datasource.md ---

@@ -0,0 +1,51 @@

+---

+layout: global

+title: Data sources

+displayTitle: Data sources

+---

+

+In this section, we introduce how to use data source in ML to load data.

+Beside some general data sources "parquat", "csv", "json", "jdbc", we also provide some specific data source for ML.

+

+**Table of Contents**

+

+* This will become a table of contents (this text will be scraped).

+{:toc}

+

+## Image data source

+

+This image data source is used to load libsvm data files from directory.

+

+

+

+[`ImageDataSource`](api/scala/index.html#org.apache.spark.ml.source.image.ImageDataSource)

+implements Spark SQL data source API for loading image data as DataFrame.

+The loaded DataFrame has one StructType column: "image". containing image data stored as image schema.

+

+{% highlight scala %}

+scala> spark.read.format("image").load("data/mllib/images/origin")

+res1: org.apache.spark.sql.DataFrame = [image: struct]

+{% endhighlight %}

+

+

+

+[`ImageDataSource`](api/java/org/apache/spark/ml/source/image/ImageDataSource.html)

--- End diff --

Out of curiosity, why did we put the image source inside Spark rather than in a separate module (see also https://github.com/apache/spark/pull/21742#discussion_r201552008)? Avro was put in a separate module.

---


[GitHub] spark pull request #22653: [SPARK-25659][PYTHON][TEST] Test type inference s...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22653#discussion_r223552056

--- Diff: python/pyspark/sql/tests.py ---

@@ -1149,6 +1149,75 @@ def test_infer_schema(self):

         result = self.spark.sql("SELECT l[0].a from test2 where d['key'].d = '2'")
         self.assertEqual(1, result.head()[0])
+    def test_infer_schema_specification(self):
+        from decimal import Decimal
+
+        class A(object):
+            def __init__(self):
+                self.a = 1
+
+        data = [
+            True,
+            1,
+            "a",
+            u"a",

--- End diff --

Fair point. Will change when I fix some code around here.

---


[GitHub] spark issue #22615: [SPARK-25016][BUILD][CORE] Remove support for Hadoop 2.6

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/22615 I want to see the configurations .. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22237: [SPARK-25243][SQL] Use FailureSafeParser in from_...

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22237#discussion_r223550790

--- Diff: docs/sql-programming-guide.md ---

@@ -1890,6 +1890,10 @@ working with timestamps in `pandas_udf`s to get the best performance, see

# Migration Guide

+## Upgrading From Spark SQL 2.4 to 3.0

+

+ - Since Spark 3.0, the `from_json` functions supports two modes - `PERMISSIVE` and `FAILFAST`. The modes can be set via the `mode` option. The default mode became `PERMISSIVE`. In previous versions, behavior of `from_json` did not conform to either `PERMISSIVE` nor `FAILFAST`, especially in processing of malformed JSON records. For example, the JSON string `{"a" 1}` with the schema `a INT` is converted to `null` by previous versions but Spark 3.0 converts it to `Row(null)`. In version 2.4 and earlier, arrays of JSON objects are considered as invalid and converted to `null` if specified schema is `StructType`. Since Spark 3.0, the input is considered as a valid JSON array and only its first element is parsed if it conforms to the specified `StructType`.

--- End diff --

The last option sounds better to me, but can we fill the corrupt row when the corrupt field name is specified in the schema?
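Here is a toy, pure-Python model of the behavior the migration note describes, to make the proposed semantics concrete. It is not Spark's implementation. `toy_from_json` and `MalformedRecordError` are hypothetical names, and the schema is reduced to a list of field names. The `corrupt_column` handling is an assumption borrowed from how PERMISSIVE mode fills `_corrupt_record` in the JSON file source, i.e. one possible answer to the question above.

```python
import json


class MalformedRecordError(ValueError):
    """Raised by the toy FAILFAST mode (illustrative name, not a Spark class)."""


def toy_from_json(text, fields, mode="PERMISSIVE", corrupt_column="_corrupt_record"):
    """Toy model of the Spark 3.0 `from_json` behavior in the migration note.

    `fields` is a list of field names standing in for a StructType schema;
    the returned tuple stands in for a Row. Type checking is omitted.
    """
    if mode not in ("PERMISSIVE", "FAILFAST"):
        raise ValueError("unsupported mode: " + mode)

    def malformed():
        if mode == "FAILFAST":
            raise MalformedRecordError("malformed record: " + text)
        # PERMISSIVE: every field is null, i.e. Row(null) for schema `a INT`,
        # except an optional corrupt-record column, which keeps the raw input.
        return tuple(text if f == corrupt_column else None for f in fields)

    try:
        value = json.loads(text)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return malformed()

    # An array of objects is now valid input: only its first element is parsed.
    if isinstance(value, list) and value and isinstance(value[0], dict):
        value = value[0]
    if not isinstance(value, dict):
        return malformed()
    return tuple(value.get(f) for f in fields)


print(toy_from_json('{"a" 1}', ["a"]))               # (None,), i.e. Row(null)
print(toy_from_json('[{"a": 1}, {"a": 2}]', ["a"]))  # (1,)
```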

---


[GitHub] spark issue #22675: [SPARK-25347][ML][DOC] Spark datasource for image/libsvm...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22675 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22675: [SPARK-25347][ML][DOC] Spark datasource for image/libsvm...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22675 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/97141/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22675: [SPARK-25347][ML][DOC] Spark datasource for image/libsvm...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22675 **[Test build #97141 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97141/testReport)** for PR 22675 at commit [`887f528`](https://github.com/apache/spark/commit/887f5282fba8a8a0bcbb9242eb87b27bf94d0210). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22630: [SPARK-25497][SQL] Limit operation within whole stage co...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22630 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22630: [SPARK-25497][SQL] Limit operation within whole stage co...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22630 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/97136/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22630: [SPARK-25497][SQL] Limit operation within whole stage co...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22630 **[Test build #97136 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97136/testReport)** for PR 22630 at commit [`4fc4301`](https://github.com/apache/spark/commit/4fc43010c7c466e7a3db6a08c554adc78719db76). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22675: [SPARK-25347][ML][DOC] Spark datasource for image/libsvm...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22675 **[Test build #97141 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97141/testReport)** for PR 22675 at commit [`887f528`](https://github.com/apache/spark/commit/887f5282fba8a8a0bcbb9242eb87b27bf94d0210). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22675: [SPARK-25347][ML][DOC] Spark datasource for image/libsvm...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22675 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/3811/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22675: [SPARK-25347][ML][DOC] Spark datasource for image/libsvm...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22675 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22675: [SPARK-25347][ML][DOC] Spark datasource for image...

GitHub user WeichenXu123 opened a pull request: https://github.com/apache/spark/pull/22675 [SPARK-25347][ML][DOC] Spark datasource for image/libsvm user guide ## What changes were proposed in this pull request? Spark datasource for image/libsvm user guide ## How was this patch tested? Scala:  Java:  Python:  You can merge this pull request into a Git repository by running: $ git pull https://github.com/WeichenXu123/spark add_image_source_doc Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/22675.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #22675 commit 887f5282fba8a8a0bcbb9242eb87b27bf94d0210 Author: WeichenXu Date: 2018-10-09T02:54:09Z init --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21156: [SPARK-24087][SQL] Avoid shuffle when join keys are a su...

Github user wangshisan commented on the issue: https://github.com/apache/spark/pull/21156 What is the status now? I think this is of great value, since it gives users more opportunities to leverage bucket joins: all joins that take the bucket keys as a prefix of the join keys will benefit from this. And we have a further optimization here: 1. Table A(a1, a2, a3) is bucketed by a1, a2. 2. Table B(b1, b2, b3) is bucketed by b1. 3. A join B on (a1=b1, a2=b2, a3=b3). In this case, only table B needs an extra shuffle, and the shuffle keys are (b1, b2). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org
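The further optimization in the comment can be made concrete with a small planning sketch. `plan_bucketed_join` is a hypothetical pure-Python helper, not Spark's planner. It assumes both tables hash their bucket columns with the same function into the same number of buckets, so the side that is shuffled can be repartitioned to line up with the other side's existing buckets. When both sides qualify, it simply prefers reusing the left side's bucketing; a real planner would need some cost-based choice.

```python
def plan_bucketed_join(join_pairs, left_buckets, right_buckets):
    """Decide which side of an equi-join must be shuffled.

    join_pairs: list of (left_key, right_key) equality conditions.
    left_buckets / right_buckets: tuples of each table's bucket columns.
    Returns (side_to_shuffle, shuffle_keys). side is None when no shuffle is
    needed, and "both" (with all join pairs) when no bucketing can be reused.
    """
    left_to_right = dict(join_pairs)
    right_to_left = {r: l for l, r in join_pairs}

    def covered(bucket_cols, mapping):
        # Bucketing is reusable only if every bucket column is a join key.
        return bool(bucket_cols) and all(c in mapping for c in bucket_cols)

    if covered(left_buckets, left_to_right):
        # Keep the left side's buckets; the right side must match them.
        required_right = tuple(left_to_right[c] for c in left_buckets)
        if tuple(right_buckets) == required_right:
            return None, ()
        return "right", required_right
    if covered(right_buckets, right_to_left):
        required_left = tuple(right_to_left[c] for c in right_buckets)
        return "left", required_left
    return "both", tuple(join_pairs)


pairs = [("a1", "b1"), ("a2", "b2"), ("a3", "b3")]
print(plan_bucketed_join(pairs, ("a1", "a2"), ("b1",)))  # ('right', ('b1', 'b2'))
```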

[GitHub] spark issue #22674: [SPARK-25680][SQL] SQL execution listener shouldn't happ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22674 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21669 Kubernetes integration test status success URL: https://amplab.cs.berkeley.edu/jenkins/job/testing-k8s-prb-make-spark-distribution-unified/3809/ --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21669 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/3809/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22674: [SPARK-25680][SQL] SQL execution listener shouldn't happ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22674 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/3810/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21669 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21669 Kubernetes integration test starting URL: https://amplab.cs.berkeley.edu/jenkins/job/testing-k8s-prb-make-spark-distribution-unified/3809/ --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22237: [SPARK-25243][SQL] Use FailureSafeParser in from_...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22237#discussion_r223545341

--- Diff: docs/sql-programming-guide.md ---

@@ -1890,6 +1890,10 @@ working with timestamps in `pandas_udf`s to get the best performance, see

# Migration Guide

+## Upgrading From Spark SQL 2.4 to 3.0

+

+ - Since Spark 3.0, the `from_json` functions supports two modes - `PERMISSIVE` and `FAILFAST`. The modes can be set via the `mode` option. The default mode became `PERMISSIVE`. In previous versions, behavior of `from_json` did not conform to either `PERMISSIVE` nor `FAILFAST`, especially in processing of malformed JSON records. For example, the JSON string `{"a" 1}` with the schema `a INT` is converted to `null` by previous versions but Spark 3.0 converts it to `Row(null)`. In version 2.4 and earlier, arrays of JSON objects are considered as invalid and converted to `null` if specified schema is `StructType`. Since Spark 3.0, the input is considered as a valid JSON array and only its first element is parsed if it conforms to the specified `StructType`.

--- End diff --

the last option LGTM

---


[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21669 Kubernetes integration test status success URL: https://amplab.cs.berkeley.edu/jenkins/job/testing-k8s-prb-make-spark-distribution-unified/3808/ --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21669 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21669 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/3808/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22674: [SPARK-25680][SQL] SQL execution listener shouldn't happ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22674 **[Test build #97140 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97140/testReport)** for PR 22674 at commit [`a456226`](https://github.com/apache/spark/commit/a4562264c45c24a88f4d508c2d34d4e7aed50631). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21669 Kubernetes integration test starting URL: https://amplab.cs.berkeley.edu/jenkins/job/testing-k8s-prb-make-spark-distribution-unified/3808/ --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22659: [SPARK-25623][SPARK-25624][SPARK-25625][TEST] Reduce tes...

Github user shahidki31 commented on the issue: https://github.com/apache/spark/pull/22659 Thank you @srowen for merging. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21669 **[Test build #97139 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97139/testReport)** for PR 21669 at commit [`2108154`](https://github.com/apache/spark/commit/210815496cc2e5ecf8a0e7f86572733994e2f671). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21669 **[Test build #97138 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97138/testReport)** for PR 21669 at commit [`69840a8`](https://github.com/apache/spark/commit/69840a8026d1f8a72eed9a3e6c7073e8bdbf04b0). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22482: WIP - [SPARK-10816][SS] Support session window natively

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22482 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/97135/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22482: WIP - [SPARK-10816][SS] Support session window natively

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22482 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22482: WIP - [SPARK-10816][SS] Support session window natively

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22482 **[Test build #97135 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97135/testReport)** for PR 22482 at commit [`94e9859`](https://github.com/apache/spark/commit/94e9859b4bef01a9c08da0ce444167dd0d25e3ba). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22623: [SPARK-25636][CORE] spark-submit cuts off the failure re...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22623 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/97134/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22623: [SPARK-25636][CORE] spark-submit cuts off the failure re...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22623 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22623: [SPARK-25636][CORE] spark-submit cuts off the failure re...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22623 **[Test build #97134 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97134/testReport)** for PR 22623 at commit [`71e3e30`](https://github.com/apache/spark/commit/71e3e30aaf94dfb638eca7e2edfc5feddccc0d3d). * This patch **fails Spark unit tests**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22623: [SPARK-25636][CORE] spark-submit cuts off the failure re...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22623 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22623: [SPARK-25636][CORE] spark-submit cuts off the failure re...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22623 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/97133/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22623: [SPARK-25636][CORE] spark-submit cuts off the failure re...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22623 **[Test build #97133 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97133/testReport)** for PR 22623 at commit [`9e60602`](https://github.com/apache/spark/commit/9e6060289c798117d2e2b18d2f73af1e8eb74d3f). * This patch **fails Spark unit tests**. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22615: [SPARK-25016][BUILD][CORE] Remove support for Hadoop 2.6

Github user shaneknapp commented on the issue: https://github.com/apache/spark/pull/22615 @srowen fair 'nuf... i'll create a jira for this tomorrow and we can hash out final design shite there (rather than overloading this PR). :) --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user ifilonenko commented on a diff in the pull request: https://github.com/apache/spark/pull/21669#discussion_r223535529 --- Diff: docs/running-on-kubernetes.md --- @@ -820,4 +820,37 @@ specific to Spark on Kubernetes. This sets the major Python version of the docker image used to run the driver and executor containers. Can either be 2 or 3. + + spark.kubernetes.kerberos.krb5.location + (none) + + Specify the local location of the krb5 file to be mounted on the driver and executors for Kerberos interaction. + It is important to note that for local files, the KDC defined needs to be visible from inside the containers. --- End diff -- True --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22653: [SPARK-25659][PYTHON][TEST] Test type inference s...

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/22653#discussion_r223534303

--- Diff: python/pyspark/sql/tests.py ---

@@ -1149,6 +1149,75 @@ def test_infer_schema(self):

         result = self.spark.sql("SELECT l[0].a from test2 where d['key'].d = '2'")
         self.assertEqual(1, result.head()[0])
+    def test_infer_schema_specification(self):
+        from decimal import Decimal
+
+        class A(object):
+            def __init__(self):
+                self.a = 1
+
+        data = [
+            True,
+            1,
+            "a",
+            u"a",

--- End diff --

nit: since this is for unicode string, how about using a non-ascii string?

---


[GitHub] spark issue #21809: [SPARK-24851][UI] Map a Stage ID to it's Associated Job ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21809 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/97130/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21809: [SPARK-24851][UI] Map a Stage ID to it's Associated Job ...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21809 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21809: [SPARK-24851][UI] Map a Stage ID to it's Associated Job ...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21809 **[Test build #97130 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97130/testReport)** for PR 21809 at commit [`0be099a`](https://github.com/apache/spark/commit/0be099a81bb016034e73562cc32599bd06810956). * This patch passes all tests. * This patch merges cleanly. * This patch adds no public classes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22659: [SPARK-25623][SPARK-25624][SPARK-25625][TEST] Red...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/22659 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22659: [SPARK-25623][SPARK-25624][SPARK-25625][TEST] Reduce tes...

Github user srowen commented on the issue: https://github.com/apache/spark/pull/22659 Merged to master --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22653: [SPARK-25659][PYTHON][TEST] Test type inference specific...

Github user viirya commented on the issue: https://github.com/apache/spark/pull/22653 Sorry for the late reply. @HyukjinKwon Yes, this LGTM. It's great that we can follow up on other issues like None and datetime.time in other JIRAs. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22635: [SPARK-25591][PySpark][SQL] Avoid overwriting deserializ...

Github user viirya commented on the issue: https://github.com/apache/spark/pull/22635 @cloud-fan @gatorsmile @HyukjinKwon Thanks. Yes, Pandas UDF has the same issue, and it is fixed by this PR. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #22653: [SPARK-25659][PYTHON][TEST] Test type inference s...

Github user asfgit closed the pull request at: https://github.com/apache/spark/pull/22653 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22615: [SPARK-25016][BUILD][CORE] Remove support for Hadoop 2.6

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22615 **[Test build #97137 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97137/testReport)** for PR 22615 at commit [`7392cf0`](https://github.com/apache/spark/commit/7392cf00c31d48790d1235330c2fe18f7f850624). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22615: [SPARK-25016][BUILD][CORE] Remove support for Hadoop 2.6

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22615 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22615: [SPARK-25016][BUILD][CORE] Remove support for Hadoop 2.6

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22615 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/3807/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22653: [SPARK-25659][PYTHON][TEST] Test type inference specific...

Github user HyukjinKwon commented on the issue: https://github.com/apache/spark/pull/22653 Merged to master. Thank you @BryanCutler. Let me try to make sure I take further actions for related items. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21669 Merged build finished. Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22630: [SPARK-25497][SQL] Limit operation within whole stage co...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22630 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution-unified/3806/ Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/21669 Test FAILed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/97128/ Test FAILed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22615: [SPARK-25016][BUILD][CORE] Remove support for Hadoop 2.6

Github user srowen commented on the issue: https://github.com/apache/spark/pull/22615 Yeah, this does need to be in a public repo: apache/spark-jenkins-configurations or something. We can ask INFRA to create it. But I'm not against just putting them in dev/ or somewhere in the main repo. It's not much, right? And we already host all the release scripts there, which maybe 5 people are interested in right now. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22630: [SPARK-25497][SQL] Limit operation within whole stage co...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22630 Merged build finished. Test PASSed. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #22630: [SPARK-25497][SQL] Limit operation within whole stage co...

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/22630 **[Test build #97136 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97136/testReport)** for PR 22630 at commit [`4fc4301`](https://github.com/apache/spark/commit/4fc43010c7c466e7a3db6a08c554adc78719db76). --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21669: [SPARK-23257][K8S] Kerberos Support for Spark on K8S

Github user SparkQA commented on the issue: https://github.com/apache/spark/pull/21669 **[Test build #97128 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/97128/testReport)** for PR 21669 at commit [`e303048`](https://github.com/apache/spark/commit/e30304810c1090c1eaf69cd0302f1621b1f44644). * This patch **fails Spark unit tests**. * This patch merges cleanly. * This patch adds no public classes.

[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user vanzin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21669#discussion_r223525785

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/hadooputils/HadoopKerberosLogin.scala

---

@@ -0,0 +1,75 @@

+/*
+ * Licensed to the Apache Software Foundation (ASF) under one or more
+ * contributor license agreements. See the NOTICE file distributed with
+ * this work for additional information regarding copyright ownership.
+ * The ASF licenses this file to You under the Apache License, Version 2.0
+ * (the "License"); you may not use this file except in compliance with
+ * the License. You may obtain a copy of the License at
+ *
+ *    http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software
+ * distributed under the License is distributed on an "AS IS" BASIS,
+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ * See the License for the specific language governing permissions and
+ * limitations under the License.
+ */
+package org.apache.spark.deploy.k8s.features.hadooputils
+
+import scala.collection.JavaConverters._
+
+import io.fabric8.kubernetes.api.model.SecretBuilder
+import org.apache.commons.codec.binary.Base64
+
+import org.apache.spark.{SparkConf, SparkException}
+import org.apache.spark.deploy.SparkHadoopUtil
+import org.apache.spark.deploy.k8s.Constants._
+import org.apache.spark.deploy.k8s.security.KubernetesHadoopDelegationTokenManager
+
+/**
+ * This logic does all the heavy lifting for Delegation Token creation. This step
+ * assumes that the job user has either specified a principal and keytab or run
+ * $kinit before running spark-submit. By running UGI.getCurrentUser we are able
+ * to obtain the current user, either signed in via $kinit or keytab. With the
+ * Job User principal you then retrieve the delegation token from the NameNode
+ * and store values in DelegationToken. Lastly, the class puts the data into
+ * a secret. All this is defined in a KerberosConfigSpec.
+ */
+private[spark] object HadoopKerberosLogin {
+  def buildSpec(
+      submissionSparkConf: SparkConf,
+      kubernetesResourceNamePrefix: String,
+      tokenManager: KubernetesHadoopDelegationTokenManager): KerberosConfigSpec = {
+    val hadoopConf = SparkHadoopUtil.get.newConfiguration(submissionSparkConf)
+    if (!tokenManager.isSecurityEnabled) {
+      throw new SparkException("Hadoop not configured with Kerberos")
+    }
+    // The JobUserUGI will be taken from the Local Ticket Cache or via keytab+principal
+    // The login happens in the SparkSubmit so login logic is not necessary to include
+    val jobUserUGI = tokenManager.getCurrentUser
+    val originalCredentials = jobUserUGI.getCredentials
+    val (tokenData, renewalInterval) = tokenManager.getDelegationTokens(
+      originalCredentials,
+      submissionSparkConf,
+      hadoopConf)
+    require(tokenData.nonEmpty, "Did not obtain any delegation tokens")
+    val currentTime = tokenManager.getCurrentTime
+    val initialTokenDataKeyName =
+      s"$KERBEROS_SECRET_LABEL_PREFIX-$currentTime-$renewalInterval"

--- End diff --

> will always need to know the renewal interval

Since the renewal service *does not exist*, why do those values need to be in the config name? That is my question.

If the answer is "because the renewal service needs it", it means it should be added when the renewal service exists, and should be removed from here. And at that time we'll discuss the best way to do it.
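For reference, the key name questioned here packs two values into a single string. A minimal sketch of that naming scheme in plain Scala, with no Spark, Hadoop, or fabric8 dependencies. The prefix value `"hadoop-tokens"`, the placeholder token bytes, and the names `TokenSecretSketch`, `dataKeyName` and `secretData` are all hypothetical. The sketch mirrors only the `s"$KERBEROS_SECRET_LABEL_PREFIX-$currentTime-$renewalInterval"` interpolation in the diff. Based on the `Base64` import, it assumes the serialized tokens are stored Base64-encoded, which is what Kubernetes expects for a Secret's `data` field. It uses `java.util.Base64` in place of commons-codec.

```scala
import java.nio.charset.StandardCharsets
import java.util.Base64

object TokenSecretSketch {
  // Hypothetical stand-in for KERBEROS_SECRET_LABEL_PREFIX; the real value is defined in Constants.
  val SecretLabelPrefix = "hadoop-tokens"

  // Same shape as the diff: <prefix>-<currentTime>-<renewalInterval>.
  def dataKeyName(currentTime: Long, renewalInterval: Long): String =
    s"$SecretLabelPrefix-$currentTime-$renewalInterval"

  // Assumption: the serialized tokens are stored Base64-encoded, as a Secret's `data` field requires.
  def secretData(
      tokenData: Array[Byte],
      currentTime: Long,
      renewalInterval: Long): Map[String, String] = {
    require(tokenData.nonEmpty, "Did not obtain any delegation tokens")
    Map(dataKeyName(currentTime, renewalInterval) -> Base64.getEncoder.encodeToString(tokenData))
  }

  def main(args: Array[String]): Unit = {
    // Placeholder bytes standing in for serialized Hadoop credentials.
    val fakeTokens = "serialized-credentials".getBytes(StandardCharsets.UTF_8)
    secretData(fakeTokens, 1539000000000L, 86400000L).foreach { case (key, value) =>
      println(s"$key -> $value")
    }
  }
}
```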


[GitHub] spark pull request #21669: [SPARK-23257][K8S] Kerberos Support for Spark on ...

Github user ifilonenko commented on a diff in the pull request:

https://github.com/apache/spark/pull/21669#discussion_r223525420

--- Diff:

resource-managers/kubernetes/core/src/main/scala/org/apache/spark/deploy/k8s/features/hadooputils/HadoopKerberosLogin.scala

---

@@ -0,0 +1,75 @@

+    val currentTime = tokenManager.getCurrentTime
+    val initialTokenDataKeyName =
+      s"$KERBEROS_SECRET_LABEL_PREFIX-$currentTime-$renewalInterval"

--- End diff --

> why this config name needs to reference those values?

Because, either way, an external renewal service will always need to know the renewal interval and the current time to calculate when to renew.

> I'm sure we'll have discussions about how to make requests to the service and propagate any needed configuration.

Agreed; that will be the case in follow-up PRs.
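To make the argument above concrete: a renewal service needs both values to decide when to renew. A minimal sketch of how a hypothetical consumer could recover them from the key name and schedule the next renewal. `RenewalScheduleSketch`, `TokenKeyInfo`, `parse`, `nextRenewalTime` and the 0.75 ratio are assumptions for illustration only; no renewal service exists in this PR. The key is split from the right, so the sketch assumes nothing about dashes inside the prefix.

```scala
import scala.util.Try

object RenewalScheduleSketch {
  // Hypothetical parsed form of a key named <prefix>-<currentTime>-<renewalInterval>.
  final case class TokenKeyInfo(prefix: String, creationTime: Long, renewalInterval: Long)

  // Split from the right so that a prefix containing dashes is still handled.
  def parse(key: String): Option[TokenKeyInfo] = {
    val intervalSep = key.lastIndexOf('-')
    val timeSep = if (intervalSep > 0) key.lastIndexOf('-', intervalSep - 1) else -1
    if (timeSep <= 0) None
    else
      for {
        time <- Try(key.substring(timeSep + 1, intervalSep).toLong).toOption
        interval <- Try(key.substring(intervalSep + 1).toLong).toOption
      } yield TokenKeyInfo(key.substring(0, timeSep), time, interval)
  }

  // Hypothetical policy: schedule renewal once `ratio` of the interval has elapsed.
  def nextRenewalTime(info: TokenKeyInfo, ratio: Double = 0.75): Long =
    info.creationTime + (info.renewalInterval * ratio).toLong

  def main(args: Array[String]): Unit = {
    parse("hadoop-tokens-1539000000000-86400000") match {
      case Some(info) => println(s"renew at ${nextRenewalTime(info)}")
      case None => println("unrecognized key")
    }
  }
}
```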


[GitHub] spark pull request #22630: [SPARK-25497][SQL] Limit operation within whole s...

Github user cloud-fan commented on a diff in the pull request:

https://github.com/apache/spark/pull/22630#discussion_r223525444

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/basicPhysicalOperators.scala

---

@@ -452,46 +452,73 @@ case class RangeExec(range: org.apache.spark.sql.catalyst.plans.logical.Range)
     val localIdx = ctx.freshName("localIdx")
     val localEnd = ctx.freshName("localEnd")
-    val range = ctx.freshName("range")
     val shouldStop = if (parent.needStopCheck) {
-      s"if (shouldStop()) { $number = $value + ${step}L; return; }"
+      s"if (shouldStop()) { $nextIndex = $value + ${step}L; return; }"
     } else {
       "// shouldStop check is eliminated"
     }
+    val loopCondition = if (limitNotReachedChecks.isEmpty) {
+      "true"
+    } else {
+      limitNotReachedChecks.mkString(" && ")

--- End diff --
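The string-building in this hunk can be exercised outside Spark. A minimal sketch that lifts the `loopCondition` and `shouldStop` expressions into plain functions, with `parent.needStopCheck` turned into a parameter. It assumes each element of `limitNotReachedChecks` is a Java boolean expression contributed by a downstream limit operator. The check strings and variable names used in `main` are hypothetical, not what Spark actually generates.

```scala
object RangeLoopCodegenSketch {
  // Same logic as the `loopCondition` val in the diff: with no downstream limit checks
  // the loop condition is the constant "true"; otherwise all checks are AND-ed together.
  def loopCondition(limitNotReachedChecks: Seq[String]): String =
    if (limitNotReachedChecks.isEmpty) "true"
    else limitNotReachedChecks.mkString(" && ")

  // Same shape as the `shouldStop` val in the diff; the variable names passed in are placeholders.
  def shouldStopCode(needStopCheck: Boolean, nextIndex: String, value: String, step: Long): String =
    if (needStopCheck) s"if (shouldStop()) { $nextIndex = $value + ${step}L; return; }"
    else "// shouldStop check is eliminated"

  def main(args: Array[String]): Unit = {
    println(loopCondition(Nil)) // true
    println(loopCondition(Seq("count_0 < 10", "count_1 < 5"))) // count_0 < 10 && count_1 < 5
    println(shouldStopCode(needStopCheck = true, "range_nextIndex", "range_value", 1L))
  }
}
```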

This is whole-stage codegen. If bytecode overflow happens, we will fall back.
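The reply above relies on a specific property of whole-stage codegen: generated code that is too large does not fail the query, because execution falls back to the non-codegen path. A minimal sketch of that decision. This is not Spark's implementation. `compile`, `Plan` and its two values are hypothetical, and the 65535-byte limit is the JVM's maximum bytecode size for a single method. In Spark, the corresponding settings appear to be `spark.sql.codegen.fallback` and `spark.sql.codegen.hugeMethodLimit`.

```scala
import scala.util.{Failure, Success, Try}

object CodegenFallbackSketch {
  // The JVM rejects any single method whose bytecode is 64 KB (65536 bytes) or larger.
  val HugeMethodLimit = 65535

  sealed trait Plan
  case object WholeStageGenerated extends Plan
  case object NonCodegenFallback extends Plan

  // `compile` is hypothetical: it either throws or returns the bytecode size of the
  // largest generated method.
  def choosePlan(compile: () => Int, fallbackEnabled: Boolean = true): Plan =
    Try(compile()) match {
      case Success(maxMethodSize) if maxMethodSize <= HugeMethodLimit => WholeStageGenerated
      case Success(_) => NonCodegenFallback // a generated method is over the limit
      case Failure(_) if fallbackEnabled => NonCodegenFallback // compilation failed
      case Failure(e) => throw e
    }

  def main(args: Array[String]): Unit = {
    println(choosePlan(() => 4096))
    println(choosePlan(() => 70000))
    println(choosePlan(() => throw new IllegalStateException("generated code too large")))
  }
}
```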


[GitHub] spark issue #22614: [SPARK-25561][SQL] Implement a new config to control par...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22614 Merged build finished. Test PASSed.

[GitHub] spark issue #22614: [SPARK-25561][SQL] Implement a new config to control par...

Github user AmplabJenkins commented on the issue: https://github.com/apache/spark/pull/22614 Test PASSed. Refer to this link for build results (access rights to CI server needed): https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/97126/ Test PASSed.

[GitHub] spark issue #22614: [SPARK-25561][SQL] Implement a new config to control par...