[jira] [Created] (FLINK-21180) Move the state module from 'pyflink.common' to 'pyflink.datastream'

Wei Zhong created FLINK-21180: - Summary: Move the state module from 'pyflink.common' to 'pyflink.datastream' Key: FLINK-21180 URL: https://issues.apache.org/jira/browse/FLINK-21180 Project: Flink Issue Type: Sub-task Components: API / Python Reporter: Wei Zhong Fix For: 1.13.0 Currently we put all the DataStream Functions in the 'pyflink.datastream.functions' module and all the State API in the 'pyflink.common.state' module. But the ReducingState and AggregatingState depend on ReduceFunction and AggregateFunction, which means the 'state' module will depend on the 'functions' module. So we need to move the 'state' module to the 'pyflink.datastream' package to avoid circular dependencies between 'pyflink.datastream' and 'pyflink.common'. -- This message was sent by Atlassian Jira (v8.3.4#803005)
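To make the dependency concrete: a reducing state has to call the user's reduce function on every add(), so the state type cannot even be declared without the function type. The following is a minimal Java sketch under that reading; SimpleReduceFunction and InMemoryReducingState are hypothetical stand-ins, not the PyFlink or Flink API.

```java
import java.util.Objects;

// Hypothetical stand-in for a type living in the "functions" module.
interface SimpleReduceFunction<T> {
    T reduce(T accumulated, T value);
}

// Hypothetical stand-in for a type living in the "state" module. Its constructor
// and add() need the function type above, so "state" depends on "functions".
class InMemoryReducingState<T> {
    private final SimpleReduceFunction<T> reduceFunction;
    private T current; // null means the state is empty

    InMemoryReducingState(SimpleReduceFunction<T> reduceFunction) {
        this.reduceFunction = Objects.requireNonNull(reduceFunction);
    }

    // Folds the new value into the stored value using the user's function.
    void add(T value) {
        current = (current == null) ? value : reduceFunction.reduce(current, value);
    }

    T get() {
        return current;
    }

    void clear() {
        current = null;
    }
}
```

Because the state type needs the function type, keeping both in the same package (here, 'pyflink.datastream') is what lets the dependency run in one direction only.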

[GitHub] [flink] KarmaGYZ commented on pull request #14647: [FLINK-20835] Implement FineGrainedSlotManager

KarmaGYZ commented on pull request #14647: URL: https://github.com/apache/flink/pull/14647#issuecomment-768869214 @zentol I am also glad to have a unified SlotManager. Since the feature is not stable, I would rather leave it out of the scope of this PR. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #14783: [FLINK-21169][kafka] flink-connector-base dependency should be scope compile

flinkbot edited a comment on pull request #14783: URL: https://github.com/apache/flink/pull/14783#issuecomment-768767423 ## CI report: * ea0fa2c3a97b2d2082b40732850ccc960ba7a09e Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=12576) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] KarmaGYZ commented on pull request #14647: [FLINK-20835] Implement FineGrainedSlotManager

KarmaGYZ commented on pull request #14647: URL: https://github.com/apache/flink/pull/14647#issuecomment-768868239 Thanks for the review @xintongsong . All your comments have been addressed. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-20838) Implement SlotRequestAdapter for the FineGrainedSlotManager

[ https://issues.apache.org/jira/browse/FLINK-20838?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17273388#comment-17273388 ] Xintong Song commented on FLINK-20838: -- There is a todo item from FLINK-20835 that should be addressed once this issue is resolved. https://github.com/apache/flink/pull/14647#discussion_r560716703 > Implement SlotRequestAdapter for the FineGrainedSlotManager > --- > > Key: FLINK-20838 > URL: https://issues.apache.org/jira/browse/FLINK-20838 > Project: Flink > Issue Type: Sub-task > Components: Runtime / Coordination >Reporter: Yangze Guo >Priority: Major > > Implement an adapter for the deprecated slot request protocol. The adapter > will be removed along with the slot request protocol in the future. -- This message was sent by Atlassian Jira (v8.3.4#803005)
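One way to read "an adapter for the deprecated slot request protocol" is: fold individual per-slot requests into the per-job requirement counts that a requirement-based slot manager works with. The Java sketch below illustrates only that reading; LegacySlotRequest and SlotRequestAdapterSketch are hypothetical names, and jobs, allocations and resource profiles are simplified to strings.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Hypothetical, simplified form of a legacy per-slot request.
final class LegacySlotRequest {
    final String jobId;
    final String allocationId;
    final String resourceProfile;

    LegacySlotRequest(String jobId, String allocationId, String resourceProfile) {
        this.jobId = jobId;
        this.allocationId = allocationId;
        this.resourceProfile = resourceProfile;
    }
}

// Hypothetical adapter: translates individual slot requests into aggregated
// per-job requirements (resource profile -> number of slots).
final class SlotRequestAdapterSketch {
    // jobId -> (resourceProfile -> required count)
    private final Map<String, Map<String, Integer>> requirements = new HashMap<>();
    // allocationId -> request, so that an unregistration can be undone precisely
    private final Map<String, LegacySlotRequest> pending = new HashMap<>();

    boolean registerSlotRequest(LegacySlotRequest request) {
        if (pending.putIfAbsent(request.allocationId, request) != null) {
            return false; // duplicate allocation id, ignore
        }
        requirements
                .computeIfAbsent(request.jobId, k -> new HashMap<>())
                .merge(request.resourceProfile, 1, Integer::sum);
        return true;
    }

    boolean unregisterSlotRequest(String allocationId) {
        LegacySlotRequest request = pending.remove(allocationId);
        if (request == null) {
            return false;
        }
        Map<String, Integer> perJob = requirements.get(request.jobId);
        // Decrement the count; returning null removes entries that reach zero.
        perJob.computeIfPresent(
                request.resourceProfile, (k, v) -> v > 1 ? Integer.valueOf(v - 1) : null);
        if (perJob.isEmpty()) {
            requirements.remove(request.jobId);
        }
        return true;
    }

    int requiredSlots(String jobId, String resourceProfile) {
        return requirements
                .getOrDefault(jobId, Collections.emptyMap())
                .getOrDefault(resourceProfile, 0);
    }
}
```

Keying pending requests by allocation id keeps cancellation exact, which matters if the adapter is to be dropped cleanly once the old protocol is removed.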

[jira] [Updated] (FLINK-21174) Optimize the performance of ResourceAllocationStrategy

[ https://issues.apache.org/jira/browse/FLINK-21174?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Yangze Guo updated FLINK-21174: --- Parent: FLINK-14187 Issue Type: Sub-task (was: Improvement) > Optimize the performance of ResourceAllocationStrategy > -- > > Key: FLINK-21174 > URL: https://issues.apache.org/jira/browse/FLINK-21174 > Project: Flink > Issue Type: Sub-task > Components: Runtime / Coordination >Reporter: Yangze Guo >Priority: Major > > In FLINK-20835, we introduce the {{ResourceAllocationStrategy}} for > fine-grained resource management, which matches resource requirements against > available and pending resources and returns the allocation result. > We need to optimize the computation logic of it, which is so complicated atm. -- This message was sent by Atlassian Jira (v8.3.4#803005)
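As a rough illustration of what "matching resource requirements against available and pending resources" involves, here is a first-fit sketch in Java. The two-dimensional resource model (ResAmount with CPU millicores and memory in MB), the class names and the registered-before-pending order are all assumptions; the actual ResourceAllocationStrategy interface and algorithm may differ.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical two-dimensional resource amount (not Flink's ResourceProfile).
final class ResAmount {
    final int cpuMillis;
    final int memoryMb;

    ResAmount(int cpuMillis, int memoryMb) {
        this.cpuMillis = cpuMillis;
        this.memoryMb = memoryMb;
    }

    boolean fits(ResAmount request) {
        return request.cpuMillis <= cpuMillis && request.memoryMb <= memoryMb;
    }

    ResAmount minus(ResAmount other) {
        return new ResAmount(cpuMillis - other.cpuMillis, memoryMb - other.memoryMb);
    }
}

// Hypothetical result: where each request was placed, and which could not be placed.
final class AllocationSketchResult {
    final Map<Integer, String> placements = new LinkedHashMap<>(); // request index -> TM id
    final List<Integer> unplaced = new ArrayList<>(); // request indexes needing new TMs
}

final class FirstFitAllocationSketch {
    // Tries registered task managers first, then pending ones. Both maps hold the
    // remaining free resources per task manager id and are updated in place.
    static AllocationSketchResult allocate(
            List<ResAmount> requests,
            Map<String, ResAmount> registeredFree,
            Map<String, ResAmount> pendingFree) {
        AllocationSketchResult result = new AllocationSketchResult();
        for (int i = 0; i < requests.size(); i++) {
            ResAmount request = requests.get(i);
            String target = firstFit(request, registeredFree);
            if (target == null) {
                target = firstFit(request, pendingFree);
            }
            if (target == null) {
                result.unplaced.add(i);
            } else {
                result.placements.put(i, target);
            }
        }
        return result;
    }

    // Returns the first task manager with enough free resources and deducts the request.
    private static String firstFit(ResAmount request, Map<String, ResAmount> free) {
        for (Map.Entry<String, ResAmount> e : free.entrySet()) {
            if (e.getValue().fits(request)) {
                e.setValue(e.getValue().minus(request));
                return e.getKey();
            }
        }
        return null;
    }
}
```

Even this naive version costs O(requests × task managers) per round, which hints at why the computation becomes a performance concern as clusters grow.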

[jira] [Updated] (FLINK-21177) Introduce the counterpart of slotmanager.number-of-slots.max in fine-grained resource management

[ https://issues.apache.org/jira/browse/FLINK-21177?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Yangze Guo updated FLINK-21177: --- Parent: FLINK-14187 Issue Type: Sub-task (was: New Feature) > Introduce the counterpart of slotmanager.number-of-slots.max in fine-grained > resource management > > > Key: FLINK-21177 > URL: https://issues.apache.org/jira/browse/FLINK-21177 > Project: Flink > Issue Type: Sub-task >Reporter: Yangze Guo >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)
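slotmanager.number-of-slots.max bounds the number of slots, but in fine-grained resource management slots are sized per request, so a slot count no longer bounds the size of the cluster. One plausible counterpart, assumed here purely for illustration, is a cap on the total resources of all task managers. TotalResourceLimitSketch and its units are hypothetical.

```java
// Hypothetical cap on the total resources of all task managers. The names and
// units (CPU in millicores, memory in MB) are assumptions for illustration.
final class TotalResourceLimitSketch {
    private final long maxCpuMillis;
    private final long maxMemoryMb;
    private long allocatedCpuMillis;
    private long allocatedMemoryMb;

    TotalResourceLimitSketch(long maxCpuMillis, long maxMemoryMb) {
        this.maxCpuMillis = maxCpuMillis;
        this.maxMemoryMb = maxMemoryMb;
    }

    // Accepts a new worker only if the cluster total stays within both limits.
    boolean tryAllocateWorker(long cpuMillis, long memoryMb) {
        if (allocatedCpuMillis + cpuMillis > maxCpuMillis
                || allocatedMemoryMb + memoryMb > maxMemoryMb) {
            return false;
        }
        allocatedCpuMillis += cpuMillis;
        allocatedMemoryMb += memoryMb;
        return true;
    }

    // Returns a released worker's resources to the budget.
    void releaseWorker(long cpuMillis, long memoryMb) {
        allocatedCpuMillis -= cpuMillis;
        allocatedMemoryMb -= memoryMb;
    }
}
```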

[GitHub] [flink] flinkbot edited a comment on pull request #14787: [FLINK-21013][table-planner-blink] Ingest row time into StreamRecord in Blink planner

flinkbot edited a comment on pull request #14787: URL: https://github.com/apache/flink/pull/14787#issuecomment-768849685 ## CI report: * 43d7e4e3c23451fd7cf18cfaa7ba91d8ee47bd60 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=12585) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #14787: [FLINK-21013][table-planner-blink] Ingest row time into StreamRecord in Blink planner

flinkbot commented on pull request #14787: URL: https://github.com/apache/flink/pull/14787#issuecomment-768849685 ## CI report: * 43d7e4e3c23451fd7cf18cfaa7ba91d8ee47bd60 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #14786: [FLINK-19592] [Table SQL / Runtime] MiniBatchGroupAggFunction and MiniBatchGlobalGroupAggFunction emit messages to prevent too early

flinkbot edited a comment on pull request #14786: URL: https://github.com/apache/flink/pull/14786#issuecomment-768805034 ## CI report: * 0d544bd5e5eb4b7fa39a82da9aad46758d994faf Azure: [CANCELED](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=12581) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #14774: [FLINK-21163][python] Fix the issue that Python dependencies specified via CLI override the dependencies specified in configuration

flinkbot edited a comment on pull request #14774: URL: https://github.com/apache/flink/pull/14774#issuecomment-768234538 ## CI report: * 54a8354c03402cedbfe56f8e8e7336f2d9072e34 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=12575) * 05847a0e4866d5eccf348c99a83113bedea27682 Azure: [PENDING](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=12584) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-21179) Make sure that the open/close methods of the Python DataStream Function are not implemented when using in ReducingState and AggregatingState

[ https://issues.apache.org/jira/browse/FLINK-21179?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Wei Zhong updated FLINK-21179: -- Description: As the ReducingState and AggregatingState only support non-rich functions, we need to make sure that the open/close methods of the Python DataStream Function are not implemented when used in ReducingState and AggregatingState. (was: As the ReducingState and AggregatingState only support non-rich functions, we need to split the base class of the DataStream Functions to 'Function' and 'RichFunction'.) Summary: Make sure that the open/close methods of the Python DataStream Function are not implemented when using in ReducingState and AggregatingState (was: Split the base class of Python DataStream Function to 'Function' and 'RichFunction') > Make sure that the open/close methods of the Python DataStream Function are > not implemented when using in ReducingState and AggregatingState > > > Key: FLINK-21179 > URL: https://issues.apache.org/jira/browse/FLINK-21179 > Project: Flink > Issue Type: Sub-task > Components: API / Python >Reporter: Wei Zhong >Priority: Major > Fix For: 1.13.0 > > > As the ReducingState and AggregatingState only support non-rich functions, we > need to make sure that the open/close methods of the Python DataStream > Function are not implemented when used in ReducingState and AggregatingState. -- This message was sent by Atlassian Jira (v8.3.4#803005)
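The check described above ("open/close are not implemented") amounts to detecting whether a user function overrides lifecycle hooks inherited from a base class. The PyFlink check is written in Python; the Java sketch below only illustrates the idea via reflection, and LifecycleFunction and StateFunctionValidator are hypothetical names.

```java
// Hypothetical base class with no-op lifecycle hooks, standing in for the
// Python DataStream Function base class.
abstract class LifecycleFunction {
    public void open() {}

    public void close() {}
}

final class StateFunctionValidator {
    // Rejects functions that override open() or close(), since (per the issue)
    // ReducingState and AggregatingState only support non-rich functions.
    static void checkNoLifecycleOverrides(LifecycleFunction fn) {
        for (String name : new String[] {"open", "close"}) {
            try {
                // getMethod returns the most specific public declaration, so the
                // declaring class differs from the base class iff it was overridden.
                Class<?> declaring = fn.getClass().getMethod(name).getDeclaringClass();
                if (declaring != LifecycleFunction.class) {
                    throw new IllegalArgumentException(
                            fn.getClass().getName() + " overrides " + name + "(), which is not supported here");
                }
            } catch (NoSuchMethodException e) {
                // Cannot happen: the base class declares both methods.
                throw new IllegalStateException(e);
            }
        }
    }
}
```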

[GitHub] [flink] flinkbot commented on pull request #14787: [FLINK-21013][table-planner-blink] Ingest row time into StreamRecord in Blink planner

flinkbot commented on pull request #14787: URL: https://github.com/apache/flink/pull/14787#issuecomment-768845020 Thanks a lot for your contribution to the Apache Flink project. I'm the @flinkbot. I help the community to review your pull request. We will use this comment to track the progress of the review. ## Automated Checks Last check on commit 43d7e4e3c23451fd7cf18cfaa7ba91d8ee47bd60 (Thu Jan 28 07:01:11 UTC 2021) **Warnings:** * No documentation files were touched! Remember to keep the Flink docs up to date! Mention the bot in a comment to re-run the automated checks. ## Review Progress * ❓ 1. The [description] looks good. * ❓ 2. There is [consensus] that the contribution should go into Flink. * ❓ 3. Needs [attention] from. * ❓ 4. The change fits into the overall [architecture]. * ❓ 5. Overall code [quality] is good. Please see the [Pull Request Review Guide](https://flink.apache.org/contributing/reviewing-prs.html) for a full explanation of the review process. The Bot is tracking the review progress through labels. Labels are applied according to the order of the review items. For consensus, approval by a Flink committer or PMC member is required. Bot commands The @flinkbot bot supports the following commands: - `@flinkbot approve description` to approve one or more aspects (aspects: `description`, `consensus`, `architecture` and `quality`) - `@flinkbot approve all` to approve all aspects - `@flinkbot approve-until architecture` to approve everything until `architecture` - `@flinkbot attention @username1 [@username2 ..]` to require somebody's attention - `@flinkbot disapprove architecture` to remove an approval you gave earlier This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] leonardBang opened a new pull request #14787: [FLINK-21013][table-planner-blink] Ingest row time into StreamRecord in Blink planner

leonardBang opened a new pull request #14787: URL: https://github.com/apache/flink/pull/14787 ## What is the purpose of the change * This pull request aims to fix the issue that the Blink planner does not ingest the row time timestamp into `StreamRecord` when leaving Table/SQL ## Brief change log - Improve the `OperatorCodeGenerator` for the sink operator to handle the row time properly ## Verifying this change Add `TableToDataStreamITCase` to check the conversions between `Table` and `DataStream`. ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (no) - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (no) - The serializers: (no) - The runtime per-record code paths (performance sensitive): (no) - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: (no) - The S3 file system connector: (no) ## Documentation - Does this pull request introduce a new feature? (no) - If yes, how is the feature documented? (not applicable / docs / JavaDocs / not documented) This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org
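Conceptually, "ingesting the row time into StreamRecord" means that when a row leaves Table/SQL, the value of its rowtime column becomes the record's timestamp, so downstream DataStream operators such as event-time windows can see it. Flink's StreamRecord does carry an optional timestamp; everything else below is an assumption for illustration: TimestampedRecord and RowtimeIngestionSketch are hypothetical, rows are plain Object[] and the rowtime is a non-null epoch-millis Long. It is not the generated sink operator code from the PR.

```java
// Hypothetical stand-in for a stream record that may carry a timestamp.
final class TimestampedRecord<T> {
    final T value;
    final long timestamp;
    final boolean hasTimestamp;

    TimestampedRecord(T value, long timestamp, boolean hasTimestamp) {
        this.value = value;
        this.timestamp = timestamp;
        this.hasTimestamp = hasTimestamp;
    }
}

final class RowtimeIngestionSketch {
    // Converts a row leaving the table world into a record. If the row has a
    // rowtime column (rowtimeIndex >= 0), its value becomes the record timestamp;
    // otherwise the record carries no timestamp.
    static TimestampedRecord<Object[]> toRecord(Object[] row, int rowtimeIndex) {
        if (rowtimeIndex < 0) {
            return new TimestampedRecord<>(row, Long.MIN_VALUE, false);
        }
        Object rowtime = row[rowtimeIndex];
        if (!(rowtime instanceof Long)) {
            throw new IllegalArgumentException("rowtime must be a non-null epoch-millis Long");
        }
        return new TimestampedRecord<>(row, (Long) rowtime, true);
    }
}
```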

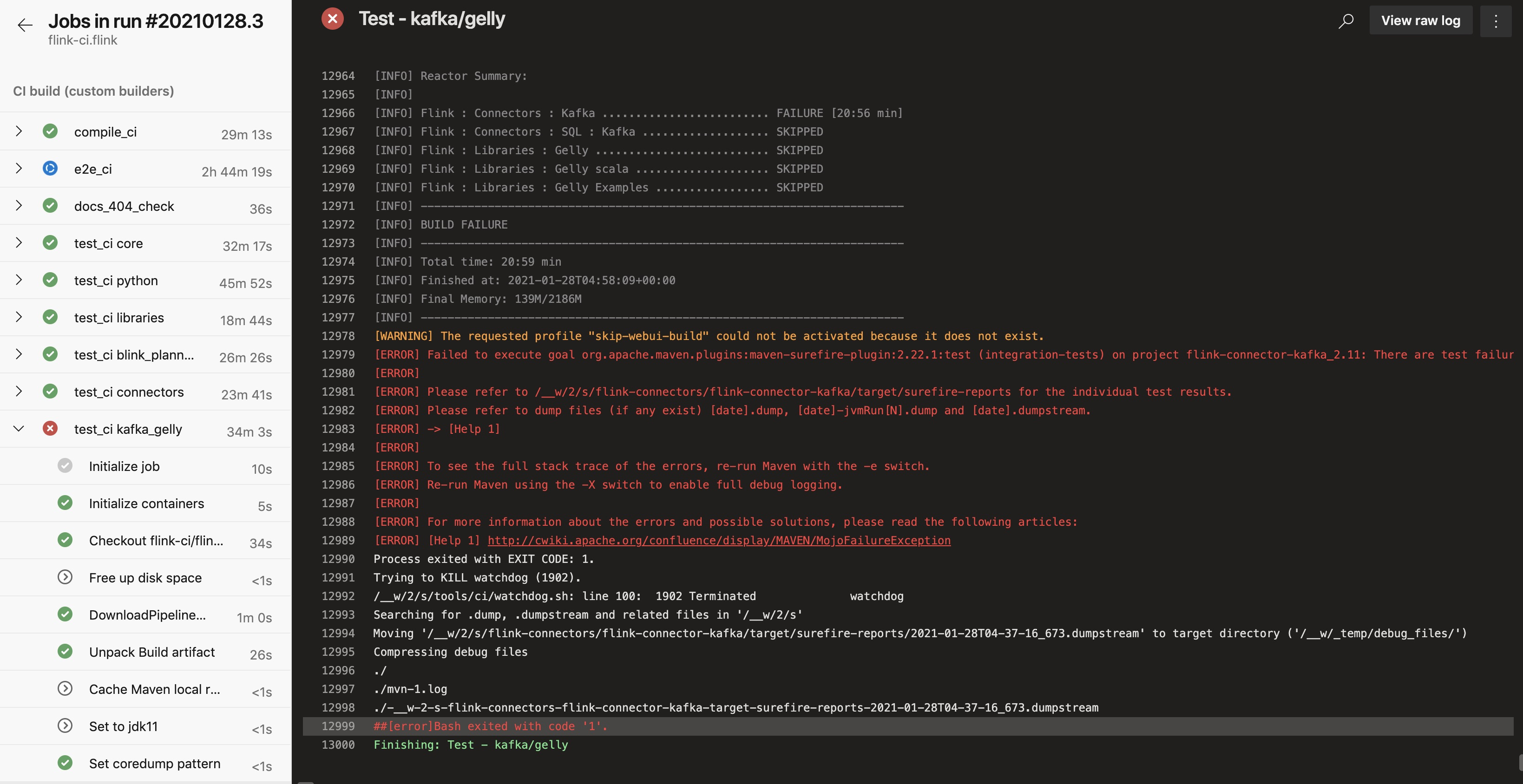

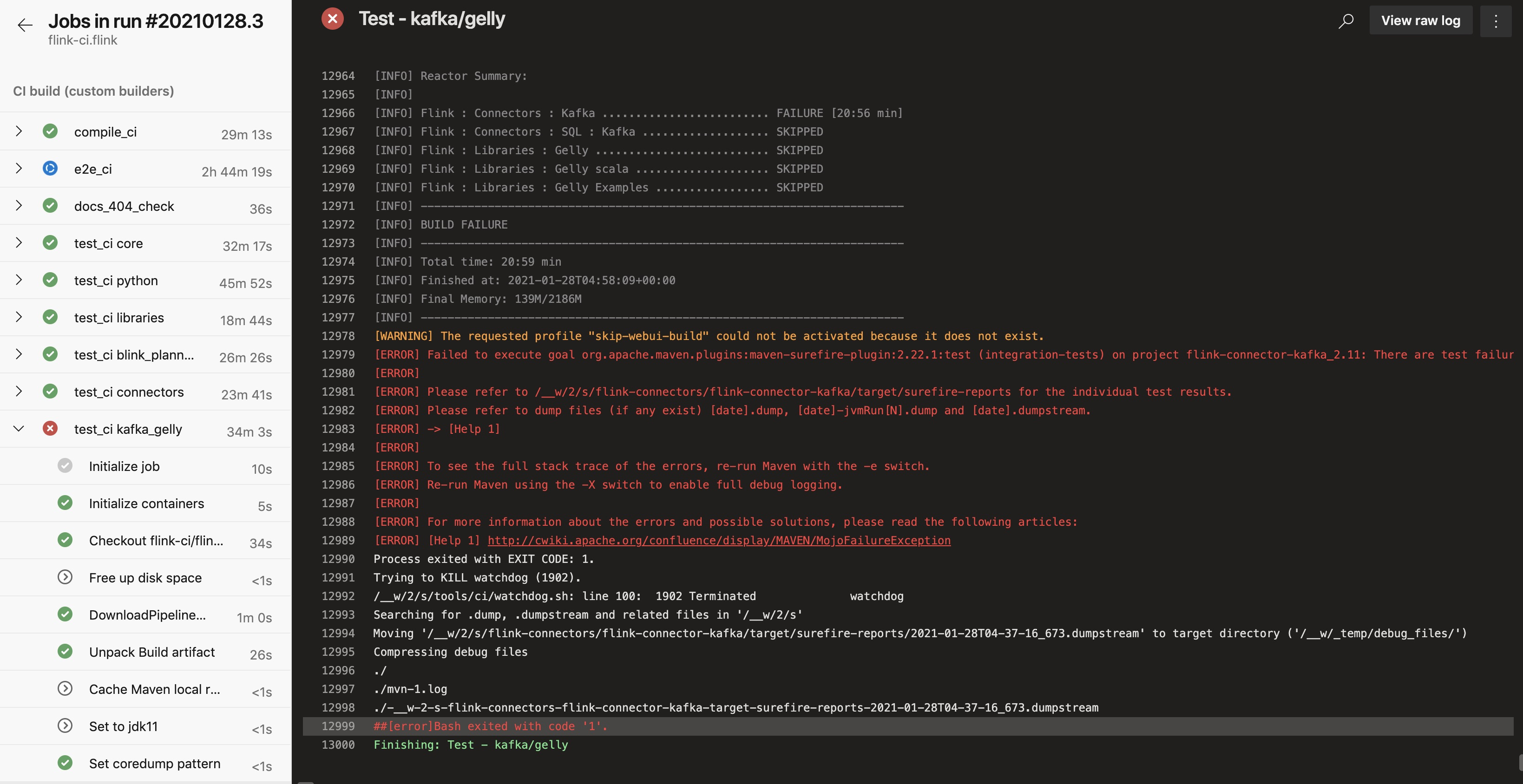

[jira] [Commented] (FLINK-18634) FlinkKafkaProducerITCase.testRecoverCommittedTransaction failed with "Timeout expired after 60000milliseconds while awaiting InitProducerId"

[ https://issues.apache.org/jira/browse/FLINK-18634?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17273372#comment-17273372 ] Xintong Song commented on FLINK-18634: -- https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=12577=results > FlinkKafkaProducerITCase.testRecoverCommittedTransaction failed with "Timeout > expired after 60000milliseconds while awaiting InitProducerId" > > > Key: FLINK-18634 > URL: https://issues.apache.org/jira/browse/FLINK-18634 > Project: Flink > Issue Type: Bug > Components: Connectors / Kafka, Tests >Affects Versions: 1.11.0, 1.12.0, 1.13.0 >Reporter: Dian Fu >Priority: Major > Labels: test-stability > > https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=4590=logs=c5f0071e-1851-543e-9a45-9ac140befc32=684b1416-4c17-504e-d5ab-97ee44e08a20 > {code} > 2020-07-17T11:43:47.9693015Z [ERROR] Tests run: 12, Failures: 0, Errors: 1, > Skipped: 0, Time elapsed: 269.399 s <<< FAILURE! - in > org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducerITCase > 2020-07-17T11:43:47.9693862Z [ERROR] > testRecoverCommittedTransaction(org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducerITCase) > Time elapsed: 60.679 s <<< ERROR! > 2020-07-17T11:43:47.9694737Z org.apache.kafka.common.errors.TimeoutException: > org.apache.kafka.common.errors.TimeoutException: Timeout expired after > 60000milliseconds while awaiting InitProducerId > 2020-07-17T11:43:47.9695376Z Caused by: > org.apache.kafka.common.errors.TimeoutException: Timeout expired after > 60000milliseconds while awaiting InitProducerId > {code} -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] pengkangjing edited a comment on pull request #14779: [FLINK-21158][Runtime/Web Frontend] wrong jvm metaspace and overhead size show in taskmanager metric page

pengkangjing edited a comment on pull request #14779: URL: https://github.com/apache/flink/pull/14779#issuecomment-768836183 @xintongsong Another error seems to be unrelated to this change. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-21138) KvStateServerHandler is not invoked with user code classloader

[ https://issues.apache.org/jira/browse/FLINK-21138?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17273371#comment-17273371 ] Maciej Prochniak commented on FLINK-21138: -- I think using original.getClass().getClassLoader() won't work here: "original" is a SerializableSerializer wrapper, which is probably loaded by the framework classloader. With such custom serializers, the part loaded by the user classloader can be hidden behind quite a few wrappers/decorators, so using the contextClassloader is safer; it's also done like that [here|https://github.com/apache/flink/blob/master/flink-runtime/src/main/java/org/apache/flink/runtime/state/JavaSerializer.java#L59]. I'll try to come up with a PR with a test. > KvStateServerHandler is not invoked with user code classloader > -- > > Key: FLINK-21138 > URL: https://issues.apache.org/jira/browse/FLINK-21138 > Project: Flink > Issue Type: Bug > Components: Runtime / Queryable State >Affects Versions: 1.11.2 >Reporter: Maciej Prochniak >Priority: Major > Attachments: TestJob.java, stacktrace > > > When using e.g. custom Kryo serializers, the user code classloader has to be set > as the context classloader during invocation of methods such as > TypeSerializer.duplicate(). > KvStateServerHandler does not do this, which leads to exceptions like > ClassNotFound etc. -- This message was sent by Atlassian Jira (v8.3.4#803005)
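The fix discussed above boils down to a standard pattern: install the user code classloader as the thread's context classloader for the duration of a call (for example, a serializer's duplicate()), and always restore the previous one. A minimal Java sketch, assuming a hypothetical helper named ContextClassLoaderSketch; it uses a Supplier for brevity, so real serializer calls that throw checked exceptions would need a different functional interface.

```java
import java.util.function.Supplier;

final class ContextClassLoaderSketch {
    // Runs the action with the given classloader as the thread's context
    // classloader and restores the previous one afterwards, even on exceptions.
    static <T> T runWithContextClassLoader(ClassLoader userCodeClassLoader, Supplier<T> action) {
        Thread thread = Thread.currentThread();
        ClassLoader previous = thread.getContextClassLoader();
        thread.setContextClassLoader(userCodeClassLoader);
        try {
            return action.get();
        } finally {
            thread.setContextClassLoader(previous);
        }
    }
}
```

Restoring in finally matters because server handler threads are reused across requests; leaking a user classloader onto a pooled thread could affect unrelated jobs.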

[jira] [Assigned] (FLINK-21134) Reactive mode: Introduce execution mode configuration key and check for supported ClusterEntrypoint type

[ https://issues.apache.org/jira/browse/FLINK-21134?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Robert Metzger reassigned FLINK-21134: -- Assignee: Robert Metzger > Reactive mode: Introduce execution mode configuration key and check for > supported ClusterEntrypoint type > > > Key: FLINK-21134 > URL: https://issues.apache.org/jira/browse/FLINK-21134 > Project: Flink > Issue Type: Sub-task > Components: Runtime / Coordination >Reporter: Robert Metzger >Assignee: Robert Metzger >Priority: Major > Fix For: 1.13.0 > > > According to the FLIP, introduce an "execution-mode" configuration key, and > check in the ClusterEntrypoint if the chosen entry point type is supported by > the selected execution mode. -- This message was sent by Atlassian Jira (v8.3.4#803005)
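The two steps in the issue (read an "execution-mode" key, then validate it against the entry point type) can be sketched in Java as follows. Only the key name comes from the issue; the enum values, the flat Map-based configuration and the rule that only application entry points support reactive mode are assumptions for illustration.

```java
import java.util.Locale;
import java.util.Map;

// Hypothetical execution modes and entry point types.
enum ExecutionModeSketch { NORMAL, REACTIVE }

enum EntrypointTypeSketch { SESSION, PER_JOB, APPLICATION }

final class EntrypointCheckSketch {
    static final String EXECUTION_MODE_KEY = "execution-mode"; // key name from the issue

    // Reads the mode from a flat config map; defaults to NORMAL when unset.
    static ExecutionModeSketch parseExecutionMode(Map<String, String> config) {
        String value = config.get(EXECUTION_MODE_KEY);
        return value == null
                ? ExecutionModeSketch.NORMAL
                : ExecutionModeSketch.valueOf(value.trim().toUpperCase(Locale.ROOT));
    }

    // Hypothetical rule: only application entry points support reactive mode.
    static void checkSupported(ExecutionModeSketch mode, EntrypointTypeSketch type) {
        if (mode == ExecutionModeSketch.REACTIVE && type != EntrypointTypeSketch.APPLICATION) {
            throw new IllegalStateException(
                    "Execution mode " + mode + " is not supported by " + type + " entry points");
        }
    }
}
```

Failing fast at entry point startup surfaces a misconfiguration before any job is submitted.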

[GitHub] [flink] flinkbot edited a comment on pull request #14774: [FLINK-21163][python] Fix the issue that Python dependencies specified via CLI override the dependencies specified in configuration

flinkbot edited a comment on pull request #14774: URL: https://github.com/apache/flink/pull/14774#issuecomment-768234538 ## CI report: * 54a8354c03402cedbfe56f8e8e7336f2d9072e34 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=12575) * 05847a0e4866d5eccf348c99a83113bedea27682 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (FLINK-21179) Split the base class of Python DataStream Function to 'Function' and 'RichFunction'

Wei Zhong created FLINK-21179: - Summary: Split the base class of Python DataStream Function to 'Function' and 'RichFunction' Key: FLINK-21179 URL: https://issues.apache.org/jira/browse/FLINK-21179 Project: Flink Issue Type: Sub-task Components: API / Python Reporter: Wei Zhong Fix For: 1.13.0 As the ReducingState and AggregatingState only support non-rich functions, we need to split the base class of the DataStream Functions to 'Function' and 'RichFunction'. -- This message was sent by Atlassian Jira (v8.3.4#803005)
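The originally proposed split can be pictured as a type hierarchy in which only the rich variant has lifecycle hooks, so a state descriptor can reject rich functions with a simple type check. This Java sketch uses hypothetical names throughout (BasicFunctionSketch, RichFunctionSketch, ReduceFunctionSketch, ReducingStateDescriptorSketch) and is not the PyFlink class layout.

```java
// Hypothetical minimal hierarchy mirroring the proposed 'Function' / 'RichFunction' split.
interface BasicFunctionSketch {}

interface RichFunctionSketch extends BasicFunctionSketch {
    void open();

    void close();
}

interface ReduceFunctionSketch<T> extends BasicFunctionSketch {
    T reduce(T a, T b);
}

final class ReducingStateDescriptorSketch<T> {
    final String name;
    final ReduceFunctionSketch<T> reduceFunction;

    // Reducing state only supports non-rich functions, so rich ones are rejected up front.
    ReducingStateDescriptorSketch(String name, ReduceFunctionSketch<T> reduceFunction) {
        if (reduceFunction instanceof RichFunctionSketch) {
            throw new UnsupportedOperationException(
                    "The reduce function of a reducing state cannot be a rich function");
        }
        this.name = name;
        this.reduceFunction = reduceFunction;
    }
}

// Example of a function the descriptor rejects: it mixes in lifecycle hooks.
final class RichSumSketch implements ReduceFunctionSketch<Integer>, RichFunctionSketch {
    @Override
    public Integer reduce(Integer a, Integer b) {
        return a + b;
    }

    @Override
    public void open() {}

    @Override
    public void close() {}
}
```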

[GitHub] [flink] CPS794 commented on pull request #14786: [FLINK-19592] [Table SQL / Runtime] MiniBatchGroupAggFunction and MiniBatchGlobalGroupAggFunction emit messages to prevent too early state evi

CPS794 commented on pull request #14786: URL: https://github.com/apache/flink/pull/14786#issuecomment-768836986 @flinkbot run azure This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] pengkangjing commented on pull request #14779: [FLINK-21158][Runtime/Web Frontend] wrong jvm metaspace and overhead size show in taskmanager metric page

pengkangjing commented on pull request #14779: URL: https://github.com/apache/flink/pull/14779#issuecomment-768836183 @xintongsong Another error seems to be unrelated to this change. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Created] (FLINK-21178) Task failure will not trigger master hook's reset()

Brian Zhou created FLINK-21178: -- Summary: Task failure will not trigger master hook's reset() Key: FLINK-21178 URL: https://issues.apache.org/jira/browse/FLINK-21178 Project: Flink Issue Type: Bug Components: Runtime / Checkpointing Affects Versions: 1.11.3, 1.12.0 Reporter: Brian Zhou In the Pravega Flink connector integration with Flink 1.12, we found an issue with our no-checkpoint recovery test case [1]. We expect the recovery to call the ReaderCheckpointHook::reset() function, which was the behaviour before 1.12. However, FLINK-20222 changed the logic so that reset() is only called along with a global recovery. This causes Pravega source data loss when a failure happens before the first checkpoint. [1] [https://github.com/crazyzhou/flink-connectors/blob/da9f76d04404071471ebd86bf6889b307c9122ff/src/test/java/io/pravega/connectors/flink/FlinkPravegaReaderRGStateITCase.java#L78] -- This message was sent by Atlassian Jira (v8.3.4#803005)
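The reported behaviour change can be modelled as a single condition on the recovery path. This Java sketch is a hypothetical model of what the report describes, not Flink's recovery code: MasterHookSketch, FailoverKind and RecoverySketch are invented names. With resetOnlyOnGlobalRecovery set, a task (regional) failure before the first checkpoint never reaches reset(), which presumably leaves the Pravega reader group at its advanced position and explains the reported data loss.

```java
// Hypothetical model of a master hook with a reset() callback.
interface MasterHookSketch {
    void reset();
}

enum FailoverKind { GLOBAL, REGIONAL }

// Hypothetical model of the behaviour described in the issue; not Flink's code.
final class RecoverySketch {
    private final MasterHookSketch hook;
    // false: reset on every recovery (pre-1.12 behaviour, as reported);
    // true: reset only on global recovery (behaviour after FLINK-20222, as reported).
    private final boolean resetOnlyOnGlobalRecovery;

    RecoverySketch(MasterHookSketch hook, boolean resetOnlyOnGlobalRecovery) {
        this.hook = hook;
        this.resetOnlyOnGlobalRecovery = resetOnlyOnGlobalRecovery;
    }

    // Called when tasks restart and there is no completed checkpoint to restore.
    void recoverWithoutCheckpoint(FailoverKind kind) {
        if (!resetOnlyOnGlobalRecovery || kind == FailoverKind.GLOBAL) {
            hook.reset();
        }
    }
}
```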

[GitHub] [flink] PatrickRen commented on pull request #14783: [FLINK-21169][kafka] flink-connector-base dependency should be scope compile

PatrickRen commented on pull request #14783: URL: https://github.com/apache/flink/pull/14783#issuecomment-768833202 Hello @tweise and @becketqin ~ I think putting the flink-connector-base JAR under the lib directory of the Flink distribution might be a better choice. Once all connectors are eventually migrated to the new source and sink API, almost every job that uses any type of connector will reference this module, so bundling it would duplicate it across all Flink job JARs. This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot edited a comment on pull request #14774: [FLINK-21163][python] Fix the issue that Python dependencies specified via CLI override the dependencies specified in configuration

flinkbot edited a comment on pull request #14774: URL: https://github.com/apache/flink/pull/14774#issuecomment-768234538 ## CI report: * 54a8354c03402cedbfe56f8e8e7336f2d9072e34 Azure: [SUCCESS](https://dev.azure.com/apache-flink/98463496-1af2-4620-8eab-a2ecc1a2e6fe/_build/results?buildId=12575) Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run travis` re-run the last Travis build - `@flinkbot run azure` re-run the last Azure build This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] KarmaGYZ commented on a change in pull request #14647: [FLINK-20835] Implement FineGrainedSlotManager

KarmaGYZ commented on a change in pull request #14647:
URL: https://github.com/apache/flink/pull/14647#discussion_r565849050

##
File path: flink-runtime/src/main/java/org/apache/flink/runtime/resourcemanager/slotmanager/FineGrainedSlotManager.java
##
@@ -0,0 +1,790 @@
+/*
+ * Licensed to the Apache Software Foundation (ASF) under one
+ * or more contributor license agreements. See the NOTICE file
+ * distributed with this work for additional information
+ * regarding copyright ownership. The ASF licenses this file
+ * to you under the Apache License, Version 2.0 (the
+ * "License"); you may not use this file except in compliance
+ * with the License. You may obtain a copy of the License at
+ *
+ * http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software
+ * distributed under the License is distributed on an "AS IS" BASIS,
+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ * See the License for the specific language governing permissions and
+ * limitations under the License.
+ */
+
+package org.apache.flink.runtime.resourcemanager.slotmanager;
+
+import org.apache.flink.annotation.VisibleForTesting;
+import org.apache.flink.api.common.JobID;
+import org.apache.flink.api.common.time.Time;
+import org.apache.flink.api.java.tuple.Tuple2;
+import org.apache.flink.runtime.clusterframework.types.AllocationID;
+import org.apache.flink.runtime.clusterframework.types.ResourceProfile;
+import org.apache.flink.runtime.clusterframework.types.SlotID;
+import org.apache.flink.runtime.concurrent.FutureUtils;
+import org.apache.flink.runtime.concurrent.ScheduledExecutor;
+import org.apache.flink.runtime.instance.InstanceID;
+import org.apache.flink.runtime.metrics.MetricNames;
+import org.apache.flink.runtime.metrics.groups.SlotManagerMetricGroup;
+import org.apache.flink.runtime.resourcemanager.ResourceManagerId;
+import org.apache.flink.runtime.resourcemanager.WorkerResourceSpec;
+import org.apache.flink.runtime.resourcemanager.registration.TaskExecutorConnection;
+import org.apache.flink.runtime.slots.ResourceCounter;
+import org.apache.flink.runtime.slots.ResourceRequirement;
+import org.apache.flink.runtime.slots.ResourceRequirements;
+import org.apache.flink.runtime.taskexecutor.SlotReport;
+import org.apache.flink.util.FlinkException;
+import org.apache.flink.util.Preconditions;
+
+import org.slf4j.Logger;
+import org.slf4j.LoggerFactory;
+
+import javax.annotation.Nullable;
+
+import java.util.ArrayList;
+import java.util.Collection;
+import java.util.Collections;
+import java.util.Comparator;
+import java.util.HashMap;
+import java.util.HashSet;
+import java.util.List;
+import java.util.Map;
+import java.util.Optional;
+import java.util.Set;
+import java.util.concurrent.CompletableFuture;
+import java.util.concurrent.Executor;
+import java.util.concurrent.ScheduledFuture;
+import java.util.concurrent.TimeUnit;
+import java.util.function.Function;
+import java.util.stream.Collectors;
+
+/** Implementation of {@link SlotManager} supporting fine-grained resource management. */
+public class FineGrainedSlotManager implements SlotManager {
+    private static final Logger LOG = LoggerFactory.getLogger(FineGrainedSlotManager.class);
+
+    private final TaskManagerTracker taskManagerTracker;
+    private final ResourceTracker resourceTracker;
+    private final ResourceAllocationStrategy resourceAllocationStrategy;
+
+    private final SlotStatusSyncer slotStatusSyncer;
+
+    /** Scheduled executor for timeouts. */
+    private final ScheduledExecutor scheduledExecutor;
+
+    /** Timeout after which an unused TaskManager is released. */
+    private final Time taskManagerTimeout;
+
+    private final SlotManagerMetricGroup slotManagerMetricGroup;
+
+    private final Map jobMasterTargetAddresses = new HashMap<>();
+
+    /** Defines the max limitation of the total number of task executors. */
+    private final int maxTaskManagerNum;
+
+    /** Defines the number of redundant task executors. */
+    private final int redundantTaskManagerNum;
+
+    /**
+     * Release task executor only when each produced result partition is either consumed or failed.
+     */
+    private final boolean waitResultConsumedBeforeRelease;
+
+    /** The default resource spec of workers to request. */
+    private final WorkerResourceSpec defaultWorkerResourceSpec;
+
+    /** The resource profile of default slot. */
+    private final ResourceProfile defaultSlotResourceProfile;
+
+    private boolean sendNotEnoughResourceNotifications = true;
+
+    /** ResourceManager's id. */
+    @Nullable private ResourceManagerId resourceManagerId;
+
+    /** Executor for future callbacks which have to be "synchronized". */
+    @Nullable private Executor mainThreadExecutor;
+
+    /** Callbacks for resource (de-)allocations. */
+    @Nullable private ResourceActions resourceActions;
+
+    private ScheduledFuture

[jira] [Created] (FLINK-21177) Introduce the counterpart of slotmanager.number-of-slots.max in fine-grained resource management

Yangze Guo created FLINK-21177: -- Summary: Introduce the counterpart of slotmanager.number-of-slots.max in fine-grained resource management Key: FLINK-21177 URL: https://issues.apache.org/jira/browse/FLINK-21177 Project: Flink Issue Type: Improvement Reporter: Yangze Guo -- This message was sent by Atlassian Jira (v8.3.4#803005)

[jira] [Updated] (FLINK-21177) Introduce the counterpart of slotmanager.number-of-slots.max in fine-grained resource management

[ https://issues.apache.org/jira/browse/FLINK-21177?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Yangze Guo updated FLINK-21177: --- Issue Type: New Feature (was: Improvement) > Introduce the counterpart of slotmanager.number-of-slots.max in fine-grained > resource management > > > Key: FLINK-21177 > URL: https://issues.apache.org/jira/browse/FLINK-21177 > Project: Flink > Issue Type: New Feature >Reporter: Yangze Guo >Priority: Major > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [flink] KarmaGYZ commented on a change in pull request #14647: [FLINK-20835] Implement FineGrainedSlotManager

KarmaGYZ commented on a change in pull request #14647:

URL: https://github.com/apache/flink/pull/14647#discussion_r565846698

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/resourcemanager/slotmanager/ResourceAllocationResult.java

##

@@ -0,0 +1,121 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.resourcemanager.slotmanager;

+

+import org.apache.flink.api.common.JobID;

+import org.apache.flink.runtime.clusterframework.types.ResourceProfile;

+import org.apache.flink.runtime.instance.InstanceID;

+import org.apache.flink.runtime.slots.ResourceCounter;

+

+import java.util.ArrayList;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+

+/** Contains the results of the {@link ResourceAllocationStrategy}. */

+public class ResourceAllocationResult {

+private final Set unfulfillableJobs;

+private final Map> registeredResourceAllocationResult;

+private final List pendingTaskManagersToBeAllocated;

+private final Map>

+pendingResourceAllocationResult;

+

+private ResourceAllocationResult(

+Set unfulfillableJobs,

+Map> registeredResourceAllocationResult,

+List pendingTaskManagersToBeAllocated,

+Map>

+pendingResourceAllocationResult) {

+this.unfulfillableJobs = unfulfillableJobs;

+this.registeredResourceAllocationResult = registeredResourceAllocationResult;

+this.pendingTaskManagersToBeAllocated = pendingTaskManagersToBeAllocated;

+this.pendingResourceAllocationResult = pendingResourceAllocationResult;

+}

+

+public List getPendingTaskManagersToBeAllocated() {

+return Collections.unmodifiableList(pendingTaskManagersToBeAllocated);

+}

+

+public Set getUnfulfillableJobs() {

+return Collections.unmodifiableSet(unfulfillableJobs);

+}

+

+public Map> getRegisteredResourceAllocationResult() {

+return Collections.unmodifiableMap(registeredResourceAllocationResult);

Review comment:

That's a good point. I agree that the strict unmodifiability is not

necessary for this PR, given that it is more than 5000 lines now.

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org
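The review thread concerns how strictly ResourceAllocationResult should be immutable. One plausible reading, given that the fields are nested maps (shown as "Map>" in the quoted diff because the generic parameters were lost in this archive), is that Collections.unmodifiableMap only guards the outer map, so callers could still modify the inner maps. The plain-Java sketch below illustrates that difference, with String and Integer standing in for JobID, InstanceID and ResourceCounter. The class and method names are hypothetical, and a deep copy is only one possible way to get strict unmodifiability.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

/**
 * Shows that Collections.unmodifiableMap only protects the outer level of a nested map.
 * String/Integer are hypothetical stand-ins for JobID, InstanceID and ResourceCounter.
 */
public class NestedUnmodifiableSketch {

    /** Shallow view: the outer map is read-only, but the inner maps are the caller's mutable maps. */
    static Map<String, Map<String, Integer>> shallowView(Map<String, Map<String, Integer>> source) {
        return Collections.unmodifiableMap(source);
    }

    /** Deep view: copies each inner map and wraps both levels, so neither level can be modified. */
    static Map<String, Map<String, Integer>> deepView(Map<String, Map<String, Integer>> source) {
        Map<String, Map<String, Integer>> copy = new HashMap<>();
        for (Map.Entry<String, Map<String, Integer>> entry : source.entrySet()) {
            copy.put(entry.getKey(), Collections.unmodifiableMap(new HashMap<>(entry.getValue())));
        }
        return Collections.unmodifiableMap(copy);
    }

    public static void main(String[] args) {
        Map<String, Integer> slotsPerTaskManager = new HashMap<>();
        slotsPerTaskManager.put("tm-1", 2);
        Map<String, Map<String, Integer>> allocations = new HashMap<>();
        allocations.put("job-1", slotsPerTaskManager);

        // Writing through the shallow view's inner map succeeds and changes the original data.
        shallowView(allocations).get("job-1").put("tm-2", 1);
        System.out.println(allocations.get("job-1").size()); // 2

        // Writing through the deep view's inner map is rejected.
        try {
            deepView(allocations).get("job-1").put("tm-3", 1);
        } catch (UnsupportedOperationException e) {
            System.out.println("inner map is read-only");
        }
    }
}
```

The deep variant pays for a copy on every call, which is one reason a reviewer might accept the shallow view for a PR of this size.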

[GitHub] [flink] KarmaGYZ commented on a change in pull request #14647: [FLINK-20835] Implement FineGrainedSlotManager

KarmaGYZ commented on a change in pull request #14647:

URL: https://github.com/apache/flink/pull/14647#discussion_r565846698


Review comment:

That's a good point. I agree that it is not necessary for this PR,

given that it is more than 5000 lines now.


[jira] [Assigned] (FLINK-21172) canal-json format include es field

[ https://issues.apache.org/jira/browse/FLINK-21172?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jark Wu reassigned FLINK-21172:

---

Assignee: Nicholas Jiang

> canal-json format include es field

> --

>

> Key: FLINK-21172

> URL: https://issues.apache.org/jira/browse/FLINK-21172

> Project: Flink

> Issue Type: Improvement

> Components: Formats (JSON, Avro, Parquet, ORC, SequenceFile), Table

> SQL / Ecosystem

>Affects Versions: 1.12.0, 1.12.1

>Reporter: jiabao sun

>Assignee: Nicholas Jiang

>Priority: Minor

>

> The Canal flat message JSON format has an 'es' field, extracted from the MySQL binlog,

> which records the actual time the row data changed in MySQL. It naturally expresses the event

> time, but it is ignored during deserialization.

> {code:json}

> {

> "data": [

> {

> "id": "111",

> "name": "scooter",

> "description": "Big 2-wheel scooter",

> "weight": "5.18"

> }

> ],

> "database": "inventory",

> "es": 158937356,

> "id": 9,

> "isDdl": false,

> "mysqlType": {

> "id": "INTEGER",

> "name": "VARCHAR(255)",

> "description": "VARCHAR(512)",

> "weight": "FLOAT"

> },

> "old": [

> {

> "weight": "5.15"

> }

> ],

> "pkNames": [

> "id"

> ],

> "sql": "",

> "sqlType": {

> "id": 4,

> "name": 12,

> "description": 12,

> "weight": 7

> },

> "table": "products",

> "ts": 1589373560798,

> "type": "UPDATE"

> }

> {code}

> org.apache.flink.formats.json.canal.CanalJsonDeserializationSchema

> {code:java}

> private static RowType createJsonRowType(DataType databaseSchema) {

>     // Canal JSON contains other information, e.g. "ts", "sql", but we don't need them

>     return (RowType)

>             DataTypes.ROW(

>                             DataTypes.FIELD("data", DataTypes.ARRAY(databaseSchema)),

>                             DataTypes.FIELD("old", DataTypes.ARRAY(databaseSchema)),

>                             DataTypes.FIELD("type", DataTypes.STRING()),

>                             DataTypes.FIELD("database", DataTypes.STRING()),

>                             DataTypes.FIELD("table", DataTypes.STRING()))

>                     .getLogicalType();

> }

> {code}


[jira] [Commented] (FLINK-21172) canal-json format include es field

[ https://issues.apache.org/jira/browse/FLINK-21172?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17273356#comment-17273356 ]

Jark Wu commented on FLINK-21172:

-

What metadata key do you want to propose? [~nicholasjiang]


[jira] [Created] (FLINK-21176) Translate updates on Confluent Avro Format page

Jark Wu created FLINK-21176: --- Summary: Translate updates on Confluent Avro Format page Key: FLINK-21176 URL: https://issues.apache.org/jira/browse/FLINK-21176 Project: Flink Issue Type: Task Components: chinese-translation, Documentation Reporter: Jark Wu We have updated examples in FLINK-20999 in commit 2596c12f7fe6b55bfc8708e1f61d3521703225b3. We should translate the updates to Chinese.

[GitHub] [flink] KarmaGYZ commented on a change in pull request #14647: [FLINK-20835] Implement FineGrainedSlotManager

KarmaGYZ commented on a change in pull request #14647:

URL: https://github.com/apache/flink/pull/14647#discussion_r565845168

##

File path:

flink-runtime/src/main/java/org/apache/flink/runtime/resourcemanager/slotmanager/DefaultResourceAllocationStrategy.java

##

@@ -0,0 +1,233 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.runtime.resourcemanager.slotmanager;

+

+import org.apache.flink.api.common.JobID;

+import org.apache.flink.api.java.tuple.Tuple2;

+import org.apache.flink.runtime.clusterframework.types.ResourceProfile;

+import org.apache.flink.runtime.instance.InstanceID;

+import org.apache.flink.runtime.slots.ResourceCounter;

+import org.apache.flink.runtime.slots.ResourceRequirement;

+

+import java.util.Collection;

+import java.util.List;

+import java.util.Map;

+import java.util.Optional;

+import java.util.stream.Collectors;

+

+import static org.apache.flink.runtime.resourcemanager.slotmanager.SlotManagerUtils.getEffectiveResourceProfile;

+

+/**

+ * The default implementation of {@link ResourceAllocationStrategy}, which always allocates pending

+ * task managers with the fixed profile.

+ */

+public class DefaultResourceAllocationStrategy implements ResourceAllocationStrategy {

+private final ResourceProfile defaultSlotResourceProfile;

+private final ResourceProfile totalResourceProfile;

+

+public DefaultResourceAllocationStrategy(

+ResourceProfile defaultSlotResourceProfile, int numSlotsPerWorker) {

+this.defaultSlotResourceProfile = defaultSlotResourceProfile;

+this.totalResourceProfile = defaultSlotResourceProfile.multiply(numSlotsPerWorker);

+}

+

+/**

+ * Matches resource requirements against available and pending resources. For each job, in a

+ * first round requirements are matched against registered resources. The remaining unfulfilled

+ * requirements are matched against pending resources, allocating more workers if no matching

+ * pending resources could be found. If the requirements for a job could not be fulfilled then

+ * it will be recorded in {@link ResourceAllocationResult#getUnfulfillableJobs()}.

+ *

+ * Performance notes: At its core this method loops, for each job, over all resources for

+ * each required slot, trying to find a matching registered/pending task manager. One should

+ * generally go in with the assumption that this runs in numberOfJobsRequiringResources *

+ * numberOfRequiredSlots * numberOfFreeOrPendingTaskManagers.

+ *

+ * In the absolute worst case, with J jobs, requiring R slots each with a unique resource

+ * profile such that each pair of these profiles is not matching, and T registered/pending task

+ * managers that don't fulfill any requirement, this method does a total of J*R*T resource

+ * profile comparisons.

+ */

+@Override

+public ResourceAllocationResult tryFulfillRequirements(

+Map> missingResources,

+Map> registeredResources,

+List pendingTaskManagers) {

+final ResourceAllocationResult.Builder resultBuilder = ResourceAllocationResult.builder();

+final Map pendingResources =

+pendingTaskManagers.stream()

+.collect(

+Collectors.toMap(

+PendingTaskManager::getPendingTaskManagerId,

+PendingTaskManager::getTotalResourceProfile));

+for (Map.Entry> resourceRequirements :

+missingResources.entrySet()) {

+final JobID jobId = resourceRequirements.getKey();

+

+final ResourceCounter unfulfilledJobRequirements =

+tryFulfillRequirementsForJobWithRegisteredResources(

+jobId,

+resourceRequirements.getValue(),

+registeredResources,

+resultBuilder);

+

+if (!unfulfilledJobRequirements.isEmpty()) {

+tryFulfillRequirementsForJobWithPendingResources(

+jobId, unfulfilledJobRequirements,
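The Javadoc of tryFulfillRequirements describes a two-round match (registered task managers first, then pending ones, requesting new workers when nothing fits) with a worst case of J*R*T profile comparisons. The following is a deliberately simplified, hypothetical model of that idea, not the PR's code: resources are reduced to a single integer, matching is first-fit, and a job is marked unfulfillable when one of its requirements exceeds what a single new worker offers (workerTotal, standing in for defaultSlotResourceProfile.multiply(numSlotsPerWorker)). The linear scan in firstFit is where the J*R*T bound comes from.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical single-dimension model of two-round (registered, then pending) first-fit matching. */
public class TwoRoundAllocationSketch {

    /** Outcome of one allocation pass. */
    static final class Result {
        final List<String> unfulfillableJobs = new ArrayList<>();
        int newWorkers = 0;
    }

    /**
     * @param requirementsPerJob job -> required slot sizes
     * @param registeredFree free capacity of each registered task manager (updated in place)
     * @param pendingFree free capacity of each pending task manager (updated in place)
     * @param workerTotal capacity of a newly requested worker (slot size * slots per worker)
     */
    static Result allocate(
            Map<String, List<Integer>> requirementsPerJob,
            List<Integer> registeredFree,
            List<Integer> pendingFree,
            int workerTotal) {
        Result result = new Result();
        for (Map.Entry<String, List<Integer>> job : requirementsPerJob.entrySet()) {
            // Round 1: try to place every required slot on a registered task manager.
            List<Integer> unfulfilled = new ArrayList<>();
            for (int slot : job.getValue()) {
                if (!firstFit(registeredFree, slot)) {
                    unfulfilled.add(slot);
                }
            }
            // Round 2: use pending task managers, requesting a new worker when none fits.
            for (int slot : unfulfilled) {
                if (firstFit(pendingFree, slot)) {
                    continue;
                }
                if (slot > workerTotal) {
                    // Even a fresh worker is too small, so the job cannot be fulfilled.
                    if (!result.unfulfillableJobs.contains(job.getKey())) {
                        result.unfulfillableJobs.add(job.getKey());
                    }
                    continue;
                }
                pendingFree.add(workerTotal - slot);
                result.newWorkers++;
            }
        }
        return result;
    }

    /** Linear scan per slot: this is where the J * R * T comparison count comes from. */
    private static boolean firstFit(List<Integer> free, int size) {
        for (int i = 0; i < free.size(); i++) {
            if (free.get(i) >= size) {
                free.set(i, free.get(i) - size);
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        Map<String, List<Integer>> requirements = new LinkedHashMap<>();
        requirements.put("job-a", Arrays.asList(2, 2, 2));
        requirements.put("job-b", Arrays.asList(9));
        List<Integer> registered = new ArrayList<>(Arrays.asList(3));
        List<Integer> pending = new ArrayList<>();

        Result result = allocate(requirements, registered, pending, 4);
        System.out.println("new workers: " + result.newWorkers); // new workers: 1
        System.out.println("unfulfillable: " + result.unfulfillableJobs); // unfulfillable: [job-b]
    }
}
```

With the inputs in main, job-a places one slot on the registered task manager and the other two on a single new worker, while job-b's 9-unit requirement can never fit a 4-unit worker.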


[jira] [Closed] (FLINK-20999) Confluent Avro Format should document how to serialize kafka keys

[ https://issues.apache.org/jira/browse/FLINK-20999?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ]

Jark Wu closed FLINK-20999.

---

Resolution: Fixed

Fixed in master: 2596c12f7fe6b55bfc8708e1f61d3521703225b3

> Confluent Avro Format should document how to serialize kafka keys

> -

>

> Key: FLINK-20999

> URL: https://issues.apache.org/jira/browse/FLINK-20999

> Project: Flink

> Issue Type: Improvement

> Components: Documentation, Table SQL / Ecosystem

>Affects Versions: 1.12.0

>Reporter: Svend Vanderveken

>Assignee: Svend Vanderveken

>Priority: Minor

> Fix For: 1.13.0

>

>

> The [Confluent Avro Format|https://ci.apache.org/projects/flink/flink-docs-release-1.12/dev/table/connectors/formats/avro-confluent.html] only shows examples of how to serialize/deserialize Kafka values. Also, IMHO, the parameter descriptions do not always make clear which options influence the source behaviour and which influence the sink behaviour.

> This seems surprising, especially in the context of a Kafka sink connector, since keys are such an important concept in that case.

> Adding examples of how to serialize/deserialize Kafka keys would add clarity.

> While it can be argued that a connector format is independent of the underlying storage, showing Kafka-oriented examples (i.e., with a concept of "key" and "value") probably makes sense here, since this connector is very much designed with Kafka in mind.

>

> I'm happy to submit a PR with all of these suggested changes if they are approved.

>

> I suggest adding the following:

> h3. Writing to Kafka while keeping the keys in "raw" big-endian format:

> {code:java}

> CREATE TABLE OUTPUT_TABLE (

> user_id BIGINT,

> item_id BIGINT,

> category_id BIGINT,

> behavior STRING

> ) WITH (

> 'connector' = 'kafka',

> 'topic' = 'user_behavior',

> 'properties.bootstrap.servers' = 'localhost:9092',

> 'key.format' = 'raw',

> 'key.raw.endianness' = 'big-endian',

> 'key.fields' = 'user_id',

> 'value.format' = 'avro-confluent',

> 'value.avro-confluent.schema-registry.url' = 'http://localhost:8081',

> 'value.avro-confluent.schema-registry.subject' = 'user_behavior'

> )

>

> {code}

>

> h3. Writing to Kafka while registering both the key and the value in the schema registry:

> {code:java}

> CREATE TABLE OUTPUT_TABLE (

> user_id BIGINT,

> item_id BIGINT,

> category_id BIGINT,

> behavior STRING

> ) WITH (

> 'connector' = 'kafka',

> 'topic' = 'user_behavior',

> 'properties.bootstrap.servers' = 'localhost:9092',

> -- => this will register a {user_id: long} Avro type in the schema registry.

> -- Watch out: schema evolution in the context of a Kafka key is almost never backward or

> -- forward compatible in practice due to hash partitioning.

> 'key.avro-confluent.schema-registry.url' = 'http://localhost:8081',

> 'key.avro-confluent.schema-registry.subject' = 'user_behavior_key',

> 'key.format' = 'avro-confluent',

> 'key.fields' = 'user_id',

> 'value.format' = 'avro-confluent',

> 'value.avro-confluent.schema-registry.url' = 'http://localhost:8081',

> 'value.avro-confluent.schema-registry.subject' = 'user_behavior_value'

> )

>

> {code}

>

> h3. Reading from Kafka with both the key and value schemas in the registry, while resolving field name clashes:

> {code:java}

> CREATE TABLE INPUT_TABLE (

> -- user_id as read from the kafka key:

> from_kafka_key_user_id BIGINT,

>

> -- user_id, and other fields, as read from the Kafka value:

> user_id BIGINT,

> item_id BIGINT,

> category_id BIGINT,

> behavior STRING

> ) WITH (

> 'connector' = 'kafka',

> 'topic' = 'user_behavior',

> 'properties.bootstrap.servers' = 'localhost:9092',

> 'key.format' = 'avro-confluent',

> 'key.avro-confluent.schema-registry.url' = 'http://localhost:8081',

> 'key.fields' = 'from_kafka_key_user_id',

> -- Adds a column prefix when mapping the Avro fields of the Kafka key to columns of this table,

> -- to avoid clashes with the Avro fields of the value (both contain 'user_id' in this example)

> 'key.fields-prefix' = 'from_kafka_key_',

> 'value.format' = 'avro-confluent',

> -- the key fields are excluded from the value here, since they are read from the key above

> 'value.fields-include' = 'EXCEPT_KEY',

> 'value.avro-confluent.schema-registry.url' = 'http://localhost:8081'

> )

>

> {code}

>

>

>
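The key examples in this issue depend on the exact bytes written as the Kafka key. The plain-Java sketch below (no Kafka or Flink dependency; all names are hypothetical) contrasts a BIGINT key written as 8 big-endian bytes, which is presumably what 'key.format' = 'raw' with 'key.raw.endianness' = 'big-endian' produces, with the Confluent wire format: a 0 magic byte, a 4-byte big-endian schema ID, then the Avro binary payload, in which a long is zig-zag varint encoded. Kafka's default partitioner chooses a partition from a murmur2 hash of the serialized key bytes; the murmur2 method here is a transcription for illustration and should be checked against org.apache.kafka.common.utils.Utils before relying on it. Whether Flink's Kafka sink actually uses Kafka's default partitioner depends on the 'sink.partitioner' option. The sketch illustrates the "Watch out" comment: a new schema ID changes the key bytes even when user_id is unchanged, and with them, potentially, the partition.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.util.Arrays;

/** Hypothetical helpers comparing Kafka key encodings; no Kafka or Flink dependency. */
public class KafkaKeyBytesSketch {

    /** BIGINT as 8 big-endian bytes (ByteBuffer's default byte order is big-endian). */
    static byte[] rawBigEndian(long value) {
        return ByteBuffer.allocate(Long.BYTES).putLong(value).array();
    }

    /** Confluent wire format for an Avro record {user_id: long}: 0x00, schema id, zig-zag varint. */
    static byte[] confluentAvroLongRecord(int schemaId, long value) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        out.write(0); // magic byte
        out.write(ByteBuffer.allocate(Integer.BYTES).putInt(schemaId).array(), 0, Integer.BYTES);
        long zigZag = (value << 1) ^ (value >> 63); // Avro maps signed longs with zig-zag...
        while ((zigZag & ~0x7FL) != 0) { // ...then writes them as base-128 varints
            out.write((int) ((zigZag & 0x7F) | 0x80));
            zigZag >>>= 7;
        }
        out.write((int) zigZag);
        return out.toByteArray();
    }

    /** Transcription of the murmur2 variant used by Kafka's default partitioner (illustrative). */
    static int murmur2(byte[] data) {
        int length = data.length;
        int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        switch (length % 4) { // intentional fall-through for the trailing bytes
            case 3:
                h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2:
                h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1:
                h ^= data[length & ~3] & 0xff;
                h *= m;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    /** Partition for a keyed record: hash made positive, modulo the partition count. */
    static int partitionFor(byte[] keyBytes, int numPartitions) {
        return (murmur2(keyBytes) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        long userId = 42L;
        byte[] raw = rawBigEndian(userId);
        byte[] v1 = confluentAvroLongRecord(1, userId); // key written under schema id 1
        byte[] v2 = confluentAvroLongRecord(2, userId); // same user_id after a schema change
        System.out.println(Arrays.toString(raw)); // [0, 0, 0, 0, 0, 0, 0, 42]
        System.out.println(Arrays.toString(v1)); // [0, 0, 0, 0, 1, 84]
        System.out.println(Arrays.toString(v2)); // [0, 0, 0, 0, 2, 84]
        System.out.println(partitionFor(v1, 12) + " vs " + partitionFor(v2, 12));
    }
}
```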


[GitHub] [flink] wuchong merged pull request #14764: [Flink-20999][docs] - adds usage examples to the Kafka Avro Confluent connector format

wuchong merged pull request #14764: URL: https://github.com/apache/flink/pull/14764

[jira] [Commented] (FLINK-20659) YARNSessionCapacitySchedulerITCase.perJobYarnClusterOffHeap test failed with NPE

[ https://issues.apache.org/jira/browse/FLINK-20659?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17273349#comment-17273349 ]

Guowei Ma commented on FLINK-20659:

---

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=12572=logs=298e20ef-7951-5965-0e79-ea664ddc435e=8560c56f-9ec1-5c40-4ff5-9d3e882d

> YARNSessionCapacitySchedulerITCase.perJobYarnClusterOffHeap test failed with

> NPE

>

>

> Key: FLINK-20659

> URL: https://issues.apache.org/jira/browse/FLINK-20659

> Project: Flink

> Issue Type: Bug

> Components: Deployment / YARN

>Affects Versions: 1.11.0, 1.12.0, 1.13.0

>Reporter: Huang Xingbo

>Assignee: Matthias

>Priority: Major

> Labels: test-stability

>

> [https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=10989=logs=fc5181b0-e452-5c8f-68de-1097947f6483=6b04ca5f-0b52-511d-19c9-52bf0d9fbdfa]

> {code:java}

> 2020-12-17T22:57:58.1994352Z Test perJobYarnClusterOffHeap(org.apache.flink.yarn.YARNSessionCapacitySchedulerITCase) failed with:

> 2020-12-17T22:57:58.1994893Z java.lang.NullPointerException: java.lang.NullPointerException

> 2020-12-17T22:57:58.1995439Z at org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptMetrics.getAggregateAppResourceUsage(RMAppAttemptMetrics.java:128)

> 2020-12-17T22:57:58.1996185Z at org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl.getApplicationResourceUsageReport(RMAppAttemptImpl.java:900)

> 2020-12-17T22:57:58.1996919Z at org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl.createAndGetApplicationReport(RMAppImpl.java:660)

> 2020-12-17T22:57:58.1997526Z at org.apache.hadoop.yarn.server.resourcemanager.ClientRMService.getApplications(ClientRMService.java:930)

> 2020-12-17T22:57:58.1998193Z at org.apache.hadoop.yarn.api.impl.pb.service.ApplicationClientProtocolPBServiceImpl.getApplications(ApplicationClientProtocolPBServiceImpl.java:273)

> 2020-12-17T22:57:58.1998960Z at org.apache.hadoop.yarn.proto.ApplicationClientProtocol$ApplicationClientProtocolService$2.callBlockingMethod(ApplicationClientProtocol.java:507)

> 2020-12-17T22:57:58.1999876Z at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:447)

> 2020-12-17T22:57:58.2000346Z at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:989)

> 2020-12-17T22:57:58.2000744Z at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:847)

> 2020-12-17T22:57:58.2001532Z at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:790)

> 2020-12-17T22:57:58.2001915Z at java.security.AccessController.doPrivileged(Native Method)

> 2020-12-17T22:57:58.2002286Z at javax.security.auth.Subject.doAs(Subject.java:422)

> 2020-12-17T22:57:58.2002734Z at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1836)

> 2020-12-17T22:57:58.2003185Z at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2486)

> 2020-12-17T22:57:58.2003447Z

> 2020-12-17T22:57:58.2003708Z at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

> 2020-12-17T22:57:58.2004233Z at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

> 2020-12-17T22:57:58.2004810Z at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

> 2020-12-17T22:57:58.2005468Z at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

> 2020-12-17T22:57:58.2005907Z at org.apache.hadoop.yarn.ipc.RPCUtil.instantiateException(RPCUtil.java:53)

> 2020-12-17T22:57:58.2006387Z at org.apache.hadoop.yarn.ipc.RPCUtil.instantiateRuntimeException(RPCUtil.java:85)

> 2020-12-17T22:57:58.2006920Z at org.apache.hadoop.yarn.ipc.RPCUtil.unwrapAndThrowException(RPCUtil.java:122)

> 2020-12-17T22:57:58.2007515Z at org.apache.hadoop.yarn.api.impl.pb.client.ApplicationClientProtocolPBClientImpl.getApplications(ApplicationClientProtocolPBClientImpl.java:291)

> 2020-12-17T22:57:58.2008082Z at sun.reflect.GeneratedMethodAccessor39.invoke(Unknown Source)

> 2020-12-17T22:57:58.2008518Z at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> 2020-12-17T22:57:58.2008964Z at java.lang.reflect.Method.invoke(Method.java:498)

> 2020-12-17T22:57:58.2009430Z at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:409)

> 2020-12-17T22:57:58.2010002Z at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:163)

> 2020-12-17T22:57:58.2010554Z at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:155)

> 2020-12-17T22:57:58.2011301Z at

>

[jira] [Created] (FLINK-21175) OneInputStreamTaskTest.testWatermarkMetrics:914 expected:<1> but was:<-9223372036854775808>

Guowei Ma created FLINK-21175: - Summary: OneInputStreamTaskTest.testWatermarkMetrics:914 expected:<1> but was:<-9223372036854775808> Key: FLINK-21175 URL: https://issues.apache.org/jira/browse/FLINK-21175 Project: Flink Issue Type: Bug Components: API / Core Affects Versions: 1.13.0 Reporter: Guowei Ma [https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=12572=logs=d89de3df-4600-5585-dadc-9bbc9a5e661c=66b5c59a-0094-561d-0e44-b149dfdd586d] [ERROR] OneInputStreamTaskTest.testWatermarkMetrics:914 expected:<1> but was:<-9223372036854775808>
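The value in this failure, -9223372036854775808, is exactly Long.MIN_VALUE. Flink's watermark gauges appear to start at Long.MIN_VALUE before any watermark has been recorded, so one likely reading is that the test read the metric before the expected watermark of 1 arrived; that is an interpretation of the report, not a confirmed diagnosis. The helper below is hypothetical and only shows how such a reading can be recognised.

```java
/** Interprets the value reported in the failing assertion; the helper name is hypothetical. */
public class WatermarkSentinelSketch {

    /** Renders a watermark gauge reading, treating Long.MIN_VALUE as "nothing recorded yet". */
    static String describe(long watermark) {
        return watermark == Long.MIN_VALUE ? "no watermark recorded yet" : Long.toString(watermark);
    }

    public static void main(String[] args) {
        long observed = -9223372036854775808L; // value from the test failure
        System.out.println(observed == Long.MIN_VALUE); // true
        System.out.println(describe(observed)); // no watermark recorded yet
        System.out.println(describe(1L)); // 1
    }
}
```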

[jira] [Created] (FLINK-21174) Optimize the performance of ResourceAllocationStrategy

Yangze Guo created FLINK-21174:

--

Summary: Optimize the performance of ResourceAllocationStrategy

Key: FLINK-21174

URL: https://issues.apache.org/jira/browse/FLINK-21174

Project: Flink

Issue Type: Improvement

Components: Runtime / Coordination

Reporter: Yangze Guo

In FLINK-20835, we introduce the {{ResourceAllocationStrategy}} for

fine-grained resource management, which matches resource requirements against

available and pending resources and returns the allocation result.

We need to optimize its computation logic, which is quite complicated at the moment.


[GitHub] [flink] KarmaGYZ commented on a change in pull request #14647: [FLINK-20835] Implement FineGrainedSlotManager

KarmaGYZ commented on a change in pull request #14647:

URL: https://github.com/apache/flink/pull/14647#discussion_r565842059

##
File path: flink-runtime/src/main/java/org/apache/flink/runtime/resourcemanager/slotmanager/DefaultResourceAllocationStrategy.java
##

@@ -0,0 +1,233 @@
+/*
+ * Licensed to the Apache Software Foundation (ASF) under one
+ * or more contributor license agreements. See the NOTICE file
+ * distributed with this work for additional information
+ * regarding copyright ownership. The ASF licenses this file
+ * to you under the Apache License, Version 2.0 (the
+ * "License"); you may not use this file except in compliance
+ * with the License. You may obtain a copy of the License at
+ *
+ * http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software
+ * distributed under the License is distributed on an "AS IS" BASIS,
+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ * See the License for the specific language governing permissions and
+ * limitations under the License.
+ */
+
+package org.apache.flink.runtime.resourcemanager.slotmanager;
+
+import org.apache.flink.api.common.JobID;
+import org.apache.flink.api.java.tuple.Tuple2;
+import org.apache.flink.runtime.clusterframework.types.ResourceProfile;
+import org.apache.flink.runtime.instance.InstanceID;
+import org.apache.flink.runtime.slots.ResourceCounter;
+import org.apache.flink.runtime.slots.ResourceRequirement;
+
+import java.util.Collection;
+import java.util.List;
+import java.util.Map;
+import java.util.Optional;
+import java.util.stream.Collectors;
+
+import static org.apache.flink.runtime.resourcemanager.slotmanager.SlotManagerUtils.getEffectiveResourceProfile;
+
+/**
+ * The default implementation of {@link ResourceAllocationStrategy}, which always allocates pending
+ * task managers with the fixed profile.
+ */
+public class DefaultResourceAllocationStrategy implements ResourceAllocationStrategy {
+    private final ResourceProfile defaultSlotResourceProfile;
+    private final ResourceProfile totalResourceProfile;
+
+    public DefaultResourceAllocationStrategy(
+            ResourceProfile defaultSlotResourceProfile, int numSlotsPerWorker) {
+        this.defaultSlotResourceProfile = defaultSlotResourceProfile;
+        this.totalResourceProfile = defaultSlotResourceProfile.multiply(numSlotsPerWorker);
+    }
+
+    /**
+     * Matches resource requirements against available and pending resources. For each job, in a
+     * first round requirements are matched against registered resources. The remaining unfulfilled
+     * requirements are matched against pending resources, allocating more workers if no matching
+     * pending resources could be found. If the requirements for a job could not be fulfilled then
+     * it will be recorded in {@link ResourceAllocationResult#getUnfulfillableJobs()}.
+     *
+     * Performance notes: At its core this method loops, for each job, over all resources for
+     * each required slot, trying to find a matching registered/pending task manager. One should
+     * generally go in with the assumption that this runs in numberOfJobsRequiringResources *
+     * numberOfRequiredSlots * numberOfFreeOrPendingTaskManagers.
+     *
+     * In the absolute worst case, with J jobs, requiring R slots each with a unique resource
+     * profile such that no two of these profiles match, and T registered/pending task
+     * managers that don't fulfill any requirement, then this method does a total of J*R*T resource
+     * profile comparisons.
+     */

Review comment:

I opened https://issues.apache.org/jira/browse/FLINK-21174 for it.
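The matching loop described in the Javadoc, and its J*R*T worst case, can be illustrated with a deliberately simplified sketch. Everything in it is hypothetical: `Profile` stands in for `ResourceProfile` with only CPU and memory, `Result` is a much smaller stand-in for `ResourceAllocationResult`, and the first-fit linear scan is an assumed matching order, not the code from the pull request. Following the Javadoc, each job's requirements are first matched against registered task managers, the remainder against pending ones, and a new pending worker of fixed size (`workerTotal`, playing the role of `totalResourceProfile`) is requested when nothing fits.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class NaiveAllocationSketch {

    // Hypothetical, simplified stand-in for ResourceProfile: CPU cores and memory in MB.
    static final class Profile {
        final double cpu;
        final int memoryMb;

        Profile(double cpu, int memoryMb) {
            this.cpu = cpu;
            this.memoryMb = memoryMb;
        }

        boolean canFulfill(Profile required) {
            return cpu >= required.cpu && memoryMb >= required.memoryMb;
        }

        Profile minus(Profile other) {
            return new Profile(cpu - other.cpu, memoryMb - other.memoryMb);
        }
    }

    // Hypothetical result holder; counts profile comparisons to expose the J*R*T cost.
    static final class Result {
        int newPendingWorkers = 0;
        long comparisons = 0;
        final List<String> unfulfillableJobs = new ArrayList<>();
    }

    static Result allocate(
            Map<String, List<Profile>> requirementsByJob,
            List<Profile> registeredFree,
            List<Profile> pendingFree,
            Profile workerTotal) {
        Result result = new Result();
        for (Map.Entry<String, List<Profile>> job : requirementsByJob.entrySet()) {
            // Round 1: match every required slot against registered task managers.
            List<Profile> unmatched = new ArrayList<>();
            for (Profile required : job.getValue()) {
                if (!tryFulfill(registeredFree, required, result)) {
                    unmatched.add(required);
                }
            }
            // Round 2: match the rest against pending task managers; if none fits,
            // request a new fixed-size pending worker, or mark the job unfulfillable.
            for (Profile required : unmatched) {
                if (tryFulfill(pendingFree, required, result)) {
                    continue;
                }
                if (workerTotal.canFulfill(required)) {
                    pendingFree.add(workerTotal.minus(required));
                    result.newPendingWorkers++;
                } else if (!result.unfulfillableJobs.contains(job.getKey())) {
                    result.unfulfillableJobs.add(job.getKey());
                }
            }
        }
        return result;
    }

    // First-fit linear scan: one profile comparison per task manager visited.
    private static boolean tryFulfill(List<Profile> candidates, Profile required, Result result) {
        for (int i = 0; i < candidates.size(); i++) {
            result.comparisons++;
            if (candidates.get(i).canFulfill(required)) {
                candidates.set(i, candidates.get(i).minus(required));
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Worst case: J = 2 jobs, R = 3 slots each, T = 4 task managers that fulfil
        // nothing, and a worker size that cannot fulfil any requirement either.
        Profile tiny = new Profile(0.1, 10);
        Profile slot = new Profile(1.0, 1024);
        Map<String, List<Profile>> reqs = new LinkedHashMap<>();
        reqs.put("job-a", Collections.nCopies(3, slot));
        reqs.put("job-b", Collections.nCopies(3, slot));
        List<Profile> registered = new ArrayList<>(Collections.nCopies(4, tiny));
        Result worst = allocate(reqs, registered, new ArrayList<>(), tiny);
        System.out.println(worst.comparisons); // 24 = 2 * 3 * 4
        System.out.println(worst.unfulfillableJobs); // [job-a, job-b]
    }
}
```

In the case exercised by `main`, nothing matches anywhere, so each of the 2 × 3 required slots is compared against all 4 registered task managers: 24 comparisons, which is exactly J*R*T.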


[jira] [Commented] (FLINK-21172) canal-json format include es field

[ https://issues.apache.org/jira/browse/FLINK-21172?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17273347#comment-17273347 ]

Nicholas Jiang commented on FLINK-21172:

I agree with the point of [~jiabao.sun] and [~jark]. [~jark], could you please assign this ticket to me?

> canal-json format include es field

> --

>

> Key: FLINK-21172

> URL: https://issues.apache.org/jira/browse/FLINK-21172

> Project: Flink

> Issue Type: Improvement

> Components: Formats (JSON, Avro, Parquet, ORC, SequenceFile), Table SQL / Ecosystem

>Affects Versions: 1.12.0, 1.12.1

>Reporter: jiabao sun

>Priority: Minor

>

> The Canal flat-message JSON format has an 'es' field, extracted from the MySQL binlog, that records when the row data actually changed in MySQL. It naturally expresses the event time, but it is ignored during deserialization.

> {code:json}
> {
>   "data": [
>     {
>       "id": "111",
>       "name": "scooter",
>       "description": "Big 2-wheel scooter",
>       "weight": "5.18"
>     }
>   ],
>   "database": "inventory",
>   "es": 1589373560000,
>   "id": 9,
>   "isDdl": false,
>   "mysqlType": {
>     "id": "INTEGER",
>     "name": "VARCHAR(255)",
>     "description": "VARCHAR(512)",
>     "weight": "FLOAT"
>   },
>   "old": [
>     {
>       "weight": "5.15"
>     }
>   ],
>   "pkNames": [
>     "id"
>   ],
>   "sql": "",
>   "sqlType": {
>     "id": 4,
>     "name": 12,
>     "description": 12,
>     "weight": 7
>   },
>   "table": "products",
>   "ts": 1589373560798,
>   "type": "UPDATE"
> }
> {code}

> org.apache.flink.formats.json.canal.CanalJsonDeserializationSchema

> {code:java}
> private static RowType createJsonRowType(DataType databaseSchema) {
>     // Canal JSON contains other information, e.g. "ts", "sql", but we don't need them
>     return (RowType)
>             DataTypes.ROW(
>                             DataTypes.FIELD("data", DataTypes.ARRAY(databaseSchema)),
>                             DataTypes.FIELD("old", DataTypes.ARRAY(databaseSchema)),
>                             DataTypes.FIELD("type", DataTypes.STRING()),
>                             DataTypes.FIELD("database", DataTypes.STRING()),
>                             DataTypes.FIELD("table", DataTypes.STRING()))
>                     .getLogicalType();
> }
> {code}
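A minimal sketch of why 'es' is useful as event time, assuming that 'es' is an epoch-millisecond timestamp of the MySQL change and that 'ts' (1589373560798 in the sample message) is the epoch-millisecond time at which Canal produced the message. It uses an assumed 'es' value of 1589373560000 and only `java.time`; `eventTime` and `captureLag` are hypothetical helpers, not part of `CanalJsonDeserializationSchema`.

```java
import java.time.Duration;
import java.time.Instant;

public class CanalEventTimeSketch {

    // Hypothetical helper: interprets an epoch-millisecond 'es' value as the event time.
    static Instant eventTime(long esMillis) {
        return Instant.ofEpochMilli(esMillis);
    }

    // Hypothetical helper: gap between the MySQL change ('es') and the Canal message ('ts').
    static Duration captureLag(long esMillis, long tsMillis) {
        return Duration.ofMillis(tsMillis - esMillis);
    }

    public static void main(String[] args) {
        long es = 1589373560000L; // assumed 'es' value
        long ts = 1589373560798L; // 'ts' value from the sample message
        System.out.println(eventTime(es)); // 2020-05-13T12:39:20Z
        System.out.println(captureLag(es, ts).toMillis()); // 798
    }
}
```

Because 'ts' reflects when the message was built rather than when the row changed, windowing on 'es' would keep event-time semantics even if Canal lags behind the binlog.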


[jira] [Commented] (FLINK-18634) FlinkKafkaProducerITCase.testRecoverCommittedTransaction failed with "Timeout expired after 60000milliseconds while awaiting InitProducerId"

[ https://issues.apache.org/jira/browse/FLINK-18634?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17273344#comment-17273344 ]

Guowei Ma commented on FLINK-18634:

---

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=12571=logs=72d4811f-9f0d-5fd0-014a-0bc26b72b642=c1d93a6a-ba91-515d-3196-2ee8019fbda7

> FlinkKafkaProducerITCase.testRecoverCommittedTransaction failed with "Timeout expired after 60000milliseconds while awaiting InitProducerId"

>

>

> Key: FLINK-18634

> URL: https://issues.apache.org/jira/browse/FLINK-18634

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Kafka, Tests

>Affects Versions: 1.11.0, 1.12.0, 1.13.0

>Reporter: Dian Fu

>Priority: Major

> Labels: test-stability

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=4590=logs=c5f0071e-1851-543e-9a45-9ac140befc32=684b1416-4c17-504e-d5ab-97ee44e08a20

> {code}

> 2020-07-17T11:43:47.9693015Z [ERROR] Tests run: 12, Failures: 0, Errors: 1, Skipped: 0, Time elapsed: 269.399 s <<< FAILURE! - in org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducerITCase
> 2020-07-17T11:43:47.9693862Z [ERROR] testRecoverCommittedTransaction(org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducerITCase) Time elapsed: 60.679 s <<< ERROR!
> 2020-07-17T11:43:47.9694737Z org.apache.kafka.common.errors.TimeoutException: org.apache.kafka.common.errors.TimeoutException: Timeout expired after 60000milliseconds while awaiting InitProducerId
> 2020-07-17T11:43:47.9695376Z Caused by: org.apache.kafka.common.errors.TimeoutException: Timeout expired after 60000milliseconds while awaiting InitProducerId

> {code}


[jira] [Commented] (FLINK-20329) Elasticsearch7DynamicSinkITCase hangs

[ https://issues.apache.org/jira/browse/FLINK-20329?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17273341#comment-17273341 ]

Guowei Ma commented on FLINK-20329:

---

https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=12571=logs=961f8f81-6b52-53df-09f6-7291a2e4af6a=60581941-0138-53c0-39fe-86d62be5f407

> Elasticsearch7DynamicSinkITCase hangs

> -

>

> Key: FLINK-20329

> URL: https://issues.apache.org/jira/browse/FLINK-20329

> Project: Flink

> Issue Type: Bug

> Components: Connectors / ElasticSearch

>Affects Versions: 1.12.0

>Reporter: Dian Fu

>Priority: Critical

> Labels: test-stability

> Fix For: 1.13.0

>

>

> https://dev.azure.com/apache-flink/apache-flink/_build/results?buildId=10052=logs=d44f43ce-542c-597d-bf94-b0718c71e5e8=03dca39c-73e8-5aaf-601d-328ae5c35f20

> {code}

> 2020-11-24T16:04:05.9260517Z [INFO] Running org.apache.flink.streaming.connectors.elasticsearch.table.Elasticsearch7DynamicSinkITCase
> 2020-11-24T16:19:25.5481231Z ==
> 2020-11-24T16:19:25.5483549Z Process produced no output for 900 seconds.
> 2020-11-24T16:19:25.5484064Z ==

> 2020-11-24T16:19:25.5484498Z