[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 FYI: we are working to fix the CI machines now so I won't be able ATM to run any job on it :( I hope to have the CI machines up and running soon to continue :+1: ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user qihongxu commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > @qihongxu @michaelandrepearce > I'm running now a CI job: it will take some time, but when it will be fine I will merge this > @qihongxu After all the relevant bits re paging will be merged I will send another PR with the same 2 commits I have sent to your branch: are you available to give some help to check the effects of that PR on your tests? > @qihongxu big thanks for all the effort on this!! And providing the testing time My pleasure :) We are glad to see any boost in perf, especially on paging mode. I will keep a close watch on the new PR you mentioned and ran some more tests as we have done in this issue if needed. Also thank you all for reviews and works on this PR! ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @qihongxu big thanks for all the effort on this!! ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @qihongxu @michaelandrepearce I'm running now a CI job: it will take some time, but when it will be fine I will merge this :+1: @qihongxu After all the relevant bits re paging will be merged I will send another PR with the same 2 commits I have sent to your branch: are you available to give some help to check the effects of that PR on your tests? ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 Yes if @qihongxu agree to give an hand :P re this pr probably make sense to include at least the second commit of my PR that was unrelated to the new cache impl and was just avoiding an unnecessary query that was always performed... ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @franz1981 i think well have to do some more real world testing with it (difference between a isolated bench and a full e2e test), with @qihongxu help hopefully, it might be something odd that by releasing some performance it causes an odd bottleneck somewhere else. Are you ok, if once full CI passes we merge as is, and continue this on a separate pr? ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce I would like first to trigger a CI job of some kind, maybe @clebertsuconic can help with his superbox (just this time) to get an answer sooner? Re the cache I was thinking already to send another PR, but I have verified that is virtually impossible that's the reason of the consumer slow-down. These are the numbers of a the bench comparing it with the original version: ``` Benchmark (size) (type) Mode Cnt Score Error Units CacheBench.getMessage1 32 chunked thrpt 10 150039261.251 ± 12504804.694 ops/s CacheBench.getMessage1 32 linkedlist thrpt 10 31776755.611 ± 1405838.635 ops/s CacheBench.getMessage11024 chunked thrpt 10 31433127.788 ± 3902498.303 ops/s CacheBench.getMessage11024 linkedlist thrpt 102638404.341 ± 119171.758 ops/s CacheBench.getMessage1 102400 chunked thrpt 10 344799.911 ± 27267.965 ops/s CacheBench.getMessage1 102400 linkedlist thrpt 10 20020.925 ± 5392.418 ops/s CacheBench.getMessage3 32 chunked thrpt 10 384605640.192 ± 35164543.632 ops/s CacheBench.getMessage3 32 linkedlist thrpt 10 14124979.510 ± 2875341.272 ops/s CacheBench.getMessage31024 chunked thrpt 10 90418506.375 ± 4593688.556 ops/s CacheBench.getMessage31024 linkedlist thrpt 101562687.000 ± 91433.926 ops/s CacheBench.getMessage3 102400 chunked thrpt 10 978575.016 ± 44800.161 ops/s CacheBench.getMessage3 102400 linkedlist thrpt 10 21614.717 ± 5344.742 ops/s ``` Where `getMessage1` is `LivePageCacheImpl::getMessage` called @ random positions by 1 thread and `getMessage3` is `LivePageCacheImpl::getMessage` called @ random positions by 3 threads. `chunked` is the version and `linkedlist` the original version: the difference is quite large and the new version just scale linearly... ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @franz1981 based on this, shall we merge this pr as is which is quite impressive result at a combined 49.4k. And then work on livepagecache changes separately? ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > A possible explanation might be that in our case consumers were reading old pages while producers were writing new pages. There is no intersection between these two groups such as a common page being writing&reading by both of them. So why it the consumer side is being slowed down? I need to check, but I remember that consumers were querying the live page cache, but I could be wrong. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @qihongxu Sorry to ask twice, but I haven't understood if `Ver no lock & new livePageCache` was making uses of this pr https://github.com/qihongxu/activemq-artemis/pull/1 ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @qihongxu ping! :) I'm too curious :) ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @qihongxu > Ver no lock & new livePageCache Is making use of the last 2 commits I have sent as a PR to your repository? It sould be way lot faster then `Ver no lock` ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user qihongxu commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce @franz1981 After we ran tests on both version (one with no lock and the other with new LivePageCache & no lock ), the result chart is as below. Â | Send&Receive | In Total -- | -- | -- Ver Original | 30k/12.5k | 32.5k Ver Modified checkDepage | 31.1k/11.3k | 42.4k Ver Modified hasNext | 23.8k/23.5k | 47.3k Ver no lock | **22.9k/26.5k** | **49.4k** Ver no lock & new livePageCache | **24.5k/22k** | **46.5k** Tests are implemented with same conditions before. Clients consumed and produced on the same queue with 10 million messages in it. All version are based on Artemis-2214, which cache something in PagedRef. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user qihongxu commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > @michaelandrepearce @qihongxu Ok, checked: the latest version of this PR + my branch https://github.com/franz1981/activemq-artemis/tree/lock-free-live-page-cache is fully non-blocking :) Nice work!! > Be great to see a final stat in @qihongxu test env As soon as I'm back Monday I will try implement same tests on both version(this and franz's). ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce Done, the PR has been sent, now we can just wait the perf results on it :) I have improved quite a bit the live page cache behaviour/reliability (especially if OOME), but sadly I see that the most called method `getMessage` cannot be improved anymore without making the lock-free code a real nightmare. The original version was O(n) depending which message was queried, because it needs to walk the entire linked list of paged messages. In my version I have amortized the cost by using an interesting hybrid between an ArrayList and a LinkedList, similar to https://en.wikipedia.org/wiki/Unrolled_linked_list, but (very) optimized for addition. I'm mentioning this, because is a long time I want to design a single-threaded version of this same data-structure to be used as the main datastructure inside QueueImpl. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce Good idea! let me do it now ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @franz1981 did you send a pr to @qihongxu branch so he can merge it and this pr picks it up? Be great to see a final stat in @qihongxu test env ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce @qihongxu Ok, checked: the latest version of this PR + my branch https://github.com/franz1981/activemq-artemis/tree/lock-free-live-page-cache is never blocked :) ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce @qihongxu I have re-implemented the LivePageCache to be completly lock-free: https://github.com/franz1981/activemq-artemis/tree/lock-free-live-page-cache Fell free to try it into this PR too and I can do a PR to your branch. I will provide soon some charts of the contention state :+1: ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > whats the current PR look like in heat maps? I'm finishing a thing to fix `LivePageCacheImpl` too, hopefully today and I will post: impl-wise seems good to me as well :+1: ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @qihongxu looks good to me. @franz1981 whats the current PR look like in heat maps? ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > em.Could you please tell us which issues? We need to verify how it affects our cluster. The big issue im relating to, which became a night mare for my organisation, was that under high concurrency (high throughput and low latency broker setup), the buffers can get mixed up, and was causing index out of bounds issues. Fixes were multiple: https://github.com/apache/activemq-artemis/commit/024db5bd3c1656d265daf60c9e3a362d53b9088b https://github.com/apache/activemq-artemis/commit/da7fb89037481fb6343c760010d4553ff28ac87e Im also aware there have been some other concurrency fixes for smaller issues. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user qihongxu commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce Removed readlock of isPaging(). Also as @franz1981 suggested now only volatile load addressFullMessagePolicy once on each call. Please review and notify me if any more test needed:) ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user wy96f commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > Id agree, im just cautious as we've been hit a few times with concurrency issues that have been a nightmare to find (and quite recently as last month!). em.Could you please tell us which issues? We need to verify how it affects our cluster. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > @michaelandrepearce > > > Do you get this on master or this PR (im mean is that a typo)? > > I've got that on master! ok so i think we not need worry on this for realms of this PR. (probably needs looking into, but doesn;t need to be solved in this pr - imo) ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > If we return `true` from the dirty read we can just return it, while if we found the it `false` we could attempt to enter the read lock and validate that's not paging for real. Ive literally gone through every case, what occurs is we call isPaging within an if statement, and then do some logic after, as such anyhow any action we do within these if statements anyhow will be based off a stale state. Im starting to just think we make isPaging not use a read lock (aka make it dirty), as its only used in queueimpl like mentioned and for queuecontrol (aka the admin gui) ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce > Im starting to feel like Alice here and were going to end up going into a rabbit hole ;) and will end up with the original isPaging just being dirty. +1 same exact feeling Quoting myself twice for @wy96f: > LivePageCacheImpl (in violet) is now a major contention point and > Compaction is stealing lot of cpu and I/O Just a thought: we can just choose in what being less stale or not. If we return `true` from the dirty read we can just return it, while if we found the it `false` we could attempt to enter the read lock and validate that's not paging for real. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce > Do you get this on master or this PR (im mean is that a typo)? I've got that on master! ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > @michaelandrepearce @qihongxu Just a lil OT but I'm getting these warns on master: > > ``` > 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,136 during compact replay > 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,139 during compact replay > 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,142 during compact replay > 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,145 during compact replay > 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,148 during compact replay > 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,151 during compact replay > 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,154 during compact replay > ``` Do you get this on master? If not then that is a BIG worry ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > Looking at the CPU graph instead I can see many odd things ie Compaction is stealing lot of cpu and I/O: Nice find. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @franz1981 nice graphs, looks like essentially isPaging in QueueImpl unless everything is dirtyRead then we simply move the problem somewhere else, i think we probably need to make then a more general call if all these are safe to have dirty read or not. (Im starting to feel like ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > > > CursorIterator:hasNext > > > > > > Im bit concerned with this doing a dirty read, as this isnt something that is trigger an ascyn action, in actual fact the hasNext is purposefully synchronized. (especially as recently some concurrency issues have been found in paging (e.g. howards current pr) im sensitive to us being too hap hazard here. > > I don't get why isPaging() in hasNext() needs to be consistent. The paging status can change after isPaging() unless we readlock isPaging() and subsequent operations. Without readlock, a) if isPaging() returns true, but the other thread set it to false, subsequent next() call reads no data and returns null; b) if isPaging() returns false, but the other thread set it to true(a new message coming), deliverAsync would be called later anyway. if thats the case then the sync method could be removed also, though i think @clebertsuconic has given some further info to why this is like it is. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 So out the two, the checkDepage is the safer one to use a dirty read, so id expect to see that changed first before anything else. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user qihongxu commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce > @qihongxu you're using JMS api not core api then? Yes (sometimes for for compatibility concern) > @qihongxu i dont see checkDepage using the dirtyRead in current commit Sorry I probably did not make it clear enough in my last comment. As the chart shows we tried checkDepage using the dirtyRead, but found it makes no difference in perf. We even changed isPaging() method in PageSubscriptionImpl from `return pageStore.isPaging();` to `return pageStore.isPagingDirtyRead();`. In this way not only checkDepage, but also other methods that call PageSubscription.isPaging() (as @franz1981 shown in flame charts above) will all use dirtyRead. But still, same perf as before. From all above we thought it's safer to leave others unchanged, only force CursorIterator:hasNext to use dirtyRead since it affects perf a lot. My latest RP is based on these concerns. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user clebertsuconic commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 during initial development years ago I needed to be careful when to leave paging. this needed some sync points to make sure depage would set it synchronously. I'm not saying anything against the change (I'm still in vacation mode, I'm back on monday).. just saying what was the original intent when it was written. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user wy96f commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > > CursorIterator:hasNext > > Im bit concerned with this doing a dirty read, as this isnt something that is trigger an ascyn action, in actual fact the hasNext is purposefully synchronized. (especially as recently some concurrency issues have been found in paging (e.g. howards current pr) im sensitive to us being too hap hazard here. I don't get why isPaging() in hasNext() needs to be consistent. The paging status can change after isPaging() unless we readlock isPaging() and subsequent operations. Without readlock, a) if isPaging() returns true, but the other thread set it to false, subsequent next() call reads no data and returns null; b) if isPaging() returns false, but the other thread set it to true(a new message coming), deliverAsync would be called later anyway. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

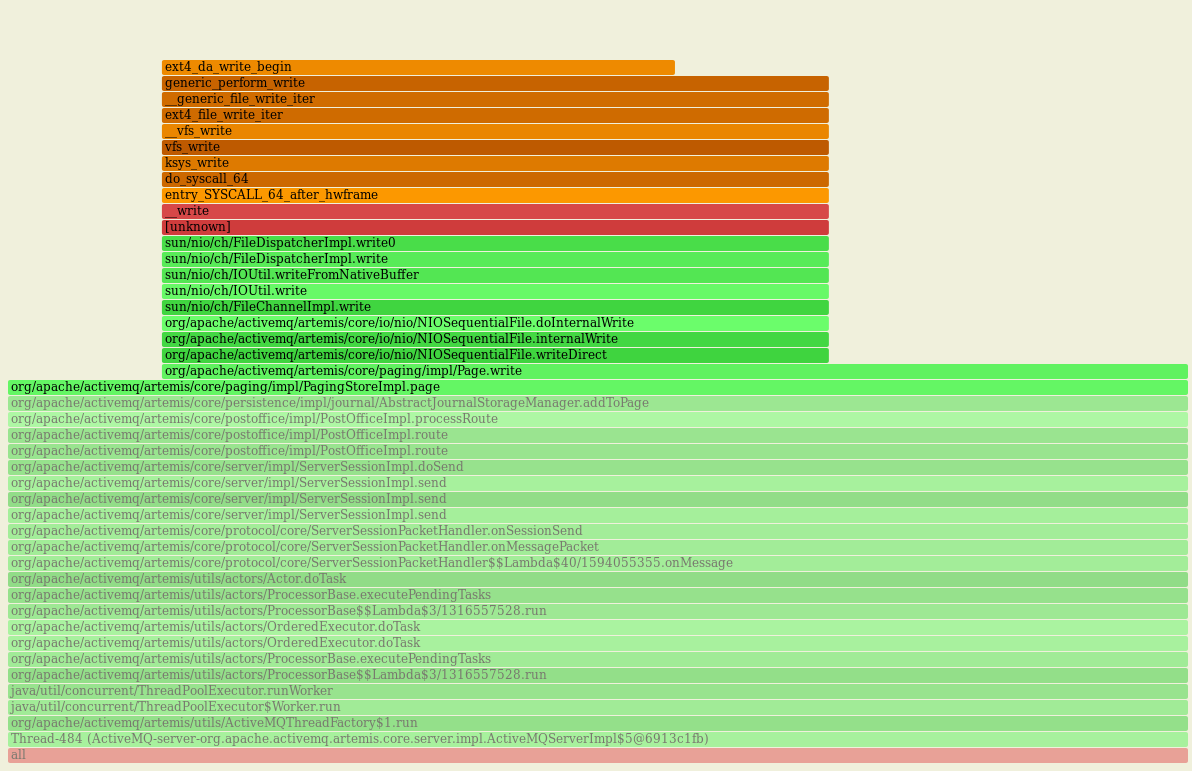

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 Looking at the CPU graph instead I can see many odd things: - Page writing is double copying the heap ByteBuffer into a native ByteBuffer to write on the page file:  - Compaction is stealing lot of cpu and I/O:  ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

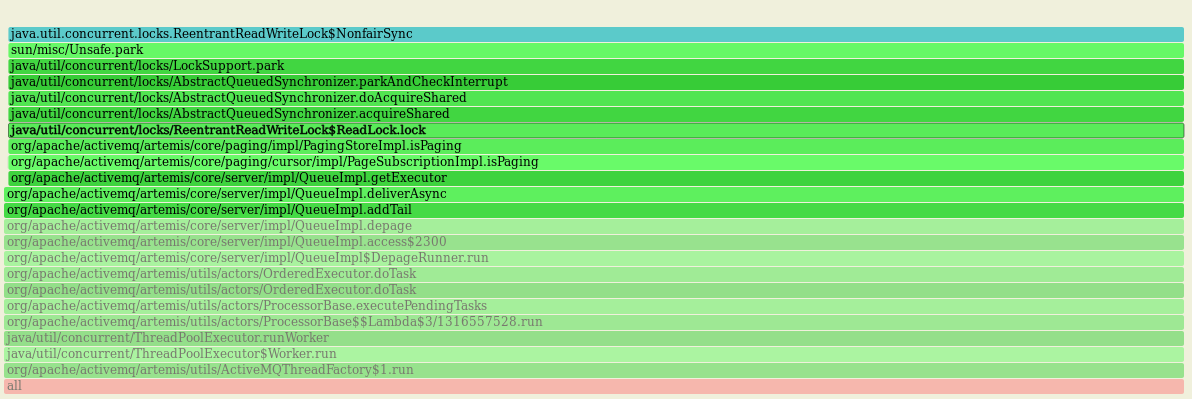

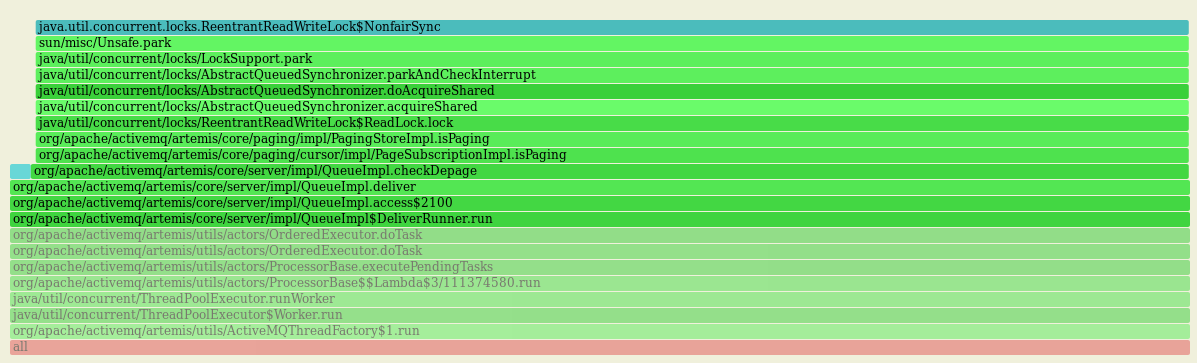

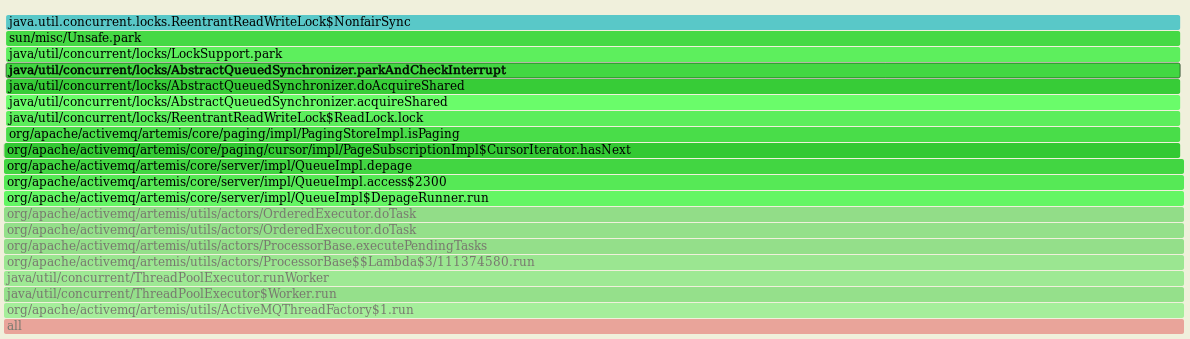

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 I have taken a look on the version used for this PR + the change suggested by @michaelandrepearce on checkDepage and I have found that: LivePageCacheImpl (in violet) is now a major contention point:  While re `isPaging`:  and  Are now source of contention ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce @qihongxu Just a lil OT but I'm getting these warns on master: ``` 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,136 during compact replay 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,139 during compact replay 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,142 during compact replay 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,145 during compact replay 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,148 during compact replay 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,151 during compact replay 2019-01-03 17:36:44,408 WARN [org.apache.activemq.artemis.journal] AMQ142007: Can not find record 8,103,154 during compact replay ``` ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @qihongxu i dont see checkDepage using the dirtyRead in current commit ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > The version I have shown is just master ie any read lock is there! I get that, im more relfecting on this change in PR. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 The version I have shown is just master ie any read lock is there! ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > CursorIterator:hasNext Im bit concerned with this doing a dirty read, as this isnt something that is trigger an ascyn action, in actual fact the hasNext is purposefully synchronized. @franz1981 if checkDepage is removed from the lock, why would DepageRunner now be locking it up so bad? ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > > Instead of transaction consumer you could use client acknowledge or even individual acknowledge. > > It seems that both client-acknowledge and individual-acknowledge mode will finally use Transaction at server side. Considering these modes have no obvious difference in performance, we choose to use transaction as itâs more reliable and supports rollback. @qihongxu you're using JMS api not core then? ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user franz1981 commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @qihongxu Just as a confirmation, I have used https://github.com/jvm-profiling-tools/async-profiler on lock events ie time while waiting to enter into a lock and I have found the same exact behaviours explained above: QueueImpl::checkDepage  QueueImpl::DepageRunner  both are calling `PagingStoreImpl::isPaging` and are blocking each others ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user qihongxu commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > Instead of transaction consumer you could use client acknowledge or even individual acknowledge. It seems that both client-acknowledge and individual-acknowledge mode will finally use Transaction at server side. Considering these modes have no obvious difference in performance, we choose to use transaction as itâs more reliable and supports rollback. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user qihongxu commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 > @qihongxu, > > re: > " > According to this we have an internal branch that removes isPaging() methodâs readlock in PagingStoreImpl, along with adding pageSyncTimerâs specific executor. After that we performed a same test and get a result of 21k/25k Send&Receiveâin total 46k TPS. This version runs smoothly in our use case but we are still exploring potential effects. > " > > Agree that as the QueueImpl:checkDepage which is called from deliver method, only schedules another depage asynchronously that the read barrier lock for that is over kill as it doesn't have to be strongly consistent correct. > > I think changing the current method could be dangerous, unless we do a fuller analysis on all its uses, but i think we could easily add a new method named something like "isPagingDirtyRead" which can return without the readlock, it will mean the returned value isn't always 100% accurate but in cases such as checkDepage, we don't need it to be, its just a single, and we can call it where we know its safe to. > > Would you be happy adding this, (will need to be exposed via PageSubscription)? And updating checkDepage to use that one? As you suggested we tried update checkDepage with a new "isPagingDirtyRead" method which can return without the readlock. But after running same tests on this version it seems that checkDepage did not affect receive performance too much. Instead, CursorIterator#hasNext in PageSubscriptionImpl also called isPaging() and had a significant impact on receive performance. According to this we updated hasNext() and forced it to use the new âisPagingDirtyReadâ. Below is the result chart. (P.S the original ver is a control group, not exactly the âoriginalâ master-branch build. It has been applied with specific pageSyncTimer executor, and cache durable in PageReference - See PR Artemis-2214.) Â | Send&Receive | In Total -- | -- | -- Ver Original | 30k/12.5k | 32.5k Ver Modified checkDepage | 31.1k/11.3k | 42.4k Ver Modified hasNext | 23.8k/23.5k | 47.3k Detailed TPS chart can be seen in attachment. (Sorry we dont have enough time for running tests. Just got back to work today) [TPS chart.docx](https://github.com/apache/activemq-artemis/files/2723731/TPS.chart.docx) ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @qihongxu, re: " According to this we have an internal branch that removes isPaging() methodâs readlock in PagingStoreImpl, along with adding pageSyncTimerâs specific executor. After that we performed a same test and get a result of 21k/25k Send&Receiveâin total 46k TPS. This version runs smoothly in our use case but we are still exploring potential effects. " Agree that as the QueueImpl:checkDepage which is called from deliver method, only schedules another depage asynchronously that the read barrier lock for that is over kill as it doesn't have to be strongly consistent correct. I think changing the current method could be dangerous, unless we do a fuller analysis on all its uses, but i think we could easily add a new method named something like "isPagingDirtyRead" which can return without the readlock, it will mean the returned value isn't always 100% accurate but in cases such as checkDepage, we don't need it to be, its just a single. Would you be happy adding this, (will need to be exposed via PageSubscription)? And updating checkDepage to use that one? ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 The bit around lock contention on paging check is very interesting where @qihongxu removed it and performance rose, this would suggest consumer perf change isnt related to io but due to over lock contention in code. Def would be good to see if its safe to remove ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user clebertsuconic commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 The receiver could be slower because of more IO. Thatâs not a bad thing. Iâm on vacation this week. I wonât be able to get to this. Just saying why the receiver could be slower. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 If you could supply more complete stats with ramp ups e.g pef at 1k, 2k, 3k...5k...15k..20k.21k.22k...25k etc etc, over time. Rather than just a summary snapshot. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user qihongxu commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 @michaelandrepearce As presented in test result adding a specific executor do improve producer rates, and also means page() method in PagingStoreImpl will acquire writelock more often. At the same time QueueImpl::deliver will call isPaging() to check whether queue is paging or not, which acquire readlock and make it a race condition. This may explain why the increase of producer rates is accompanied by the decrease of consumer rates. According to this we have an internal branch that removes isPaging() methodâs readlock in PagingStoreImpl, along with adding pageSyncTimerâs specific executor. After that we performed a same test and get a result of 21k/25k Send&Receiveâin total 46k TPS. This version runs smoothly in our use case but we are still exploring potential effects. The reason why we use transactional receive is to make sure every message indeed delivered and consumed. Say that if we do non-tx receive and consumer system fail in the following processing chain, this message may be delivered but actually âlostâ. So we choose to let consumer decide when should they ack and commit, instead of auto-commit acks. ---

[GitHub] activemq-artemis issue #2484: ARTEMIS-2216 Use a specific executor for pageS...

Github user michaelandrepearce commented on the issue: https://github.com/apache/activemq-artemis/pull/2484 Comments on this are: We have shared executor and thread pools as a way to pool these its part artemis design so that these can be controlled, eg the number of threads are configurable. And theres uses cases for why, we dont just add executors. If we add a new pool it should be configurable like others. Whilst your use case this significantly improves things. As you note this change isnt entirely positive to all users as it does impact use cases where people may care more on the consumer side (i am one one of those). If anything if the broker is saturated its more important consumers for us can dequeue and if anything producers backpressure. As if consumers cant keep up and producer peak continues you can very soon end up situation where paging depth will just grow and grow. It would be good to get more stats therefore on how as producer rates ramup how consumer rates ramp down. And crticial cross over points. Along with number of remote consumers and producers. All of this could be mute if there is a way to eliminate the negative effect this change has. ---