[GitHub] [spark] olaky commented on pull request #36762: [SPARK-39259][SQL][TEST][FOLLOWUP] Fix Scala 2.13 `ClassCastException` in `ComputeCurrentTimeSuite`

olaky commented on PR #36762: URL: https://github.com/apache/spark/pull/36762#issuecomment-1148259858 @dongjoon-hyun thanks a lot for picking this up for me! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] olaky commented on pull request #36753: [SPARK-39259][SQL][3.2] Evaluate timestamps consistently in subqueries

olaky commented on PR #36753: URL: https://github.com/apache/spark/pull/36753#issuecomment-1148258363 I cherry-picked 583a9c75bbb35387169d4f0cf763ef566d899954 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] zhengruifeng commented on pull request #35250: [SPARK-37961][SQL] Override maxRows/maxRowsPerPartition for some logical operators

zhengruifeng commented on PR #35250: URL: https://github.com/apache/spark/pull/35250#issuecomment-1148255084 @cloud-fan Sure, Let me update this PR -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] LuciferYang commented on pull request #36732: [SPARK-39345][CORE][SQL][DSTREAM][ML][MESOS][SS] Replace `filter(!condition)` with `filterNot(condition)`

LuciferYang commented on PR #36732: URL: https://github.com/apache/spark/pull/36732#issuecomment-1148250861 > No, we wouldn't backport this, that's more change. Does this offer any benefit? I'm not sure it's more readable even. If the readability is not improved, let me close this pr. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] shiyuhang0 commented on pull request #21990: [SPARK-25003][PYSPARK] Use SessionExtensions in Pyspark

shiyuhang0 commented on PR #21990: URL: https://github.com/apache/spark/pull/21990#issuecomment-1148248826 Why not port it to Spark < 3 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #36776: [SPARK-38997][SPARK-39037][SQL][FOLLOWUP] `PushableColumnWithoutNestedColumn` need be translated to predicate too

beliefer commented on code in PR #36776:

URL: https://github.com/apache/spark/pull/36776#discussion_r890808786

##

sql/core/src/main/scala/org/apache/spark/sql/catalyst/util/V2ExpressionBuilder.scala:

##

@@ -55,8 +55,13 @@ class V2ExpressionBuilder(

} else {

Some(FieldReference(name))

}

-case pushableColumn(name) if !nestedPredicatePushdownEnabled =>

- Some(FieldReference.column(name))

+case col @ pushableColumn(name) if !nestedPredicatePushdownEnabled =>

Review Comment:

OK

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on pull request #35612: [SPARK-38289][SQL] Refactor SQL CLI exit code to make it more clear

AngersZh commented on PR #35612: URL: https://github.com/apache/spark/pull/35612#issuecomment-1148234463 Looks like the latest failed test not related to this pr -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] huaxingao commented on a diff in pull request #36776: [SPARK-38997][SPARK-39037][SQL][FOLLOWUP] `PushableColumnWithoutNestedColumn` need be translated to predicate too

huaxingao commented on code in PR #36776:

URL: https://github.com/apache/spark/pull/36776#discussion_r890797844

##

sql/core/src/main/scala/org/apache/spark/sql/catalyst/util/V2ExpressionBuilder.scala:

##

@@ -55,8 +55,13 @@ class V2ExpressionBuilder(

} else {

Some(FieldReference(name))

}

-case pushableColumn(name) if !nestedPredicatePushdownEnabled =>

- Some(FieldReference.column(name))

+case col @ pushableColumn(name) if !nestedPredicatePushdownEnabled =>

Review Comment:

+1

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #36776: [SPARK-38997][SPARK-39037][SQL][FOLLOWUP] `PushableColumnWithoutNestedColumn` need be translated to predicate too

cloud-fan commented on code in PR #36776:

URL: https://github.com/apache/spark/pull/36776#discussion_r890793608

##

sql/core/src/main/scala/org/apache/spark/sql/catalyst/util/V2ExpressionBuilder.scala:

##

@@ -55,8 +55,13 @@ class V2ExpressionBuilder(

} else {

Some(FieldReference(name))

}

-case pushableColumn(name) if !nestedPredicatePushdownEnabled =>

- Some(FieldReference.column(name))

+case col @ pushableColumn(name) if !nestedPredicatePushdownEnabled =>

Review Comment:

can we merge the code a bit more?

```

case col @ pushableColumn(name) =>

val ref = if (nestedPredicatePushdownEnabled) ... else ...

if (predicate) ... else ...

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] xiuzhu9527 commented on pull request #36784: [SPARK-39396][SQL] Fix LDAP login exception 'error code 49 - invalid credentials'

xiuzhu9527 commented on PR #36784: URL: https://github.com/apache/spark/pull/36784#issuecomment-1148226308 @HyukjinKwon please take a look, Thanks ! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] xiuzhu9527 opened a new pull request, #36784: [SPARK-39396][SQL] Fix LDAP login exception 'error code 49 - invalid credentials'

xiuzhu9527 opened a new pull request, #36784: URL: https://github.com/apache/spark/pull/36784 ### What changes were proposed in this pull request? In the PR, Fixed the problem that the DN is (cn=user,ou=people, dc=example, dc=com) LDAP login failure. ### Why are the changes needed? The hard coded DN in the org.apache.hive.service.auth.LdapAuthenticationProviderImpl#Authenticate() is (uid=user,ou=people, dc=example, dc=com), resulting in LDAP authentication failure ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing test. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] xiuzhu9527 closed pull request #36783: [SPARK-39396][SQL] Fix LDAP login exception 'error code 49 - invalid …

xiuzhu9527 closed pull request #36783: [SPARK-39396][SQL] Fix LDAP login exception 'error code 49 - invalid … URL: https://github.com/apache/spark/pull/36783 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] xiuzhu9527 opened a new pull request, #36783: [SPARK-39396][SQL] Fix LDAP login exception 'error code 49 - invalid …

xiuzhu9527 opened a new pull request, #36783: URL: https://github.com/apache/spark/pull/36783 ### What changes were proposed in this pull request? In the PR, Fixed the problem that the DN is (cn=user,ou=people, dc=example, dc=com) LDAP login failure. ### Why are the changes needed? The hard coded DN in the org.apache.hive.service.auth.LdapAuthenticationProviderImpl#Authenticate() is (uid=user,ou=people, dc=example, dc=com), resulting in LDAP authentication failure ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Existing test. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon closed pull request #36782: [SPARK-39394][DOCS][SS] Improve PySpark Structured Streaming page more readable

HyukjinKwon closed pull request #36782: [SPARK-39394][DOCS][SS] Improve PySpark Structured Streaming page more readable URL: https://github.com/apache/spark/pull/36782 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #36782: [SPARK-39394][DOCS][SS] Improve PySpark Structured Streaming page more readable

HyukjinKwon commented on PR #36782: URL: https://github.com/apache/spark/pull/36782#issuecomment-1148212500 Merged to master. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] mridulm commented on a diff in pull request #36775: [SPARK-39389]Filesystem closed should not be considered as corrupt files

mridulm commented on code in PR #36775:

URL: https://github.com/apache/spark/pull/36775#discussion_r890765675

##

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileScanRDD.scala:

##

@@ -253,6 +253,9 @@ class FileScanRDD(

// Throw FileNotFoundException even if `ignoreCorruptFiles`

is true

case e: FileNotFoundException if !ignoreMissingFiles =>

throw e

case e @ (_: RuntimeException | _: IOException) if

ignoreCorruptFiles =>

+if (e.getMessage.contains("Filesystem closed")) {

Review Comment:

+1 to @JoshRosen's proposal here.

Given that hadoop is throwing a generic exception here, and given the lack

of principled alternatives available - walking the stack allows us to

reasonably detect if the cause is due to hadoop filesystem being closed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] mridulm commented on a diff in pull request #36775: [SPARK-39389]Filesystem closed should not be considered as corrupt files

mridulm commented on code in PR #36775:

URL: https://github.com/apache/spark/pull/36775#discussion_r890765675

##

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileScanRDD.scala:

##

@@ -253,6 +253,9 @@ class FileScanRDD(

// Throw FileNotFoundException even if `ignoreCorruptFiles`

is true

case e: FileNotFoundException if !ignoreMissingFiles =>

throw e

case e @ (_: RuntimeException | _: IOException) if

ignoreCorruptFiles =>

+if (e.getMessage.contains("Filesystem closed")) {

Review Comment:

+1 to @JoshRosen's proposal.

Given that hadoop is throwing a generic exception here, and given the lack

of principled alternatives available - walking the stack allows us to

reasonably detect if the cause is due to hadoop filesystem being closed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] huaxingao commented on pull request #36777: [SPARK-39390][CORE] Hide and optimize `viewAcls`/`viewAclsGroups`/`modifyAcls`/`modifyAclsGroups` from INFO log

huaxingao commented on PR #36777: URL: https://github.com/apache/spark/pull/36777#issuecomment-1148178978 Merged to master. Thanks @dcoliversun -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] huaxingao closed pull request #36777: [SPARK-39390][CORE] Hide and optimize `viewAcls`/`viewAclsGroups`/`modifyAcls`/`modifyAclsGroups` from INFO log

huaxingao closed pull request #36777: [SPARK-39390][CORE] Hide and optimize `viewAcls`/`viewAclsGroups`/`modifyAcls`/`modifyAclsGroups` from INFO log URL: https://github.com/apache/spark/pull/36777 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] otterc commented on a diff in pull request #35906: [SPARK-33236][shuffle] Enable Push-based shuffle service to store state in NM level DB for work preserving restart

otterc commented on code in PR #35906:

URL: https://github.com/apache/spark/pull/35906#discussion_r890584847

##

common/network-yarn/src/main/java/org/apache/spark/network/yarn/YarnShuffleService.java:

##

@@ -230,11 +241,14 @@ protected void serviceInit(Configuration externalConf)

throws Exception {

// when it comes back

if (_recoveryPath != null) {

registeredExecutorFile = initRecoveryDb(RECOVERY_FILE_NAME);

+mergeManagerFile =

initRecoveryDb(SPARK_SHUFFLE_MERGE_RECOVERY_FILE_NAME);

}

- TransportConf transportConf = new TransportConf("shuffle", new

HadoopConfigProvider(_conf));

- MergedShuffleFileManager shuffleMergeManager =

newMergedShuffleFileManagerInstance(

-transportConf);

+ TransportConf transportConf = new TransportConf("shuffle",new

HadoopConfigProvider(_conf));

Review Comment:

why this change?

##

common/network-yarn/src/main/java/org/apache/spark/network/yarn/YarnShuffleService.java:

##

@@ -230,11 +241,14 @@ protected void serviceInit(Configuration externalConf)

throws Exception {

// when it comes back

if (_recoveryPath != null) {

registeredExecutorFile = initRecoveryDb(RECOVERY_FILE_NAME);

+mergeManagerFile =

initRecoveryDb(SPARK_SHUFFLE_MERGE_RECOVERY_FILE_NAME);

}

- TransportConf transportConf = new TransportConf("shuffle", new

HadoopConfigProvider(_conf));

- MergedShuffleFileManager shuffleMergeManager =

newMergedShuffleFileManagerInstance(

-transportConf);

+ TransportConf transportConf = new TransportConf("shuffle",new

HadoopConfigProvider(_conf));

+ if (shuffleMergeManager == null) {

Review Comment:

why did we add this condition here?

We don't check for the `blockHandler` below.

##

common/network-shuffle/src/main/java/org/apache/spark/network/shuffle/RemoteBlockPushResolver.java:

##

@@ -350,15 +416,27 @@ void closeAndDeletePartitionFilesIfNeeded(

* up older shuffleMergeId partitions. The cleanup will be executed in a

separate thread.

*/

@VisibleForTesting

- void closeAndDeletePartitionFiles(Map

partitions) {

+ void closeAndDeleteOutdatedPartitions(Map

partitions) {

partitions

.forEach((partitionId, partitionInfo) -> {

synchronized (partitionInfo) {

partitionInfo.closeAllFilesAndDeleteIfNeeded(true);

+

removeAppShufflePartitionInfoFromInDB(partitionInfo.appAttemptShuffleMergeId);

}

});

}

+ void removeAppShufflePartitionInfoFromInDB(AppAttemptShuffleMergeId

appAttemptShuffleMergeId) {

Review Comment:

Nit: `removeAppShufflePartitionInfoFromInDB` ->

`removeAppShufflePartitionInfoFromDB`

##

common/network-shuffle/src/main/java/org/apache/spark/network/shuffle/RemoteBlockPushResolver.java:

##

@@ -655,6 +743,197 @@ public void registerExecutor(String appId,

ExecutorShuffleInfo executorInfo) {

}

}

+

+ @Override

+ public void close() {

+if (db != null) {

+ try {

+db.close();

+ } catch (IOException e) {

+logger.error("Exception closing leveldb with registered app paths info

and "

++ "shuffle partition info", e);

+ }

+}

+ }

+

+ private void writeAppPathsInfoToDb(String appId, int attemptId, AppPathsInfo

appPathsInfo) {

+if (db != null) {

+ try {

+byte[] key = getDbAppAttemptPathsKey(new AppAttemptId(appId,

attemptId));

+String valueStr = mapper.writeValueAsString(appPathsInfo);

+byte[] value = valueStr.getBytes(StandardCharsets.UTF_8);

+db.put(key, value);

+ } catch (Exception e) {

+logger.error("Error saving registered app paths info", e);

+ }

+}

+ }

+

+ private void writeAppAttemptShuffleMergeInfoToDB(

+ String appId,

+ int appAttemptId,

+ int shuffleId,

+ int shuffleMergeId) {

+if (db != null) {

+ // Write AppAttemptShuffleMergeId into LevelDB for finalized shuffles

+ try{

+byte[] dbKey = getDbAppAttemptShufflePartitionKey(

+new AppAttemptShuffleMergeId(appId, appAttemptId, shuffleId,

shuffleMergeId));

+db.put(dbKey, new byte[0]);

+ } catch (Exception e) {

+logger.error("Error saving active app shuffle partition", e);

+ }

+}

+

+ }

+

+ private T parseDbKey(String key, String prefix, Class valueType) {

+try {

+ String json = key.substring(prefix.length() + 1);

+ return mapper.readValue(json, valueType);

+} catch (Exception exception) {

+ logger.error("Exception while parsing the DB key {}", key);

+ return null;

+}

+ }

+

+ private AppPathsInfo parseDBAppAttemptPathsValue(byte[] value, AppAttemptId

appAttemptId) {

+try {

+ return mapper.readValue(value, AppPathsInfo.class);

+} catch (Exception exception) {

+ logger.error("Exception while parsing the DB value for {}",

appAttemptId);

+ return null;

+}

+ }

+

+ priv

[GitHub] [spark] itholic opened a new pull request, #36782: [SPARK-39394][DOCS] Improve PySpark Structured Streaming page more readable

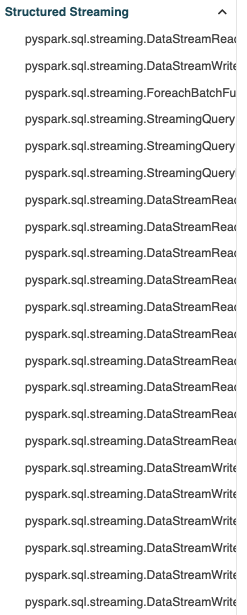

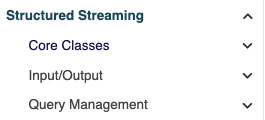

itholic opened a new pull request, #36782: URL: https://github.com/apache/spark/pull/36782 ### What changes were proposed in this pull request? This PR proposes to improve the PySpark Structured Streaming API reference page to be more readable, So far, the PySpark Structured Streaming API reference page is not-well organized so it's a bit uncomfortable to be read as below:  ### Why are the changes needed? The improvement of document readability will also improve the usability for PySpark Structured Streaming. ### Does this PR introduce _any_ user-facing change? Yes, now the documentation is categorized by its class or their own purpose more clearly as below:  ### How was this patch tested? The existing doc build in CI should cover. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] JoshRosen commented on a diff in pull request #36775: [SPARK-39389]Filesystem closed should not be considered as corrupt files

JoshRosen commented on code in PR #36775:

URL: https://github.com/apache/spark/pull/36775#discussion_r890727456

##

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileScanRDD.scala:

##

@@ -253,6 +253,9 @@ class FileScanRDD(

// Throw FileNotFoundException even if `ignoreCorruptFiles`

is true

case e: FileNotFoundException if !ignoreMissingFiles =>

throw e

case e @ (_: RuntimeException | _: IOException) if

ignoreCorruptFiles =>

+if (e.getMessage.contains("Filesystem closed")) {

Review Comment:

I agree that checking exception messages isn't the most robust solution.

Thinking about the risks of exception text matching, I see two potential

classes of problems:

1. _Genuine_ corruption might just-so-happen to produce an exception that

also contains "Filesystem closed", in which case we'd break a user's job.

2. The third-party FileSystem implementation could change its error

messages, which would cause this PR's fix to stop working.

Given the fact that the current code treats essentially _all_ exceptions as

corruption, a potentially-brittle solution which ignores issue (2) and solves

issue (1) _might_ not be a terrible trade-off.

For example, let's say that we're only trying to put a bandaid on the

`DFSClient` closed filesystem issue and aren't trying to solve the broader

class of issues I mentioned in my other comment. In that case, we might be able

to write some sort of helper function which would walk through the exception's

stack frames and look for a frame that calls `DFSClient.checkOpen`. Given that

Hadoop hasn't changed that method in 11+ years, it seems pretty unlikely that

they would break it in a future release.

Then, we could do something like

```

case e: IOException if isDFSClientClosedException(e) => throw e

```

before the current corruption ignoring case.

This would have no chance of mis-identifying corruption as non-corruption.

It's potentially brittle and doesn't necessarily solve all cases, but it could

greatly reduce the likelihood of data loss (even though it doesn't completely

eliminate it). Depending on the context of use, maybe that's an okay short-term

band-aid?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #35484: [SPARK-38181][SS][DOCS] Update comments in KafkaDataConsumer.scala

HyukjinKwon commented on PR #35484: URL: https://github.com/apache/spark/pull/35484#issuecomment-1148140363 Oh, also please enable GItHub Actions in your forked repository (https://github.com/ArvinZheng/spark). Apache Spark repository leverages PR author's GitHub Actions resources. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #35484: [SPARK-38181][SS][DOCS] Update comments in KafkaDataConsumer.scala

HyukjinKwon commented on PR #35484: URL: https://github.com/apache/spark/pull/35484#issuecomment-1148140028 @ArvinZheng mind rebasing this PR? seems like something went wrong about finding GA actions in your fork. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] anishshri-db commented on pull request #35484: [SPARK-38181][SS][DOCS] Update comments in KafkaDataConsumer.scala

anishshri-db commented on PR #35484: URL: https://github.com/apache/spark/pull/35484#issuecomment-1148138847 Seems like the tests are failing though ? Maybe try merging back and re-trigger ? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] anishshri-db commented on a diff in pull request #35484: [SPARK-38181][SS][DOCS] Update comments in KafkaDataConsumer.scala

anishshri-db commented on code in PR #35484: URL: https://github.com/apache/spark/pull/35484#discussion_r890722033 ## connector/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/consumer/KafkaDataConsumer.scala: ## @@ -298,9 +296,10 @@ private[kafka010] class KafkaDataConsumer( s"requested $offset") // The following loop is basically for `failOnDataLoss = false`. When `failOnDataLoss` is -// `false`, first, we will try to fetch the record at `offset`. If no such record exists, then -// we will move to the next available offset within `[offset, untilOffset)` and retry. -// If `failOnDataLoss` is `true`, the loop body will be executed only once. +// `false`, we will try to fetch the record at `offset`, if the record does not exist, we will +// try to fetch next available record within [offset, untilOffset). +// If `failOnDataLoss` is `true`, the loop body will be executed only once, either return the +// record at `offset` or throw an exception when the record does not exist Review Comment: Nit: Period at the end -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] JoshRosen commented on pull request #36775: [SPARK-39389]Filesystem closed should not be considered as corrupt files

JoshRosen commented on PR #36775: URL: https://github.com/apache/spark/pull/36775#issuecomment-1148136217 Does checking for filesystem closed exceptions completely fix this issue or are we vulnerable to race conditions? Skimming through the [Hadoop DFSClient code](https://github.com/apache/hadoop/blame/2dfa928a201560bc739ff5e4fe29f8bb19188927/hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/DFSClient.java), it looks like it only throws `Filesystem closed` IOExceptions from a `checkOpen()` call, but what happens if the FS is closed while a thread has proceeded past `checkOpen()` and is in the middle of some other operation? In that case we might get a different IOException but would presumably want to treat it the same way (because it is a side-effect of another task being interrupted rather than a side-effect of a corrupt file). It seems like the core problem is that we have a really overly-broad `catch` block which catches corrupt files but also catches many other potential things. For example, let's say that we get an IOException caused by a transient network connectivity issue to external storage: this doesn't meet the intuitive definition of "corrupt file" but would still get caught in the dragnet of the current exception handler. The current code seems biased towards identifying "false positive" instances of corruption (which can lead to correctness issues). If instead we wanted to bias towards false negatives (i.e. mis-identifying true corruption as a transient crash, therefore failing a user's job) then maybe we could have the code in `readFunc` wrap and re-throw exceptions in some sort of `CorruptFileException` wrapper and then modify the `catch` here to only ignore that new exception. This would require changes in a number of data sources, though. The FileScanRDD code might simply lack the necessary information to be able to identify true corruption cases, so pushing part of that decision one layer lower might make sense. I think that could be a breaking change for certain users, though, so we'd need to treat it as a user-facing change and document it appropriately (and might need add escape hatch flags in case users need to revert back to the old (arguably buggy) behavior). I'm not sure what's the right course of action here. I just wanted to flag that there's a potentially broader issue here. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on pull request #36776: [SPARK-38997][SPARK-39037][SQL][FOLLOWUP] `PushableColumnWithoutNestedColumn` need be translated to predicate too

beliefer commented on PR #36776: URL: https://github.com/apache/spark/pull/36776#issuecomment-1148132608 ping @huaxingao cc @cloud-fan -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #36773: [SPARK-39385][SQL] Translate linear regression aggregate functions for pushdown

beliefer commented on code in PR #36773:

URL: https://github.com/apache/spark/pull/36773#discussion_r890715857

##

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/DataSourceStrategy.scala:

##

@@ -750,6 +750,22 @@ object DataSourceStrategy

PushableColumnWithoutNestedColumn(right), _) =>

Some(new GeneralAggregateFunc("CORR", agg.isDistinct,

Array(FieldReference.column(left), FieldReference.column(right

+case aggregate.RegrIntercept(PushableColumnWithoutNestedColumn(left),

Review Comment:

two indents ?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HeartSaVioR commented on pull request #36737: [SPARK-39347] [SS] Generate wrong time window when (timestamp-startTime) % slideDuration…

HeartSaVioR commented on PR #36737: URL: https://github.com/apache/spark/pull/36737#issuecomment-1148131018 Sorry I'll find a time sooner. I'll also find someone able to review this in prior. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #36773: [SPARK-39385][SQL] Translate linear regression aggregate functions for pushdown

beliefer commented on code in PR #36773:

URL: https://github.com/apache/spark/pull/36773#discussion_r890714758

##

sql/core/src/main/scala/org/apache/spark/sql/jdbc/H2Dialect.scala:

##

@@ -72,6 +72,26 @@ private[sql] object H2Dialect extends JdbcDialect {

assert(f.children().length == 2)

val distinct = if (f.isDistinct) "DISTINCT " else ""

Some(s"CORR($distinct${f.children().head}, ${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_INTERCEPT" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_INTERCEPT($distinct${f.children().head},

${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_R2" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_R2($distinct${f.children().head}, ${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_SLOPE" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_SLOPE($distinct${f.children().head},

${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_SXX" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_SXX($distinct${f.children().head},

${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_SXY" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

Review Comment:

OK

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on pull request #35612: [SPARK-38289][SQL] Refactor SQL CLI exit code to make it more clear

AngersZh commented on PR #35612: URL: https://github.com/apache/spark/pull/35612#issuecomment-1148126351 > Tests are running in https://github.com/AngersZh/spark/runs/6765635006 > > Seems like Scala 2.13 build fails as below: > > ``` > [error] /home/runner/work/spark/spark/sql/hive-thriftserver/src/main/java/org/apache/hive/service/server/HiveServer2.java:263:1: error: cannot find symbol > [error] System.exit(SparkExitCode.EXIT_SUCCESS); > [error]^ symbol: variable EXIT_SUCCESS > [error] location: class SparkExitCode > [error] Note: Some input files use or override a deprecated API. > [error] Note: Recompile with -Xlint:deprecation for details. > [error] 1 error > ``` Updated -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #36773: [SPARK-39385][SQL] Translate linear regression aggregate functions for pushdown

beliefer commented on code in PR #36773:

URL: https://github.com/apache/spark/pull/36773#discussion_r890696454

##

sql/core/src/test/scala/org/apache/spark/sql/jdbc/JDBCV2Suite.scala:

##

@@ -,6 +,28 @@ class JDBCV2Suite extends QueryTest with

SharedSparkSession with ExplainSuiteHel

checkAnswer(df, Seq(Row(1d), Row(1d), Row(null)))

}

+ test("scan with aggregate push-down: linear regression functions with filter

and group by") {

+val df = sql(

+ """

+|SELECT

+| REGR_INTERCEPT(bonus, bonus),

+| REGR_R2(bonus, bonus),

+| REGR_SLOPE(bonus, bonus),

+| REGR_SXY(bonus, bonus)

+|FROM h2.test.employee where dept > 0 group by DePt""".stripMargin)

Review Comment:

OK

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #36773: [SPARK-39385][SQL] Translate linear regression aggregate functions for pushdown

beliefer commented on code in PR #36773:

URL: https://github.com/apache/spark/pull/36773#discussion_r890694554

##

sql/core/src/main/scala/org/apache/spark/sql/jdbc/H2Dialect.scala:

##

@@ -72,6 +72,26 @@ private[sql] object H2Dialect extends JdbcDialect {

assert(f.children().length == 2)

val distinct = if (f.isDistinct) "DISTINCT " else ""

Some(s"CORR($distinct${f.children().head}, ${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_INTERCEPT" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_INTERCEPT($distinct${f.children().head},

${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_R2" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_R2($distinct${f.children().head}, ${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_SLOPE" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_SLOPE($distinct${f.children().head},

${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_SXX" =>

Review Comment:

Thank you. I forgot to remove it.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #36662: [SPARK-39286][DOC] Update documentation for the decode function

beliefer commented on code in PR #36662:

URL: https://github.com/apache/spark/pull/36662#discussion_r890692975

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/stringExpressions.scala:

##

@@ -2504,9 +2504,10 @@ object Decode {

usage = """

_FUNC_(bin, charset) - Decodes the first argument using the second

argument character set.

-_FUNC_(expr, search, result [, search, result ] ... [, default]) - Decode

compares expr

- to each search value one by one. If expr is equal to a search, returns

the corresponding result.

- If no match is found, then Oracle returns default. If default is

omitted, returns null.

+_FUNC_(expr, search, result [, search, result ] ... [, default]) -

Compares expr

+ to each search value in order. If expr is equal to a search value,

_FUNC_ returns

+ the corresponding result. If no match is found, then it returns default.

If default

Review Comment:

I just want simplify the comment. The comment is OK too.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #36662: [SPARK-39286][DOC] Update documentation for the decode function

beliefer commented on code in PR #36662:

URL: https://github.com/apache/spark/pull/36662#discussion_r890691547

##

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/stringExpressions.scala:

##

@@ -2504,9 +2504,10 @@ object Decode {

usage = """

_FUNC_(bin, charset) - Decodes the first argument using the second

argument character set.

-_FUNC_(expr, search, result [, search, result ] ... [, default]) - Decode

compares expr

- to each search value one by one. If expr is equal to a search, returns

the corresponding result.

- If no match is found, then Oracle returns default. If default is

omitted, returns null.

+_FUNC_(expr, search, result [, search, result ] ... [, default]) -

Compares expr

+ to each search value in order. If expr is equal to a search value,

_FUNC_ returns

Review Comment:

I got it.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dcoliversun commented on a diff in pull request #36777: [SPARK-39390][CORE] Hide and optimize `viewAcls`/`viewAclsGroups`/`modifyAcls`/`modifyAclsGroups` from INFO log

dcoliversun commented on code in PR #36777:

URL: https://github.com/apache/spark/pull/36777#discussion_r890683738

##

core/src/main/scala/org/apache/spark/SecurityManager.scala:

##

@@ -87,10 +87,14 @@ private[spark] class SecurityManager(

private var secretKey: String = _

logInfo("SecurityManager: authentication " + (if (authOn) "enabled" else

"disabled") +

"; ui acls " + (if (aclsOn) "enabled" else "disabled") +

-"; users with view permissions: " + viewAcls.toString() +

-"; groups with view permissions: " + viewAclsGroups.toString() +

-"; users with modify permissions: " + modifyAcls.toString() +

-"; groups with modify permissions: " + modifyAclsGroups.toString())

+"; users with view permissions: " +

+(if (viewAcls.nonEmpty) viewAcls.mkString(",") else "EMPTY") +

Review Comment:

OK :)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dcoliversun commented on a diff in pull request #36777: [SPARK-39390][CORE] Hide and optimize `viewAcls`/`viewAclsGroups`/`modifyAcls`/`modifyAclsGroups` from INFO log

dcoliversun commented on code in PR #36777:

URL: https://github.com/apache/spark/pull/36777#discussion_r890683347

##

core/src/main/scala/org/apache/spark/SecurityManager.scala:

##

@@ -87,10 +87,14 @@ private[spark] class SecurityManager(

private var secretKey: String = _

logInfo("SecurityManager: authentication " + (if (authOn) "enabled" else

"disabled") +

"; ui acls " + (if (aclsOn) "enabled" else "disabled") +

-"; users with view permissions: " + viewAcls.toString() +

-"; groups with view permissions: " + viewAclsGroups.toString() +

-"; users with modify permissions: " + modifyAcls.toString() +

-"; groups with modify permissions: " + modifyAclsGroups.toString())

+"; users with view permissions: " +

Review Comment:

Fine. I delete extra spaces after `users`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #35612: [SPARK-38289][SQL] Refactor SQL CLI exit code to make it more clear

HyukjinKwon commented on PR #35612: URL: https://github.com/apache/spark/pull/35612#issuecomment-1148079558 Tests are running in https://github.com/AngersZh/spark/runs/6765635006 Seems like Scala 2.13 build fails as below: ``` [error] /home/runner/work/spark/spark/sql/hive-thriftserver/src/main/java/org/apache/hive/service/server/HiveServer2.java:263:1: error: cannot find symbol [error] System.exit(SparkExitCode.EXIT_SUCCESS); [error]^ symbol: variable EXIT_SUCCESS [error] location: class SparkExitCode [error] Note: Some input files use or override a deprecated API. [error] Note: Recompile with -Xlint:deprecation for details. [error] 1 error ``` -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] huaxingao commented on a diff in pull request #36777: [SPARK-39390][CORE] Hide and optimize `viewAcls`/`viewAclsGroups`/`modifyAcls`/`modifyAclsGroups` from INFO log

huaxingao commented on code in PR #36777:

URL: https://github.com/apache/spark/pull/36777#discussion_r890673570

##

core/src/main/scala/org/apache/spark/SecurityManager.scala:

##

@@ -87,10 +87,14 @@ private[spark] class SecurityManager(

private var secretKey: String = _

logInfo("SecurityManager: authentication " + (if (authOn) "enabled" else

"disabled") +

"; ui acls " + (if (aclsOn) "enabled" else "disabled") +

-"; users with view permissions: " + viewAcls.toString() +

-"; groups with view permissions: " + viewAclsGroups.toString() +

-"; users with modify permissions: " + modifyAcls.toString() +

-"; groups with modify permissions: " + modifyAclsGroups.toString())

+"; users with view permissions: " +

Review Comment:

nit: there is an extra space after `users`

##

core/src/main/scala/org/apache/spark/SecurityManager.scala:

##

@@ -87,10 +87,14 @@ private[spark] class SecurityManager(

private var secretKey: String = _

logInfo("SecurityManager: authentication " + (if (authOn) "enabled" else

"disabled") +

"; ui acls " + (if (aclsOn) "enabled" else "disabled") +

-"; users with view permissions: " + viewAcls.toString() +

-"; groups with view permissions: " + viewAclsGroups.toString() +

-"; users with modify permissions: " + modifyAcls.toString() +

-"; groups with modify permissions: " + modifyAclsGroups.toString())

+"; users with view permissions: " +

+(if (viewAcls.nonEmpty) viewAcls.mkString(",") else "EMPTY") +

Review Comment:

nit: In `mkString(",")`, add an empty space after `,` ?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] github-actions[bot] closed pull request #32397: [WIP][SPARK-35084][CORE] Spark 3: supporting "--packages" in k8s cluster mode

github-actions[bot] closed pull request #32397: [WIP][SPARK-35084][CORE] Spark 3: supporting "--packages" in k8s cluster mode URL: https://github.com/apache/spark/pull/32397 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] github-actions[bot] closed pull request #35417: [SPARK-38102][CORE] Support custom commitProtocolClass in saveAsNewAPIHadoopDataset

github-actions[bot] closed pull request #35417: [SPARK-38102][CORE] Support custom commitProtocolClass in saveAsNewAPIHadoopDataset URL: https://github.com/apache/spark/pull/35417 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] github-actions[bot] closed pull request #35363: [SPARK-38066][SQL] evaluateEquality should ignore attribute without min/max ColumnStat

github-actions[bot] closed pull request #35363: [SPARK-38066][SQL] evaluateEquality should ignore attribute without min/max ColumnStat URL: https://github.com/apache/spark/pull/35363 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] huaxingao commented on a diff in pull request #36773: [SPARK-39385][SQL] Translate linear regression aggregate functions for pushdown

huaxingao commented on code in PR #36773:

URL: https://github.com/apache/spark/pull/36773#discussion_r890658489

##

sql/core/src/main/scala/org/apache/spark/sql/jdbc/H2Dialect.scala:

##

@@ -72,6 +72,26 @@ private[sql] object H2Dialect extends JdbcDialect {

assert(f.children().length == 2)

val distinct = if (f.isDistinct) "DISTINCT " else ""

Some(s"CORR($distinct${f.children().head}, ${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_INTERCEPT" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_INTERCEPT($distinct${f.children().head},

${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_R2" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_R2($distinct${f.children().head}, ${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_SLOPE" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_SLOPE($distinct${f.children().head},

${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_SXX" =>

Review Comment:

Do we ever hit this path? In `translateAggregate` you don't have `REGR_SXX`

and in the PR description it is said that ".. REGR_SXX and REGR_SXY are

replaced to other expression in runtime"? Actually `REGR_SXY` is not converted

to other expression in runtime, right?

##

sql/core/src/main/scala/org/apache/spark/sql/jdbc/H2Dialect.scala:

##

@@ -72,6 +72,26 @@ private[sql] object H2Dialect extends JdbcDialect {

assert(f.children().length == 2)

val distinct = if (f.isDistinct) "DISTINCT " else ""

Some(s"CORR($distinct${f.children().head}, ${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_INTERCEPT" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_INTERCEPT($distinct${f.children().head},

${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_R2" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_R2($distinct${f.children().head}, ${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_SLOPE" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_SLOPE($distinct${f.children().head},

${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_SXX" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

+ Some(s"REGR_SXX($distinct${f.children().head},

${f.children().last})")

+case f: GeneralAggregateFunc if f.name() == "REGR_SXY" =>

+ assert(f.children().length == 2)

+ val distinct = if (f.isDistinct) "DISTINCT " else ""

Review Comment:

Can we test `DISTINCT` too?

##

sql/core/src/test/scala/org/apache/spark/sql/jdbc/JDBCV2Suite.scala:

##

@@ -,6 +,28 @@ class JDBCV2Suite extends QueryTest with

SharedSparkSession with ExplainSuiteHel

checkAnswer(df, Seq(Row(1d), Row(1d), Row(null)))

}

+ test("scan with aggregate push-down: linear regression functions with filter

and group by") {

+val df = sql(

+ """

+|SELECT

+| REGR_INTERCEPT(bonus, bonus),

+| REGR_R2(bonus, bonus),

+| REGR_SLOPE(bonus, bonus),

+| REGR_SXY(bonus, bonus)

+|FROM h2.test.employee where dept > 0 group by DePt""".stripMargin)

Review Comment:

nit: capitalize the sql keywords `where` and `group by`?

I just noticed that not all the sql keywords in this test suite are

capitalized. Probably open a separate PR to fix them all?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] vli-databricks commented on a diff in pull request #36780: [SPARK-39392][SQL] Refine ANSI error messages for try_* function hints

vli-databricks commented on code in PR #36780:

URL: https://github.com/apache/spark/pull/36780#discussion_r890657167

##

core/src/main/resources/error/error-classes.json:

##

@@ -195,7 +195,7 @@

},

"INVALID_ARRAY_INDEX_IN_ELEMENT_AT" : {

"message" : [

- "The index is out of bounds. The array has

elements. To return NULL instead, use `try_element_at`. If necessary set

to \"false\" to bypass this error."

+ "The index is out of bounds. The array has

elements. Use `try_element_at` to tolerate accessing element at non-existing

index and return NULL instead. If necessary set to \"false\" to bypass

this error."

Review Comment:

Yes, that is better. Fixed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] huaxingao commented on pull request #36781: [SPARK-39393][SQL] Parquet data source only supports push-down predicate filters for non-repeated primitive types

huaxingao commented on PR #36781: URL: https://github.com/apache/spark/pull/36781#issuecomment-1148022608 @Borjianamin98 Could you please add a test? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] mridulm commented on pull request #36734: [SPARK-38987][SHUFFLE] Throw FetchFailedException when merged shuffle blocks are corrupted and spark.shuffle.detectCorrupt is set to true

mridulm commented on PR #36734: URL: https://github.com/apache/spark/pull/36734#issuecomment-1147982565 Merged to master, thanks for working on this @akpatnam25 ! Thanks for the review @otterc :-) -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] mridulm closed pull request #36734: [SPARK-38987][SHUFFLE] Throw FetchFailedException when merged shuffle blocks are corrupted and spark.shuffle.detectCorrupt is set to true

mridulm closed pull request #36734: [SPARK-38987][SHUFFLE] Throw FetchFailedException when merged shuffle blocks are corrupted and spark.shuffle.detectCorrupt is set to true URL: https://github.com/apache/spark/pull/36734 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #36775: [SPARK-39389]Filesystem closed should not be considered as corrupt files

dongjoon-hyun commented on code in PR #36775:

URL: https://github.com/apache/spark/pull/36775#discussion_r890600656

##

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileScanRDD.scala:

##

@@ -253,6 +253,9 @@ class FileScanRDD(

// Throw FileNotFoundException even if `ignoreCorruptFiles`

is true

case e: FileNotFoundException if !ignoreMissingFiles =>

throw e

case e @ (_: RuntimeException | _: IOException) if

ignoreCorruptFiles =>

+if (e.getMessage.contains("Filesystem closed")) {

Review Comment:

+1 for @HyukjinKwon 's comment. We need a more robust way to detect,

@boneanxs .

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #36745: [SPARK-39359][SQL] Restrict DEFAULT columns to allowlist of supported data source types

dtenedor commented on code in PR #36745:

URL: https://github.com/apache/spark/pull/36745#discussion_r890579762

##

sql/core/src/main/scala/org/apache/spark/sql/catalyst/analysis/ResolveSessionCatalog.scala:

##

@@ -41,21 +41,29 @@ import org.apache.spark.sql.types.{MetadataBuilder,

StructField, StructType}

*

* We can remove this rule once we implement all the catalog functionality in

`V2SessionCatalog`.

*/

-class ResolveSessionCatalog(val catalogManager: CatalogManager)

+class ResolveSessionCatalog(val analyzer: Analyzer)

extends Rule[LogicalPlan] with LookupCatalog {

import org.apache.spark.sql.connector.catalog.CatalogV2Implicits._

import org.apache.spark.sql.connector.catalog.CatalogV2Util._

import org.apache.spark.sql.execution.datasources.v2.DataSourceV2Implicits._

+ override val catalogManager = analyzer.catalogManager

+

override def apply(plan: LogicalPlan): LogicalPlan = plan.resolveOperatorsUp

{

-case AddColumns(ResolvedV1TableIdentifier(ident), cols) =>

+case AddColumns(

+ ResolvedTable(catalog, ident, v1Table: V1Table, _), cols)

+if isSessionCatalog(catalog) =>

cols.foreach { c =>

assertTopLevelColumn(c.name, "AlterTableAddColumnsCommand")

if (!c.nullable) {

throw

QueryCompilationErrors.addColumnWithV1TableCannotSpecifyNotNullError

}

}

- AlterTableAddColumnsCommand(ident.asTableIdentifier,

cols.map(convertToStructField))

+ val prevSchema = StructType(cols.map(convertToStructField))

+ val newSchema: StructType =

+DefaultCols.constantFoldCurrentDefaultsToExistDefaults(

Review Comment:

Thanks for pointing this out, we don't need to duplicate it again. Updated.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dtenedor commented on a diff in pull request #36745: [SPARK-39359][SQL] Restrict DEFAULT columns to allowlist of supported data source types

dtenedor commented on code in PR #36745: URL: https://github.com/apache/spark/pull/36745#discussion_r890579364 ## sql/core/src/main/scala/org/apache/spark/sql/internal/BaseSessionStateBuilder.scala: ## @@ -186,7 +186,7 @@ abstract class BaseSessionStateBuilder( new ResolveSQLOnFile(session) +: new FallBackFileSourceV2(session) +: ResolveEncodersInScalaAgg +: -new ResolveSessionCatalog(catalogManager) +: +new ResolveSessionCatalog(this) +: Review Comment: Good point, this is not needed, we can revert this part. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on pull request #36772: [SPARK-39387][BUILD] Upgrade hive-storage-api to 2.7.3

dongjoon-hyun commented on PR #36772: URL: https://github.com/apache/spark/pull/36772#issuecomment-1147900459 Apache ORC community uses 2.8.1 based on `Panagiotis Garefalakis`'s comment (which I shared here) because he is the Hive committer and ORC PMC member. In Apache Spark community, what the Apache Spark community needs is actually a test coverage. I'm fine with both versions if both of them works in Spark. > Should I raise another PR to upgrade to 2.8.1? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] [spark] Borjianamin98 opened a new pull request, #36781: [SPARK-39393][SQL] Parquet data source only supports push-down predicate filters for non-repeated primitive types

Borjianamin98 opened a new pull request, #36781:

URL: https://github.com/apache/spark/pull/36781

### What changes were proposed in this pull request?

In Spark version 3.1.0 and newer, Spark creates extra filter predicate

conditions for repeated parquet columns.

These fields do not have the ability to have a filter predicate, according

to the [PARQUET-34](https://issues.apache.org/jira/browse/PARQUET-34) issue in

the parquet library.

This PR solves this problem until the appropriate functionality is provided

by the parquet.

Before this PR:

Assume follow Protocol buffer schema:

```

message Model {

string name = 1;

repeated string keywords = 2;

}

```

Suppose a parquet file is created from a set of records in the above format

with the help of the parquet-protobuf library.

Using Spark version 3.1.0 or newer, we get following exception when run the

following query using spark-shell:

```

val data = spark.read.parquet("/path/to/parquet")

data.registerTempTable("models")

spark.sql("select * from models where array_contains(keywords,

'X')").show(false)

```

```

Caused by: java.lang.IllegalArgumentException: FilterPredicates do not

currently support repeated columns. Column keywords is repeated.

at

org.apache.parquet.filter2.predicate.SchemaCompatibilityValidator.validateColumn(SchemaCompatibilityValidator.java:176)

at

org.apache.parquet.filter2.predicate.SchemaCompatibilityValidator.validateColumnFilterPredicate(SchemaCompatibilityValidator.java:149)

at

org.apache.parquet.filter2.predicate.SchemaCompatibilityValidator.visit(SchemaCompatibilityValidator.java:89)

at

org.apache.parquet.filter2.predicate.SchemaCompatibilityValidator.visit(SchemaCompatibilityValidator.java:56)

at

org.apache.parquet.filter2.predicate.Operators$NotEq.accept(Operators.java:192)

at

org.apache.parquet.filter2.predicate.SchemaCompatibilityValidator.validate(SchemaCompatibilityValidator.java:61)

at

org.apache.parquet.filter2.compat.RowGroupFilter.visit(RowGroupFilter.java:95)

at

org.apache.parquet.filter2.compat.RowGroupFilter.visit(RowGroupFilter.java:45)

at

org.apache.parquet.filter2.compat.FilterCompat$FilterPredicateCompat.accept(FilterCompat.java:149)

at

org.apache.parquet.filter2.compat.RowGroupFilter.filterRowGroups(RowGroupFilter.java:72)

at

org.apache.parquet.hadoop.ParquetFileReader.filterRowGroups(ParquetFileReader.java:870)

at

org.apache.parquet.hadoop.ParquetFileReader.(ParquetFileReader.java:789)

at

org.apache.parquet.hadoop.ParquetFileReader.open(ParquetFileReader.java:657)

at

org.apache.parquet.hadoop.ParquetRecordReader.initializeInternalReader(ParquetRecordReader.java:162)

at

org.apache.parquet.hadoop.ParquetRecordReader.initialize(ParquetRecordReader.java:140)

at

org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat.$anonfun$buildReaderWithPartitionValues$2(ParquetFileFormat.scala:373)

at

org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.org$apache$spark$sql$execution$datasources$FileScanRDD$$anon$$readCurrentFile(FileScanRDD.scala:127)

...

```

The cause of the problem is due to a change in the data filtering conditions:

```

spark.sql("select * from log where array_contains(keywords,

'X')").explain(true);

// Spark 3.0.2 and older

== Physical Plan ==

...

+- FileScan parquet [link#0,keywords#1]

DataFilters: [array_contains(keywords#1, Google)]

PushedFilters: []

...

// Spark 3.1.0 and newer

== Physical Plan == ...

+- FileScan parquet [link#0,keywords#1]

DataFilters: [isnotnull(keywords#1), array_contains(keywords#1, Google)]

PushedFilters: [IsNotNull(keywords)]

...

```

Pushing filters down for repeated columns of parquet is not necessary

because it is not supported by parquet library for now. So we can exclude them

from pushed predicate filters and solve issue.

### Why are the changes needed?

Predicate filters that are pushed down to parquet should not be created on

repeated-type fields.

### Does this PR introduce any user-facing change?

No, It's only fixed a bug and before this, due to the limitations of the

parquet library, no more work was possible.

### How was this patch tested?

Need no more tests and checked only by executing code base tests.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apac

[GitHub] [spark] gengliangwang commented on a diff in pull request #36745: [SPARK-39359][SQL] Restrict DEFAULT columns to allowlist of supported data source types

gengliangwang commented on code in PR #36745:

URL: https://github.com/apache/spark/pull/36745#discussion_r890519223

##

sql/core/src/main/scala/org/apache/spark/sql/catalyst/analysis/ResolveSessionCatalog.scala:

##

@@ -41,21 +41,29 @@ import org.apache.spark.sql.types.{MetadataBuilder,

StructField, StructType}

*

* We can remove this rule once we implement all the catalog functionality in

`V2SessionCatalog`.

*/

-class ResolveSessionCatalog(val catalogManager: CatalogManager)

+class ResolveSessionCatalog(val analyzer: Analyzer)

extends Rule[LogicalPlan] with LookupCatalog {

import org.apache.spark.sql.connector.catalog.CatalogV2Implicits._

import org.apache.spark.sql.connector.catalog.CatalogV2Util._

import org.apache.spark.sql.execution.datasources.v2.DataSourceV2Implicits._

+ override val catalogManager = analyzer.catalogManager

+

override def apply(plan: LogicalPlan): LogicalPlan = plan.resolveOperatorsUp

{

-case AddColumns(ResolvedV1TableIdentifier(ident), cols) =>

+case AddColumns(

+ ResolvedTable(catalog, ident, v1Table: V1Table, _), cols)

+if isSessionCatalog(catalog) =>

cols.foreach { c =>

assertTopLevelColumn(c.name, "AlterTableAddColumnsCommand")

if (!c.nullable) {

throw

QueryCompilationErrors.addColumnWithV1TableCannotSpecifyNotNullError

}

}

- AlterTableAddColumnsCommand(ident.asTableIdentifier,

cols.map(convertToStructField))

+ val prevSchema = StructType(cols.map(convertToStructField))

+ val newSchema: StructType =

+DefaultCols.constantFoldCurrentDefaultsToExistDefaults(

Review Comment:

`constantFoldCurrentDefaultsToExistDefaults` is called in

`AlterTableAddColumnsCommand` too. Seems duplicated here?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For queries about this service, please contact Infrastructure at: