[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r191685596 --- Diff: python/pyspark/sql/dataframe.py --- @@ -351,8 +352,62 @@ def show(self, n=20, truncate=True, vertical=False): else: print(self._jdf.showString(n, int(truncate), vertical)) +def _get_repl_config(self): +"""Return the configs for eager evaluation each time when __repr__ or +_repr_html_ called by user or notebook. +""" +eager_eval = self.sql_ctx.getConf( +"spark.sql.repl.eagerEval.enabled", "false").lower() == "true" +console_row = int(self.sql_ctx.getConf( +"spark.sql.repl.eagerEval.maxNumRows", u"20")) +console_truncate = int(self.sql_ctx.getConf( +"spark.sql.repl.eagerEval.truncate", u"20")) +return (eager_eval, console_row, console_truncate) --- End diff -- OK. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21370 @viirya @gatorsmile @ueshin @felixcheung @HyukjinKwon The refactor about generating html code out of `Dataset.scala` was done in 94f3414. Please help to check whether it is appropriate when you have time. Thanks! @rdblue @rxin The lastest commit also include the logic of using `spark.sql.repl.eagerEval.enabled` both control \_\_repr\_\_ and \_repr\_html\_. Please have a look when you have time. Thanks! --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21445: [SPARK-24404][SS] Increase currentEpoch when meet a Epoc...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21445 ``` Looks like the patch is needed only with #21353 #21332 #21293 as of now, right? ``` @HeartSaVioR Yes, sorry for the late explanation. The background is we are running POC based on #21353 #21332 #21293 and the latest master, including the work of queue rdd reader/writer by @jose-torres. Greatly thanks for the work of #21239, we can complete all status operation after fix this bug. So we think we should report this to let you know. ``` Please note that I'm commenting on top of current implementation, not considering #21353 #21332 #21293. ``` Got it, owing to some pressure within internal requirement for CP, we running over these 3 patches, but we'll follow closely with all your works and hope to contribute into CP. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21385: [SPARK-24234][SS] Support multiple row writers in...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21385#discussion_r191149214

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/continuous/shuffle/UnsafeRowReceiver.scala

---

@@ -41,11 +50,15 @@ private[shuffle] case class ReceiverEpochMarker()

extends UnsafeRowReceiverMessa

*/

private[shuffle] class UnsafeRowReceiver(

queueSize: Int,

+ numShuffleWriters: Int,

+ checkpointIntervalMs: Long,

override val rpcEnv: RpcEnv)

extends ThreadSafeRpcEndpoint with ContinuousShuffleReader with

Logging {

// Note that this queue will be drained from the main task thread and

populated in the RPC

// response thread.

- private val queue = new

ArrayBlockingQueue[UnsafeRowReceiverMessage](queueSize)

+ private val queues = Array.fill(numShuffleWriters) {

--- End diff --

Hi TD, just a question here, what's the 'a non-RPC-endpoint-based transfer

mechanism' refers for?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r191080316 --- Diff: docs/configuration.md --- @@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful from JVM to Python worker for every task. + + spark.sql.repl.eagerEval.enabled + false + +Enable eager evaluation or not. If true and repl you're using supports eager evaluation, +dataframe will be ran automatically and html table will feedback the queries user have defined +(see https://issues.apache.org/jira/browse/SPARK-24215;>SPARK-24215 for more details). + + + + spark.sql.repl.eagerEval.showRows + 20 + +Default number of rows in HTML table. + + + + spark.sql.repl.eagerEval.truncate --- End diff -- Yep, I just want to keep the same behavior of `dataframe.show`. ``` That's useful for console output, but not so much for notebooks. ``` Notebooks aren't afraid for too many chaacters within a cell, so I just delete this? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r191080194 --- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala --- @@ -237,9 +238,13 @@ class Dataset[T] private[sql]( * @param truncate If set to more than 0, truncates strings to `truncate` characters and * all cells will be aligned right. * @param vertical If set to true, prints output rows vertically (one line per column value). + * @param html If set to true, return output as html table. --- End diff -- @viirya @gatorsmile @rdblue Sorry for the late commit, the refactor do in 94f3414. I spend some time on testing and implementing the transformation of rows between python and scala. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r191080082

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -358,6 +357,43 @@ class Dataset[T] private[sql](

sb.toString()

}

+ /**

+ * Transform current row string and append to builder

+ *

+ * @param row Current row of string

+ * @param truncate If set to more than 0, truncates strings to

`truncate` characters and

+ *all cells will be aligned right.

+ * @param colWidths The width of each column

+ * @param html If set to true, return output as html table.

+ * @param head Set to true while current row is table head.

+ * @param sbStringBuilder for current row.

+ */

+ private[sql] def appendRowString(

+ row: Seq[String],

+ truncate: Int,

+ colWidths: Array[Int],

+ html: Boolean,

+ head: Boolean,

+ sb: StringBuilder): Unit = {

+val data = row.zipWithIndex.map { case (cell, i) =>

+ if (truncate > 0) {

+StringUtils.leftPad(cell, colWidths(i))

+ } else {

+StringUtils.rightPad(cell, colWidths(i))

+ }

+}

+(html, head) match {

+ case (true, true) =>

+data.map(StringEscapeUtils.escapeHtml).addString(

+ sb, "", "\n", "\n")

--- End diff --

I change the format in python \_repr\_html\_ in 94f3414.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r191080049 --- Diff: python/pyspark/sql/dataframe.py --- @@ -347,13 +347,30 @@ def show(self, n=20, truncate=True, vertical=False): name | Bob """ if isinstance(truncate, bool) and truncate: -print(self._jdf.showString(n, 20, vertical)) +print(self._jdf.showString(n, 20, vertical, False)) else: -print(self._jdf.showString(n, int(truncate), vertical)) +print(self._jdf.showString(n, int(truncate), vertical, False)) --- End diff -- Fix in 94f3414. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r191080066

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -347,13 +347,30 @@ def show(self, n=20, truncate=True, vertical=False):

name | Bob

"""

if isinstance(truncate, bool) and truncate:

-print(self._jdf.showString(n, 20, vertical))

+print(self._jdf.showString(n, 20, vertical, False))

else:

-print(self._jdf.showString(n, int(truncate), vertical))

+print(self._jdf.showString(n, int(truncate), vertical, False))

def __repr__(self):

return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in

self.dtypes))

+def _repr_html_(self):

+"""Returns a dataframe with html code when you enabled eager

evaluation

+by 'spark.sql.repl.eagerEval.enabled', this only called by repr

you're

--- End diff --

Thanks, change to REPL in 94f3414.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r191080057

--- Diff: python/pyspark/sql/tests.py ---

@@ -3040,6 +3040,50 @@ def test_csv_sampling_ratio(self):

.csv(rdd, samplingRatio=0.5).schema

self.assertEquals(schema, StructType([StructField("_c0",

IntegerType(), True)]))

+def _get_content(self, content):

+"""

+Strips leading spaces from content up to the first '|' in each

line.

+"""

+import re

+pattern = re.compile(r'^ *\|', re.MULTILINE)

--- End diff --

Thanks! Fix it in 94f3414.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r191080044 --- Diff: python/pyspark/sql/dataframe.py --- @@ -347,13 +347,30 @@ def show(self, n=20, truncate=True, vertical=False): name | Bob """ if isinstance(truncate, bool) and truncate: -print(self._jdf.showString(n, 20, vertical)) +print(self._jdf.showString(n, 20, vertical, False)) --- End diff -- Thanks, fix in 94f3414. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r191080037

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -347,13 +347,30 @@ def show(self, n=20, truncate=True, vertical=False):

name | Bob

"""

if isinstance(truncate, bool) and truncate:

-print(self._jdf.showString(n, 20, vertical))

+print(self._jdf.showString(n, 20, vertical, False))

else:

-print(self._jdf.showString(n, int(truncate), vertical))

+print(self._jdf.showString(n, int(truncate), vertical, False))

def __repr__(self):

return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in

self.dtypes))

--- End diff --

Thanks for your reply, this implement in 94f3414.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r191080026 --- Diff: docs/configuration.md --- @@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful from JVM to Python worker for every task. + + spark.sql.repl.eagerEval.enabled + false + +Enable eager evaluation or not. If true and repl you're using supports eager evaluation, +dataframe will be ran automatically and html table will feedback the queries user have defined +(see https://issues.apache.org/jira/browse/SPARK-24215;>SPARK-24215 for more details). + + + + spark.sql.repl.eagerEval.showRows --- End diff -- Thanks, change it in 94f3414. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21370 ``` Can we also do something a bit more generic that works for non-Jupyter notebooks as well? ``` Can we accept `spark.sql.repl.eagerEval.enabled` to control both \_\_repr\_\_ and \_repr\_html\_ ? The behavior like below: 1. If not support _repr_html_ and open eagerEval.enable, just call something like `show` and trigger `take` inside. 2. If support _repr_html_, use the html output. (Here need a small trick, we should add a var in python dataframe to check whether _repr_html_ called or not, otherwise in this mode _repr_html and __repr__ will both call showString). I test offline an it can work both python shell and Jupyter, if we agree this way, I'll add this support in next commit together will the refactor of showString in scala Dataset.   --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r190244648

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -358,6 +357,43 @@ class Dataset[T] private[sql](

sb.toString()

}

+ /**

+ * Transform current row string and append to builder

+ *

+ * @param row Current row of string

+ * @param truncate If set to more than 0, truncates strings to

`truncate` characters and

+ *all cells will be aligned right.

+ * @param colWidths The width of each column

+ * @param html If set to true, return output as html table.

+ * @param head Set to true while current row is table head.

+ * @param sbStringBuilder for current row.

+ */

+ private[sql] def appendRowString(

+ row: Seq[String],

+ truncate: Int,

+ colWidths: Array[Int],

+ html: Boolean,

+ head: Boolean,

+ sb: StringBuilder): Unit = {

+val data = row.zipWithIndex.map { case (cell, i) =>

+ if (truncate > 0) {

+StringUtils.leftPad(cell, colWidths(i))

+ } else {

+StringUtils.rightPad(cell, colWidths(i))

+ }

+}

+(html, head) match {

+ case (true, true) =>

+data.map(StringEscapeUtils.escapeHtml).addString(

+ sb, "", "\n", "\n")

--- End diff --

Got it, I'll change it.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r190154145 --- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala --- @@ -237,9 +238,13 @@ class Dataset[T] private[sql]( * @param truncate If set to more than 0, truncates strings to `truncate` characters and * all cells will be aligned right. * @param vertical If set to true, prints output rows vertically (one line per column value). + * @param html If set to true, return output as html table. --- End diff -- Thanks for guidance, I will do this in next commit. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r190154231

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -358,6 +357,43 @@ class Dataset[T] private[sql](

sb.toString()

}

+ /**

+ * Transform current row string and append to builder

+ *

+ * @param row Current row of string

+ * @param truncate If set to more than 0, truncates strings to

`truncate` characters and

+ *all cells will be aligned right.

+ * @param colWidths The width of each column

+ * @param html If set to true, return output as html table.

+ * @param head Set to true while current row is table head.

+ * @param sbStringBuilder for current row.

+ */

+ private[sql] def appendRowString(

+ row: Seq[String],

+ truncate: Int,

+ colWidths: Array[Int],

+ html: Boolean,

+ head: Boolean,

+ sb: StringBuilder): Unit = {

+val data = row.zipWithIndex.map { case (cell, i) =>

+ if (truncate > 0) {

+StringUtils.leftPad(cell, colWidths(i))

+ } else {

+StringUtils.rightPad(cell, colWidths(i))

+ }

+}

+(html, head) match {

+ case (true, true) =>

+data.map(StringEscapeUtils.escapeHtml).addString(

+ sb, "", "", "")

--- End diff --

Thanks, done. feb5f4a.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r190153907 --- Diff: docs/configuration.md --- @@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful from JVM to Python worker for every task. + + spark.jupyter.eagerEval.enabled --- End diff -- Thanks, done. feb5f4a. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r190153833

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -347,13 +347,26 @@ def show(self, n=20, truncate=True, vertical=False):

name | Bob

"""

if isinstance(truncate, bool) and truncate:

-print(self._jdf.showString(n, 20, vertical))

+print(self._jdf.showString(n, 20, vertical, False))

else:

-print(self._jdf.showString(n, int(truncate), vertical))

+print(self._jdf.showString(n, int(truncate), vertical, False))

def __repr__(self):

return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in

self.dtypes))

+def _repr_html_(self):

--- End diff --

Thanks, done. feb5f4a.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r190153812

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -347,13 +347,26 @@ def show(self, n=20, truncate=True, vertical=False):

name | Bob

"""

if isinstance(truncate, bool) and truncate:

-print(self._jdf.showString(n, 20, vertical))

+print(self._jdf.showString(n, 20, vertical, False))

else:

-print(self._jdf.showString(n, int(truncate), vertical))

+print(self._jdf.showString(n, int(truncate), vertical, False))

def __repr__(self):

return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in

self.dtypes))

+def _repr_html_(self):

--- End diff --

Thanks, done. feb5f4a.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189614136 --- Diff: docs/configuration.md --- @@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful from JVM to Python worker for every task. + + spark.jupyter.eagerEval.enabled --- End diff -- Got it, fix it in next commit. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189614067

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -347,13 +347,26 @@ def show(self, n=20, truncate=True, vertical=False):

name | Bob

"""

if isinstance(truncate, bool) and truncate:

-print(self._jdf.showString(n, 20, vertical))

+print(self._jdf.showString(n, 20, vertical, False))

else:

-print(self._jdf.showString(n, int(truncate), vertical))

+print(self._jdf.showString(n, int(truncate), vertical, False))

def __repr__(self):

return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in

self.dtypes))

+def _repr_html_(self):

--- End diff --

No problem, I'll added in `SQLTests` in next commit.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189613358

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -292,31 +297,25 @@ class Dataset[T] private[sql](

}

// Create SeparateLine

- val sep: String = colWidths.map("-" * _).addString(sb, "+", "+",

"+\n").toString()

+ val sep: String = if (html) {

+// Initial append table label

+sb.append("\n")

+"\n"

+ } else {

+colWidths.map("-" * _).addString(sb, "+", "+", "+\n").toString()

+ }

// column names

- rows.head.zipWithIndex.map { case (cell, i) =>

-if (truncate > 0) {

- StringUtils.leftPad(cell, colWidths(i))

-} else {

- StringUtils.rightPad(cell, colWidths(i))

-}

- }.addString(sb, "|", "|", "|\n")

-

+ appendRowString(rows.head, truncate, colWidths, html, true, sb)

sb.append(sep)

// data

- rows.tail.foreach {

-_.zipWithIndex.map { case (cell, i) =>

- if (truncate > 0) {

-StringUtils.leftPad(cell.toString, colWidths(i))

- } else {

-StringUtils.rightPad(cell.toString, colWidths(i))

- }

-}.addString(sb, "|", "|", "|\n")

+ rows.tail.foreach { row =>

+appendRowString(row.map(_.toString), truncate, colWidths, html,

false, sb)

--- End diff --

I see, the `cell.toString` has been called here.

https://github.com/apache/spark/pull/21370/files/f2bb8f334631734869ddf5d8ef1eca1fa29d334a#diff-7a46f10c3cedbf013cf255564d9483cdR271

Got it, I'll fix this in next commit.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189611792

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -358,6 +357,43 @@ class Dataset[T] private[sql](

sb.toString()

}

+ /**

+ * Transform current row string and append to builder

+ *

+ * @param row Current row of string

+ * @param truncate If set to more than 0, truncates strings to

`truncate` characters and

+ *all cells will be aligned right.

+ * @param colWidths The width of each column

+ * @param html If set to true, return output as html table.

+ * @param head Set to true while current row is table head.

+ * @param sbStringBuilder for current row.

+ */

+ private[sql] def appendRowString(

+ row: Seq[String],

+ truncate: Int,

+ colWidths: Array[Int],

+ html: Boolean,

+ head: Boolean,

+ sb: StringBuilder): Unit = {

+val data = row.zipWithIndex.map { case (cell, i) =>

+ if (truncate > 0) {

+StringUtils.leftPad(cell, colWidths(i))

+ } else {

+StringUtils.rightPad(cell, colWidths(i))

+ }

+}

+(html, head) match {

+ case (true, true) =>

+data.map(StringEscapeUtils.escapeHtml).addString(

+ sb, "", "", "")

--- End diff --

Ah, I understand your consideration. I'll add this in next commit.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189603851

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -347,13 +347,26 @@ def show(self, n=20, truncate=True, vertical=False):

name | Bob

"""

if isinstance(truncate, bool) and truncate:

-print(self._jdf.showString(n, 20, vertical))

+print(self._jdf.showString(n, 20, vertical, False))

else:

-print(self._jdf.showString(n, int(truncate), vertical))

+print(self._jdf.showString(n, int(truncate), vertical, False))

def __repr__(self):

return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in

self.dtypes))

+def _repr_html_(self):

--- End diff --

No problem, is the SQLTests in pyspark/sql/tests.py the right place?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21370 Thanks all reviewer's comments, I address all comments in this commit. Please have a look. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189574938 --- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala --- @@ -237,9 +238,13 @@ class Dataset[T] private[sql]( * @param truncate If set to more than 0, truncates strings to `truncate` characters and * all cells will be aligned right. * @param vertical If set to true, prints output rows vertically (one line per column value). + * @param html If set to true, return output as html table. --- End diff -- We can do this in python side, I implement it in scala side mainly consider to reuse the code and logic of `show()`, maybe it's more natural in `show df as html` call showString. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189570764

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -358,6 +357,43 @@ class Dataset[T] private[sql](

sb.toString()

}

+ /**

+ * Transform current row string and append to builder

+ *

+ * @param row Current row of string

+ * @param truncate If set to more than 0, truncates strings to

`truncate` characters and

+ *all cells will be aligned right.

+ * @param colWidths The width of each column

+ * @param html If set to true, return output as html table.

+ * @param head Set to true while current row is table head.

+ * @param sbStringBuilder for current row.

+ */

+ private[sql] def appendRowString(

+ row: Seq[String],

+ truncate: Int,

+ colWidths: Array[Int],

+ html: Boolean,

+ head: Boolean,

+ sb: StringBuilder): Unit = {

+val data = row.zipWithIndex.map { case (cell, i) =>

+ if (truncate > 0) {

+StringUtils.leftPad(cell, colWidths(i))

+ } else {

+StringUtils.rightPad(cell, colWidths(i))

+ }

+}

+(html, head) match {

+ case (true, true) =>

+data.map(StringEscapeUtils.escapeHtml).addString(

+ sb, "", "", "")

--- End diff --

the "\n" added in

seperatedLine:https://github.com/apache/spark/pull/21370/files#diff-7a46f10c3cedbf013cf255564d9483cdR300

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189570479

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -292,31 +297,25 @@ class Dataset[T] private[sql](

}

// Create SeparateLine

- val sep: String = colWidths.map("-" * _).addString(sb, "+", "+",

"+\n").toString()

+ val sep: String = if (html) {

+// Initial append table label

+sb.append("\n")

+"\n"

+ } else {

+colWidths.map("-" * _).addString(sb, "+", "+", "+\n").toString()

+ }

// column names

- rows.head.zipWithIndex.map { case (cell, i) =>

-if (truncate > 0) {

- StringUtils.leftPad(cell, colWidths(i))

-} else {

- StringUtils.rightPad(cell, colWidths(i))

-}

- }.addString(sb, "|", "|", "|\n")

-

+ appendRowString(rows.head, truncate, colWidths, html, true, sb)

sb.append(sep)

// data

- rows.tail.foreach {

-_.zipWithIndex.map { case (cell, i) =>

- if (truncate > 0) {

-StringUtils.leftPad(cell.toString, colWidths(i))

- } else {

-StringUtils.rightPad(cell.toString, colWidths(i))

- }

-}.addString(sb, "|", "|", "|\n")

+ rows.tail.foreach { row =>

+appendRowString(row.map(_.toString), truncate, colWidths, html,

false, sb)

--- End diff --

I think we need this toString, because the appendRowString method including

both column names and data in original logic, which call `toString` in data

part.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189569952 --- Diff: python/pyspark/sql/dataframe.py --- @@ -78,6 +78,12 @@ def __init__(self, jdf, sql_ctx): self.is_cached = False self._schema = None # initialized lazily self._lazy_rdd = None +self._eager_eval = sql_ctx.getConf( +"spark.jupyter.eagerEval.enabled", "false").lower() == "true" +self._default_console_row = int(sql_ctx.getConf( +"spark.jupyter.default.showRows", u"20")) +self._default_console_truncate = int(sql_ctx.getConf( +"spark.jupyter.default.showRows", u"20")) --- End diff -- Yep, I'll fix it in next commit.  --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189569437

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -347,13 +353,18 @@ def show(self, n=20, truncate=True, vertical=False):

name | Bob

"""

if isinstance(truncate, bool) and truncate:

-print(self._jdf.showString(n, 20, vertical))

+print(self._jdf.showString(n, 20, vertical, False))

else:

-print(self._jdf.showString(n, int(truncate), vertical))

+print(self._jdf.showString(n, int(truncate), vertical, False))

def __repr__(self):

return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in

self.dtypes))

+def _repr_html_(self):

+if self._eager_eval:

+return self._jdf.showString(

+self._default_console_row, self._default_console_truncate,

False, True)

--- End diff --

```

What will be shown if spark.jupyter.eagerEval.enabled is False? Fallback

the original automatically?

```

Yes, it will fallback to call __repr__.

```

We need to return None if self._eager_eval is False.

```

Got it, more clear in code logic.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189567614 --- Diff: python/pyspark/sql/dataframe.py --- @@ -78,6 +78,12 @@ def __init__(self, jdf, sql_ctx): self.is_cached = False self._schema = None # initialized lazily self._lazy_rdd = None +self._eager_eval = sql_ctx.getConf( +"spark.jupyter.eagerEval.enabled", "false").lower() == "true" +self._default_console_row = int(sql_ctx.getConf( +"spark.jupyter.default.showRows", u"20")) +self._default_console_truncate = int(sql_ctx.getConf( +"spark.jupyter.default.showRows", u"20")) --- End diff -- My bad, sorry for this. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189567350 --- Diff: docs/configuration.md --- @@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful from JVM to Python worker for every task. + + spark.jupyter.eagerEval.enabled + false + +Open eager evaluation on jupyter or not. If yes, dataframe will be ran automatically +and html table will feedback the queries user have defined (see +https://issues.apache.org/jira/browse/SPARK-24215;>SPARK-24215 for more details). + + + + spark.jupyter.default.showRows + 20 + +Default number of rows in jupyter html table. + + + + spark.jupyter.default.truncate --- End diff -- Yep, change to spark.jupyter.eagerEval.truncate --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189567315 --- Diff: docs/configuration.md --- @@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful from JVM to Python worker for every task. + + spark.jupyter.eagerEval.enabled + false + +Open eager evaluation on jupyter or not. If yes, dataframe will be ran automatically +and html table will feedback the queries user have defined (see +https://issues.apache.org/jira/browse/SPARK-24215;>SPARK-24215 for more details). + + + + spark.jupyter.default.showRows --- End diff -- change to spark.jupyter.eagerEval.showRows,thanks --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189567259 --- Diff: docs/configuration.md --- @@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful from JVM to Python worker for every task. + + spark.jupyter.eagerEval.enabled + false + +Open eager evaluation on jupyter or not. If yes, dataframe will be ran automatically --- End diff -- Got it, thanks. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189483903 --- Diff: docs/configuration.md --- @@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful from JVM to Python worker for every task. + + spark.jupyter.eagerEval.enabled + false + +Open eager evaluation on jupyter or not. If yes, dataframe will be ran automatically +and html table will feedback the queries user have defined (see --- End diff -- Got it. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189483894 --- Diff: docs/configuration.md --- @@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful from JVM to Python worker for every task. + + spark.jupyter.eagerEval.enabled + false + +Open eager evaluation on jupyter or not. If yes, dataframe will be ran automatically --- End diff -- Copy. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21370 ``` this will need to escape the values to make sure it is legal html too right? ``` Yes you're right, thanks for your guidance, the new patch consider the escape and add new UT. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189463652

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -237,9 +236,13 @@ class Dataset[T] private[sql](

* @param truncate If set to more than 0, truncates strings to

`truncate` characters and

* all cells will be aligned right.

* @param vertical If set to true, prints output rows vertically (one

line per column value).

+ * @param html If set to true, return output as html table.

*/

private[sql] def showString(

- _numRows: Int, truncate: Int = 20, vertical: Boolean = false):

String = {

+ _numRows: Int,

+ truncate: Int = 20,

--- End diff --

Yes, the truncated string will be showed in table row and controlled by

`spark.jupyter.default.truncate`

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189463098 --- Diff: python/pyspark/sql/dataframe.py --- @@ -78,6 +78,12 @@ def __init__(self, jdf, sql_ctx): self.is_cached = False self._schema = None # initialized lazily self._lazy_rdd = None +self._eager_eval = sql_ctx.getConf( +"spark.jupyter.eagerEval.enabled", "false").lower() == "true" --- End diff -- Got it. Do it in next commit. Thanks for reminding. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21370#discussion_r189463079 --- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala --- @@ -3056,7 +3059,6 @@ class Dataset[T] private[sql]( * view, e.g. `SELECT * FROM global_temp.view1`. * * @throws AnalysisException if the view name is invalid or already exists - * --- End diff -- Sorry for this, I'll revert the IDE changes. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21370 Not sure who is the right reviewer, maybe @rdblue @gatorsmile ? Could you help me check whether it is the right implementation for the discussion in the dev list? --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

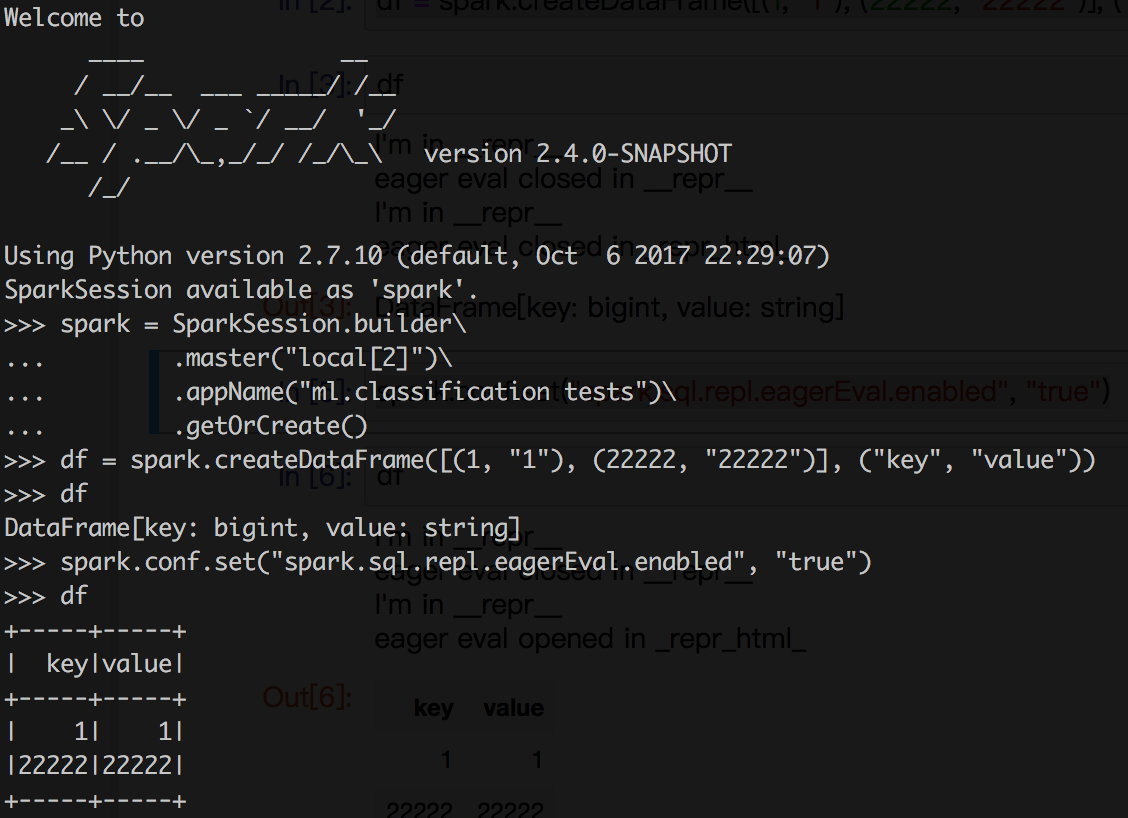

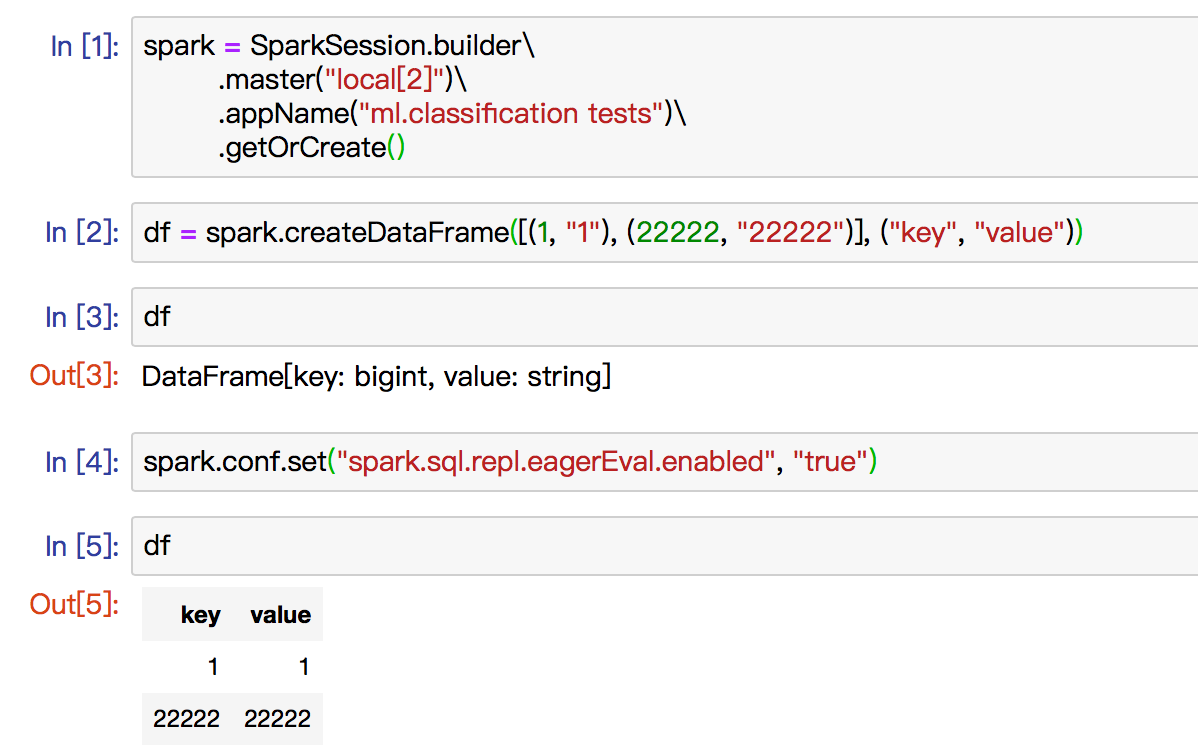

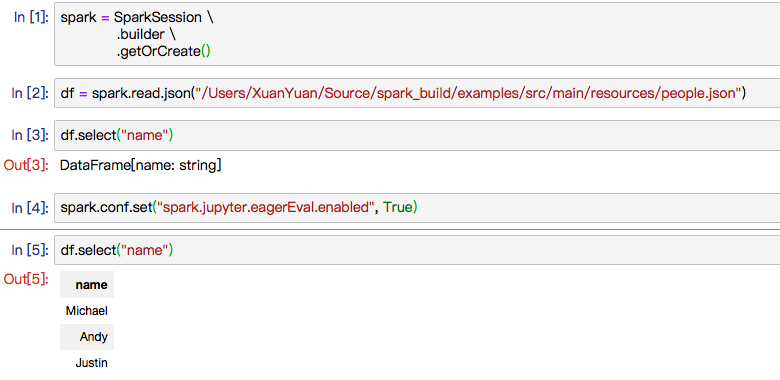

GitHub user xuanyuanking opened a pull request: https://github.com/apache/spark/pull/21370 [SPARK-24215][PySpark] Implement _repr_html_ for dataframes in PySpark ## What changes were proposed in this pull request? Implement _repr_html_ for PySpark while in notebook and add config named "spark.jupyter.eagerEval.enabled" to control this. The dev list thread for context: http://apache-spark-developers-list.1001551.n3.nabble.com/eager-execution-and-debuggability-td23928.html ## How was this patch tested? New ut in DataFrameSuite and manual test in jupyter. Some screenshot below:   You can merge this pull request into a Git repository by running: $ git pull https://github.com/xuanyuanking/spark SPARK-24215 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/21370.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #21370 commit ebc0b11fd006386d32949f56228e2671297373fc Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-19T11:56:02Z SPARK-24215: Implement __repr__ and _repr_html_ for dataframes in PySpark --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21353: [SPARK-24036][SS] Scheduler changes for continuou...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21353#discussion_r188975680 --- Diff: core/src/main/scala/org/apache/spark/scheduler/ContinuousShuffleMapTask.scala --- @@ -0,0 +1,139 @@ +/* + * Licensed to the Apache Software Foundation (ASF) under one or more + * contributor license agreements. See the NOTICE file distributed with + * this work for additional information regarding copyright ownership. + * The ASF licenses this file to You under the Apache License, Version 2.0 + * (the "License"); you may not use this file except in compliance with + * the License. You may obtain a copy of the License at + * + *http://www.apache.org/licenses/LICENSE-2.0 + * + * Unless required by applicable law or agreed to in writing, software + * distributed under the License is distributed on an "AS IS" BASIS, + * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. + * See the License for the specific language governing permissions and + * limitations under the License. + */ + +package org.apache.spark.scheduler + +import java.lang.management.ManagementFactory +import java.nio.ByteBuffer +import java.util.Properties + +import scala.language.existentials + +import org.apache.spark._ +import org.apache.spark.broadcast.Broadcast +import org.apache.spark.internal.Logging +import org.apache.spark.rdd.RDD +import org.apache.spark.shuffle.ShuffleWriter + +/** + * A ShuffleMapTask divides the elements of an RDD into multiple buckets (based on a partitioner + * specified in the ShuffleDependency). + * + * See [[org.apache.spark.scheduler.Task]] for more information. + * + * @param stageId id of the stage this task belongs to + * @param stageAttemptId attempt id of the stage this task belongs to + * @param taskBinary broadcast version of the RDD and the ShuffleDependency. Once deserialized, + * the type should be (RDD[_], ShuffleDependency[_, _, _]). + * @param partition partition of the RDD this task is associated with + * @param locs preferred task execution locations for locality scheduling + * @param localProperties copy of thread-local properties set by the user on the driver side. + * @param serializedTaskMetrics a `TaskMetrics` that is created and serialized on the driver side + * and sent to executor side. + * @param totalShuffleNum total shuffle number for current job. + * + * The parameters below are optional: + * @param jobId id of the job this task belongs to + * @param appId id of the app this task belongs to + * @param appAttemptId attempt id of the app this task belongs to + */ +private[spark] class ContinuousShuffleMapTask( --- End diff -- Implementation about ContinuousShuffleMapTask same with #21293 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21353: [SPARK-24036][SS] Scheduler changes for continuou...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21353#discussion_r188974718

--- Diff: core/src/main/scala/org/apache/spark/SparkEnv.scala ---

@@ -140,6 +140,7 @@ object SparkEnv extends Logging {

private[spark] val driverSystemName = "sparkDriver"

private[spark] val executorSystemName = "sparkExecutor"

+ private[spark] val START_EPOCH_KEY = "__continuous_start_epoch"

--- End diff --

Changes about SparkEnv same in #21293

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21353: [SPARK-24036][SS] Scheduler changes for continuou...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21353#discussion_r188974568 --- Diff: core/src/main/scala/org/apache/spark/MapOutputTracker.scala --- @@ -213,6 +213,12 @@ private[spark] sealed trait MapOutputTrackerMessage private[spark] case class GetMapOutputStatuses(shuffleId: Int) extends MapOutputTrackerMessage private[spark] case object StopMapOutputTracker extends MapOutputTrackerMessage +private[spark] case class CheckNoMissingPartitions(shuffleId: Int) --- End diff -- Changes about MapOutputTracker same in #21293 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21353: [SPARK-24036][SS] Scheduler changes for continuou...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21353#discussion_r188974319

--- Diff: core/src/main/scala/org/apache/spark/Dependency.scala ---

@@ -88,14 +96,53 @@ class ShuffleDependency[K: ClassTag, V: ClassTag, C:

ClassTag](

private[spark] val combinerClassName: Option[String] =

Option(reflect.classTag[C]).map(_.runtimeClass.getName)

- val shuffleId: Int = _rdd.context.newShuffleId()

+ val shuffleId: Int = if (isContinuous) {

+// This will not be reset in continuous processing, set an invalid

value for now.

+Int.MinValue

+ } else {

+_rdd.context.newShuffleId()

+ }

- val shuffleHandle: ShuffleHandle =

_rdd.context.env.shuffleManager.registerShuffle(

-shuffleId, _rdd.partitions.length, this)

+ val shuffleHandle: ShuffleHandle = if (isContinuous) {

+null

+ } else {

+_rdd.context.env.shuffleManager.registerShuffle(

+ shuffleId, _rdd.partitions.length, this)

+ }

- _rdd.sparkContext.cleaner.foreach(_.registerShuffleForCleanup(this))

+ if (!isContinuous) {

+_rdd.sparkContext.cleaner.foreach(_.registerShuffleForCleanup(this))

+ }

}

+/**

+ * :: DeveloperApi ::

+ * Represents a dependency on the output of a shuffle stage of continuous

type.

+ * Different with ShuffleDependency, the continuous dependency only create

on Executor side,

+ * so the rdd in param is deserialized from taskBinary.

+ */

+@DeveloperApi

+class ContinuousShuffleDependency[K: ClassTag, V: ClassTag, C: ClassTag](

--- End diff --

Changes about ShuffleDependency same in #21293

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21353: [SPARK-24036][SS] Scheduler changes for continuou...

GitHub user xuanyuanking opened a pull request: https://github.com/apache/spark/pull/21353 [SPARK-24036][SS] Scheduler changes for continuous processing shuffle support ## What changes were proposed in this pull request? This is the last part of the preview PRs, the mainly change is in DAGScheduler, we added a interface to submit whole job at once. In order to work and compile properly, this also including several parts in #21332 and #21293. I will marked the duplicated part for convenient review. Sorry about this... ## How was this patch tested? Added new UT You can merge this pull request into a Git repository by running: $ git pull https://github.com/xuanyuanking/spark SPARK-24304 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/21353.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #21353 commit fbcc88bfb0d2fb6dbaae9664d6f0852b71e64f2b Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-10T09:18:16Z commit for continuous map output tracker commit 44ae9d917c354d780071a8e112a118674865143d Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-04T03:58:06Z INF-SPARK-1382: Continuous shuffle map task implementation and output trackder support commit af2d60854856e669f40a03b76fffe02dac7b79c2 Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-10T13:23:39Z Address comments commit 56442dc1c7450518d9bc84b4bfeddb017daa967b Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-11T12:12:29Z Fix mima test commit 2ac9980f30b8b50809aa780035281f6a62ad9573 Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-17T13:01:33Z add inteface to submit all stages of one job in DAGScheduler commit 0f070fc5b26a3e2d5c30fdd7d59c4dd8896255ac Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-17T13:05:29Z fix commit 42e2a63f68ba12264f001ed53ff33c27c1287de8 Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-17T13:36:32Z Merge SPARK-24304 --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21332: [SPARK-24236][SS] Continuous replacement for ShuffleExch...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21332 > As discussed in the other PR, I'm not sure about how we're integrating with the scheduler here, so I can't really give a more detailed review at this point. My bad, I'm preparing the part about integrating with scheduler first. That's the last part for our preview PR. I will marked the duplicated part of #21293 for convenient review. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21114: [SPARK-22371][CORE] Return None instead of throwing an e...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21114 retest this please --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21337: [SPARK-24234][SS] Reader for continuous processin...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21337#discussion_r188604001

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/continuous/shuffle/ContinuousShuffleReadRDD.scala

---

@@ -0,0 +1,64 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.streaming.continuous.shuffle

+

+import java.util.UUID

+

+import org.apache.spark.{Partition, SparkContext, SparkEnv, TaskContext}

+import org.apache.spark.rdd.RDD

+import org.apache.spark.sql.catalyst.expressions.UnsafeRow

+import org.apache.spark.util.NextIterator

+

+case class ContinuousShuffleReadPartition(index: Int) extends Partition {

+ // Initialized only on the executor, and only once even as we call

compute() multiple times.

+ lazy val (receiver, endpoint) = {

+val env = SparkEnv.get.rpcEnv

+val receiver = new UnsafeRowReceiver(env)

+val endpoint = env.setupEndpoint(UUID.randomUUID().toString, receiver)

+TaskContext.get().addTaskCompletionListener { ctx =>

+ env.stop(endpoint)

+}

+(receiver, endpoint)

+ }

+}

+

+/**

+ * RDD at the bottom of each continuous processing shuffle task, reading

from the

--- End diff --

"Bottom is a bit ambiguous" +1 for this.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21337: [SPARK-24234][SS] Reader for continuous processin...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21337#discussion_r188601016

--- Diff:

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/continuous/shuffle/UnsafeRowReceiver.scala

---

@@ -0,0 +1,56 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.streaming.continuous.shuffle

+

+import java.util.concurrent.{ArrayBlockingQueue, BlockingQueue}

+import java.util.concurrent.atomic.AtomicBoolean

+

+import org.apache.spark.internal.Logging

+import org.apache.spark.rpc.{RpcCallContext, RpcEnv, ThreadSafeRpcEndpoint}

+import org.apache.spark.sql.catalyst.expressions.UnsafeRow

+

+/**

+ * Messages for the UnsafeRowReceiver endpoint. Either an incoming row or

an epoch marker.

+ */

+private[shuffle] sealed trait UnsafeRowReceiverMessage extends Serializable

+private[shuffle] case class ReceiverRow(row: UnsafeRow) extends

UnsafeRowReceiverMessage

+private[shuffle] case class ReceiverEpochMarker() extends

UnsafeRowReceiverMessage

+

+/**

+ * RPC endpoint for receiving rows into a continuous processing shuffle

task.

+ */

+private[shuffle] class UnsafeRowReceiver(val rpcEnv: RpcEnv)

--- End diff --

override val rpcEnv here?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21332: [SPARK-24236][SS] Continuous replacement for ShuffleExch...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21332 cc @jose-torres As we discussion in #21293, the main difference between us is whether we can reuse current implementation of scheduler and shuffle, but in this part about the implementation of ShuffleExchangeExec, we may have the same imagine. Can you give a review about this? Great thanks! --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21332: [SPARK-24236][SS] Continuous replacement for Shuf...

GitHub user xuanyuanking opened a pull request: https://github.com/apache/spark/pull/21332 [SPARK-24236][SS] Continuous replacement for ShuffleExchangeExec ## What changes were proposed in this pull request? 1. New RDD named ContinuousShuffleRowRDD 2. New case class ContinuousShuffleExchangeExec 3. The rule named ReplaceShuffleExchange to replace original ShuffleExchange to ContinuousShuffleExhcange ## How was this patch tested? Existing UT. Add more UT later. Please review http://spark.apache.org/contributing.html before opening a pull request. You can merge this pull request into a Git repository by running: $ git pull https://github.com/xuanyuanking/spark SPARK-24236 Alternatively you can review and apply these changes as the patch at: https://github.com/apache/spark/pull/21332.patch To close this pull request, make a commit to your master/trunk branch with (at least) the following in the commit message: This closes #21332 commit 09e5ec9dcf3b1822eee02d10f29eb3f02d806485 Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-15T13:20:59Z Add ContinuousShuffledRowRDD for cp shuffle commit 70c2a2b3016703950746142823fec147041b5158 Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-08T12:41:46Z INF-SPARK-1388: Implementation of ContinuousShuffleExchange and corresponding rule commit bd05db1344e5d89136f9bda51f2c0cf292abe4cc Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-09T10:04:01Z INF-SPARK-1388: Refactor commit 91d1bab0eeea612565b701166070d88b2e36882e Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-15T13:41:59Z ContinuouShuffleRowRDD support multi compute calls commit 42c7b29ce49650c79f06d2269d4f2bff029ffe9e Author: Yuanjian Li <xyliyuanjian@...> Date: 2018-05-11T08:42:27Z INF-SPARK-1392: fix mima test --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21293: [SPARK-24237][SS] Continuous shuffle dependency and map ...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21293 @jose-torres Great thanks for you advise and guidance for us! I found the main difference between us is whether we can reuse current implementation of scheduler and shuffle. I marked in your design here:https://docs.google.com/document/d/1IL4kJoKrZWeyIhklKUJqsW-yEN7V7aL05MmM65AYOfE/edit?disco=B4z058g --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21293: [SPARK-24237][SS] Continuous shuffle dependency a...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21293#discussion_r188277683

--- Diff:

core/src/main/scala/org/apache/spark/scheduler/ContinuousShuffleMapTask.scala

---

@@ -0,0 +1,139 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.scheduler

+

+import java.lang.management.ManagementFactory

+import java.nio.ByteBuffer

+import java.util.Properties

+

+import scala.language.existentials

+

+import org.apache.spark._

+import org.apache.spark.broadcast.Broadcast

+import org.apache.spark.internal.Logging

+import org.apache.spark.rdd.RDD

+import org.apache.spark.shuffle.ShuffleWriter

+

+/**

+ * A ShuffleMapTask divides the elements of an RDD into multiple buckets

(based on a partitioner

+ * specified in the ShuffleDependency).

+ *

+ * See [[org.apache.spark.scheduler.Task]] for more information.

+ *

+ * @param stageId id of the stage this task belongs to

+ * @param stageAttemptId attempt id of the stage this task belongs to

+ * @param taskBinary broadcast version of the RDD and the

ShuffleDependency. Once deserialized,

+ * the type should be (RDD[_], ShuffleDependency[_, _,

_]).

+ * @param partition partition of the RDD this task is associated with

+ * @param locs preferred task execution locations for locality scheduling

+ * @param localProperties copy of thread-local properties set by the user

on the driver side.

+ * @param serializedTaskMetrics a `TaskMetrics` that is created and

serialized on the driver side

+ * and sent to executor side.

+ * @param totalShuffleNum total shuffle number for current job.

+ *

+ * The parameters below are optional:

+ * @param jobId id of the job this task belongs to

+ * @param appId id of the app this task belongs to

+ * @param appAttemptId attempt id of the app this task belongs to

+ */

+private[spark] class ContinuousShuffleMapTask(

+stageId: Int,

+stageAttemptId: Int,

+taskBinary: Broadcast[Array[Byte]],

+partition: Partition,

+@transient private var locs: Seq[TaskLocation],

+localProperties: Properties,

+serializedTaskMetrics: Array[Byte],

+totalShuffleNum: Int,

+jobId: Option[Int] = None,

+appId: Option[String] = None,

+appAttemptId: Option[String] = None)

+ extends Task[Unit](stageId, stageAttemptId, partition.index,

localProperties,

+serializedTaskMetrics, jobId, appId, appAttemptId)

+with Logging {

+

+ /** A constructor used only in test suites. This does not require

passing in an RDD. */

+ def this(partitionId: Int, totalShuffleNum: Int) {

+this(0, 0, null, new Partition { override def index: Int = 0 }, null,

new Properties,

+ null, totalShuffleNum)

+ }

+

+ @transient private val preferredLocs: Seq[TaskLocation] = {

+if (locs == null) Nil else locs.toSet.toSeq

+ }

+

+ // TODO: Get current epoch from epoch coordinator while task restart,

also epoch is Long, we

+ // should deal with it.

+ var currentEpoch =

context.getLocalProperty(SparkEnv.START_EPOCH_KEY).toInt

+

+ override def runTask(context: TaskContext): Unit = {

+// Deserialize the RDD using the broadcast variable.

+val threadMXBean = ManagementFactory.getThreadMXBean

+val deserializeStartTime = System.currentTimeMillis()

+val deserializeStartCpuTime = if

(threadMXBean.isCurrentThreadCpuTimeSupported) {

+ threadMXBean.getCurrentThreadCpuTime

+} else 0L

+val ser = SparkEnv.get.closureSerializer.newInstance()

+// TODO: rdd here should be a wrap of ShuffledRowRDD which never stop

+val (rdd, dep) = ser.deserialize[(RDD[_], ShuffleDependency[_, _, _])](

+ ByteBuffer.wrap(taskBinary.value),

Thread.currentThread.getContextClassLoader)

+_executorDeserializeTime = System.currentTimeMillis() -

[GitHub] spark pull request #21293: [SPARK-24237][SS] Continuous shuffle dependency a...

Github user xuanyuanking commented on a diff in the pull request: https://github.com/apache/spark/pull/21293#discussion_r188273722 --- Diff: core/src/main/scala/org/apache/spark/MapOutputTracker.scala --- @@ -769,6 +796,43 @@ private[spark] class MapOutputTrackerWorker(conf: SparkConf) extends MapOutputTr } } +/** + * MapOutputTrackerWorker for continuous processing, the main difference with MapOutputTracker + * is waiting for a time when the upstream's map output status not ready. --- End diff -- The current implement of ContinuousShuffleMapOutputTracker didn't change existing behavior, just choose the corresponding output tracker instance by the job type. After we use a better way of mark a job is continuous mode(discuss in https://github.com/apache/spark/pull/21293#discussion-diff-187368178R231), they do different things and not use same codepath. --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21293: [SPARK-24237][SS] Continuous shuffle dependency a...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21293#discussion_r188270290

--- Diff: core/src/main/scala/org/apache/spark/Dependency.scala ---

@@ -88,14 +90,53 @@ class ShuffleDependency[K: ClassTag, V: ClassTag, C:

ClassTag](

private[spark] val combinerClassName: Option[String] =

Option(reflect.classTag[C]).map(_.runtimeClass.getName)

- val shuffleId: Int = _rdd.context.newShuffleId()

+ val shuffleId: Int = if (isContinuous) {

+// This will not be reset in continuous processing, set an invalid

value for now.

+Int.MinValue

+ } else {

+_rdd.context.newShuffleId()

+ }

- val shuffleHandle: ShuffleHandle =

_rdd.context.env.shuffleManager.registerShuffle(

-shuffleId, _rdd.partitions.length, this)

+ val shuffleHandle: ShuffleHandle = if (isContinuous) {

+null

+ } else {

+_rdd.context.env.shuffleManager.registerShuffle(

+ shuffleId, _rdd.partitions.length, this)

+ }

- _rdd.sparkContext.cleaner.foreach(_.registerShuffleForCleanup(this))

+ if (!isContinuous) {

+_rdd.sparkContext.cleaner.foreach(_.registerShuffleForCleanup(this))

+ }

}

+/**

+ * :: DeveloperApi ::

+ * Represents a dependency on the output of a shuffle stage of continuous

type.

+ * Different with ShuffleDependency, the continuous dependency only create

on Executor side,

+ * so the rdd in param is deserialized from taskBinary.

+ */

+@DeveloperApi

+class ContinuousShuffleDependency[K: ClassTag, V: ClassTag, C: ClassTag](

+rdd: RDD[_ <: Product2[K, V]],

+dep: ShuffleDependency[K, V, C],

+continuousEpoch: Int,

+totalShuffleNum: Int,

+shuffleNumMaps: Int)

+ extends ShuffleDependency[K, V, C](

+rdd,

+dep.partitioner,

+dep.serializer,

+dep.keyOrdering,

+dep.aggregator,

+dep.mapSideCombine, true) {

+

+ val baseShuffleId: Int = dep.shuffleId

+

+ override val shuffleId: Int = continuousEpoch * totalShuffleNum +

baseShuffleId

--- End diff --

Got it, if we move EpochCoordinator to SparkEnv, I think we can

re-implement the shuffle register on driver side, totally controlled by

EpochCoordinator. Even if the EpochCoordinator doesn't manage the shuffle

register work, I think EpochCoordinator should move to SparkEnv and take more

work in shuffle support in your design, am I right?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21293: [SPARK-24237][SS] Continuous shuffle dependency a...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21293#discussion_r188269208

--- Diff: core/src/main/scala/org/apache/spark/Dependency.scala ---

@@ -65,15 +65,17 @@ abstract class NarrowDependency[T](_rdd: RDD[T])

extends Dependency[T] {

* @param keyOrdering key ordering for RDD's shuffles

* @param aggregator map/reduce-side aggregator for RDD's shuffle

* @param mapSideCombine whether to perform partial aggregation (also

known as map-side combine)

+ * @param isContinuous mark the dependency is base for continuous

processing or not

--- End diff --

Got it, if we think currently implementation is tricky here, we can change

the implementation by getting rid of the ContinuousShuffleDependency, just as

you said in jira comments :"We might not need this to be an actual

org.apache.spark.Dependency."

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21114: [SPARK-22371][CORE] Return None instead of throwi...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21114#discussion_r188152980

--- Diff: core/src/test/scala/org/apache/spark/AccumulatorSuite.scala ---

@@ -237,6 +236,65 @@ class AccumulatorSuite extends SparkFunSuite with

Matchers with LocalSparkContex

acc.merge("kindness")

assert(acc.value === "kindness")

}

+

+ test("updating garbage collected accumulators") {

--- End diff --

This test can reproduce the crush scenario in original code base and

successful ended after this patch. I think @cloud-fan is worrying about this

test shouldn't commit in code base because it complexityï¼

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark issue #21114: [SPARK-22371][CORE] Return None instead of throwing an e...

Github user xuanyuanking commented on the issue: https://github.com/apache/spark/pull/21114 cc @cloud-fan --- - To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21114: [SPARK-22371][CORE] Return None instead of throwi...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21114#discussion_r187823469

--- Diff: core/src/test/scala/org/apache/spark/AccumulatorSuite.scala ---

@@ -237,6 +236,65 @@ class AccumulatorSuite extends SparkFunSuite with

Matchers with LocalSparkContex

acc.merge("kindness")

assert(acc.value === "kindness")

}

+

+ test("updating garbage collected accumulators") {

+// Simulate FetchFailedException in the first attempt to force a retry.

+// Then complete remaining task from the first attempt after the second

+// attempt started, but before it completes. Completion event for the

first

+// attempt will try to update garbage collected accumulators.

+val numPartitions = 2

+sc = new SparkContext("local[2]", "test")

+

+val attempt0Latch = new TestLatch("attempt0")

+val attempt1Latch = new TestLatch("attempt1")

+

+val x = sc.parallelize(1 to 100, numPartitions).groupBy(identity)

+val sid = x.dependencies.head.asInstanceOf[ShuffleDependency[_, _,

_]].shuffleHandle.shuffleId

+val rdd = x.mapPartitionsWithIndex { case (i, iter) =>

+ val taskContext = TaskContext.get()

+ if (taskContext.stageAttemptNumber() == 0) {

+if (i == 0) {

+ // Fail the first task in the first stage attempt to force retry.

+ throw new FetchFailedException(

+SparkEnv.get.blockManager.blockManagerId,

+sid,

+taskContext.partitionId(),

+taskContext.partitionId(),

+"simulated fetch failure")

+} else {

+ // Wait till the second attempt starts.

+ attempt0Latch.await()

+ iter

+}

+ } else {

+if (i == 0) {

+ // Wait till the first attempt completes.

+ attempt1Latch.await()

+}

+iter

+ }

+}

+

+sc.addSparkListener(new SparkListener {

+ override def onTaskStart(taskStart: SparkListenerTaskStart): Unit = {

+if (taskStart.stageId == 1 && taskStart.stageAttemptId == 1) {

--- End diff --

Got it.

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org

For additional commands, e-mail: reviews-h...@spark.apache.org

[GitHub] spark pull request #21114: [SPARK-22371][CORE] Return None instead of throwi...

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21114#discussion_r187763308

--- Diff: core/src/test/scala/org/apache/spark/AccumulatorSuite.scala ---

@@ -237,6 +236,65 @@ class AccumulatorSuite extends SparkFunSuite with

Matchers with LocalSparkContex

acc.merge("kindness")

assert(acc.value === "kindness")

}

+

+ test("updating garbage collected accumulators") {

+// Simulate FetchFailedException in the first attempt to force a retry.

+// Then complete remaining task from the first attempt after the second

+// attempt started, but before it completes. Completion event for the

first

+// attempt will try to update garbage collected accumulators.

+val numPartitions = 2

+sc = new SparkContext("local[2]", "test")

+

+val attempt0Latch = new TestLatch("attempt0")

+val attempt1Latch = new TestLatch("attempt1")

+

+val x = sc.parallelize(1 to 100, numPartitions).groupBy(identity)

+val sid = x.dependencies.head.asInstanceOf[ShuffleDependency[_, _,

_]].shuffleHandle.shuffleId

+val rdd = x.mapPartitionsWithIndex { case (i, iter) =>

+ val taskContext = TaskContext.get()

+ if (taskContext.stageAttemptNumber() == 0) {

+if (i == 0) {

+ // Fail the first task in the first stage attempt to force retry.

+ throw new FetchFailedException(

+SparkEnv.get.blockManager.blockManagerId,

+sid,

+taskContext.partitionId(),

+taskContext.partitionId(),

+"simulated fetch failure")

+} else {

+ // Wait till the second attempt starts.

+ attempt0Latch.await()

+ iter

+}

+ } else {

+if (i == 0) {

+ // Wait till the first attempt completes.

+ attempt1Latch.await()

+}

+iter

+ }

+}

+

+sc.addSparkListener(new SparkListener {

+ override def onTaskStart(taskStart: SparkListenerTaskStart): Unit = {

+if (taskStart.stageId == 1 && taskStart.stageAttemptId == 1) {

--- End diff --

Should we add 'taskStart.taskInfo.index == 0' here to make sure it's the

partition 0?

---

-

To unsubscribe, e-mail: reviews-unsubscr...@spark.apache.org