[jira] [Work logged] (HDFS-16291) Make the comment of INode#ReclaimContext more standardized

[

https://issues.apache.org/jira/browse/HDFS-16291?focusedWorklogId=674412&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674412

]

ASF GitHub Bot logged work on HDFS-16291:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 07:15

Start Date: 03/Nov/21 07:15

Worklog Time Spent: 10m

Work Description: jianghuazhu commented on a change in pull request #3602:

URL: https://github.com/apache/hadoop/pull/3602#discussion_r741662220

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/namenode/INode.java

##

@@ -993,15 +993,13 @@ public long getNsDelta() {

private final QuotaDelta quotaDelta;

/**

- * @param bsps

- * block storage policy suite to calculate intended storage type

Review comment:

Thanks @ferhui for the comment and review.

I will update it later.

The new style will look like this:

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscr...@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

Issue Time Tracking

---

Worklog Id: (was: 674412)

Time Spent: 1.5h (was: 1h 20m)

> Make the comment of INode#ReclaimContext more standardized

> --

>

> Key: HDFS-16291

> URL: https://issues.apache.org/jira/browse/HDFS-16291

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: documentation, namenode

>Affects Versions: 3.4.0

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Minor

> Labels: pull-request-available

> Attachments: image-2021-10-31-20-25-08-379.png

>

> Time Spent: 1.5h

> Remaining Estimate: 0h

>

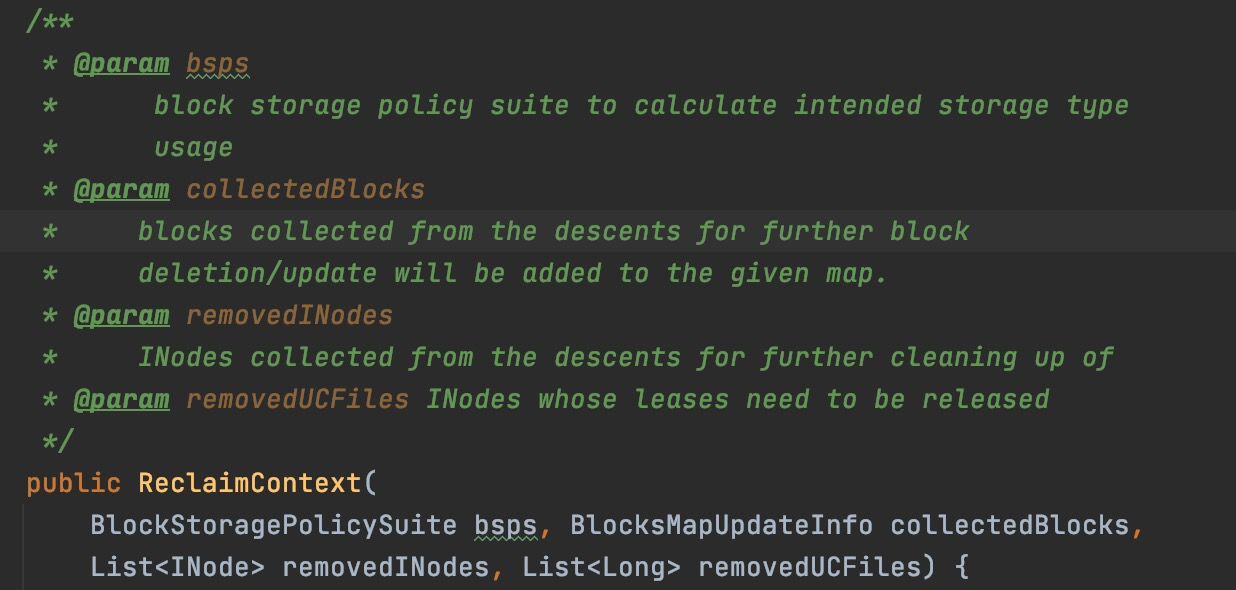

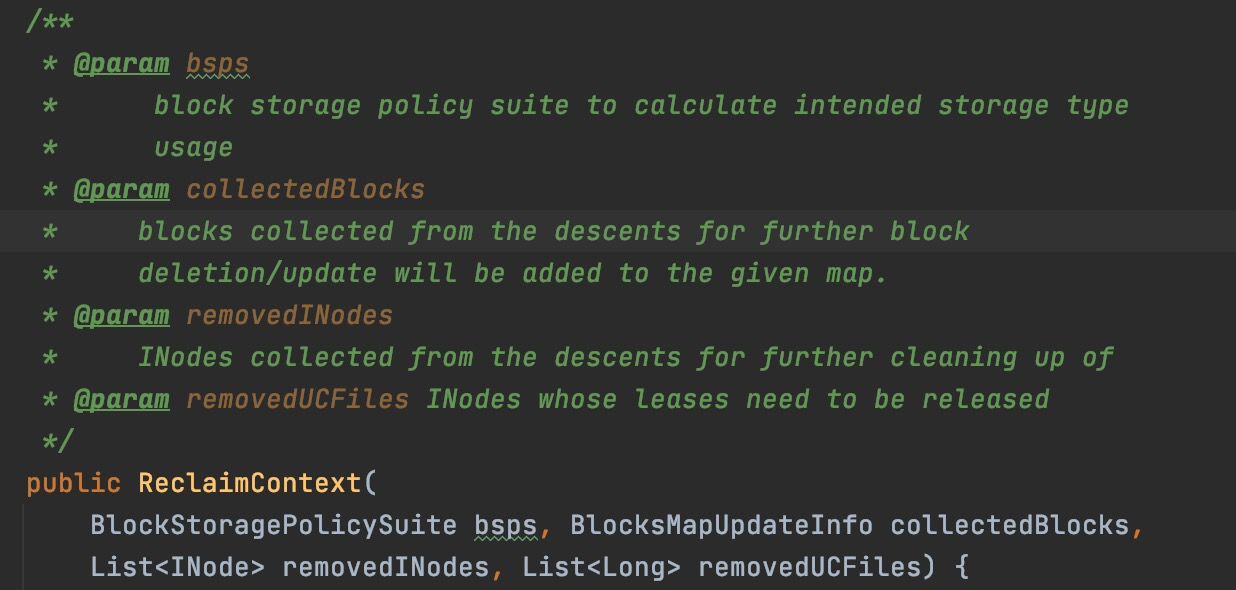

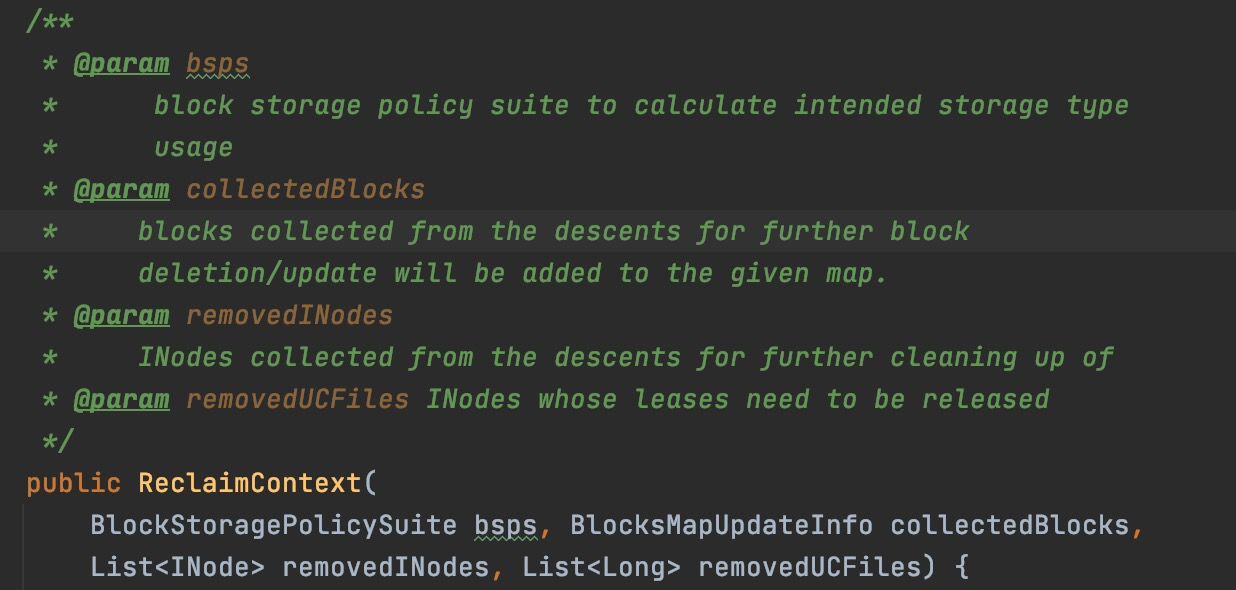

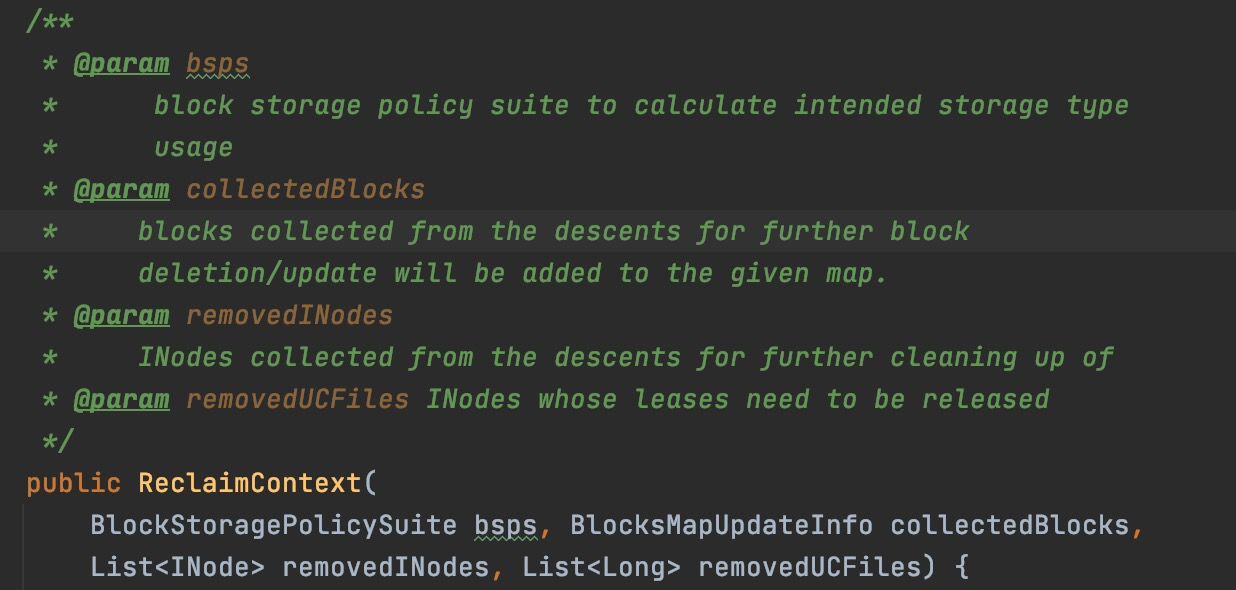

> In the INode#ReclaimContext class, some comments are not

> standardized enough.

> For example:

> !image-2021-10-31-20-25-08-379.png!

> We should make the comments more standardized, which will make them more readable.
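As an illustration of what a more standardized comment could look like, the sketch below uses a hypothetical stand-in class. Apart from `bsps` and its description (taken from the diff above), the parameter names, types, and wording are assumptions, and this is not necessarily the style adopted in PR #3602. The layout puts each `@param` name and its description on one line, with wrapped text indented beneath it.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical stand-in for INode#ReclaimContext, used only to illustrate one
 * standardized Javadoc layout. It is not the real Hadoop class.
 */
final class ReclaimContextSketch {
  private final Object bsps;
  private final List<Long> removedUCFiles;

  /**
   * Creates a context that accumulates state while INodes are reclaimed.
   *
   * @param bsps block storage policy suite used to calculate the intended
   *     storage type usage (typed as Object here as a placeholder)
   * @param removedUCFiles IDs of under-construction files whose leases need
   *     to be released (illustrative parameter)
   */
  ReclaimContextSketch(Object bsps, List<Long> removedUCFiles) {
    this.bsps = bsps;
    this.removedUCFiles = removedUCFiles;
  }

  /** @return the block storage policy suite passed to the constructor */
  Object storagePolicySuite() {
    return bsps;
  }

  /** @return the mutable list of under-construction file IDs */
  List<Long> removedUCFiles() {
    return removedUCFiles;
  }

  public static void main(String[] args) {
    ReclaimContextSketch ctx =
        new ReclaimContextSketch("policySuite", new ArrayList<>());
    ctx.removedUCFiles().add(42L);
    System.out.println(ctx.removedUCFiles()); // prints [42]
  }
}
```

Keeping the tag name and the start of its description on the same line makes the comment easier to scan and matches the layout most Javadoc style guides recommend.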

--

This message was sent by Atlassian Jira

(v8.3.4#803005)

-

To unsubscribe, e-mail: hdfs-issues-unsubscr...@hadoop.apache.org

For additional commands, e-mail: hdfs-issues-h...@hadoop.apache.org

[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674434&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674434

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 08:16

Start Date: 03/Nov/21 08:16

Worklog Time Spent: 10m

Work Description: haiyang1987 commented on pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#issuecomment-958731868

> @haiyang1987 Thanks for the contribution. Some comments: we can change the title here and in the JIRA if dfs.namenode.block-placement-policy.exclude-slow-nodes.enabled is not reconfigurable. And I will check whether dfs.namenode.block-placement-policy.exclude-slow-nodes.enabled is unused.

@ferhui Thank you for your review.

1. I have already changed the title here and in the JIRA.

2. 'dfs.namenode.block-placement-policy.exclude-slow-nodes.enabled' is used in BlockPlacementPolicyDefault.

Issue Time Tracking

---

Worklog Id: (was: 674434)

Time Spent: 3h 50m (was: 3h 40m)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 3h 50m

> Remaining Estimate: 0h

>

> 1. Consider making dfs.namenode.avoid.read.slow.datanode reconfigurable

> so that the feature from [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076]

> can be rolled back quickly if something unexpected happens in a production environment.

> 2. Control DatanodeManager#startSlowPeerCollector with the parameter

> 'dfs.datanode.peer.stats.enabled'.

[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674437&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674437

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 08:20

Start Date: 03/Nov/21 08:20

Worklog Time Spent: 10m

Work Description: haiyang1987 closed pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596

Issue Time Tracking

---

Worklog Id: (was: 674437)

Time Spent: 4h 10m (was: 4h)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 4h 10m

> Remaining Estimate: 0h

>

> 1. Consider making dfs.namenode.avoid.read.slow.datanode reconfigurable

> so that the feature from [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076]

> can be rolled back quickly if something unexpected happens in a production environment.

> 2. Control DatanodeManager#startSlowPeerCollector with the parameter

> 'dfs.datanode.peer.stats.enabled'.

[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674436&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674436

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 08:20

Start Date: 03/Nov/21 08:20

Worklog Time Spent: 10m

Work Description: haiyang1987 commented on pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#issuecomment-958734227

> @haiyang1987 Thanks for the contribution. Some comments: we can change the title here and in the JIRA if dfs.namenode.block-placement-policy.exclude-slow-nodes.enabled is not reconfigurable. And I will check whether dfs.namenode.block-placement-policy.exclude-slow-nodes.enabled is unused.

@ferhui Thank you for your review.

1. I have already changed the title here and in the JIRA.

2. 'dfs.namenode.block-placement-policy.exclude-slow-nodes.enabled' is used in BlockPlacementPolicyDefault.

Issue Time Tracking

---

Worklog Id: (was: 674436)

Time Spent: 4h (was: 3h 50m)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 4h

> Remaining Estimate: 0h

>

> 1. Consider making dfs.namenode.avoid.read.slow.datanode reconfigurable

> so that the feature from [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076]

> can be rolled back quickly if something unexpected happens in a production environment.

> 2. Control DatanodeManager#startSlowPeerCollector with the parameter

> 'dfs.datanode.peer.stats.enabled'.

[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674438&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674438

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 08:20

Start Date: 03/Nov/21 08:20

Worklog Time Spent: 10m

Work Description: haiyang1987 removed a comment on pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#issuecomment-958731868

> @haiyang1987 Thanks for the contribution. Some comments: we can change the title here and in the JIRA if dfs.namenode.block-placement-policy.exclude-slow-nodes.enabled is not reconfigurable. And I will check whether dfs.namenode.block-placement-policy.exclude-slow-nodes.enabled is unused.

@ferhui Thank you for your review.

1. I have already changed the title here and in the JIRA.

2. 'dfs.namenode.block-placement-policy.exclude-slow-nodes.enabled' is used in BlockPlacementPolicyDefault.

Issue Time Tracking

---

Worklog Id: (was: 674438)

Time Spent: 4h 20m (was: 4h 10m)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 4h 20m

> Remaining Estimate: 0h

>

> 1. Consider making dfs.namenode.avoid.read.slow.datanode reconfigurable

> so that the feature from [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076]

> can be rolled back quickly if something unexpected happens in a production environment.

> 2. Control DatanodeManager#startSlowPeerCollector with the parameter

> 'dfs.datanode.peer.stats.enabled'.

[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674439&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674439

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 08:21

Start Date: 03/Nov/21 08:21

Worklog Time Spent: 10m

Work Description: haiyang1987 opened a new pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596

### Description of PR

Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

Details: HDFS-16287

### For code changes:

- [ ] Make dfs.namenode.avoid.read.slow.datanode reconfigurable so that the feature from HDFS-16076 can be rolled back quickly if something unexpected happens in a production environment

- [ ] Control DatanodeManager#startSlowPeerCollector with the parameter 'dfs.datanode.peer.stats.enabled'

Issue Time Tracking

---

Worklog Id: (was: 674439)

Time Spent: 4.5h (was: 4h 20m)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 4.5h

> Remaining Estimate: 0h

>

> 1. Consider making dfs.namenode.avoid.read.slow.datanode reconfigurable

> so that the feature from [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076]

> can be rolled back quickly if something unexpected happens in a production environment.

> 2. Control DatanodeManager#startSlowPeerCollector with the parameter

> 'dfs.datanode.peer.stats.enabled'.

[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674440&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674440

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 08:22

Start Date: 03/Nov/21 08:22

Worklog Time Spent: 10m

Work Description: haiyang1987 commented on a change in pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#discussion_r741696525

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/blockmanagement/DatanodeManager.java

##

@@ -260,17 +257,14 @@

final Timer timer = new Timer();

this.slowPeerTracker = dataNodePeerStatsEnabled ?

new SlowPeerTracker(conf, timer) : null;

-this.excludeSlowNodesEnabled = conf.getBoolean(

-DFS_NAMENODE_BLOCKPLACEMENTPOLICY_EXCLUDE_SLOW_NODES_ENABLED_KEY,

-DFS_NAMENODE_BLOCKPLACEMENTPOLICY_EXCLUDE_SLOW_NODES_ENABLED_DEFAULT);

this.maxSlowPeerReportNodes = conf.getInt(

DFSConfigKeys.DFS_NAMENODE_MAX_SLOWPEER_COLLECT_NODES_KEY,

DFSConfigKeys.DFS_NAMENODE_MAX_SLOWPEER_COLLECT_NODES_DEFAULT);

this.slowPeerCollectionInterval = conf.getTimeDuration(

DFSConfigKeys.DFS_NAMENODE_SLOWPEER_COLLECT_INTERVAL_KEY,

DFSConfigKeys.DFS_NAMENODE_SLOWPEER_COLLECT_INTERVAL_DEFAULT,

TimeUnit.MILLISECONDS);

-if (slowPeerTracker != null && excludeSlowNodesEnabled) {

Review comment:

@tomscut Thank you for your review.

1. Currently, the parameters 'dataNodePeerStatsEnabled' and 'excludeSlowNodesEnabled'

decide whether the SlowPeerCollector thread is started, but this does not take

the avoidSlowDataNodesForRead logic into account.

2. So consider two phases:

a. First, start the SlowPeerCollector thread.

b. Second, control whether reads/writes avoid slow DataNodes

through dynamic (reconfigurable) parameters.
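The start-up condition discussed in this comment can be sketched as a pair of predicates. The "before" rule mirrors the removed line `slowPeerTracker != null && excludeSlowNodesEnabled` (the tracker is non-null only when peer stats are enabled). The "after" rule is an assumption inferred from point 2 of the issue description, not code copied from PR #3596. Class and method names are hypothetical.

```java
/**
 * Hypothetical sketch of the start-up decision discussed in the review.
 * Phase 1: decide whether the SlowPeerCollector thread should run.
 * Phase 2 (not shown): reconfigurable flags decide whether reads and writes
 * actually avoid the slow nodes that the collector reports.
 */
final class SlowPeerCollectorStartup {

  /**
   * Condition removed by the diff: the tracker exists only when peer stats
   * are enabled, and the write-side exclude flag must also be on.
   */
  static boolean startBeforePatch(boolean peerStatsEnabled,
      boolean excludeSlowNodesEnabled) {
    return peerStatsEnabled && excludeSlowNodesEnabled;
  }

  /**
   * Assumed condition after the change: peer stats alone decide, so the
   * slow-node data is also available to the read-side avoidance logic.
   */
  static boolean startAfterPatch(boolean peerStatsEnabled) {
    return peerStatsEnabled;
  }

  public static void main(String[] args) {
    // Peer stats on, write-side exclusion off, read-side avoidance wanted.
    boolean peerStats = true;
    boolean excludeSlowNodes = false;
    System.out.println(startBeforePatch(peerStats, excludeSlowNodes)); // false
    System.out.println(startAfterPatch(peerStats)); // true
  }
}
```

Under the old rule, a cluster that wants only read-side avoidance never starts the collector, so the slow-node set stays empty and the read-side check has nothing to act on.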


Issue Time Tracking

---

Worklog Id: (was: 674440)

Time Spent: 4h 40m (was: 4.5h)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 4h 40m

> Remaining Estimate: 0h

>

> 1. Consider making dfs.namenode.avoid.read.slow.datanode reconfigurable

> so that the feature from [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076]

> can be rolled back quickly if something unexpected happens in a production environment.

> 2. Control DatanodeManager#startSlowPeerCollector with the parameter

> 'dfs.datanode.peer.stats.enabled'.


[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674447&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674447

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 08:40

Start Date: 03/Nov/21 08:40

Worklog Time Spent: 10m

Work Description: haiyang1987 commented on a change in pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#discussion_r741707936

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/blockmanagement/DatanodeManager.java

##

@@ -511,7 +505,16 @@ private boolean isInactive(DatanodeInfo datanode) {

private boolean isSlowNode(String dnUuid) {

return avoidSlowDataNodesForRead && slowNodesUuidSet.contains(dnUuid);

}

-

+

+ public void setAvoidSlowDataNodesForReadEnabled(boolean enable) {

Review comment:

Considering that slowNodesUuidSet is generated when the SlowPeerCollector thread

is started, is it therefore logical to check whether the dnUuid exists in

slowNodesUuidSet?
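A minimal sketch of how a runtime switch like setAvoidSlowDataNodesForReadEnabled could interact with the isSlowNode check shown in the diff, assuming the flag is a volatile field and the slow-node set is a concurrent set filled by the collector thread. The class SlowNodeReadFilter and the markSlow helper are hypothetical; the real DatanodeManager fields and synchronization may differ.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/**
 * Hypothetical sketch: a read-side slow-node filter whose on/off switch can
 * be flipped at runtime, in the spirit of setAvoidSlowDataNodesForReadEnabled.
 */
final class SlowNodeReadFilter {
  // volatile so a change made by a reconfiguration thread becomes visible to
  // threads serving block locations without extra locking.
  private volatile boolean avoidSlowDataNodesForRead;

  // Filled by a (hypothetical) collector thread; safe for concurrent access.
  private final Set<String> slowNodesUuidSet = ConcurrentHashMap.newKeySet();

  SlowNodeReadFilter(boolean initiallyEnabled) {
    this.avoidSlowDataNodesForRead = initiallyEnabled;
  }

  void setAvoidSlowDataNodesForReadEnabled(boolean enable) {
    this.avoidSlowDataNodesForRead = enable;
  }

  /** Stand-in for the collector reporting a slow DataNode. */
  void markSlow(String dnUuid) {
    slowNodesUuidSet.add(dnUuid);
  }

  /** Same shape as the isSlowNode check in the diff. */
  boolean isSlowNode(String dnUuid) {
    return avoidSlowDataNodesForRead && slowNodesUuidSet.contains(dnUuid);
  }

  public static void main(String[] args) {
    SlowNodeReadFilter filter = new SlowNodeReadFilter(true);
    filter.markSlow("dn-1");
    System.out.println(filter.isSlowNode("dn-1")); // true
    filter.setAvoidSlowDataNodesForReadEnabled(false); // e.g. quick rollback
    System.out.println(filter.isSlowNode("dn-1")); // false
  }
}
```

Because the flag is checked first, turning it off makes every node non-slow for reads immediately, even while the collector keeps updating the set; that is the quick-rollback behavior the issue asks for.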


Issue Time Tracking

---

Worklog Id: (was: 674447)

Time Spent: 4h 50m (was: 4h 40m)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 4h 50m

> Remaining Estimate: 0h

>

> 1. Consider making dfs.namenode.avoid.read.slow.datanode reconfigurable

> so that the feature from [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076]

> can be rolled back quickly if something unexpected happens in a production environment.

> 2. Control DatanodeManager#startSlowPeerCollector with the parameter

> 'dfs.datanode.peer.stats.enabled'.


[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674448&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674448

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 08:40

Start Date: 03/Nov/21 08:40

Worklog Time Spent: 10m

Work Description: haiyang1987 commented on a change in pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#discussion_r741707936

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/blockmanagement/DatanodeManager.java

##

@@ -511,7 +505,16 @@ private boolean isInactive(DatanodeInfo datanode) {

private boolean isSlowNode(String dnUuid) {

return avoidSlowDataNodesForRead && slowNodesUuidSet.contains(dnUuid);

}

-

+

+ public void setAvoidSlowDataNodesForReadEnabled(boolean enable) {

Review comment:

Considering that slowNodesUuidSet is generated when the SlowPeerCollector thread

is started, is it therefore logical to check whether the dnUuid exists in

slowNodesUuidSet?


Issue Time Tracking

---

Worklog Id: (was: 674448)

Time Spent: 5h (was: 4h 50m)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 5h

> Remaining Estimate: 0h

>

> 1. Consider making dfs.namenode.avoid.read.slow.datanode reconfigurable

> so that the feature from [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076]

> can be rolled back quickly if something unexpected happens in a production environment.

> 2. Control DatanodeManager#startSlowPeerCollector with the parameter

> 'dfs.datanode.peer.stats.enabled'.


[jira] [Work logged] (HDFS-16291) Make the comment of INode#ReclaimContext more standardized

[

https://issues.apache.org/jira/browse/HDFS-16291?focusedWorklogId=674459&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674459

]

ASF GitHub Bot logged work on HDFS-16291:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 08:49

Start Date: 03/Nov/21 08:49

Worklog Time Spent: 10m

Work Description: jianghuazhu commented on pull request #3602:

URL: https://github.com/apache/hadoop/pull/3602#issuecomment-958751029

Thank you very much. @ferhui @virajjasani

Issue Time Tracking

---

Worklog Id: (was: 674459)

Time Spent: 1h 40m (was: 1.5h)

> Make the comment of INode#ReclaimContext more standardized

> --

>

> Key: HDFS-16291

> URL: https://issues.apache.org/jira/browse/HDFS-16291

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: documentation, namenode

>Affects Versions: 3.4.0

>Reporter: JiangHua Zhu

>Assignee: JiangHua Zhu

>Priority: Minor

> Labels: pull-request-available

> Attachments: image-2021-10-31-20-25-08-379.png

>

> Time Spent: 1h 40m

> Remaining Estimate: 0h

>

> In the INode#ReclaimContext class, some comments are not

> standardized enough.

> For example:

> !image-2021-10-31-20-25-08-379.png!

> We should make the comments more standardized, which will make them more readable.

[jira] [Created] (HDFS-16297) striped block was deleted less than 1 replication

chan created HDFS-16297:

---

Summary: striped block was deleted less than 1 replication

Key: HDFS-16297

URL: https://issues.apache.org/jira/browse/HDFS-16297

Project: Hadoop HDFS

Issue Type: Bug

Components: block placement, namenode

Affects Versions: 3.2.1

Reporter: chan

In my cluster, the balancer is running. I found an EC file (6-3) with missing blocks; four blocks were deleted, leaving less than 1 replica. I think it's dangerous.

[jira] [Work logged] (HDFS-16286) Debug tool to verify the correctness of erasure coding on file

[

https://issues.apache.org/jira/browse/HDFS-16286?focusedWorklogId=674488&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674488

]

ASF GitHub Bot logged work on HDFS-16286:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 09:48

Start Date: 03/Nov/21 09:48

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3593:

URL: https://github.com/apache/hadoop/pull/3593#issuecomment-958791127

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 52s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 35m 22s | | trunk passed |
| +1 :green_heart: | compile | 1m 32s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 17s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 0m 59s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 35s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 59s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 25s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 41s | | trunk passed |
| +1 :green_heart: | shadedclient | 25m 47s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 18s | | the patch passed |
| +1 :green_heart: | compile | 1m 19s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 19s | | the patch passed |
| +1 :green_heart: | compile | 1m 9s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 9s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 52s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 16s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 48s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 18s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 22s | | the patch passed |
| +1 :green_heart: | shadedclient | 24m 50s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| +1 :green_heart: | unit | 324m 13s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 39s | | The patch does not generate ASF License warnings. |
| | | 431m 32s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3593/3/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3593 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 04f9538a1b9b 4.15.0-147-generic #151-Ubuntu SMP Fri Jun 18 19:21:19 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 21c1887fd7d0ede169c42e11b0c793c717dc7c47 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3593/3/testReport/ |
| Max. process+thread count | 1996 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3593/3/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |


[jira] [Work logged] (HDFS-16286) Debug tool to verify the correctness of erasure coding on file

[

https://issues.apache.org/jira/browse/HDFS-16286?focusedWorklogId=674524&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674524

]

ASF GitHub Bot logged work on HDFS-16286:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 10:45

Start Date: 03/Nov/21 10:45

Worklog Time Spent: 10m

Work Description: sodonnel commented on pull request #3593:

URL: https://github.com/apache/hadoop/pull/3593#issuecomment-958887599

@cndaimin I was about to commit this, and I remembered we should update the documentation to include this command. The documentation is in a markdown file and gets published with the release, like here:

https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-hdfs/HDFSCommands.html#Debug_Commands

That page is generated from:

```
hadoop-hdfs-project/hadoop-hdfs/src/site/markdown/HDFSCommands.md
```

Would you be able to add a section for this new command under the Debug_Commands section, please?

Issue Time Tracking

---

Worklog Id: (was: 674524)

Time Spent: 3h 50m (was: 3h 40m)

> Debug tool to verify the correctness of erasure coding on file

> --

>

> Key: HDFS-16286

> URL: https://issues.apache.org/jira/browse/HDFS-16286

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: erasure-coding, tools

>Affects Versions: 3.3.0, 3.3.1

>Reporter: daimin

>Assignee: daimin

>Priority: Minor

> Labels: pull-request-available

> Time Spent: 3h 50m

> Remaining Estimate: 0h

>

> Block data in an erasure-coded block group may become corrupted, and in some cases,

> such as EC reconstruction, the block metadata (checksum) cannot detect the corruption.

> Related issues: HDFS-14768, HDFS-15186, HDFS-15240.

> In addition to HDFS-15759, a tool is needed to check whether any block group of an

> erasure-coded file has data corruption, covering conditions other than EC

> reconstruction, or cases where the HDFS-15759 feature (validation during EC

> reconstruction) is not enabled (it is disabled by default).
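The general idea of checking an erasure-coded stripe for silent corruption can be sketched as follows: recompute the parity from the data cells and compare it with the stored parity. This is only an illustration under simplifying assumptions. It uses a single XOR parity cell as a stand-in for Reed-Solomon, works on in-memory byte arrays, and is not the implementation of the debug tool in PR #3593.

```java
import java.util.Arrays;

/**
 * Simplified illustration of verifying an erasure-coded stripe by re-encoding.
 * A single XOR parity cell stands in for Reed-Solomon parity.
 */
final class XorStripeVerifier {

  /** Computes XOR parity across equally sized data cells. */
  static byte[] encodeParity(byte[][] dataCells) {
    if (dataCells.length == 0) {
      throw new IllegalArgumentException("need at least one data cell");
    }
    byte[] parity = new byte[dataCells[0].length];
    for (byte[] cell : dataCells) {
      if (cell.length != parity.length) {
        throw new IllegalArgumentException("cells must be equal length");
      }
      for (int i = 0; i < parity.length; i++) {
        parity[i] ^= cell[i];
      }
    }
    return parity;
  }

  /** True if the stored parity matches parity recomputed from the data. */
  static boolean verify(byte[][] dataCells, byte[] storedParity) {
    return Arrays.equals(encodeParity(dataCells), storedParity);
  }

  public static void main(String[] args) {
    byte[][] data = {{1, 2, 3}, {4, 5, 6}, {7, 8, 9}};
    byte[] parity = encodeParity(data);
    System.out.println(verify(data, parity)); // true
    // Flip a bit in one data cell, as if it had been corrupted after the
    // parity was written (a per-block checksum computed over the bad data
    // would not notice this).
    data[1][0] ^= 0x10;
    System.out.println(verify(data, parity)); // false
  }
}
```

Re-encoding catches inconsistencies between data and parity units that per-block checksums cannot, because each checksum only vouches for the bytes it was computed over.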

[jira] [Commented] (HDFS-16297) striped block was deleted less than 1 replication

[

https://issues.apache.org/jira/browse/HDFS-16297?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel&focusedCommentId=17437953#comment-17437953

]

Ayush Saxena commented on HDFS-16297:

-

{quote}found an EC file (6-3) with missing blocks: four blocks were deleted,
leaving less than 1 replica. I think it's dangerous.
{quote}

Yes, it is dangerous, but knowing that alone does not help us solve it. Can
you share details about the file and the related logs: the NameNode logs, the
DataNodes where the block got deleted, the balancer logs, etc.?

Or, if you have found the root cause and it is a bug, you can submit a
patch/PR with the fix directly.

> striped block was deleted less than 1 replication

> -

>

> Key: HDFS-16297

> URL: https://issues.apache.org/jira/browse/HDFS-16297

> Project: Hadoop HDFS

> Issue Type: Bug

> Components: block placement, namanode

>Affects Versions: 3.2.1

>Reporter: chan

>Priority: Major

>

> In my cluster the balancer is running. I found an EC file (6-3) with missing
> blocks: four blocks were deleted, leaving less than 1 replica. I think it's
> dangerous.
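Why four lost internal blocks are dangerous can be checked with a little arithmetic. Assuming "6-3" refers to a Reed-Solomon policy with 6 data and 3 parity units (such as RS-6-3-1024k), each block group has 9 internal blocks and can be reconstructed from any 6 of them, so it tolerates at most 3 missing blocks; losing 4 makes the group unrecoverable. The helper below is a hypothetical illustration of that rule, not part of the HDFS API.

```java
/**
 * Hypothetical sketch: a striped block group encoded with k data units and
 * m parity units can be reconstructed as long as at least k of its k + m
 * internal blocks survive.
 */
class BlockGroupHealth {

  static boolean isRecoverable(int dataUnits, int parityUnits, int missingBlocks) {
    if (dataUnits <= 0 || parityUnits < 0 || missingBlocks < 0
        || missingBlocks > dataUnits + parityUnits) {
      throw new IllegalArgumentException("invalid block group layout");
    }
    int liveBlocks = dataUnits + parityUnits - missingBlocks;
    // Equivalent to missingBlocks <= parityUnits.
    return liveBlocks >= dataUnits;
  }

  public static void main(String[] args) {
    System.out.println(isRecoverable(6, 3, 3)); // true: 6 of 9 blocks remain
    System.out.println(isRecoverable(6, 3, 4)); // false: only 5 of 9 remain
  }
}
```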

[jira] [Work logged] (HDFS-16286) Debug tool to verify the correctness of erasure coding on file

[ https://issues.apache.org/jira/browse/HDFS-16286?focusedWorklogId=674557&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674557 ]

ASF GitHub Bot logged work on HDFS-16286:
-
Author: ASF GitHub Bot
Created on: 03/Nov/21 11:44
Start Date: 03/Nov/21 11:44
Worklog Time Spent: 10m
Work Description: cndaimin commented on pull request #3593:
URL: https://github.com/apache/hadoop/pull/3593#issuecomment-958953019

@sodonnel Thanks, the documentation file `HDFSCommands.md` has been updated.

Issue Time Tracking
---
Worklog Id: (was: 674557)
Time Spent: 4h (was: 3h 50m)

> Debug tool to verify the correctness of erasure coding on file
> --
>
> Key: HDFS-16286
> URL: https://issues.apache.org/jira/browse/HDFS-16286
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: erasure-coding, tools
> Affects Versions: 3.3.0, 3.3.1
> Reporter: daimin
> Assignee: daimin
> Priority: Minor
> Labels: pull-request-available
> Time Spent: 4h
> Remaining Estimate: 0h
>
> Block data in an erasure-coded block group may become corrupt, and in some
> cases, such as EC reconstruction, the block meta (checksum) cannot detect the
> corruption. Related issues: HDFS-14768, HDFS-15186, HDFS-15240.
> In addition to HDFS-15759, a tool is needed to check whether any block group
> of an erasure-coded file has data corruption under conditions other than EC
> reconstruction, or when the HDFS-15759 feature (validation during EC
> reconstruction) is not enabled (it is disabled by default now).
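The idea behind such a verification tool can be illustrated with a small sketch: re-encode the data cells of a stripe and compare the result with the stored parity, so that a mismatch reveals corruption the block checksum alone may not expose. This is only an illustration under stated assumptions: the class and method names are hypothetical, and single XOR parity stands in for the Reed-Solomon codec that real HDFS EC policies use. It is not the tool added in PR #3593.

```java
import java.util.Arrays;

/**
 * Hypothetical sketch: verify a stripe by re-computing parity from its data
 * cells and comparing it with the stored parity cell. XOR (single parity) is
 * a stand-in for the Reed-Solomon codec used by real EC policies.
 */
class StripeVerifier {

  /** Returns the byte-wise XOR of all data cells (cells must be equally sized). */
  static byte[] xorParity(byte[][] dataCells) {
    byte[] parity = new byte[dataCells[0].length];
    for (byte[] cell : dataCells) {
      if (cell.length != parity.length) {
        throw new IllegalArgumentException("cells must have equal length");
      }
      for (int i = 0; i < parity.length; i++) {
        parity[i] ^= cell[i];
      }
    }
    return parity;
  }

  /** True if the stored parity matches the parity re-encoded from the data. */
  static boolean verifyStripe(byte[][] dataCells, byte[] storedParity) {
    return Arrays.equals(xorParity(dataCells), storedParity);
  }

  public static void main(String[] args) {
    byte[][] data = { {1, 2, 3}, {4, 5, 6}, {7, 8, 9} };
    byte[] parity = xorParity(data);
    System.out.println(verifyStripe(data, parity)); // true

    data[1][0] = 42; // simulate silent corruption of one data cell
    System.out.println(verifyStripe(data, parity)); // false
  }
}
```

A real checker would decode with the file's actual erasure coding policy and read every internal block of the group; the XOR version only shows the compare-after-re-encode shape of the check.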

[jira] [Work logged] (HDFS-16296) RBF: RouterRpcFairnessPolicyController add denied permits for each nameservice

[

https://issues.apache.org/jira/browse/HDFS-16296?focusedWorklogId=674606&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674606

]

ASF GitHub Bot logged work on HDFS-16296:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 13:34

Start Date: 03/Nov/21 13:34

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3613:

URL: https://github.com/apache/hadoop/pull/3613#issuecomment-959090976

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 3s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 31m 52s | | trunk passed |
| +1 :green_heart: | compile | 0m 44s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 0m 41s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 0m 29s | | trunk passed |
| +1 :green_heart: | mvnsite | 0m 45s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 42s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 0m 57s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 1m 17s | | trunk passed |
| +1 :green_heart: | shadedclient | 20m 0s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 35s | | the patch passed |
| +1 :green_heart: | compile | 0m 35s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 0m 35s | | the patch passed |
| +1 :green_heart: | compile | 0m 30s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 0m 30s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 19s | | the patch passed |
| +1 :green_heart: | mvnsite | 0m 34s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 34s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 0m 52s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 1m 17s | | the patch passed |
| +1 :green_heart: | shadedclient | 19m 45s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| -1 :x: | unit | 35m 35s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs-rbf.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3613/2/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs-rbf.txt) | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 37s | | The patch does not generate ASF License warnings. |
| | | 121m 20s | | |

| Reason | Tests |
|---:|:--|
| Failed junit tests | hadoop.hdfs.server.federation.router.TestDisableNameservices |
| | hadoop.hdfs.rbfbalance.TestRouterDistCpProcedure |
| | hadoop.fs.contract.router.web.TestRouterWebHDFSContractCreate |

| Subsystem | Report/Notes |
|--:|:-|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3613/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3613 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 3148786fa0d2 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 66084908fc93f0fadd1766703d18d3ed15768aca |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apac

[jira] [Work logged] (HDFS-16286) Debug tool to verify the correctness of erasure coding on file

[ https://issues.apache.org/jira/browse/HDFS-16286?focusedWorklogId=674615&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674615 ]

ASF GitHub Bot logged work on HDFS-16286:
-
Author: ASF GitHub Bot
Created on: 03/Nov/21 13:52
Start Date: 03/Nov/21 13:52
Worklog Time Spent: 10m
Work Description: sodonnel commented on pull request #3593:
URL: https://github.com/apache/hadoop/pull/3593#issuecomment-959130953

Thanks, looks good. I will commit when the CI checks come back.

Issue Time Tracking
---
Worklog Id: (was: 674615)
Time Spent: 4h 10m (was: 4h)

> Debug tool to verify the correctness of erasure coding on file
> --
>
> Key: HDFS-16286
> URL: https://issues.apache.org/jira/browse/HDFS-16286
> Project: Hadoop HDFS
> Issue Type: Improvement
> Components: erasure-coding, tools
> Affects Versions: 3.3.0, 3.3.1
> Reporter: daimin
> Assignee: daimin
> Priority: Minor
> Labels: pull-request-available
> Time Spent: 4h 10m
> Remaining Estimate: 0h
>
> Block data in an erasure-coded block group may become corrupt, and in some
> cases, such as EC reconstruction, the block meta (checksum) cannot detect the
> corruption. Related issues: HDFS-14768, HDFS-15186, HDFS-15240.
> In addition to HDFS-15759, a tool is needed to check whether any block group
> of an erasure-coded file has data corruption under conditions other than EC
> reconstruction, or when the HDFS-15759 feature (validation during EC
> reconstruction) is not enabled (it is disabled by default now).

[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674664&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674664

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 14:45

Start Date: 03/Nov/21 14:45

Worklog Time Spent: 10m

Work Description: tomscut commented on a change in pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#discussion_r742015162

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/blockmanagement/DatanodeManager.java

##

@@ -511,7 +505,16 @@ private boolean isInactive(DatanodeInfo datanode) {

private boolean isSlowNode(String dnUuid) {

return avoidSlowDataNodesForRead && slowNodesUuidSet.contains(dnUuid);

}

-

+

+ public void setAvoidSlowDataNodesForReadEnabled(boolean enable) {

Review comment:

Thanks @haiyang1987 for your comment. I think the current logic is fine.

I mean that we call ```startSlowPeerCollector``` when ```excludeSlowNodesEnabled```
is set to true, and ```stopSlowPeerCollector``` when ```excludeSlowNodesEnabled```
is set to false. There is no extra overhead. What do you think?

Issue Time Tracking

---

Worklog Id: (was: 674664)

Time Spent: 5h 10m (was: 5h)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 5h 10m

> Remaining Estimate: 0h

>

> 1. Make dfs.namenode.avoid.read.slow.datanode reconfigurable, to allow a rapid
> rollback in case the feature
> [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076] causes unexpected
> problems in a production environment.
> 2. Control DatanodeManager#startSlowPeerCollector with the parameter
> 'dfs.datanode.peer.stats.enabled'.

[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674665&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674665

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 14:46

Start Date: 03/Nov/21 14:46

Worklog Time Spent: 10m

Work Description: tomscut commented on a change in pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#discussion_r742015162

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/blockmanagement/DatanodeManager.java

##

@@ -511,7 +505,16 @@ private boolean isInactive(DatanodeInfo datanode) {

private boolean isSlowNode(String dnUuid) {

return avoidSlowDataNodesForRead && slowNodesUuidSet.contains(dnUuid);

}

-

+

+ public void setAvoidSlowDataNodesForReadEnabled(boolean enable) {

Review comment:

Thanks @haiyang1987 for your comment. I think the current logic is fine.

I mean that we call ```startSlowPeerCollector``` only when ```excludeSlowNodesEnabled```
is set to true, and ```stopSlowPeerCollector``` when ```excludeSlowNodesEnabled```
is set to false. There is no extra overhead. What do you think?

Issue Time Tracking

---

Worklog Id: (was: 674665)

Time Spent: 5h 20m (was: 5h 10m)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 5h 20m

> Remaining Estimate: 0h

>

> 1. Make dfs.namenode.avoid.read.slow.datanode reconfigurable, to allow a rapid
> rollback in case the feature
> [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076] causes unexpected
> problems in a production environment.
> 2. Control DatanodeManager#startSlowPeerCollector with the parameter
> 'dfs.datanode.peer.stats.enabled'.

[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674666&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674666

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 14:47

Start Date: 03/Nov/21 14:47

Worklog Time Spent: 10m

Work Description: tomscut commented on a change in pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#discussion_r742015162

##

File path:

hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/blockmanagement/DatanodeManager.java

##

@@ -511,7 +505,16 @@ private boolean isInactive(DatanodeInfo datanode) {

private boolean isSlowNode(String dnUuid) {

return avoidSlowDataNodesForRead && slowNodesUuidSet.contains(dnUuid);

}

-

+

+ public void setAvoidSlowDataNodesForReadEnabled(boolean enable) {

Review comment:

Thanks @haiyang1987 for your comment. I think the current logic is fine.

I mean that we call `startSlowPeerCollector` only when `excludeSlowNodesEnabled`
is set to `true`, and `stopSlowPeerCollector` when `excludeSlowNodesEnabled` is
set to `false`. There is no extra overhead. What do you think?
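The pattern discussed here, starting a background collector only while a reconfigurable flag is on and stopping it when the flag is turned off, can be sketched as follows. The class name, method names, and the 5-second interval are hypothetical and do not mirror DatanodeManager; the sketch only shows that toggling is idempotent and that no collector is scheduled while the feature is off.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/**
 * Hypothetical sketch (not the DatanodeManager API): a reconfigurable flag
 * that schedules a background collector only while it is enabled.
 */
class SlowPeerToggle {
  private ScheduledExecutorService collector; // null while the feature is off
  private volatile boolean enabled;

  /** Idempotent: repeated calls with the same value change nothing. */
  synchronized void setEnabled(boolean enable) {
    if (enable && collector == null) {
      collector = Executors.newSingleThreadScheduledExecutor();
      // The 5-second interval is an arbitrary placeholder.
      collector.scheduleWithFixedDelay(this::collect, 0, 5, TimeUnit.SECONDS);
    } else if (!enable && collector != null) {
      collector.shutdownNow(); // cancel the periodic task and interrupt its worker
      collector = null;
    }
    enabled = enable;
  }

  boolean isEnabled() {
    return enabled;
  }

  synchronized boolean isCollectorRunning() {
    return collector != null;
  }

  private void collect() {
    // Placeholder for gathering slow-peer reports.
  }

  public static void main(String[] args) {
    SlowPeerToggle toggle = new SlowPeerToggle();
    toggle.setEnabled(true);
    System.out.println(toggle.isCollectorRunning()); // true
    toggle.setEnabled(false);
    System.out.println(toggle.isCollectorRunning()); // false
  }
}
```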

Issue Time Tracking

---

Worklog Id: (was: 674666)

Time Spent: 5.5h (was: 5h 20m)

> Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

> -

>

> Key: HDFS-16287

> URL: https://issues.apache.org/jira/browse/HDFS-16287

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Haiyang Hu

>Assignee: Haiyang Hu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 5.5h

> Remaining Estimate: 0h

>

> 1. Make dfs.namenode.avoid.read.slow.datanode reconfigurable, to allow a rapid
> rollback in case the feature
> [HDFS-16076|https://issues.apache.org/jira/browse/HDFS-16076] causes unexpected
> problems in a production environment.
> 2. Control DatanodeManager#startSlowPeerCollector with the parameter
> 'dfs.datanode.peer.stats.enabled'.

[jira] [Work logged] (HDFS-16291) Make the comment of INode#ReclaimContext more standardized

[

https://issues.apache.org/jira/browse/HDFS-16291?focusedWorklogId=674672&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674672

]

ASF GitHub Bot logged work on HDFS-16291:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 14:55

Start Date: 03/Nov/21 14:55

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3602:

URL: https://github.com/apache/hadoop/pull/3602#issuecomment-959335456

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 56s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 2s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 35m 13s | | trunk passed |
| +1 :green_heart: | compile | 1m 22s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 15s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 2s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 22s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 57s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 26s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 15s | | trunk passed |
| +1 :green_heart: | shadedclient | 25m 3s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 15s | | the patch passed |
| +1 :green_heart: | compile | 1m 17s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 17s | | the patch passed |
| +1 :green_heart: | compile | 1m 10s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 10s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 54s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 14s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 47s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 17s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 19s | | the patch passed |
| +1 :green_heart: | shadedclient | 26m 58s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| -1 :x: | unit | 348m 51s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3602/2/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 39s | | The patch does not generate ASF License warnings. |
| | | 457m 26s | | |

| Reason | Tests |
|---:|:--|
| Failed junit tests | hadoop.hdfs.TestHDFSFileSystemContract |

| Subsystem | Report/Notes |
|--:|:-|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3602/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3602 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 5d5d26c42b34 4.15.0-147-generic #151-Ubuntu SMP Fri Jun 18 19:21:19 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 673f55d0883ee7bf09e70202f14d4e334adc3cc5 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-360

[jira] [Work logged] (HDFS-16287) Support to make dfs.namenode.avoid.read.slow.datanode reconfigurable

[

https://issues.apache.org/jira/browse/HDFS-16287?focusedWorklogId=674736&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674736

]

ASF GitHub Bot logged work on HDFS-16287:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 16:28

Start Date: 03/Nov/21 16:28

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3596:

URL: https://github.com/apache/hadoop/pull/3596#issuecomment-959651079

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 24s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 36m 58s | | trunk passed |
| +1 :green_heart: | compile | 1m 35s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 22s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 4s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 33s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 3s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 28s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 41s | | trunk passed |
| +1 :green_heart: | shadedclient | 25m 39s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 27s | | the patch passed |
| +1 :green_heart: | compile | 1m 28s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 28s | | the patch passed |
| +1 :green_heart: | compile | 1m 18s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 18s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 57s | | the patch passed |
| +1 :green_heart: | mvnsite | 1m 25s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 54s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 24s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 44s | | the patch passed |
| +1 :green_heart: | shadedclient | 25m 53s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| -1 :x: | unit | 374m 11s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3596/4/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 43s | | The patch does not generate ASF License warnings. |
| | | 486m 5s | | |

| Reason | Tests |
|---:|:--|
| Failed junit tests | hadoop.hdfs.TestHDFSFileSystemContract |

| Subsystem | Report/Notes |
|--:|:-|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3596/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3596 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 1fb2ee0e949b 4.15.0-143-generic #147-Ubuntu SMP Wed Apr 14 16:10:11 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 2ec82e1c420789afb326f4ebb451522a8a4e2358 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3596/4/testReport/ |
| Max. process+thread count | 2022 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdf

[jira] [Work logged] (HDFS-16296) RBF: RouterRpcFairnessPolicyController add denied permits for each nameservice

[

https://issues.apache.org/jira/browse/HDFS-16296?focusedWorklogId=674814&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674814

]

ASF GitHub Bot logged work on HDFS-16296:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 17:52

Start Date: 03/Nov/21 17:52

Worklog Time Spent: 10m

Work Description: goiri commented on a change in pull request #3613:

URL: https://github.com/apache/hadoop/pull/3613#discussion_r742196019

##

File path:

hadoop-hdfs-project/hadoop-hdfs-rbf/src/test/java/org/apache/hadoop/hdfs/server/federation/fairness/TestRouterHandlersFairness.java

##

@@ -208,4 +212,15 @@ private void invokeConcurrent(ClientProtocol routerProto,

String clientName)

routerProto.renewLease(clientName);

}

+ private int getTotalRejectedPermits(RouterContext routerContext) {

+int totalRejectedPermits = 0;

+for (String ns : cluster.getNameservices()) {

+ totalRejectedPermits += routerContext.getRouter().getRpcServer()

Review comment:

We may want to extract:

routerContext.getRouter().getRpcServer().getRPCClient()

Issue Time Tracking

---

Worklog Id: (was: 674814)

Time Spent: 1h (was: 50m)

> RBF: RouterRpcFairnessPolicyController add denied permits for each nameservice

> --

>

> Key: HDFS-16296

> URL: https://issues.apache.org/jira/browse/HDFS-16296

> Project: Hadoop HDFS

> Issue Type: Improvement

>Reporter: Janus Chow

>Assignee: Janus Chow

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h

> Remaining Estimate: 0h

>

> Currently, RouterRpcFairnessPolicyController has a metric,
> "getProxyOpPermitRejected", that shows the total number of invocations
> rejected due to a lack of permits.
> This ticket adds the metric for each nameservice to give a better view
> of the load of each nameservice.
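A per-nameservice rejection metric like the one proposed here can be sketched with one semaphore and one counter per nameservice: a caller tries to take a permit without blocking, and each failure increments that nameservice's counter. This is a simplified, hypothetical model with made-up names, not the RouterRpcFairnessPolicyController code; the real controller may, for example, wait with a timeout before rejecting.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.LongAdder;

/**
 * Hypothetical sketch: handler permits per nameservice, plus a
 * per-nameservice count of calls rejected because no permit was free.
 */
class PerNsPermits {
  private final Map<String, Semaphore> permits = new ConcurrentHashMap<>();
  private final Map<String, LongAdder> rejected = new ConcurrentHashMap<>();

  void addNameservice(String nsId, int maxPermits) {
    permits.put(nsId, new Semaphore(maxPermits));
    rejected.put(nsId, new LongAdder());
  }

  /** Tries to take a permit without blocking; counts a rejection on failure. */
  boolean acquirePermit(String nsId) {
    boolean acquired = permits.get(nsId).tryAcquire();
    if (!acquired) {
      rejected.get(nsId).increment();
    }
    return acquired;
  }

  void releasePermit(String nsId) {
    permits.get(nsId).release();
  }

  long getRejectedPermits(String nsId) {
    return rejected.get(nsId).sum();
  }

  public static void main(String[] args) {
    PerNsPermits p = new PerNsPermits();
    p.addNameservice("ns0", 1);
    p.addNameservice("ns1", 1);
    p.acquirePermit("ns0"); // succeeds
    p.acquirePermit("ns0"); // rejected: ns0 has no free permit
    System.out.println(p.getRejectedPermits("ns0")); // 1
    System.out.println(p.getRejectedPermits("ns1")); // 0
  }
}
```

Keeping a separate counter per nameservice is what lets an operator see which downstream nameservice is saturating its share of router handlers, rather than only a router-wide total.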

[jira] [Work logged] (HDFS-16273) RBF: RouterRpcFairnessPolicyController add availableHandleOnPerNs metrics

[

https://issues.apache.org/jira/browse/HDFS-16273?focusedWorklogId=674819&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674819

]

ASF GitHub Bot logged work on HDFS-16273:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 17:55

Start Date: 03/Nov/21 17:55

Worklog Time Spent: 10m

Work Description: goiri commented on a change in pull request #3553:

URL: https://github.com/apache/hadoop/pull/3553#discussion_r742198185

##

File path:

hadoop-hdfs-project/hadoop-hdfs-rbf/src/main/java/org/apache/hadoop/hdfs/server/federation/fairness/NoRouterRpcFairnessPolicyController.java

##

@@ -46,4 +46,9 @@ public void releasePermit(String nsId) {

public void shutdown() {

// Nothing for now.

}

+

+ @Override

+ public String getAvailableHandlerOnPerNs(){

+return "N/A";

Review comment:

Should we test for this?

##

File path:

hadoop-hdfs-project/hadoop-hdfs-rbf/src/main/java/org/apache/hadoop/hdfs/server/federation/fairness/AbstractRouterRpcFairnessPolicyController.java

##

@@ -75,4 +77,17 @@ protected void insertNameServiceWithPermits(String nsId, int

maxPermits) {

protected int getAvailablePermits(String nsId) {

return this.permits.get(nsId).availablePermits();

}

+

+ @Override

+ public String getAvailableHandlerOnPerNs() {

+JSONObject json = new JSONObject();

+for (Map.Entry entry : permits.entrySet()) {

+ try {

+json.put(entry.getKey(), entry.getValue().availablePermits());

Review comment:

Let's extract entry.getKey() and entry.getValue() into local variables with
descriptive names.
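The suggestion amounts to giving the map entry's key and value descriptive local names inside the loop. A stdlib-only sketch of such a method follows; unlike the patch, which builds the result with a `JSONObject`, it assembles the JSON string by hand to stay self-contained, sorts the nameservices for stable output, and uses a hypothetical class name.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.Semaphore;

/** Hypothetical sketch of a per-nameservice available-permits report. */
class AvailablePermitsReport {

  /** Renders {"nsId":availablePermits,...} with nameservices in sorted order. */
  static String availableHandlerOnPerNs(Map<String, Semaphore> permits) {
    StringBuilder json = new StringBuilder("{");
    for (Map.Entry<String, Semaphore> entry : new TreeMap<>(permits).entrySet()) {
      String nsId = entry.getKey();
      Semaphore nsPermits = entry.getValue();
      if (json.length() > 1) {
        json.append(',');
      }
      // Assumes nameservice IDs contain no characters that need JSON escaping.
      json.append('"').append(nsId).append("\":").append(nsPermits.availablePermits());
    }
    return json.append('}').toString();
  }

  public static void main(String[] args) {
    Map<String, Semaphore> permits = new TreeMap<>();
    permits.put("ns1", new Semaphore(5));
    permits.put("ns0", new Semaphore(10));
    System.out.println(availableHandlerOnPerNs(permits)); // {"ns0":10,"ns1":5}
  }
}
```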

Issue Time Tracking

---

Worklog Id: (was: 674819)

Time Spent: 1h 10m (was: 1h)

> RBF: RouterRpcFairnessPolicyController add availableHandleOnPerNs metrics

> -

>

> Key: HDFS-16273

> URL: https://issues.apache.org/jira/browse/HDFS-16273

> Project: Hadoop HDFS

> Issue Type: Improvement

> Components: rbf

>Affects Versions: 3.4.0

>Reporter: Xiangyi Zhu

>Assignee: Xiangyi Zhu

>Priority: Major

> Labels: pull-request-available

> Time Spent: 1h 10m

> Remaining Estimate: 0h

>

> Add the availableHandlerOnPerNs metric to monitor whether the number of
> handlers configured for each NS is reasonable when using
> RouterRpcFairnessPolicyController.

[jira] [Work logged] (HDFS-16283) RBF: improve renewLease() to call only a specific NameNode rather than make fan-out calls

[ https://issues.apache.org/jira/browse/HDFS-16283?focusedWorklogId=674822&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674822 ]

ASF GitHub Bot logged work on HDFS-16283:

-

Author: ASF GitHub Bot
Created on: 03/Nov/21 17:56
Start Date: 03/Nov/21 17:56
Worklog Time Spent: 10m

Work Description: goiri commented on a change in pull request #3595:
URL: https://github.com/apache/hadoop/pull/3595#discussion_r742199623

##
File path:
hadoop-hdfs-project/hadoop-hdfs-client/src/main/java/org/apache/hadoop/hdfs/protocol/ClientProtocol.java
##
@@ -765,6 +765,14 @@ BatchedDirectoryListing getBatchedListing(
   @Idempotent
   void renewLease(String clientName) throws IOException;
+  /**
+   * The functionality is the same as renewLease(clientName). This is to support
+   * router based FileSystem to newLease against a specific target FileSystem instead
+   * of all the target FileSystems in each call.
+   */
+  @Idempotent
+  void renewLease(String clientName, String nsId) throws IOException;

Review comment:

That's a good point. ClientProtocol shouldn't care about subclusters. The whole abstraction is based on paths and that would make more sense.

Issue Time Tracking
---
Worklog Id: (was: 674822)
Time Spent: 1h 50m (was: 1h 40m)

> RBF: improve renewLease() to call only a specific NameNode rather than make
> fan-out calls
> -
>
> Key: HDFS-16283
> URL: https://issues.apache.org/jira/browse/HDFS-16283
> Project: Hadoop HDFS
> Issue Type: Sub-task
> Components: rbf
> Reporter: Aihua Xu
> Assignee: Aihua Xu
> Priority: Major
> Labels: pull-request-available
> Attachments: RBF_ improve renewLease() to call only a specific
> NameNode rather than make fan-out calls.pdf
>
> Time Spent: 1h 50m
> Remaining Estimate: 0h
>
> Currently, renewLease() against a router fans out to all the NameNodes.
> Because renewLease() is called so frequently, if one of the NameNodes is
> slow, the router queues eventually fill up with renewLease() calls and the
> router degrades.
> We will make a change on the client side to keep track of the NameNode ID in
> addition to the current fileId, so routers know which NameNodes the client
> is renewing its lease against.
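The client-side change in the description can be sketched as a map from fileId to nameservice ID, from which the set of nameservices to renew against is derived. This is a hypothetical illustration: `LeaseTargets` and its methods are invented, nameservice IDs stand in for the "NameNode ID" of the description, and it reflects neither the actual patch nor the path-based alternative raised in the review.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/**
 * Hypothetical client-side bookkeeping: remember which nameservice each file
 * under construction belongs to, so a lease renewal can target only those
 * nameservices instead of fanning out to every NameNode.
 */
class LeaseTargets {
  private final Map<Long, String> nsIdByFileId = new HashMap<>();

  /** Record that fileId is open for write in nameservice nsId. */
  synchronized void beginFileLease(long fileId, String nsId) {
    nsIdByFileId.put(fileId, nsId);
  }

  /** Forget fileId once the file is closed. */
  synchronized void endFileLease(long fileId) {
    nsIdByFileId.remove(fileId);
  }

  /** Distinct nameservices that still need renewLease(); sorted for readability. */
  synchronized Set<String> namespacesToRenew() {
    return new TreeSet<>(nsIdByFileId.values());
  }
}

class LeaseTargetsDemo {
  public static void main(String[] args) {
    LeaseTargets targets = new LeaseTargets();
    targets.beginFileLease(1001L, "ns0");
    targets.beginFileLease(1002L, "ns0");
    targets.beginFileLease(2001L, "ns1");
    System.out.println(targets.namespacesToRenew()); // [ns0, ns1]
    targets.endFileLease(2001L);
    System.out.println(targets.namespacesToRenew()); // [ns0]
  }
}
```

With this bookkeeping, a client holding leases on files in two of many nameservices would issue two targeted renewals rather than one call that the router fans out everywhere.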

[jira] [Work logged] (HDFS-16296) RBF: RouterRpcFairnessPolicyController add denied permits for each nameservice

[ https://issues.apache.org/jira/browse/HDFS-16296?focusedWorklogId=674823&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674823 ]

ASF GitHub Bot logged work on HDFS-16296:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 17:57

Start Date: 03/Nov/21 17:57

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3613:

URL: https://github.com/apache/hadoop/pull/3613#issuecomment-959782759

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 4s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 31m 55s | | trunk passed |
| +1 :green_heart: | compile | 0m 43s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 0m 40s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 0m 29s | | trunk passed |
| +1 :green_heart: | mvnsite | 0m 44s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 44s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 0m 56s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 1m 18s | | trunk passed |
| +1 :green_heart: | shadedclient | 20m 0s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 0m 35s | | the patch passed |
| +1 :green_heart: | compile | 0m 35s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 0m 35s | | the patch passed |
| +1 :green_heart: | compile | 0m 30s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 0m 30s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 20s | | the patch passed |
| +1 :green_heart: | mvnsite | 0m 33s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 32s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 0m 47s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 1m 17s | | the patch passed |
| +1 :green_heart: | shadedclient | 19m 40s | | patch has no errors when building and testing our client artifacts. |
| | | | | _ Other Tests _ |
| -1 :x: | unit | 34m 1s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs-rbf.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3613/3/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs-rbf.txt) | hadoop-hdfs-rbf in the patch passed. |
| +1 :green_heart: | asflicense | 0m 35s | | The patch does not generate ASF License warnings. |
| | | 119m 33s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.rbfbalance.TestRouterDistCpProcedure |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3613/3/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3613 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux fe85f7d25bf3 4.15.0-112-generic #113-Ubuntu SMP Thu Jul 9 23:41:39 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 534e6fa5fd6b9a8509aaa022ad0c6ba440215ffb |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3613/3/testReport/ |
| Max. process+thread count | 2714 (vs. ulimit of 5500) |
| modules | C: hadoop-hd

[jira] [Work logged] (HDFS-16286) Debug tool to verify the correctness of erasure coding on file

[ https://issues.apache.org/jira/browse/HDFS-16286?focusedWorklogId=674870&page=com.atlassian.jira.plugin.system.issuetabpanels:worklog-tabpanel#worklog-674870 ]

ASF GitHub Bot logged work on HDFS-16286:

-

Author: ASF GitHub Bot

Created on: 03/Nov/21 19:18

Start Date: 03/Nov/21 19:18

Worklog Time Spent: 10m

Work Description: hadoop-yetus commented on pull request #3593:

URL: https://github.com/apache/hadoop/pull/3593#issuecomment-959845514

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 1m 2s | | Docker mode activated. |
| | | | | _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +0 :ok: | markdownlint | 0m 0s | | markdownlint was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
| | | | | _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 34m 29s | | trunk passed |
| +1 :green_heart: | compile | 1m 23s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 17s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 0m 58s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 23s | | trunk passed |
| +1 :green_heart: | javadoc | 0m 57s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 26s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 16s | | trunk passed |
| +1 :green_heart: | shadedclient | 24m 37s | | branch has no errors when building and testing our client artifacts. |
| | | | | _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 14s | | the patch passed |
| +1 :green_heart: | compile | 1m 21s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 21s | | the patch passed |
| +1 :green_heart: | compile | 1m 11s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 11s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |