[jira] [Assigned] (FLINK-33064) Improve the error message when the lookup source is used as the scan source

[ https://issues.apache.org/jira/browse/FLINK-33064?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Shengkai Fang reassigned FLINK-33064: - Assignee: Yunhong Zheng > Improve the error message when the lookup source is used as the scan source > --- > > Key: FLINK-33064 > URL: https://issues.apache.org/jira/browse/FLINK-33064 > Project: Flink > Issue Type: Improvement > Components: Table SQL / Planner >Affects Versions: 1.18.0 >Reporter: Yunhong Zheng >Assignee: Yunhong Zheng >Priority: Major > Labels: pull-request-available > Fix For: 1.19.0 > > > Improve the error message when the lookup source is used as the scan source. > Currently, if we use a source which only implement LookupTableSource but not > implement ScanTableSource, as a scan source, it cannot get a property plan > and give a ' > Cannot generate a valid execution plan for the given query' which can be > improved. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (FLINK-33064) Improve the error message when the lookup source is used as the scan source

[ https://issues.apache.org/jira/browse/FLINK-33064?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764498#comment-17764498 ] Shengkai Fang commented on FLINK-33064: --- Merged into master: be509e6d67471d886e58d3ddea6ddd3627a191a8 > Improve the error message when the lookup source is used as the scan source > --- > > Key: FLINK-33064 > URL: https://issues.apache.org/jira/browse/FLINK-33064 > Project: Flink > Issue Type: Improvement > Components: Table SQL / Planner >Affects Versions: 1.18.0 >Reporter: Yunhong Zheng >Assignee: Yunhong Zheng >Priority: Major > Labels: pull-request-available > Fix For: 1.19.0 > > > Improve the error message when the lookup source is used as the scan source. > Currently, if we use a source which only implement LookupTableSource but not > implement ScanTableSource, as a scan source, it cannot get a property plan > and give a ' > Cannot generate a valid execution plan for the given query' which can be > improved. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Closed] (FLINK-33064) Improve the error message when the lookup source is used as the scan source

[ https://issues.apache.org/jira/browse/FLINK-33064?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Shengkai Fang closed FLINK-33064. - Resolution: Fixed > Improve the error message when the lookup source is used as the scan source > --- > > Key: FLINK-33064 > URL: https://issues.apache.org/jira/browse/FLINK-33064 > Project: Flink > Issue Type: Improvement > Components: Table SQL / Planner >Affects Versions: 1.18.0 >Reporter: Yunhong Zheng >Assignee: Yunhong Zheng >Priority: Major > Labels: pull-request-available > Fix For: 1.19.0 > > > Improve the error message when the lookup source is used as the scan source. > Currently, if we use a source which only implement LookupTableSource but not > implement ScanTableSource, as a scan source, it cannot get a property plan > and give a ' > Cannot generate a valid execution plan for the given query' which can be > improved. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [flink] reswqa commented on a diff in pull request #23404: [FLINK-33076][network] Reduce serialization overhead of broadcast emit from ChannelSelectorRecordWriter.

reswqa commented on code in PR #23404:

URL: https://github.com/apache/flink/pull/23404#discussion_r1324007518

##

flink-runtime/src/test/java/org/apache/flink/runtime/io/network/api/writer/BroadcastRecordWriterTest.java:

##

@@ -132,35 +132,34 @@ public void testRandomEmitAndBufferRecycling() throws

Exception {

List buffers =

Arrays.asList(bufferPool.requestBuffer(),

bufferPool.requestBuffer());

buffers.forEach(Buffer::recycleBuffer);

-assertEquals(3, bufferPool.getNumberOfAvailableMemorySegments());

+

assertThat(bufferPool.getNumberOfAvailableMemorySegments()).isEqualTo(3);

// fill first buffer

writer.broadcastEmit(new IntType(1));

writer.broadcastEmit(new IntType(2));

-assertEquals(2, bufferPool.getNumberOfAvailableMemorySegments());

+

assertThat(bufferPool.getNumberOfAvailableMemorySegments()).isEqualTo(2);

// simulate consumption of first buffer consumer; this should not free

buffers

-assertEquals(1, partition.getNumberOfQueuedBuffers(0));

+assertThat(partition.getNumberOfQueuedBuffers(0)).isEqualTo(1);

Review Comment:

Yes, good catch!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (FLINK-33051) GlobalFailureHandler interface should be retired in favor of LabeledGlobalFailureHandler

[ https://issues.apache.org/jira/browse/FLINK-33051?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764496#comment-17764496 ] Panagiotis Garefalakis commented on FLINK-33051: Hey [~wangm92] – I already have a POC here but feel free to pick any of the remaining tickets. > GlobalFailureHandler interface should be retired in favor of > LabeledGlobalFailureHandler > > > Key: FLINK-33051 > URL: https://issues.apache.org/jira/browse/FLINK-33051 > Project: Flink > Issue Type: Sub-task > Components: Runtime / Coordination >Reporter: Panagiotis Garefalakis >Priority: Minor > > FLIP-304 introduced `LabeledGlobalFailureHandler` interface that is an > extension of `GlobalFailureHandler` interface. The later can thus be removed > in the future to avoid the existence of interfaces with duplicate functions. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [flink] huwh commented on a diff in pull request #23399: [FLINK-33061][docs] Translate failure-enricher documentation to Chinese

huwh commented on code in PR #23399:

URL: https://github.com/apache/flink/pull/23399#discussion_r1323974700

##

docs/content/docs/deployment/advanced/failure_enrichers.md:

##

@@ -42,7 +42,7 @@ To implement a custom FailureEnricher plugin, you need to:

Then, create a jar which includes your `FailureEnricher`,

`FailureEnricherFactory`, `META-INF/services/` and all external dependencies.

Make a directory in `plugins/` of your Flink distribution with an arbitrary

name, e.g. "failure-enrichment", and put the jar into this directory.

-See [Flink Plugin]({% link deployment/filesystems/plugins.md %}) for more

details.

+See [Flink Plugin]({{< ref "docs/deployment/filesystems/plugins" >}}) for more

details.

Review Comment:

I haven’t reviewed it in detail yet. Can you separate this fix change into

an independent commit? This is unrelated to translation work.

##

docs/content/docs/deployment/advanced/failure_enrichers.md:

##

@@ -42,7 +42,7 @@ To implement a custom FailureEnricher plugin, you need to:

Then, create a jar which includes your `FailureEnricher`,

`FailureEnricherFactory`, `META-INF/services/` and all external dependencies.

Make a directory in `plugins/` of your Flink distribution with an arbitrary

name, e.g. "failure-enrichment", and put the jar into this directory.

-See [Flink Plugin]({% link deployment/filesystems/plugins.md %}) for more

details.

+See [Flink Plugin]({{< ref "docs/deployment/filesystems/plugins" >}}) for more

details.

Review Comment:

I haven’t reviewed it in detail yet. Can you separate this fix change into

an independent commit? This is unrelated to translation work.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #23406: [FLINK-32884] [flink-clients] PyFlink remote execution should support URLs with paths and https scheme

flinkbot commented on PR #23406: URL: https://github.com/apache/flink/pull/23406#issuecomment-1716944631 ## CI report: * 291433141e0c930c751c3e990a159b1f1ab9823c UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-32884) PyFlink remote execution should support URLs with paths and https scheme

[ https://issues.apache.org/jira/browse/FLINK-32884?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-32884: --- Labels: pull-request-available (was: ) > PyFlink remote execution should support URLs with paths and https scheme > > > Key: FLINK-32884 > URL: https://issues.apache.org/jira/browse/FLINK-32884 > Project: Flink > Issue Type: New Feature > Components: Client / Job Submission, Runtime / REST >Affects Versions: 1.17.1 >Reporter: Elkhan Dadashov >Assignee: Elkhan Dadashov >Priority: Major > Labels: pull-request-available > Fix For: 1.19.0 > > > Currently, the `SUBMIT_ARGS=remote -m http://:` format. For > local execution it works fine `SUBMIT_ARGS=remote -m http://localhost:8081/`, > but it does not support the placement of the JobManager behind a proxy or > using an Ingress for routing to a specific Flink cluster based on the URL > path. In the current scenario, it expects JobManager to access PyFlink jobs > at `http://:/v1/jobs` endpoint. Mapping to a non-root > location, > `https://:/flink-clusters/namespace/flink_job_deployment/v1/jobs` > is not supported. > This will use changes from > [FLINK-32885](https://issues.apache.org/jira/browse/FLINK-32885)(https://issues.apache.org/jira/browse/FLINK-32885) > Since RestClusterClient talks to the JobManager via its REST endpoint, the > right format for `SUBMIT_ARGS` is a URL with a path (also support for https > scheme). -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [flink] elkhand opened a new pull request, #23406: [FLINK-32884] [flink-clients] PyFlink remote execution should support URLs with paths and https scheme

elkhand opened a new pull request, #23406: URL: https://github.com/apache/flink/pull/23406 [FLINK-32884](https://issues.apache.org/jira/browse/FLINK-32884) PyFlink remote execution should support URLs with paths and https scheme ## What is the purpose of the change Currently, the `SUBMIT_ARGS=remote -m http://:` format. For local execution it works fine `SUBMIT_ARGS=remote -m http://localhost:8081/`, but it does not support the placement of the JobManager behind a proxy or using an Ingress for routing to a specific Flink cluster based on the URL path. In the current scenario, it expects JobManager to access PyFlink jobs at `http://:/v1/jobs` endpoint. Mapping to a non-root location, `https://:/flink-clusters/namespace/flink_job_deployment/v1/jobs` is not supported. This will use changes from [FLINK-32885](https://issues.apache.org/jira/browse/FLINK-32885)(https://issues.apache.org/jira/browse/FLINK-32885) Since RestClusterClient talks to the JobManager via its REST endpoint, the right format for `SUBMIT_ARGS` is a URL with a path (also support for https scheme). ## Brief change log - Added 2 new options into RestOptions: RestOptions.PATH and RestOptions.PROTOCOL - Added `https` schema support and jobManagerUrl with path support to RestClusterClient - Added customHttpHeaders support to RestClusterClient (for example setting auth token from env variable) - Updated `NetUtils.validateHostPortString()` to handle both `http` and `https` schema for hostPort ## Verifying this change This change added tests and can be verified as follows: - Extended flink-clients/src/test/java/org/apache/flink/client/cli/DefaultCLITest.java to cover `http` and `https` schemes - Added unit test flink-core/src/test/java/org/apache/flink/util/NetUtilsTest.java to check `https` scheme and URL with path - Extended test flink-clients/src/test/java/org/apache/flink/client/program/rest/RestClusterClientTest.java to validate customHttpHeaders and jobmanagerUrl with path and `https` scheme ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): no - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no - The serializers: no - The runtime per-record code paths (performance sensitive): no - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: no - The S3 file system connector: no ## Documentation - Does this pull request introduce a new feature? no - If yes, how is the feature documented? not applicable -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink-connector-mongodb] Jiabao-Sun commented on a diff in pull request #15: [FLINK-33013][connectors/mongodb] Shade flink-connector-base into flink-sql-connector-mongodb

Jiabao-Sun commented on code in PR #15: URL: https://github.com/apache/flink-connector-mongodb/pull/15#discussion_r1323965537 ## flink-sql-connector-mongodb/pom.xml: ## @@ -60,6 +60,10 @@ under the License. *:* + org.apache.flink:flink-connector-base + org.apache.flink:flink-connector-mongodb + org.mongodb:* + org.bson:* Review Comment: Thanks @ruanhang1993 to remind me of this. The old shaded package does not include `flink-connector-base` is that has provided scope. I removed the useless includes and it works. Please help review it again when you have time. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink-connector-mongodb] Jiabao-Sun commented on a diff in pull request #15: [FLINK-33013][connectors/mongodb] Shade flink-connector-base into flink-sql-connector-mongodb

Jiabao-Sun commented on code in PR #15: URL: https://github.com/apache/flink-connector-mongodb/pull/15#discussion_r1323965389 ## flink-sql-connector-mongodb/pom.xml: ## @@ -60,6 +60,10 @@ under the License. *:* + org.apache.flink:flink-connector-base + org.apache.flink:flink-connector-mongodb + org.mongodb:* + org.bson:* Review Comment: Thanks @ruanhang1993 to remind me of this. The old shaded package does not include `flink-connector-base` is that has provided scope. I removed the useless includes and it works. Please help review it again when you have time. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (FLINK-33052) codespeed server is down

[ https://issues.apache.org/jira/browse/FLINK-33052?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764484#comment-17764484 ] Yuan Mei edited comment on FLINK-33052 at 9/13/23 4:44 AM: --- Let me see whether i can get a new machine for this purpose and re-set up the environment. was (Author: ym): Let me see whether i can get a new machine for this purpose. > codespeed server is down > > > Key: FLINK-33052 > URL: https://issues.apache.org/jira/browse/FLINK-33052 > Project: Flink > Issue Type: Bug > Components: Test Infrastructure >Reporter: Jing Ge >Assignee: Yuan Mei >Priority: Major > > No update in #flink-dev-benchmarks slack channel since 25th August. > It was a EC2 running in a legacy aws account. Currently on one knows which > account it is. > > https://apache-flink.slack.com/archives/C0471S0DFJ9/p1693932155128359 -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Updated] (FLINK-32884) PyFlink remote execution should support URLs with paths and https scheme

[ https://issues.apache.org/jira/browse/FLINK-32884?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Elkhan Dadashov updated FLINK-32884: Description: Currently, the `SUBMIT_ARGS=remote -m http://:` format. For local execution it works fine `SUBMIT_ARGS=remote -m http://localhost:8081/`, but it does not support the placement of the JobManager behind a proxy or using an Ingress for routing to a specific Flink cluster based on the URL path. In the current scenario, it expects JobManager to access PyFlink jobs at `http://:/v1/jobs` endpoint. Mapping to a non-root location, `https://:/flink-clusters/namespace/flink_job_deployment/v1/jobs` is not supported. This will use changes from [FLINK-32885](https://issues.apache.org/jira/browse/FLINK-32885)(https://issues.apache.org/jira/browse/FLINK-32885) Since RestClusterClient talks to the JobManager via its REST endpoint, the right format for `SUBMIT_ARGS` is a URL with a path (also support for https scheme). was: Currently, the `SUBMIT_ARGS=remote -m http://:` format. For local execution it works fine `SUBMIT_ARGS=remote -m [http://localhost:8081|http://localhost:8081/]`, but it does not support the placement of the JobManager befind a proxy or using an Ingress for routing to a specific Flink cluster based on the URL path. In current scenario, it expects JobManager access PyFlink jobs at `http://:/v1/jobs` endpoint. Mapping to a non-root location, `https://:/flink-clusters/namespace/flink_job_deployment/v1/jobs` is not supported. This will use changes from FLINK-32885(https://issues.apache.org/jira/browse/FLINK-32885) Since RestClusterClient talks to the JobManager via its REST endpoint, the right format for `SUBMIT_ARGS` is URL with path (also support for https scheme). > PyFlink remote execution should support URLs with paths and https scheme > > > Key: FLINK-32884 > URL: https://issues.apache.org/jira/browse/FLINK-32884 > Project: Flink > Issue Type: New Feature > Components: Client / Job Submission, Runtime / REST >Affects Versions: 1.17.1 >Reporter: Elkhan Dadashov >Assignee: Elkhan Dadashov >Priority: Major > Fix For: 1.19.0 > > > Currently, the `SUBMIT_ARGS=remote -m http://:` format. For > local execution it works fine `SUBMIT_ARGS=remote -m http://localhost:8081/`, > but it does not support the placement of the JobManager behind a proxy or > using an Ingress for routing to a specific Flink cluster based on the URL > path. In the current scenario, it expects JobManager to access PyFlink jobs > at `http://:/v1/jobs` endpoint. Mapping to a non-root > location, > `https://:/flink-clusters/namespace/flink_job_deployment/v1/jobs` > is not supported. > This will use changes from > [FLINK-32885](https://issues.apache.org/jira/browse/FLINK-32885)(https://issues.apache.org/jira/browse/FLINK-32885) > Since RestClusterClient talks to the JobManager via its REST endpoint, the > right format for `SUBMIT_ARGS` is a URL with a path (also support for https > scheme). -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (FLINK-31788) Add back Support emitValueWithRetract for TableAggregateFunction

[ https://issues.apache.org/jira/browse/FLINK-31788?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764485#comment-17764485 ] lincoln lee commented on FLINK-31788: - [~qingyue] assigned to you. > Add back Support emitValueWithRetract for TableAggregateFunction > > > Key: FLINK-31788 > URL: https://issues.apache.org/jira/browse/FLINK-31788 > Project: Flink > Issue Type: Bug > Components: Table SQL / Planner >Reporter: Feng Jin >Assignee: Jane Chan >Priority: Major > > This feature was originally implemented in the old planner: > [https://github.com/apache/flink/pull/8550/files] > However, this feature was not implemented in the new planner , the Blink > planner. > With the removal of the old planner in version 1.14 > [https://github.com/apache/flink/pull/16080] , this code was also removed. > > We should add it back. > > origin discuss link: > https://lists.apache.org/thread/rnvw8k3636dqhdttpmf1c9colbpw9svp -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Assigned] (FLINK-31788) Add back Support emitValueWithRetract for TableAggregateFunction

[ https://issues.apache.org/jira/browse/FLINK-31788?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] lincoln lee reassigned FLINK-31788: --- Assignee: Jane Chan > Add back Support emitValueWithRetract for TableAggregateFunction > > > Key: FLINK-31788 > URL: https://issues.apache.org/jira/browse/FLINK-31788 > Project: Flink > Issue Type: Bug > Components: Table SQL / Planner >Reporter: Feng Jin >Assignee: Jane Chan >Priority: Major > > This feature was originally implemented in the old planner: > [https://github.com/apache/flink/pull/8550/files] > However, this feature was not implemented in the new planner , the Blink > planner. > With the removal of the old planner in version 1.14 > [https://github.com/apache/flink/pull/16080] , this code was also removed. > > We should add it back. > > origin discuss link: > https://lists.apache.org/thread/rnvw8k3636dqhdttpmf1c9colbpw9svp -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (FLINK-33052) codespeed server is down

[ https://issues.apache.org/jira/browse/FLINK-33052?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764484#comment-17764484 ] Yuan Mei commented on FLINK-33052: -- Let me see whether i can get a new machine for this purpose. > codespeed server is down > > > Key: FLINK-33052 > URL: https://issues.apache.org/jira/browse/FLINK-33052 > Project: Flink > Issue Type: Bug > Components: Test Infrastructure >Reporter: Jing Ge >Assignee: Yuan Mei >Priority: Major > > No update in #flink-dev-benchmarks slack channel since 25th August. > It was a EC2 running in a legacy aws account. Currently on one knows which > account it is. > > https://apache-flink.slack.com/archives/C0471S0DFJ9/p1693932155128359 -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Assigned] (FLINK-33052) codespeed server is down

[ https://issues.apache.org/jira/browse/FLINK-33052?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Yuan Mei reassigned FLINK-33052: Assignee: Yuan Mei (was: Yanfei Lei) > codespeed server is down > > > Key: FLINK-33052 > URL: https://issues.apache.org/jira/browse/FLINK-33052 > Project: Flink > Issue Type: Bug > Components: Test Infrastructure >Reporter: Jing Ge >Assignee: Yuan Mei >Priority: Major > > No update in #flink-dev-benchmarks slack channel since 25th August. > It was a EC2 running in a legacy aws account. Currently on one knows which > account it is. > > https://apache-flink.slack.com/archives/C0471S0DFJ9/p1693932155128359 -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [flink] swuferhong commented on a diff in pull request #23313: [FLINK-20767][table planner] Support filter push down on nested fields

swuferhong commented on code in PR #23313:

URL: https://github.com/apache/flink/pull/23313#discussion_r1323892173

##

flink-table/flink-table-planner/src/main/scala/org/apache/flink/table/planner/plan/utils/RexNodeExtractor.scala:

##

@@ -395,9 +397,19 @@ class RexNodeToExpressionConverter(

inputNames: Array[String],

functionCatalog: FunctionCatalog,

catalogManager: CatalogManager,

-timeZone: TimeZone)

+timeZone: TimeZone,

+relDataType: Option[RelDataType] = None)

extends RexVisitor[Option[ResolvedExpression]] {

+ def this(

+ rexBuilder: RexBuilder,

+ inputNames: Array[String],

+ functionCatalog: FunctionCatalog,

+ catalogManager: CatalogManager,

+ timeZone: TimeZone) = {

+this(rexBuilder, inputNames, functionCatalog, catalogManager, timeZone,

null)

Review Comment:

There is no need to add `null`. `this(rexBuilder, inputNames,

functionCatalog, catalogManager, timeZone)` is ok.

##

flink-table/flink-table-common/src/main/java/org/apache/flink/table/expressions/NestedFieldReferenceExpression.java:

##

@@ -0,0 +1,126 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.table.expressions;

+

+import org.apache.flink.annotation.PublicEvolving;

+import org.apache.flink.table.types.DataType;

+

+import java.util.Arrays;

+import java.util.Collections;

+import java.util.List;

+import java.util.Objects;

+

+/**

+ * A reference to a nested field in an input. The reference contains.

Review Comment:

`contains.` -> `contains:`

##

flink-table/flink-table-common/src/main/java/org/apache/flink/table/expressions/NestedFieldReferenceExpression.java:

##

@@ -0,0 +1,126 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.flink.table.expressions;

+

+import org.apache.flink.annotation.PublicEvolving;

+import org.apache.flink.table.types.DataType;

+

+import java.util.Arrays;

+import java.util.Collections;

+import java.util.List;

+import java.util.Objects;

+

+/**

+ * A reference to a nested field in an input. The reference contains.

+ *

+ *

+ * nested field names to traverse from the top level column to the

nested leaf column.

+ * nested field indices to traverse from the top level column to the

nested leaf column.

+ * type

+ *

+ */

+@PublicEvolving

+public class NestedFieldReferenceExpression implements ResolvedExpression {

+

+/** Nested field names to traverse from the top level column to the nested

leaf column. */

+private final String[] fieldNames;

+

+/** Nested field index to traverse from the top level column to the nested

leaf column. */

+private final int[] fieldIndices;

+

+private final DataType dataType;

+

+public NestedFieldReferenceExpression(

+String[] fieldNames, int[] fieldIndices, DataType dataType) {

+this.fieldNames = fieldNames;

+this.fieldIndices = fieldIndices;

+this.dataType = dataType;

+}

+

+public String[] getFieldNames() {

+return fieldNames;

+}

+

+public int[] getFieldIndices() {

+return fieldIndices;

+}

+

+public String getName() {

+return String.format(

+"`%s`",

+String.join(

+".",

+Arrays.stream(fieldNames)

+.map(this::quoteIdentifier)

+

[jira] [Resolved] (FLINK-33034) Incorrect StateBackendTestBase#testGetKeysAndNamespaces

[

https://issues.apache.org/jira/browse/FLINK-33034?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Hangxiang Yu resolved FLINK-33034.

--

Fix Version/s: 1.19.0

Resolution: Fixed

merged 36b9da50da9405b5b79f0d4da9393921982ab040 into master

> Incorrect StateBackendTestBase#testGetKeysAndNamespaces

> ---

>

> Key: FLINK-33034

> URL: https://issues.apache.org/jira/browse/FLINK-33034

> Project: Flink

> Issue Type: Bug

> Components: Runtime / State Backends

>Affects Versions: 1.12.2, 1.15.0, 1.17.1

>Reporter: Dmitriy Linevich

>Assignee: Dmitriy Linevich

>Priority: Minor

> Labels: pull-request-available

> Fix For: 1.19.0

>

> Attachments: image-2023-09-05-12-51-28-203.png

>

>

> In this test first namespace 'ns1' doesn't exist in state, because creating

> ValueState is incorrect for test (When creating the 2nd value state namespace

> 'ns1' is overwritten by namespace 'ns2'). Need to fix it, to change creating

> ValueState or to change process of updating this state.

>

> If to add following code for checking count of adding namespaces to state

> [here|https://github.com/apache/flink/blob/3e6a1aab0712acec3e9fcc955a28f2598f019377/flink-runtime/src/test/java/org/apache/flink/runtime/state/StateBackendTestBase.java#L501C28-L501C28]

> {code:java}

> assertThat(keysByNamespace.size(), is(2)); {code}

> then

> !image-2023-09-05-12-51-28-203.png!

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [flink] huwh commented on a diff in pull request #23386: [FLINK-33022] Log an error when enrichers defined as part of the configuration can not be found/loaded

huwh commented on code in PR #23386:

URL: https://github.com/apache/flink/pull/23386#discussion_r1323944084

##

flink-runtime/src/test/java/org/apache/flink/runtime/failure/FailureEnricherUtilsTest.java:

##

@@ -109,6 +109,15 @@ public void testGetFailureEnrichers() {

assertThat(enrichers).hasSize(1);

// verify that the failure enricher was created and returned

assertThat(enrichers.iterator().next()).isInstanceOf(TestEnricher.class);

+

+// Valid plus Invalid Name combination

+configuration.set(

+JobManagerOptions.FAILURE_ENRICHERS_LIST,

+FailureEnricherUtilsTest.class.getName() + "," +

TestEnricher.class.getName());

+final Collection validInvalidEnrichers =

+FailureEnricherUtils.getFailureEnrichers(configuration,

createPluginManager());

+assertThat(validInvalidEnrichers).hasSize(1);

+

assertThat(enrichers.iterator().next()).isInstanceOf(TestEnricher.class);

Review Comment:

It's appreciated if you could update line 109~111.

##

flink-runtime/src/test/java/org/apache/flink/runtime/failure/FailureEnricherUtilsTest.java:

##

@@ -109,6 +109,15 @@ public void testGetFailureEnrichers() {

assertThat(enrichers).hasSize(1);

// verify that the failure enricher was created and returned

assertThat(enrichers.iterator().next()).isInstanceOf(TestEnricher.class);

+

+// Valid plus Invalid Name combination

+configuration.set(

+JobManagerOptions.FAILURE_ENRICHERS_LIST,

+FailureEnricherUtilsTest.class.getName() + "," +

TestEnricher.class.getName());

+final Collection validInvalidEnrichers =

+FailureEnricherUtils.getFailureEnrichers(configuration,

createPluginManager());

+assertThat(validInvalidEnrichers).hasSize(1);

+

assertThat(enrichers.iterator().next()).isInstanceOf(TestEnricher.class);

Review Comment:

It is recommended to verify this way to make it clearer.

```suggestion

assertThat(validInvalidEnrichers)

.satisfiesExactly(

enricher ->

assertThat(enricher).isInstanceOf(TestEnricher.class));

```

##

flink-runtime/src/main/java/org/apache/flink/runtime/failure/FailureEnricherUtils.java:

##

@@ -105,6 +105,16 @@ static Collection getFailureEnrichers(

includedEnrichers);

}

}

+includedEnrichers.removeAll(

Review Comment:

We could remove the found enricher after line 91.

And shall we change the name of `includedEnrichers` to `enrichersToLoad`.

From the perspective of naming, the `includedEnrichers` is meaning the

enrichers that user configured, and it shouldn't be modified. But the

`enrichersToLoad` means the enrichers that should be load, when some of it is

loaded, it could be removed from this set since it's not need load any more.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] masteryhx closed pull request #23355: [FLINK-33034][runtime] Correct ValueState creating for StateBackendTestBase#testGetKeysAndNamespaces

masteryhx closed pull request #23355: [FLINK-33034][runtime] Correct ValueState creating for StateBackendTestBase#testGetKeysAndNamespaces URL: https://github.com/apache/flink/pull/23355 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink-kubernetes-operator] morhidi commented on a diff in pull request #670: [FLINK-31871] Interpret Flink MemoryUnits according to the actual user input

morhidi commented on code in PR #670:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/670#discussion_r1323925122

##

flink-kubernetes-operator/src/test/java/org/apache/flink/kubernetes/operator/config/FlinkConfigBuilderTest.java:

##

@@ -478,6 +478,50 @@ public void testTaskManagerSpec() {

Double.valueOf(1),

configuration.get(KubernetesConfigOptions.TASK_MANAGER_CPU));

}

+@Test

+public void testApplyJobManagerSpecWithBiByteMemorySetting() {

Review Comment:

Could you please break up that large test case to smaller ones? You can

follow the logic in the table you put together in the description.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink-kubernetes-operator] morhidi commented on pull request #670: [FLINK-31871] Interpret Flink MemoryUnits according to the actual user input

morhidi commented on PR #670: URL: https://github.com/apache/flink-kubernetes-operator/pull/670#issuecomment-1716915082 What happens when users overwrite the configuration with standard memory parameters? Could you please handle these cases and cover with tests? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-23411) Expose Flink checkpoint details metrics

[ https://issues.apache.org/jira/browse/FLINK-23411?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Hangxiang Yu updated FLINK-23411: - Component/s: Runtime / Checkpointing > Expose Flink checkpoint details metrics > --- > > Key: FLINK-23411 > URL: https://issues.apache.org/jira/browse/FLINK-23411 > Project: Flink > Issue Type: Improvement > Components: Runtime / Checkpointing, Runtime / Metrics >Affects Versions: 1.13.1, 1.12.4 >Reporter: Jun Qin >Assignee: Hangxiang Yu >Priority: Major > Labels: pull-request-available, stale-assigned > Fix For: 1.18.0 > > > The checkpoint metrics as shown in the Flink Web UI like the > sync/async/alignment/start delay are not exposed to the metrics system. This > makes problem investigation harder when Web UI is not enabled: those numbers > can not get in the DEBUG logs. I think we should see how we can expose > metrics. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [flink-kubernetes-operator] morhidi commented on a diff in pull request #670: [FLINK-31871] Interpret Flink MemoryUnits according to the actual user input

morhidi commented on code in PR #670:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/670#discussion_r1323925122

##

flink-kubernetes-operator/src/test/java/org/apache/flink/kubernetes/operator/config/FlinkConfigBuilderTest.java:

##

@@ -478,6 +478,50 @@ public void testTaskManagerSpec() {

Double.valueOf(1),

configuration.get(KubernetesConfigOptions.TASK_MANAGER_CPU));

}

+@Test

+public void testApplyJobManagerSpecWithBiByteMemorySetting() {

Review Comment:

Could you please break up that large test case to smaller ones? You can

follow the table you put together in the description.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Commented] (FLINK-23411) Expose Flink checkpoint details metrics

[

https://issues.apache.org/jira/browse/FLINK-23411?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764477#comment-17764477

]

Hangxiang Yu commented on FLINK-23411:

--

Thanks for the quick reply.

{quote}Anyway, I think best way would be to first think through OTEL/Traces

integration, add support for that, and then add those new checkpointing metrics

from this ticket in this new model. However it's indeed much more work

(including writing and voting on a FLIP)

{quote}

Yeah, Supprting OTEL/Traces integration makes sense.

Besides some task-level metrics like this, users could also report their

own-defined operator metrics to their own distributed tracing system which may

be traced together with other jobs or systems.

I could also try to improve this when I'm free if you also think it's helpful

for users.

{quote}One thing is that in order to not bloat metric system too much, we

should implement this as an opt-in feature, hidden behind a feature toggle,

that users would have to manually enable in order to see those metrics.

{quote}

Sure, I aggree. Thanks for the advice.

> Expose Flink checkpoint details metrics

> ---

>

> Key: FLINK-23411

> URL: https://issues.apache.org/jira/browse/FLINK-23411

> Project: Flink

> Issue Type: Improvement

> Components: Runtime / Metrics

>Affects Versions: 1.13.1, 1.12.4

>Reporter: Jun Qin

>Assignee: Hangxiang Yu

>Priority: Major

> Labels: pull-request-available, stale-assigned

> Fix For: 1.18.0

>

>

> The checkpoint metrics as shown in the Flink Web UI like the

> sync/async/alignment/start delay are not exposed to the metrics system. This

> makes problem investigation harder when Web UI is not enabled: those numbers

> can not get in the DEBUG logs. I think we should see how we can expose

> metrics.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [flink-kubernetes-operator] morhidi commented on pull request #670: [FLINK-31871] Interpret Flink MemoryUnits according to the actual user input

morhidi commented on PR #670: URL: https://github.com/apache/flink-kubernetes-operator/pull/670#issuecomment-1716912377 The last column looks good to me, we're not failing on any previous values which is important for backward compatibility reasons. I'm leaning towards accepting the corrected logic, while loosing the difference between `2147483648 b` vs `20 b`, where users intention was probably was to have `20 b` anyways. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-33013) Shade flink-connector-base info flink-sql-connector-connector

[ https://issues.apache.org/jira/browse/FLINK-33013?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-33013: --- Labels: pull-request-available (was: ) > Shade flink-connector-base info flink-sql-connector-connector > - > > Key: FLINK-33013 > URL: https://issues.apache.org/jira/browse/FLINK-33013 > Project: Flink > Issue Type: Improvement > Components: Connectors / MongoDB >Affects Versions: mongodb-1.0.2 >Reporter: Jiabao Sun >Priority: Major > Labels: pull-request-available > > Shade flink-connector-base info flink-sql-connector-connector -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [flink-connector-mongodb] ruanhang1993 commented on a diff in pull request #15: [FLINK-33013][connectors/mongodb] Shade flink-connector-base into flink-sql-connector-mongodb

ruanhang1993 commented on code in PR #15: URL: https://github.com/apache/flink-connector-mongodb/pull/15#discussion_r1323913992 ## flink-sql-connector-mongodb/pom.xml: ## @@ -60,6 +60,10 @@ under the License. *:* + org.apache.flink:flink-connector-base + org.apache.flink:flink-connector-mongodb + org.mongodb:* + org.bson:* Review Comment: I think these new includes are useless as `*:*` already contains them. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] TanYuxin-tyx commented on a diff in pull request #23404: [FLINK-33076][network] Reduce serialization overhead of broadcast emit from ChannelSelectorRecordWriter.

TanYuxin-tyx commented on code in PR #23404:

URL: https://github.com/apache/flink/pull/23404#discussion_r1323910378

##

flink-runtime/src/test/java/org/apache/flink/runtime/io/network/api/writer/BroadcastRecordWriterTest.java:

##

@@ -132,35 +132,34 @@ public void testRandomEmitAndBufferRecycling() throws

Exception {

List buffers =

Arrays.asList(bufferPool.requestBuffer(),

bufferPool.requestBuffer());

buffers.forEach(Buffer::recycleBuffer);

-assertEquals(3, bufferPool.getNumberOfAvailableMemorySegments());

+

assertThat(bufferPool.getNumberOfAvailableMemorySegments()).isEqualTo(3);

// fill first buffer

writer.broadcastEmit(new IntType(1));

writer.broadcastEmit(new IntType(2));

-assertEquals(2, bufferPool.getNumberOfAvailableMemorySegments());

+

assertThat(bufferPool.getNumberOfAvailableMemorySegments()).isEqualTo(2);

// simulate consumption of first buffer consumer; this should not free

buffers

-assertEquals(1, partition.getNumberOfQueuedBuffers(0));

+assertThat(partition.getNumberOfQueuedBuffers(0)).isEqualTo(1);

Review Comment:

We can simplify this.

```suggestion

assertThat(partition.getNumberOfQueuedBuffers(0)).isOne();

```

##

flink-runtime/src/test/java/org/apache/flink/runtime/io/network/api/writer/BroadcastRecordWriterTest.java:

##

@@ -132,35 +132,34 @@ public void testRandomEmitAndBufferRecycling() throws

Exception {

List buffers =

Arrays.asList(bufferPool.requestBuffer(),

bufferPool.requestBuffer());

buffers.forEach(Buffer::recycleBuffer);

-assertEquals(3, bufferPool.getNumberOfAvailableMemorySegments());

+

assertThat(bufferPool.getNumberOfAvailableMemorySegments()).isEqualTo(3);

// fill first buffer

writer.broadcastEmit(new IntType(1));

writer.broadcastEmit(new IntType(2));

-assertEquals(2, bufferPool.getNumberOfAvailableMemorySegments());

+

assertThat(bufferPool.getNumberOfAvailableMemorySegments()).isEqualTo(2);

// simulate consumption of first buffer consumer; this should not free

buffers

-assertEquals(1, partition.getNumberOfQueuedBuffers(0));

+assertThat(partition.getNumberOfQueuedBuffers(0)).isEqualTo(1);

ResultSubpartitionView view0 =

partition.createSubpartitionView(0, new

NoOpBufferAvailablityListener());

closeConsumer(view0, 2 * recordSize);

-assertEquals(2, bufferPool.getNumberOfAvailableMemorySegments());

+

assertThat(bufferPool.getNumberOfAvailableMemorySegments()).isEqualTo(2);

// use second buffer

writer.emit(new IntType(3), 0);

-assertEquals(1, bufferPool.getNumberOfAvailableMemorySegments());

-

+

assertThat(bufferPool.getNumberOfAvailableMemorySegments()).isEqualTo(1);

// fully free first buffer

-assertEquals(1, partition.getNumberOfQueuedBuffers(1));

+assertThat(partition.getNumberOfQueuedBuffers(1)).isEqualTo(1);

Review Comment:

```suggestion

assertThat(partition.getNumberOfQueuedBuffers(1)).isOne();

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Comment Edited] (FLINK-31788) Add back Support emitValueWithRetract for TableAggregateFunction

[ https://issues.apache.org/jira/browse/FLINK-31788?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764475#comment-17764475 ] Jane Chan edited comment on FLINK-31788 at 9/13/23 3:49 AM: Hi, according to the discussion, we're on the consensus that we should support this feature, please assign the ticket to me, thanks. was (Author: qingyue): Hi, according to the discussion, we're one the consensus that we should support this feature, please assign the ticket to me, thanks. > Add back Support emitValueWithRetract for TableAggregateFunction > > > Key: FLINK-31788 > URL: https://issues.apache.org/jira/browse/FLINK-31788 > Project: Flink > Issue Type: Bug > Components: Table SQL / Planner >Reporter: Feng Jin >Priority: Major > > This feature was originally implemented in the old planner: > [https://github.com/apache/flink/pull/8550/files] > However, this feature was not implemented in the new planner , the Blink > planner. > With the removal of the old planner in version 1.14 > [https://github.com/apache/flink/pull/16080] , this code was also removed. > > We should add it back. > > origin discuss link: > https://lists.apache.org/thread/rnvw8k3636dqhdttpmf1c9colbpw9svp -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (FLINK-31788) Add back Support emitValueWithRetract for TableAggregateFunction

[ https://issues.apache.org/jira/browse/FLINK-31788?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764475#comment-17764475 ] Jane Chan commented on FLINK-31788: --- Hi, according to the discussion, we're one the consensus that we should support this feature, please assign the ticket to me, thanks. > Add back Support emitValueWithRetract for TableAggregateFunction > > > Key: FLINK-31788 > URL: https://issues.apache.org/jira/browse/FLINK-31788 > Project: Flink > Issue Type: Bug > Components: Table SQL / Planner >Reporter: Feng Jin >Priority: Major > > This feature was originally implemented in the old planner: > [https://github.com/apache/flink/pull/8550/files] > However, this feature was not implemented in the new planner , the Blink > planner. > With the removal of the old planner in version 1.14 > [https://github.com/apache/flink/pull/16080] , this code was also removed. > > We should add it back. > > origin discuss link: > https://lists.apache.org/thread/rnvw8k3636dqhdttpmf1c9colbpw9svp -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (FLINK-33065) Optimize the exception message when the program plan could not be fetched

[ https://issues.apache.org/jira/browse/FLINK-33065?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764472#comment-17764472 ] Rui Fan commented on FLINK-33065: - Merged via d5b2cdb618cd7a50818d7eaa575c0fac6aaeac9a > Optimize the exception message when the program plan could not be fetched > - > > Key: FLINK-33065 > URL: https://issues.apache.org/jira/browse/FLINK-33065 > Project: Flink > Issue Type: Improvement >Reporter: Rui Fan >Assignee: Rui Fan >Priority: Major > Labels: pull-request-available > > When the program plan could not be fetched, the root cause may be: the main > method doesn't call the `env.execute()`. > > We can optimize the message to help user find this root cause. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Resolved] (FLINK-33065) Optimize the exception message when the program plan could not be fetched

[ https://issues.apache.org/jira/browse/FLINK-33065?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] Rui Fan resolved FLINK-33065. - Fix Version/s: 1.19.0 Resolution: Fixed > Optimize the exception message when the program plan could not be fetched > - > > Key: FLINK-33065 > URL: https://issues.apache.org/jira/browse/FLINK-33065 > Project: Flink > Issue Type: Improvement >Reporter: Rui Fan >Assignee: Rui Fan >Priority: Major > Labels: pull-request-available > Fix For: 1.19.0 > > > When the program plan could not be fetched, the root cause may be: the main > method doesn't call the `env.execute()`. > > We can optimize the message to help user find this root cause. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[jira] [Commented] (FLINK-33051) GlobalFailureHandler interface should be retired in favor of LabeledGlobalFailureHandler

[ https://issues.apache.org/jira/browse/FLINK-33051?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764473#comment-17764473 ] Matt Wang commented on FLINK-33051: --- [~pgaref] Have you started this tricket? If you agree, I will be happy to contribute this. > GlobalFailureHandler interface should be retired in favor of > LabeledGlobalFailureHandler > > > Key: FLINK-33051 > URL: https://issues.apache.org/jira/browse/FLINK-33051 > Project: Flink > Issue Type: Sub-task > Components: Runtime / Coordination >Reporter: Panagiotis Garefalakis >Priority: Minor > > FLIP-304 introduced `LabeledGlobalFailureHandler` interface that is an > extension of `GlobalFailureHandler` interface. The later can thus be removed > in the future to avoid the existence of interfaces with duplicate functions. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [flink] flinkbot commented on pull request #23405: [FLINK-31895] Add End-to-end integration tests for failure labels

flinkbot commented on PR #23405: URL: https://github.com/apache/flink/pull/23405#issuecomment-1716894944 ## CI report: * 6ec84c4729ced192747cff0778d42fa69dc68a39 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] wangzzu commented on pull request #23405: [FLINK-31895] Add End-to-end integration tests for failure labels

wangzzu commented on PR #23405: URL: https://github.com/apache/flink/pull/23405#issuecomment-1716892702 @huwh @pgaref when you have time, help me review this -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-31895) End-to-end integration tests for failure labels

[ https://issues.apache.org/jira/browse/FLINK-31895?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-31895: --- Labels: pull-request-available (was: ) > End-to-end integration tests for failure labels > --- > > Key: FLINK-31895 > URL: https://issues.apache.org/jira/browse/FLINK-31895 > Project: Flink > Issue Type: Sub-task >Reporter: Panagiotis Garefalakis >Assignee: Matt Wang >Priority: Major > Labels: pull-request-available > -- This message was sent by Atlassian Jira (v8.20.10#820010)

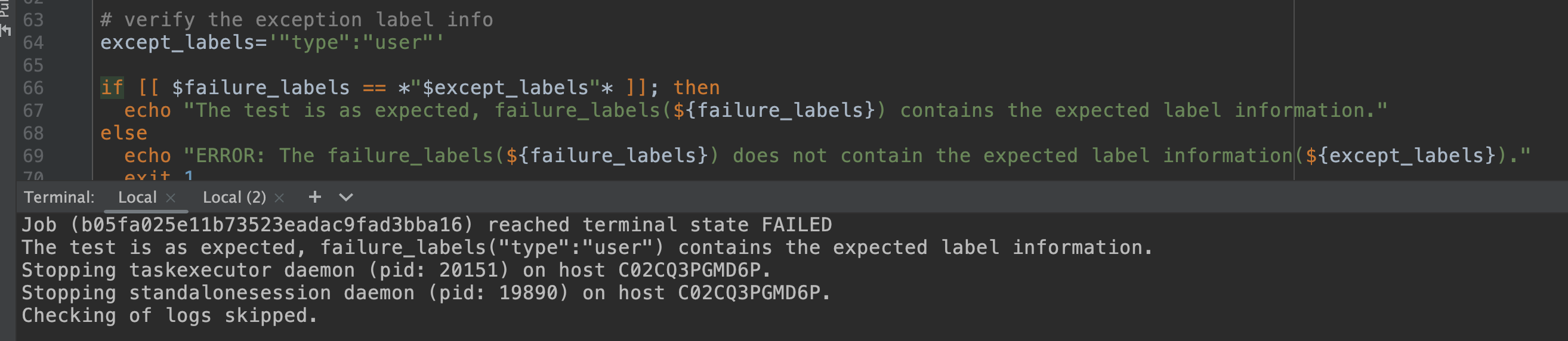

[GitHub] [flink] wangzzu opened a new pull request, #23405: [FLINK-31895] Add End-to-end integration tests for failure labels

wangzzu opened a new pull request, #23405: URL: https://github.com/apache/flink/pull/23405 ## What is the purpose of the change *(For example: This pull request makes task deployment go through the blob server, rather than through RPC. That way we avoid re-transferring them on each deployment (during recovery).)* ## Brief change log - Add an end-to-end failure enricher test ## Verifying this change - run the test in local, the test is expected.  ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): no - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no - The serializers: no - The runtime per-record code paths (performance sensitive): no - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: no - The S3 file system connector: no ## Documentation - Does this pull request introduce a new feature? no - If yes, how is the feature documented? not applicable -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-32863) Improve Flink UI's time precision from second level to millisecond level

[ https://issues.apache.org/jira/browse/FLINK-32863?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764464#comment-17764464 ] Jufang He commented on FLINK-32863: --- Hi [~guoyangze], I have created a PR([https://github.com/apache/flink/pull/23403)] for this issue, could you assign this to me? > Improve Flink UI's time precision from second level to millisecond level > > > Key: FLINK-32863 > URL: https://issues.apache.org/jira/browse/FLINK-32863 > Project: Flink > Issue Type: Sub-task > Components: Runtime / Web Frontend >Affects Versions: 1.17.1 >Reporter: Runkang He >Priority: Major > Labels: pull-request-available > > This an UI improvement for OLAP jobs. > OLAP queries are generally small queries which will finish at the seconds or > milliseconds, but currently the time precision displayed is second level and > not enough for OLAP queries. Millisecond part of time is very important for > users and developers, to see accurate time, for performance measurement and > optimization. The displayed time includes job duration, task duration, task > start time, end time and so on. > It would be nice to improve this for better OLAP user experience. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [flink] masteryhx commented on a diff in pull request #14611: [FLINK-13194][docs] Add explicit clarification about thread-safety of state

masteryhx commented on code in PR #14611: URL: https://github.com/apache/flink/pull/14611#discussion_r1323873654 ## flink-runtime/src/main/java/org/apache/flink/runtime/state/KeyedStateBackend.java: ## @@ -30,6 +30,11 @@ /** * A keyed state backend provides methods for managing keyed state. * + * Not Thread-Safe + * + * State access in keyed state backend dose not require thread safety as each task is executed by + * one thread. Current implementations (Heap/RocksDB keyed state backend) are not thread-safe. + * Review Comment: Sure, I aggree. Could you update your pr as we disscussed? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] flinkbot commented on pull request #23404: [FLINK-33076][network] Reduce serialization overhead of broadcast emit from ChannelSelectorRecordWriter.

flinkbot commented on PR #23404: URL: https://github.com/apache/flink/pull/23404#issuecomment-1716849649 ## CI report: * ae67b21f40ba17fb643acd1e5a410e37562e7119 UNKNOWN Bot commands The @flinkbot bot supports the following commands: - `@flinkbot run azure` re-run the last Azure build -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Updated] (FLINK-33076) broadcastEmit of ChannelSelectorRecordWriter should reuse the serialized record

[ https://issues.apache.org/jira/browse/FLINK-33076?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel ] ASF GitHub Bot updated FLINK-33076: --- Labels: pull-request-available (was: ) > broadcastEmit of ChannelSelectorRecordWriter should reuse the serialized > record > --- > > Key: FLINK-33076 > URL: https://issues.apache.org/jira/browse/FLINK-33076 > Project: Flink > Issue Type: Bug > Components: Runtime / Network >Reporter: Weijie Guo >Assignee: Weijie Guo >Priority: Major > Labels: pull-request-available > > ChannelSelectorRecordWriter#broadcastEmit serialize the record to ByteBuffer > but didn't use it. It will re-serialize this record per-channel. We should > allows all channels to reuse the serialized buffer. -- This message was sent by Atlassian Jira (v8.20.10#820010)

[GitHub] [flink] reswqa opened a new pull request, #23404: [FLINK-33076][network] Reduce serialization overhead of broadcast emit from ChannelSelectorRecordWriter.

reswqa opened a new pull request, #23404: URL: https://github.com/apache/flink/pull/23404 ## What is the purpose of the change *ChannelSelectorRecordWriter#broadcastEmit serialize the record to ByteBuffer but didn't use it. It will re-serialize this record per-channel. We should allows all channels to reuse the serialized buffer.* ## Brief change log - *Reduce serialization overhead of broadcast emit from ChannelSelectorRecordWriter.* ## Verifying this change This change is already covered by existing tests, such as `RecordWriterTest`*. ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): no - The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no - The serializers: no - The runtime per-record code paths (performance sensitive): no - Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: no - The S3 file system connector: no ## Documentation - Does this pull request introduce a new feature? no -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Commented] (FLINK-33066) Enable to inject environment variable from secret/configmap to operatorPod

[

https://issues.apache.org/jira/browse/FLINK-33066?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17764455#comment-17764455

]

dongwoo.kim commented on FLINK-33066:

-

Thanks [~gyfora], I have opened the pr :)

> Enable to inject environment variable from secret/configmap to operatorPod

> --

>

> Key: FLINK-33066

> URL: https://issues.apache.org/jira/browse/FLINK-33066

> Project: Flink

> Issue Type: Improvement

> Components: Kubernetes Operator

>Reporter: dongwoo.kim

>Assignee: dongwoo.kim

>Priority: Minor

> Labels: pull-request-available

>

> Hello, I've been working with the Flink Kubernetes operator and noticed that

> the {{operatorPod.env}} only allows for simple key-value pairs and doesn't

> support Kubernetes {{valueFrom}} syntax.

> How about changing template to support more various k8s syntax?

> *Current template*

> {code:java}

> {{- range $k, $v := .Values.operatorPod.env }}

> - name: {{ $v.name | quote }}

> value: {{ $v.value | quote }}

> {{- end }}{code}

>

> *Proposed template*

> 1) Modify template like below

> {code:java}

> {{- with .Values.operatorPod.env }}

> {{- toYaml . | nindent 12 }}

> {{- end }}

> {code}

> 2) create extra config, *Values.operatorPod.envFrom* and utilize this

>

> I'd be happy to implement this update if it's approved.

> Thanks in advance.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[jira] [Updated] (FLINK-33066) Enable to inject environment variable from secret/configmap to operatorPod

[

https://issues.apache.org/jira/browse/FLINK-33066?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

ASF GitHub Bot updated FLINK-33066:

---

Labels: pull-request-available (was: )

> Enable to inject environment variable from secret/configmap to operatorPod

> --

>

> Key: FLINK-33066

> URL: https://issues.apache.org/jira/browse/FLINK-33066

> Project: Flink

> Issue Type: Improvement

> Components: Kubernetes Operator

>Reporter: dongwoo.kim

>Assignee: dongwoo.kim

>Priority: Minor

> Labels: pull-request-available

>

> Hello, I've been working with the Flink Kubernetes operator and noticed that

> the {{operatorPod.env}} only allows for simple key-value pairs and doesn't

> support Kubernetes {{valueFrom}} syntax.

> How about changing template to support more various k8s syntax?

> *Current template*

> {code:java}

> {{- range $k, $v := .Values.operatorPod.env }}

> - name: {{ $v.name | quote }}

> value: {{ $v.value | quote }}

> {{- end }}{code}

>

> *Proposed template*

> 1) Modify template like below

> {code:java}

> {{- with .Values.operatorPod.env }}

> {{- toYaml . | nindent 12 }}

> {{- end }}

> {code}

> 2) create extra config, *Values.operatorPod.envFrom* and utilize this

>

> I'd be happy to implement this update if it's approved.

> Thanks in advance.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[GitHub] [flink-kubernetes-operator] dongwoo6kim opened a new pull request, #671: [FLINK-33066] Support all k8s methods to configure env variable in operatorPod

dongwoo6kim opened a new pull request, #671: URL: https://github.com/apache/flink-kubernetes-operator/pull/671 ## What is the purpose of the change To enhance Helm chart by adding full support for setting environment variables in values.yaml, including valueFrom and envFrom options, to align closely with native Kubernetes features and reduce user confusion. ## Brief change log - *Add support for valueFrom in env field* - *Add envFrom field* ## Verifying this change This change is a trivial work, locally checked the generation of manifest files. ## Does this pull request potentially affect one of the following parts: - Dependencies (does it add or upgrade a dependency): (yes / no) - The public API, i.e., is any changes to the `CustomResourceDescriptors`: (yes / no) - Core observer or reconciler logic that is regularly executed: (yes / no) ## Documentation - Does this pull request introduce a new feature? (yes) - If yes, how is the feature documented? (not documented) **Q)** Should I open a new PR to document about ```envFrom``` field if this PR is merged? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] dalelane commented on pull request #23395: [FLINK-33058][formats] Add encoding option to Avro format

dalelane commented on PR #23395: URL: https://github.com/apache/flink/pull/23395#issuecomment-1716773854 Apologies for the flurry of follow-on commits - this is my first contribution to Flink so I'd missed the checkstyle and spotless rules when testing locally. I think it's ready to review now, but I'm sure there are still other things I've unwittingly missed! Please let me know if there is anything else that I should do to get this PR into an acceptable state. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [flink] architgyl commented on a diff in pull request #23164: [FLINK- 32775]Add parent dir of files to classpath using yarn.provided.lib.dirs

architgyl commented on code in PR #23164:

URL: https://github.com/apache/flink/pull/23164#discussion_r1323745882

##

flink-yarn/src/test/java/org/apache/flink/yarn/YarnApplicationFileUploaderTest.java:

##

@@ -143,6 +165,22 @@ private static Map getLibJars() {

return libJars;

}

+private static Map getFilesWithParentDir() {

+final Map filesWithParentDir = new HashMap<>(2);

+final String xmlContent = "XML Content";

+

+filesWithParentDir.put("conf/hive-site.xml", xmlContent);

+filesWithParentDir.put("conf/ivysettings.xml", xmlContent);

+

+return filesWithParentDir;

+}

+

+private static List getExpectedClassPathWithParentDir() {

Review Comment:

Done.

##

flink-yarn/src/test/java/org/apache/flink/yarn/YarnApplicationFileUploaderTest.java:

##

@@ -143,6 +165,22 @@ private static Map getLibJars() {

return libJars;

}

+private static Map getFilesWithParentDir() {

Review Comment:

Done.

##

flink-yarn/src/test/java/org/apache/flink/yarn/YarnApplicationFileUploaderTest.java:

##

@@ -73,6 +73,28 @@ void testRegisterProvidedLocalResources(@TempDir File

flinkLibDir) throws IOExce

}

}

+@Test

+void testRegisterProvidedLocalResourcesWithParentDir(@TempDir File

flinkLibDir)

+throws IOException {

+final Map filesWithParentDir = getFilesWithParentDir();

Review Comment:

Done.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink] venkata91 commented on a diff in pull request #23164: [FLINK- 32775]Add parent dir of files to classpath using yarn.provided.lib.dirs

venkata91 commented on code in PR #23164:

URL: https://github.com/apache/flink/pull/23164#discussion_r1323679045

##

flink-yarn/src/test/java/org/apache/flink/yarn/YarnApplicationFileUploaderTest.java:

##

@@ -143,6 +165,22 @@ private static Map getLibJars() {

return libJars;

}

+private static Map getFilesWithParentDir() {

+final Map filesWithParentDir = new HashMap<>(2);

+final String xmlContent = "XML Content";

+

+filesWithParentDir.put("conf/hive-site.xml", xmlContent);

+filesWithParentDir.put("conf/ivysettings.xml", xmlContent);

+

+return filesWithParentDir;

+}

+

+private static List getExpectedClassPathWithParentDir() {

Review Comment:

nit: Currently this is used only by one test, why not just inline it?

##

flink-yarn/src/test/java/org/apache/flink/yarn/YarnApplicationFileUploaderTest.java:

##

@@ -73,6 +73,28 @@ void testRegisterProvidedLocalResources(@TempDir File

flinkLibDir) throws IOExce

}

}

+@Test

+void testRegisterProvidedLocalResourcesWithParentDir(@TempDir File

flinkLibDir)

+throws IOException {

+final Map filesWithParentDir = getFilesWithParentDir();

Review Comment:

nit: `s/filesWithParentDir/xmlResources`?

##

flink-yarn/src/test/java/org/apache/flink/yarn/YarnApplicationFileUploaderTest.java:

##

@@ -143,6 +165,22 @@ private static Map getLibJars() {

return libJars;

}

+private static Map getFilesWithParentDir() {

+final Map filesWithParentDir = new HashMap<>(2);

+final String xmlContent = "XML Content";

+

+filesWithParentDir.put("conf/hive-site.xml", xmlContent);

+filesWithParentDir.put("conf/ivysettings.xml", xmlContent);

+

+return filesWithParentDir;

+}

+

+private static List getExpectedClassPathWithParentDir() {

Review Comment:

It can be rewritten as:

```

List expectedClassPathWithParentDir = Arrays.asList("conf");

```

##

flink-yarn/src/test/java/org/apache/flink/yarn/YarnApplicationFileUploaderTest.java:

##

@@ -143,6 +165,22 @@ private static Map getLibJars() {

return libJars;

}

+private static Map getFilesWithParentDir() {

Review Comment:

Inline as below?

```

Map xmlResources = ImmutableMap.of("conf/hive-site.xml",

xmlContent, "conf/ivysettings.xml", xmlContent);

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [flink-kubernetes-operator] srpraneeth commented on a diff in pull request #670: [FLINK-31871] Interpret Flink MemoryUnits according to the actual user input

srpraneeth commented on code in PR #670:

URL:

https://github.com/apache/flink-kubernetes-operator/pull/670#discussion_r1323676402

##

flink-kubernetes-operator/src/main/java/org/apache/flink/kubernetes/operator/config/FlinkConfigBuilder.java:

##

@@ -420,13 +421,24 @@ private void setResource(Resource resource, Configuration

effectiveConfig, boole

? JobManagerOptions.TOTAL_PROCESS_MEMORY

: TaskManagerOptions.TOTAL_PROCESS_MEMORY;

if (resource.getMemory() != null) {

-effectiveConfig.setString(memoryConfigOption.key(),

resource.getMemory());

+effectiveConfig.setString(

+memoryConfigOption.key(),

parseResourceMemoryString(resource.getMemory()));

}

configureCpu(resource, effectiveConfig, isJM);

}

}

+// Using the K8s units specification for the JM and TM memory settings

+private String parseResourceMemoryString(String memory) {

+try {

+return MemorySize.parse(memory).toString();

Review Comment:

@morhidi The issue is that Flink interprets values only in Bibytes (gi, mi,

ki) format. For instance, a simple value like '2g' is currently interpreted as

'2gi = 2147483648 b' and there is no way to use both Bibyte and decimal byte

formats as in K8s Spec.

This change will ensure that '2gi' is interpreted as '2g' accordingly, and

the appropriate memory will be used. I assume that by 'backward change,' here

means that the existing CR memory configuration should remain functional and

not be disrupted, even though the values interpreted by the operator will

change.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscr...@flink.apache.org

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Updated] (FLINK-33018) GCP Pubsub PubSubConsumingTest.testStoppingConnectorWhenDeserializationSchemaIndicatesEndOfStream failed

[

https://issues.apache.org/jira/browse/FLINK-33018?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Flink Jira Bot updated FLINK-33018:

---

Labels: pull-request-available stale-blocker (was: pull-request-available)

I am the [Flink Jira Bot|https://github.com/apache/flink-jira-bot/] and I help

the community manage its development. I see this issues has been marked as a

Blocker but is unassigned and neither itself nor its Sub-Tasks have been

updated for 1 days. I have gone ahead and marked it "stale-blocker". If this

ticket is a Blocker, please either assign yourself or give an update.

Afterwards, please remove the label or in 7 days the issue will be

deprioritized.

> GCP Pubsub

> PubSubConsumingTest.testStoppingConnectorWhenDeserializationSchemaIndicatesEndOfStream

> failed

>

>

> Key: FLINK-33018

> URL: https://issues.apache.org/jira/browse/FLINK-33018

> Project: Flink

> Issue Type: Bug

> Components: Connectors / Google Cloud PubSub

>Affects Versions: gcp-pubsub-3.0.2

>Reporter: Martijn Visser

>Priority: Blocker

> Labels: pull-request-available, stale-blocker

>

> https://github.com/apache/flink-connector-gcp-pubsub/actions/runs/6061318336/job/16446392844#step:13:507

> {code:java}

> [INFO]

> [INFO] Results:

> [INFO]

> Error: Failures:

> Error:

> PubSubConsumingTest.testStoppingConnectorWhenDeserializationSchemaIndicatesEndOfStream:119

>

> expected: ["1", "2", "3"]

> but was: ["1", "2"]

> [INFO]

> Error: Tests run: 30, Failures: 1, Errors: 0, Skipped: 0

> [INFO]

> [INFO]

>

> {code}

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[jira] [Updated] (FLINK-30774) Introduce flink-utils module

[

https://issues.apache.org/jira/browse/FLINK-30774?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Flink Jira Bot updated FLINK-30774:

---

Labels: auto-deprioritized-major starter (was: stale-major starter)

Priority: Minor (was: Major)

This issue was labeled "stale-major" 7 days ago and has not received any

updates so it is being deprioritized. If this ticket is actually Major, please

raise the priority and ask a committer to assign you the issue or revive the

public discussion.

> Introduce flink-utils module

>

>

> Key: FLINK-30774

> URL: https://issues.apache.org/jira/browse/FLINK-30774

> Project: Flink

> Issue Type: Improvement

> Components: Build System

>Affects Versions: 1.17.0

>Reporter: Matthias Pohl

>Priority: Minor

> Labels: auto-deprioritized-major, starter

>

> Currently, utility methods generic utility classes like {{Preconditions}} or

> {{AbstractAutoCloseableRegistry}} are collected in {{flink-core}}. The flaw

> of this approach is that we cannot use those classes in modules like

> {{fink-migration-test-utils}}, {{flink-test-utils-junit}},

> {{flink-metrics-core}} or {{flink-annotations}}.

> We might want to have a generic {{flink-utils}} analogously to

> {{flink-test-utils}} that collects Flink-independent utility functionality

> that can be access by any module {{flink-core}} is depending on to make this

> utility functionality available in any Flink-related module.

--

This message was sent by Atlassian Jira

(v8.20.10#820010)

[jira] [Updated] (FLINK-32564) Support cast from BYTES to NUMBER

[

https://issues.apache.org/jira/browse/FLINK-32564?page=com.atlassian.jira.plugin.system.issuetabpanels:all-tabpanel

]

Flink Jira Bot updated FLINK-32564:

---

Labels: pull-request-available stale-assigned (was: pull-request-available)