[GitHub] [kafka] ijuma commented on a change in pull request #10466: KAFKA-12417 "streams" module: switch deprecated Gradle configuration `testRuntime`

ijuma commented on a change in pull request #10466:

URL: https://github.com/apache/kafka/pull/10466#discussion_r620886540

##

File path: build.gradle

##

@@ -1491,13 +1491,14 @@ project(':streams') {

}

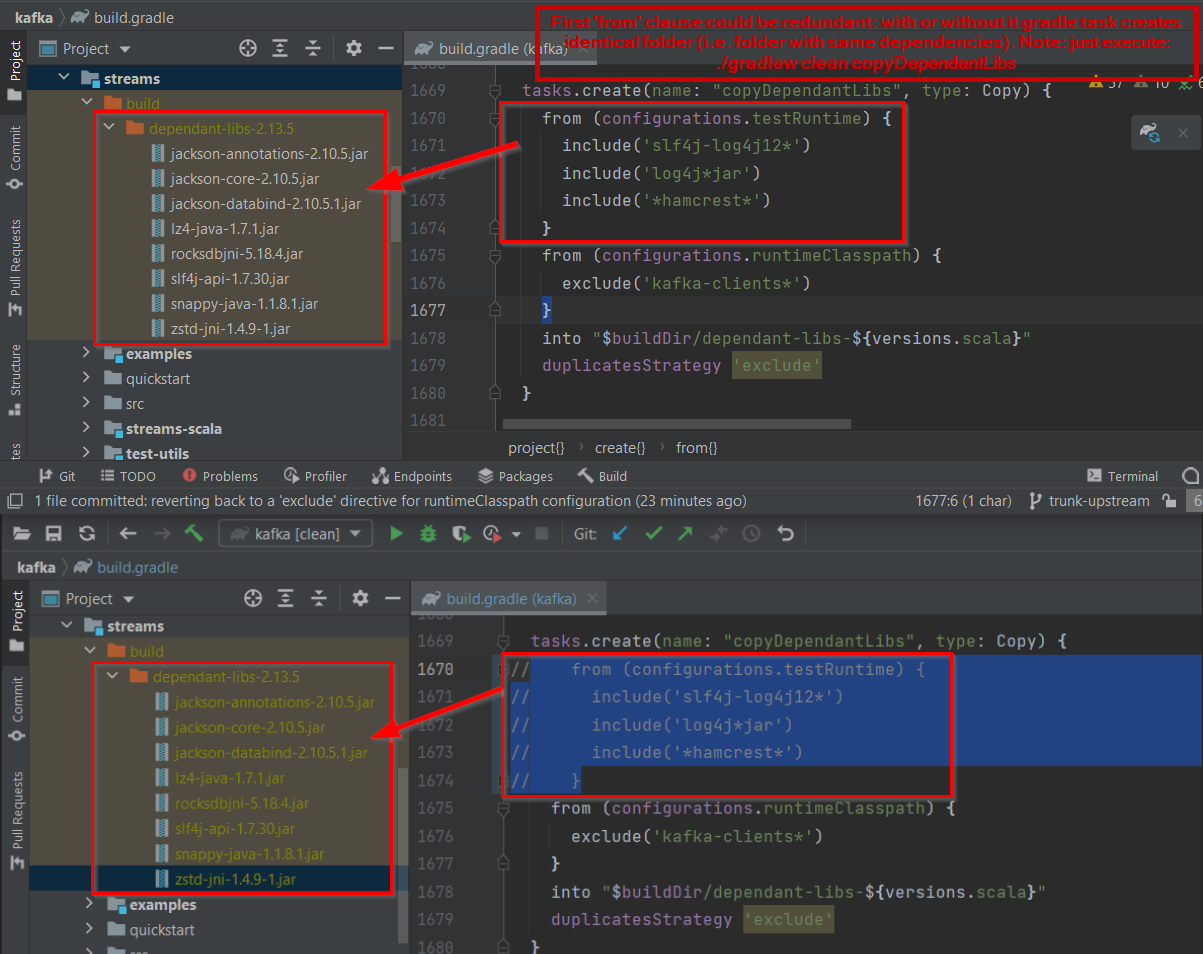

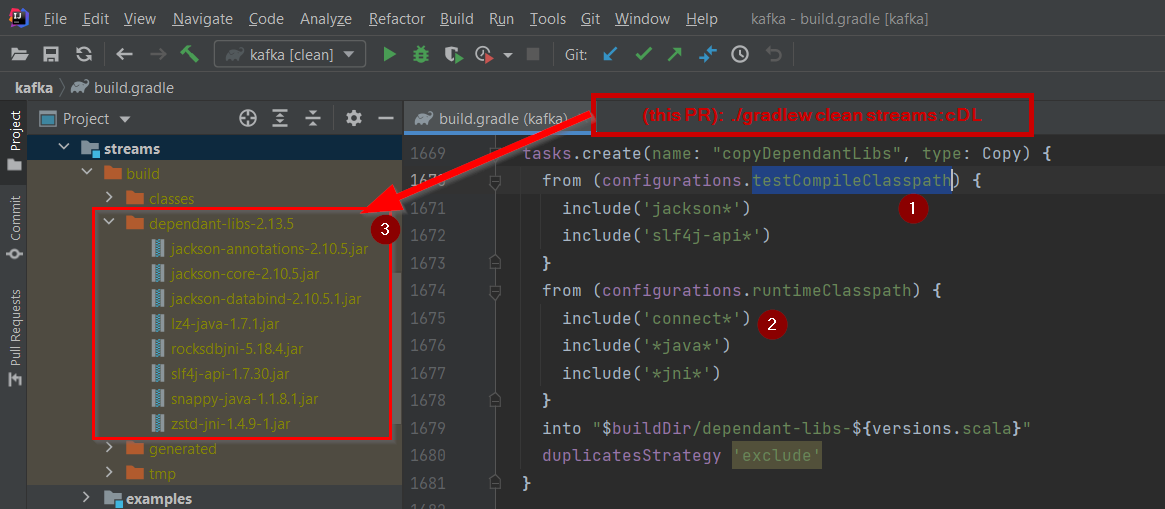

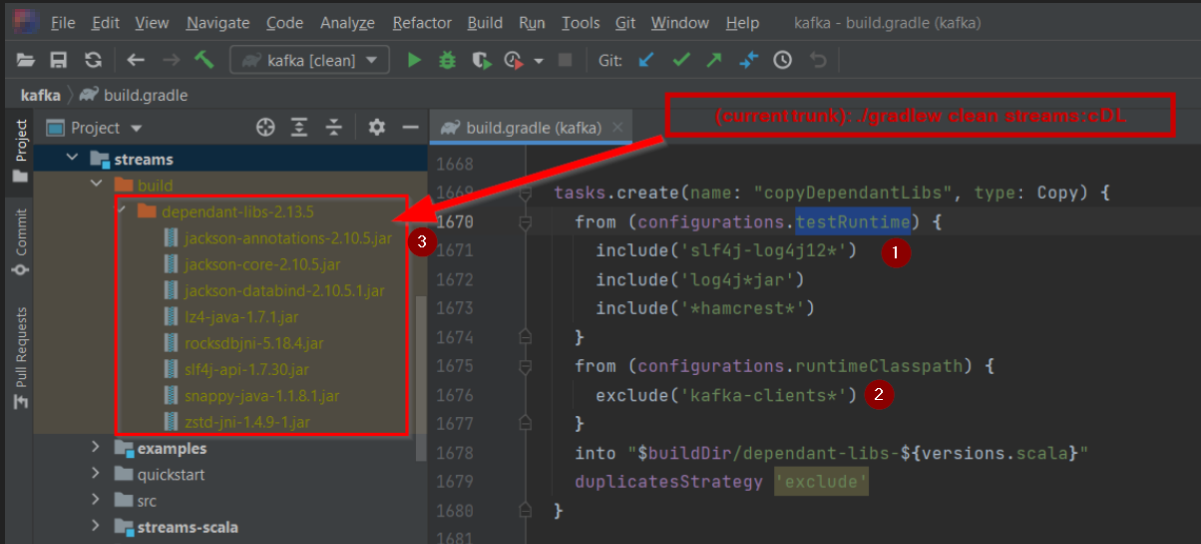

tasks.create(name: "copyDependantLibs", type: Copy) {

-from (configurations.testRuntime) {

Review comment:

That line should be including test dependencies like log4j, etc. But I

don't know why that makes sense for streams. It does make sense for core. So, I

would remove it.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] vitojeng edited a comment on pull request #10597: KAFKA-5876: Apply StreamsNotStartedException for Interactive Queries

vitojeng edited a comment on pull request #10597: URL: https://github.com/apache/kafka/pull/10597#issuecomment-827317919 @ableegoldman Got it, thank you for the explanation. :) I am fine with this change. Let me apply `StreamsNotStartedException` to `validateIsRunningOrRebalancing()` in this PR, and update the KIP and discussion thread. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] vitojeng commented on pull request #10597: KAFKA-5876: Apply StreamsNotStartedException for Interactive Queries

vitojeng commented on pull request #10597: URL: https://github.com/apache/kafka/pull/10597#issuecomment-827317919 @ableegoldman Got it, thank you for the explanation. :) I am fine with this change. Let me apply StreamsNotStartedException to `validateIsRunningOrRebalancing()` in this PR, and update the KIP and discussion thread. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on pull request #10597: KAFKA-5876: Apply StreamsNotStartedException for Interactive Queries

ableegoldman commented on pull request #10597: URL: https://github.com/apache/kafka/pull/10597#issuecomment-827302955 >It is a bit strange for me if use StreamsNotStartedException to replace IllegalStateException in the validateIsRunningOrRebalancing() Isn't that what we're doing with the `KafkaStreams#store` method, though? It also invokes `validateIsRunningOrRebalancing()` and thus presumably will throw an IllegalStateException if a user invokes this on a KafkaStreams that hasn't been started. Maybe you can run the test you added with the actual changes commented out and see what gets thrown. >I wonder if it worth to break the API compatibility? The trivial improvement will break the API compatibility(allMetadata(), allMetadataForStore(), queryMetadataForKey). It seems this change means that KIP-216 is no longer API Compatibility and the scope of the KIP will exceed Interactive Query related. WDYT? Those methods are all, ultimately at least, related to Interactive Queries. My understanding is that they would be used to find out where to route a query for a specific key, for example. Plus they do take a particular store as a parameter, thus even `InvalidStateStore` makes about as much sense for these methods as it does for KafkaStreams#store. Also just fyi, by sheer coincidence it seems this PR will end up landing in 3.0 which is a major version bump and thus (some) breaking changes are allowed. I think changing up exception handling in this way is acceptable (and as noted above it seems we are already doing so for the `#store` case, unless I'm missing something). Plus, it seems like compatibility is a bit nuanced in this particular case. Presumably a user will hit this case just because they forgot to call `start()` before invoking this API, therefore I would expect that any existing apps which are upgraded would already be sure to call start() first and it's only the new applications/new users which are likely to hit this particular exception. That's my read of the situation, anyway. Thoughts? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] vitojeng commented on pull request #10597: KAFKA-5876: Apply StreamsNotStartedException for Interactive Queries

vitojeng commented on pull request #10597: URL: https://github.com/apache/kafka/pull/10597#issuecomment-827296663 > LGTM, just some suggestions for the wording. Can we add a note to the section in the upgrade-guide that you added for the last exception? Sure, will do. Thank for your reminder. > By the way, I wonder if we should also throw this exception for the `allMetadata, `allMetadataForStore`, and `queryMetadataForKey`methods? It seems these methods along with`KafkaStreams#store`all do the same thing of calling`validateIsRunningOrRebalancing` which then checks on the KafkaStreams state and throws IllegalStateException if not in one of those two states. Imo all of these would benefit from the StreamsNotStartedException and it doesn't make sense to single that one out. One thing may need remind, in the KIP-216, `StreamsNotStartedException` is sub class of `InvalidStateStoreException`. That means `StreamsNotStartedException` is an exception related to the StateStore. It is a bit strange for me if use `StreamsNotStartedException` to replace `IllegalStateException` in the `validateIsRunningOrRebalancing()`. > Thoughts? I know it's not in the KIP but in this case it seems like a trivial improvement that would merit just a quick update on the KIP discussion thread but not a whole new KIP of its own I wonder if it worth to break the API compatibility? The trivial improvement will break the API compatibility(`allMetadata()`, `allMetadataForStore()`, `queryMetadataForKey`). It seems this change means that KIP-216 is no longer API Compatibility and the scope of the KIP will exceed Interactive Query related. WDYT? -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] dielhennr commented on a change in pull request #10480: KAFKA-12265: Move the BatchAccumulator in KafkaRaftClient to LeaderState

dielhennr commented on a change in pull request #10480:

URL: https://github.com/apache/kafka/pull/10480#discussion_r620833566

##

File path:

raft/src/test/java/org/apache/kafka/raft/internals/BatchAccumulatorTest.java

##

@@ -65,6 +66,34 @@

);

}

+@Test

+public void testLeaderChangeMessageWritten() {

Review comment:

@hachikuji Why would 2 ever happen in practice? The accumulator is

created and then the leaderChangeMessage is written right after.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] dielhennr commented on a change in pull request #10480: KAFKA-12265: Move the BatchAccumulator in KafkaRaftClient to LeaderState

dielhennr commented on a change in pull request #10480:

URL: https://github.com/apache/kafka/pull/10480#discussion_r620833566

##

File path:

raft/src/test/java/org/apache/kafka/raft/internals/BatchAccumulatorTest.java

##

@@ -65,6 +66,34 @@

);

}

+@Test

+public void testLeaderChangeMessageWritten() {

Review comment:

@hachikuji Why would 2 ever happen in practice?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] bruto1 commented on a change in pull request #10590: KAFKA-5761: support ByteBuffer as value in ProducerRecord and avoid redundant serialization when it's used

bruto1 commented on a change in pull request #10590: URL: https://github.com/apache/kafka/pull/10590#discussion_r620829421 ## File path: clients/src/main/java/org/apache/kafka/clients/producer/Partitioner.java ## @@ -34,10 +34,9 @@ * @param key The key to partition on (or null if no key) * @param keyBytes The serialized key to partition on( or null if no key) * @param value The value to partition on or null - * @param valueBytes The serialized value to partition on or null * @param cluster The current cluster metadata */ -public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster); Review comment: I can pass a dummy byte array to the partitioner instead I guess -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[jira] [Comment Edited] (KAFKA-12713) Report "REAL" follower/consumer fetch latency

[ https://issues.apache.org/jira/browse/KAFKA-12713?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17331590#comment-17331590 ] Ming Liu edited comment on KAFKA-12713 at 4/27/21, 3:25 AM: The idea is: # Add waitTimeMs in FetchResponse # In processResponseCallback() of handleFetchRequest, set the waitTimeMs as the time spent in purgatory. # In FetcherStats, we will add a new meter to track the fetch latency, by deduct the waitTimeMs from the latency. Also, in FetchLatency, we should also report a time called TotalEffectiveTime = TotalTime-RemoteTime. Created KIP: https://cwiki.apache.org/confluence/display/KAFKA/KIP-736%3A+Track+the+real+fetch+latency was (Author: mingaliu): The idea is: # Add waitTimeMs in FetchResponse # In processResponseCallback() of handleFetchRequest, set the waitTimeMs as the time spent in purgatory. # In FetcherStats, we will add a new meter to track the fetch latency, by deduct the waitTimeMs from the latency. Also, in FetchLatency, we should also report a time called TotalEffectiveTime = TotalTime-RemoteTime. Let me know for any suggestion/feedback. I like to propose a KIP on that change. > Report "REAL" follower/consumer fetch latency > - > > Key: KAFKA-12713 > URL: https://issues.apache.org/jira/browse/KAFKA-12713 > Project: Kafka > Issue Type: Bug >Reporter: Ming Liu >Priority: Major > > The fetch latency is an important metrics to monitor for the cluster > performance. With ACK=ALL, the produce latency is affected primarily by > broker fetch latency. > However, currently the reported fetch latency didn't reflect the true fetch > latency because it sometimes need to stay in purgatory and wait for > replica.fetch.wait.max.ms when data is not available. This greatly affect the > real P50, P99 etc. > I like to propose a KIP to be able track the real fetch latency for both > broker follower and consumer. > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] yeralin commented on a change in pull request #6592: KAFKA-8326: Introduce List Serde

yeralin commented on a change in pull request #6592:

URL: https://github.com/apache/kafka/pull/6592#discussion_r620802248

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListSerializer.java

##

@@ -77,21 +87,39 @@ public void configure(Map configs, boolean

isKey) {

}

}

+private void serializeNullIndexList(final DataOutputStream out,

List data) throws IOException {

+List nullIndexList = IntStream.range(0, data.size())

+.filter(i -> data.get(i) == null)

+.boxed().collect(Collectors.toList());

+out.writeInt(nullIndexList.size());

+for (int i : nullIndexList) out.writeInt(i);

+}

+

@Override

public byte[] serialize(String topic, List data) {

if (data == null) {

return null;

}

-final int size = data.size();

try (final ByteArrayOutputStream baos = new ByteArrayOutputStream();

final DataOutputStream out = new DataOutputStream(baos)) {

+out.writeByte(serStrategy.ordinal()); // write serialization

strategy flag

+if (serStrategy == SerializationStrategy.NULL_INDEX_LIST) {

+serializeNullIndexList(out, data);

+}

+final int size = data.size();

out.writeInt(size);

for (Inner entry : data) {

-final byte[] bytes = inner.serialize(topic, entry);

-if (!isFixedLength) {

-out.writeInt(bytes.length);

+if (entry == null) {

+if (serStrategy == SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(Serdes.ListSerde.NEGATIVE_SIZE_VALUE);

+}

+} else {

+final byte[] bytes = inner.serialize(topic, entry);

+if (!isFixedLength || serStrategy ==

SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(bytes.length);

Review comment:

Hmmm, @mjsax reasoning was that in the future we could introduce

**more** serialization strategies

https://github.com/apache/kafka/pull/6592#discussion_r370513438

> However, to allow us to support different serialization format in the

future, we should add one more magic byte in the very beginning that encodes

the choose serialization format

As per ignoring the choice, also from @mjsax

https://github.com/apache/kafka/pull/6592#issuecomment-606277356:

> I guess it's called freedom of choice :) If we feel strong about it, we

could of course disallow the "negative size" strategy for primitive types.

However, it would have the disadvantage that we have a config that, depending

on the data type you are using, would either be ignored or even throw an error

if set incorrectly. From a usability point of view, this would be a

disadvantage. It's always a mental burden to users if they have to think about

if-then-else cases.

...

Personally, I have a slight preference to allow both strategies for all

types as I think easy of use is more important, but I am also fine otherwise.

Here is my thought process, if a user chooses a serialization strategy, then

she probably knows what she is doing. Ofc, the user will have a larger payload,

and we certainly will notify her that the serialization strategy she chose is

not optimal for the current type of data, but I don't think we should strictly

forbid the user from "shooting herself in the foot".

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] yeralin commented on a change in pull request #6592: KAFKA-8326: Introduce List Serde

yeralin commented on a change in pull request #6592:

URL: https://github.com/apache/kafka/pull/6592#discussion_r620802248

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListSerializer.java

##

@@ -77,21 +87,39 @@ public void configure(Map configs, boolean

isKey) {

}

}

+private void serializeNullIndexList(final DataOutputStream out,

List data) throws IOException {

+List nullIndexList = IntStream.range(0, data.size())

+.filter(i -> data.get(i) == null)

+.boxed().collect(Collectors.toList());

+out.writeInt(nullIndexList.size());

+for (int i : nullIndexList) out.writeInt(i);

+}

+

@Override

public byte[] serialize(String topic, List data) {

if (data == null) {

return null;

}

-final int size = data.size();

try (final ByteArrayOutputStream baos = new ByteArrayOutputStream();

final DataOutputStream out = new DataOutputStream(baos)) {

+out.writeByte(serStrategy.ordinal()); // write serialization

strategy flag

+if (serStrategy == SerializationStrategy.NULL_INDEX_LIST) {

+serializeNullIndexList(out, data);

+}

+final int size = data.size();

out.writeInt(size);

for (Inner entry : data) {

-final byte[] bytes = inner.serialize(topic, entry);

-if (!isFixedLength) {

-out.writeInt(bytes.length);

+if (entry == null) {

+if (serStrategy == SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(Serdes.ListSerde.NEGATIVE_SIZE_VALUE);

+}

+} else {

+final byte[] bytes = inner.serialize(topic, entry);

+if (!isFixedLength || serStrategy ==

SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(bytes.length);

Review comment:

Hmmm, @mjsax reasoning was that in the future we could introduce

**more** serialization strategies

https://github.com/apache/kafka/pull/6592#discussion_r370513438

> However, to allow us to support different serialization format in the

future, we should add one more magic byte in the very beginning that encodes

the choose serialization format

As per ignoring the choice, also from @mjsax

https://github.com/apache/kafka/pull/6592#issuecomment-606277356:

> I guess it's called freedom of choice :) If we feel strong about it, we

could of course disallow the "negative size" strategy for primitive types.

However, it would have the disadvantage that we have a config that, depending

on the data type you are using, would either be ignored or even throw an error

if set incorrectly. From a usability point of view, this would be a

disadvantage. It's always a mental burden to users if they have to think about

if-then-else cases.

...

Personally, I have a slight preference to allow both strategies for all

types as I think easy of use is more important, but I am also fine otherwise.

Here is my thought process, if a user chooses a serialization strategy, then

she probably knows what she is doing. Ofc, the user will have a larger payload,

and we certainly will notify her that the serialization strategy she chose is

not optimal for the current type of data, but I don't think we should simply

forbid the user from "shooting herself in the foot".

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] yeralin commented on a change in pull request #6592: KAFKA-8326: Introduce List Serde

yeralin commented on a change in pull request #6592:

URL: https://github.com/apache/kafka/pull/6592#discussion_r620802248

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListSerializer.java

##

@@ -77,21 +87,39 @@ public void configure(Map configs, boolean

isKey) {

}

}

+private void serializeNullIndexList(final DataOutputStream out,

List data) throws IOException {

+List nullIndexList = IntStream.range(0, data.size())

+.filter(i -> data.get(i) == null)

+.boxed().collect(Collectors.toList());

+out.writeInt(nullIndexList.size());

+for (int i : nullIndexList) out.writeInt(i);

+}

+

@Override

public byte[] serialize(String topic, List data) {

if (data == null) {

return null;

}

-final int size = data.size();

try (final ByteArrayOutputStream baos = new ByteArrayOutputStream();

final DataOutputStream out = new DataOutputStream(baos)) {

+out.writeByte(serStrategy.ordinal()); // write serialization

strategy flag

+if (serStrategy == SerializationStrategy.NULL_INDEX_LIST) {

+serializeNullIndexList(out, data);

+}

+final int size = data.size();

out.writeInt(size);

for (Inner entry : data) {

-final byte[] bytes = inner.serialize(topic, entry);

-if (!isFixedLength) {

-out.writeInt(bytes.length);

+if (entry == null) {

+if (serStrategy == SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(Serdes.ListSerde.NEGATIVE_SIZE_VALUE);

+}

+} else {

+final byte[] bytes = inner.serialize(topic, entry);

+if (!isFixedLength || serStrategy ==

SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(bytes.length);

Review comment:

Hmmm, @mjsax reasoning was that in the future we could introduce

**more** serialization strategies

https://github.com/apache/kafka/pull/6592#discussion_r370513438

> However, to allow us to support different serialization format in the

future, we should add one more magic byte in the very beginning that encodes

the choose serialization format

As per ignoring the choice, also from @mjsax

https://github.com/apache/kafka/pull/6592#issuecomment-606277356:

> I guess it's called freedom of choice :) If we feel strong about it, we

could of course disallow the "negative size" strategy for primitive types.

However, it would have the disadvantage that we have a config that, depending

on the data type you are using, would either be ignored or even throw an error

if set incorrectly. From a usability point of view, this would be a

disadvantage. It's always a mental burden to users if they have to think about

if-then-else cases.

...

Personally, I have a slight preference to allow both strategies for all

types as I think easy of use is more important, but I am also fine otherwise.

Here is my thought process, if a user chooses a serialization strategy, then

she probably knows what she is doing. Ofc, the user will have a larger payload,

and we certainly will notify her that the serialization strategy is not optimal

for the current type of data, but I don't think we should simply forbid the

user from "shooting herself in the foot".

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #6592: KAFKA-8326: Introduce List Serde

ableegoldman commented on a change in pull request #6592:

URL: https://github.com/apache/kafka/pull/6592#discussion_r620804518

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListSerializer.java

##

@@ -77,21 +87,39 @@ public void configure(Map configs, boolean

isKey) {

}

}

+private void serializeNullIndexList(final DataOutputStream out,

List data) throws IOException {

+List nullIndexList = IntStream.range(0, data.size())

+.filter(i -> data.get(i) == null)

+.boxed().collect(Collectors.toList());

+out.writeInt(nullIndexList.size());

+for (int i : nullIndexList) out.writeInt(i);

+}

+

@Override

public byte[] serialize(String topic, List data) {

if (data == null) {

return null;

}

-final int size = data.size();

try (final ByteArrayOutputStream baos = new ByteArrayOutputStream();

final DataOutputStream out = new DataOutputStream(baos)) {

+out.writeByte(serStrategy.ordinal()); // write serialization

strategy flag

+if (serStrategy == SerializationStrategy.NULL_INDEX_LIST) {

+serializeNullIndexList(out, data);

+}

+final int size = data.size();

out.writeInt(size);

for (Inner entry : data) {

-final byte[] bytes = inner.serialize(topic, entry);

-if (!isFixedLength) {

-out.writeInt(bytes.length);

+if (entry == null) {

+if (serStrategy == SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(Serdes.ListSerde.NEGATIVE_SIZE_VALUE);

+}

+} else {

+final byte[] bytes = inner.serialize(topic, entry);

+if (!isFixedLength || serStrategy ==

SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(bytes.length);

Review comment:

My feeling is, don't over-optimize for the future. If/when we do want to

add new serialization strategies it won't be that hard to pass a KIP that

deprecates the current API in favor of whatever new one they decide on. And it

won't be much work for users to migrate from the deprecated API. I'm all for

future-proofness but imo it's better to start out with the simplest and best

API for the current moment and then iterate on that, rather than try to address

all possible eventualities with the very first set of changes. The only

exception being cases where the overhead of migrating from the initial API to a

new and improved one would be really high, either for the devs or for the user

or both. But I don't think that applies here.

That's just my personal take. Maybe @mjsax would disagree, or maybe not.

I'll try to ping him and see what he thinks now, since it's been a while since

that last set of comments. Until then, what's your opinion here?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] yeralin commented on a change in pull request #6592: KAFKA-8326: Introduce List Serde

yeralin commented on a change in pull request #6592:

URL: https://github.com/apache/kafka/pull/6592#discussion_r620802248

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListSerializer.java

##

@@ -77,21 +87,39 @@ public void configure(Map configs, boolean

isKey) {

}

}

+private void serializeNullIndexList(final DataOutputStream out,

List data) throws IOException {

+List nullIndexList = IntStream.range(0, data.size())

+.filter(i -> data.get(i) == null)

+.boxed().collect(Collectors.toList());

+out.writeInt(nullIndexList.size());

+for (int i : nullIndexList) out.writeInt(i);

+}

+

@Override

public byte[] serialize(String topic, List data) {

if (data == null) {

return null;

}

-final int size = data.size();

try (final ByteArrayOutputStream baos = new ByteArrayOutputStream();

final DataOutputStream out = new DataOutputStream(baos)) {

+out.writeByte(serStrategy.ordinal()); // write serialization

strategy flag

+if (serStrategy == SerializationStrategy.NULL_INDEX_LIST) {

+serializeNullIndexList(out, data);

+}

+final int size = data.size();

out.writeInt(size);

for (Inner entry : data) {

-final byte[] bytes = inner.serialize(topic, entry);

-if (!isFixedLength) {

-out.writeInt(bytes.length);

+if (entry == null) {

+if (serStrategy == SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(Serdes.ListSerde.NEGATIVE_SIZE_VALUE);

+}

+} else {

+final byte[] bytes = inner.serialize(topic, entry);

+if (!isFixedLength || serStrategy ==

SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(bytes.length);

Review comment:

Hmmm, @mjsax reasoning was that in the future we could introduce

**more** serialization strategies

https://github.com/apache/kafka/pull/6592#discussion_r370513438

> However, to allow us to support different serialization format in the

future, we should add one more magic byte in the very beginning that encodes

the choose serialization format

As per ignoring the choice, also from @mjsax

https://github.com/apache/kafka/pull/6592#issuecomment-606277356:

> I guess it's called freedom of choice :) If we feel strong about it, we

could of course disallow the "negative size" strategy for primitive types.

However, it would have the disadvantage that we have a config that, depending

on the data type you are using, would either be ignored or even throw an error

if set incorrectly. From a usability point of view, this would be a

disadvantage. It's always a mental burden to users if they have to think about

if-then-else cases.

...

Personally, I have a slight preference to allow both strategies for all

types as I think easy of use is more important, but I am also fine otherwise.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] yeralin commented on a change in pull request #6592: KAFKA-8326: Introduce List Serde

yeralin commented on a change in pull request #6592:

URL: https://github.com/apache/kafka/pull/6592#discussion_r620802248

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListSerializer.java

##

@@ -77,21 +87,39 @@ public void configure(Map configs, boolean

isKey) {

}

}

+private void serializeNullIndexList(final DataOutputStream out,

List data) throws IOException {

+List nullIndexList = IntStream.range(0, data.size())

+.filter(i -> data.get(i) == null)

+.boxed().collect(Collectors.toList());

+out.writeInt(nullIndexList.size());

+for (int i : nullIndexList) out.writeInt(i);

+}

+

@Override

public byte[] serialize(String topic, List data) {

if (data == null) {

return null;

}

-final int size = data.size();

try (final ByteArrayOutputStream baos = new ByteArrayOutputStream();

final DataOutputStream out = new DataOutputStream(baos)) {

+out.writeByte(serStrategy.ordinal()); // write serialization

strategy flag

+if (serStrategy == SerializationStrategy.NULL_INDEX_LIST) {

+serializeNullIndexList(out, data);

+}

+final int size = data.size();

out.writeInt(size);

for (Inner entry : data) {

-final byte[] bytes = inner.serialize(topic, entry);

-if (!isFixedLength) {

-out.writeInt(bytes.length);

+if (entry == null) {

+if (serStrategy == SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(Serdes.ListSerde.NEGATIVE_SIZE_VALUE);

+}

+} else {

+final byte[] bytes = inner.serialize(topic, entry);

+if (!isFixedLength || serStrategy ==

SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(bytes.length);

Review comment:

Hmmm, @mjsax reasoning was that in the future we could introduce

**more** serialization strategies

(https://github.com/apache/kafka/pull/6592#discussion_r370513438)

> However, to allow us to support different serialization format in the

future, we should add one more magic byte in the very beginning that encodes

the choose serialization format

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListSerializer.java

##

@@ -77,21 +87,39 @@ public void configure(Map configs, boolean

isKey) {

}

}

+private void serializeNullIndexList(final DataOutputStream out,

List data) throws IOException {

+List nullIndexList = IntStream.range(0, data.size())

+.filter(i -> data.get(i) == null)

+.boxed().collect(Collectors.toList());

+out.writeInt(nullIndexList.size());

+for (int i : nullIndexList) out.writeInt(i);

+}

+

@Override

public byte[] serialize(String topic, List data) {

if (data == null) {

return null;

}

-final int size = data.size();

try (final ByteArrayOutputStream baos = new ByteArrayOutputStream();

final DataOutputStream out = new DataOutputStream(baos)) {

+out.writeByte(serStrategy.ordinal()); // write serialization

strategy flag

+if (serStrategy == SerializationStrategy.NULL_INDEX_LIST) {

+serializeNullIndexList(out, data);

+}

+final int size = data.size();

out.writeInt(size);

for (Inner entry : data) {

-final byte[] bytes = inner.serialize(topic, entry);

-if (!isFixedLength) {

-out.writeInt(bytes.length);

+if (entry == null) {

+if (serStrategy == SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(Serdes.ListSerde.NEGATIVE_SIZE_VALUE);

+}

+} else {

+final byte[] bytes = inner.serialize(topic, entry);

+if (!isFixedLength || serStrategy ==

SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(bytes.length);

Review comment:

Hmmm, @mjsax reasoning was that in the future we could introduce

**more** serialization strategies

https://github.com/apache/kafka/pull/6592#discussion_r370513438

> However, to allow us to support different serialization format in the

future, we should add one more magic byte in the very beginning that encodes

the choose serialization format

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] yeralin commented on a change in pull request #6592: KAFKA-8326: Introduce List Serde

yeralin commented on a change in pull request #6592:

URL: https://github.com/apache/kafka/pull/6592#discussion_r620802248

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListSerializer.java

##

@@ -77,21 +87,39 @@ public void configure(Map configs, boolean

isKey) {

}

}

+private void serializeNullIndexList(final DataOutputStream out,

List data) throws IOException {

+List nullIndexList = IntStream.range(0, data.size())

+.filter(i -> data.get(i) == null)

+.boxed().collect(Collectors.toList());

+out.writeInt(nullIndexList.size());

+for (int i : nullIndexList) out.writeInt(i);

+}

+

@Override

public byte[] serialize(String topic, List data) {

if (data == null) {

return null;

}

-final int size = data.size();

try (final ByteArrayOutputStream baos = new ByteArrayOutputStream();

final DataOutputStream out = new DataOutputStream(baos)) {

+out.writeByte(serStrategy.ordinal()); // write serialization

strategy flag

+if (serStrategy == SerializationStrategy.NULL_INDEX_LIST) {

+serializeNullIndexList(out, data);

+}

+final int size = data.size();

out.writeInt(size);

for (Inner entry : data) {

-final byte[] bytes = inner.serialize(topic, entry);

-if (!isFixedLength) {

-out.writeInt(bytes.length);

+if (entry == null) {

+if (serStrategy == SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(Serdes.ListSerde.NEGATIVE_SIZE_VALUE);

+}

+} else {

+final byte[] bytes = inner.serialize(topic, entry);

+if (!isFixedLength || serStrategy ==

SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(bytes.length);

Review comment:

Hmmm, @mjsax reasoning was that in the future we could introduce

**more** serialization strategies

(https://github.com/apache/kafka/pull/6592#discussion_r370513438)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on pull request #10554: Expand AdjustStreamThreadCountTest by writing some data to Kafka topics

ableegoldman commented on pull request #10554: URL: https://github.com/apache/kafka/pull/10554#issuecomment-827263009 Thanks for the PR, I think this is step forward since just processing some data at all may have caught that cache bug a while back. But I wonder if we can take it a step further in this PR and also try to validate that some data did get processed in each of the tests? I can think of two ways of doing this. One is obviously just to wait on some data showing up in the output topic. Not sure what topology all of these tests are running at the moment but if there's a 1:1 ratio between input records and output then you can just wait until however many records you pipe in. There should be some methods in IntegrationTestUtils that do this already, check out `waitUntilMinRecordsReceived` Another way would be to use a custom processor/transformer that sets a flag once all the expected data has been processed. But tbh that first method is probably the best since it tests end-to-end including writing to an output topic, plus you can use an existing IntegrationTestUtils method so there's less custom code needed -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #10554: Expand AdjustStreamThreadCountTest by writing some data to Kafka topics

ableegoldman commented on a change in pull request #10554:

URL: https://github.com/apache/kafka/pull/10554#discussion_r620794381

##

File path:

streams/src/test/java/org/apache/kafka/streams/integration/AdjustStreamThreadCountTest.java

##

@@ -121,6 +125,21 @@ public void setup() {

);

}

+

+private void publishDummyDataToTopic(final String inputTopic, final

EmbeddedKafkaCluster cluster) {

+final Properties props = new Properties();

+props.put("acks", "all");

+props.put("retries", 1);

+props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,

cluster.bootstrapServers());

+props.put(ProducerConfig.CLIENT_ID_CONFIG, "test-client");

+props.put("key.serializer",

"org.apache.kafka.common.serialization.StringSerializer");

+props.put("value.serializer",

"org.apache.kafka.common.serialization.StringSerializer");

+final KafkaProducer dummyProducer = new

KafkaProducer<>(props);

+dummyProducer.send(new ProducerRecord(inputTopic,

Integer.toString(4), Integer.toString(4)));

Review comment:

It might be a good idea to send a slightly larger batch of data, for

example I think in other integration tests we did like 10,000 records. We don't

necessarily need that many here but Streams should be fast enough that we may

as well do something like 1,000 - 5,000

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ncliang commented on pull request #10475: KAFKA-12610: Implement PluginClassLoader::getResource

ncliang commented on pull request #10475: URL: https://github.com/apache/kafka/pull/10475#issuecomment-827234589 Ping @mageshn @gharris1727 , the failed tests don't seem to be related to this change. -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] dielhennr commented on a change in pull request #10480: KAFKA-12265: Move the BatchAccumulator in KafkaRaftClient to LeaderState

dielhennr commented on a change in pull request #10480:

URL: https://github.com/apache/kafka/pull/10480#discussion_r620734980

##

File path:

raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -194,14 +196,50 @@ private void completeCurrentBatch() {

MemoryRecords data = currentBatch.build();

completed.add(new CompletedBatch<>(

currentBatch.baseOffset(),

-currentBatch.records(),

+Optional.of(currentBatch.records()),

data,

memoryPool,

currentBatch.initialBuffer()

));

currentBatch = null;

}

+public void appendLeaderChangeMessage(LeaderChangeMessage

leaderChangeMessage, long currentTimeMs) {

+appendLock.lock();

+try {

+maybeCompleteDrain();

+ByteBuffer buffer = memoryPool.tryAllocate(256);

+if (buffer != null) {

Review comment:

https://github.com/apache/kafka/blob/c8ee242811778be5c59bb1a8cdc92682eeececbc/raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java#L257

##

File path:

raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -194,14 +196,50 @@ private void completeCurrentBatch() {

MemoryRecords data = currentBatch.build();

completed.add(new CompletedBatch<>(

currentBatch.baseOffset(),

-currentBatch.records(),

+Optional.of(currentBatch.records()),

data,

memoryPool,

currentBatch.initialBuffer()

));

currentBatch = null;

}

+public void appendLeaderChangeMessage(LeaderChangeMessage

leaderChangeMessage, long currentTimeMs) {

+appendLock.lock();

+try {

+maybeCompleteDrain();

+ByteBuffer buffer = memoryPool.tryAllocate(256);

+if (buffer != null) {

Review comment:

Why doesn't an exception get thrown for `startNewBatch`?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[jira] [Comment Edited] (KAFKA-12713) Report "REAL" follower/consumer fetch latency

[ https://issues.apache.org/jira/browse/KAFKA-12713?page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel=17331590#comment-17331590 ] Ming Liu edited comment on KAFKA-12713 at 4/27/21, 12:17 AM: - The idea is: # Add waitTimeMs in FetchResponse # In processResponseCallback() of handleFetchRequest, set the waitTimeMs as the time spent in purgatory. # In FetcherStats, we will add a new meter to track the fetch latency, by deduct the waitTimeMs from the latency. Also, in FetchLatency, we should also report a time called TotalEffectiveTime = TotalTime-RemoteTime. Let me know for any suggestion/feedback. I like to propose a KIP on that change. was (Author: mingaliu): The idea is: 0. Add waitTimeMs in Request() 1. In delayedOperation DelayedFetch class, add some code to track the actual wait time. 2. In processResponseCallback() of handleFetchRequest, we can add additional parameter of waitTimeMs invoked from DelayedFetch. It will set request.waitTimeMs. 3. In updateRequestMetrics() function, if waitTimeMs is not zero, we will deduct that out of RemoteTime and TotalTime. Let me know for any suggestion/feedback. I like to propose a KIP on that change. > Report "REAL" follower/consumer fetch latency > - > > Key: KAFKA-12713 > URL: https://issues.apache.org/jira/browse/KAFKA-12713 > Project: Kafka > Issue Type: Bug >Reporter: Ming Liu >Priority: Major > > The fetch latency is an important metrics to monitor for the cluster > performance. With ACK=ALL, the produce latency is affected primarily by > broker fetch latency. > However, currently the reported fetch latency didn't reflect the true fetch > latency because it sometimes need to stay in purgatory and wait for > replica.fetch.wait.max.ms when data is not available. This greatly affect the > real P50, P99 etc. > I like to propose a KIP to be able track the real fetch latency for both > broker follower and consumer. > -- This message was sent by Atlassian Jira (v8.3.4#803005)

[GitHub] [kafka] dielhennr commented on a change in pull request #10480: KAFKA-12265: Move the BatchAccumulator in KafkaRaftClient to LeaderState

dielhennr commented on a change in pull request #10480:

URL: https://github.com/apache/kafka/pull/10480#discussion_r620734980

##

File path:

raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -194,14 +196,50 @@ private void completeCurrentBatch() {

MemoryRecords data = currentBatch.build();

completed.add(new CompletedBatch<>(

currentBatch.baseOffset(),

-currentBatch.records(),

+Optional.of(currentBatch.records()),

data,

memoryPool,

currentBatch.initialBuffer()

));

currentBatch = null;

}

+public void appendLeaderChangeMessage(LeaderChangeMessage

leaderChangeMessage, long currentTimeMs) {

+appendLock.lock();

+try {

+maybeCompleteDrain();

+ByteBuffer buffer = memoryPool.tryAllocate(256);

+if (buffer != null) {

Review comment:

https://github.com/apache/kafka/blob/c8ee242811778be5c59bb1a8cdc92682eeececbc/raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java#L257

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] dielhennr commented on a change in pull request #10480: KAFKA-12265: Move the BatchAccumulator in KafkaRaftClient to LeaderState

dielhennr commented on a change in pull request #10480:

URL: https://github.com/apache/kafka/pull/10480#discussion_r620734462

##

File path:

raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -194,14 +196,50 @@ private void completeCurrentBatch() {

MemoryRecords data = currentBatch.build();

completed.add(new CompletedBatch<>(

currentBatch.baseOffset(),

-currentBatch.records(),

+Optional.of(currentBatch.records()),

data,

memoryPool,

currentBatch.initialBuffer()

));

currentBatch = null;

}

+public void appendLeaderChangeMessage(LeaderChangeMessage

leaderChangeMessage, long currentTimeMs) {

+appendLock.lock();

+try {

+maybeCompleteDrain();

+ByteBuffer buffer = memoryPool.tryAllocate(256);

+if (buffer != null) {

Review comment:

Why doesn't an exception get thrown for startNewBatch?

##

File path:

raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -194,14 +196,50 @@ private void completeCurrentBatch() {

MemoryRecords data = currentBatch.build();

completed.add(new CompletedBatch<>(

currentBatch.baseOffset(),

-currentBatch.records(),

+Optional.of(currentBatch.records()),

data,

memoryPool,

currentBatch.initialBuffer()

));

currentBatch = null;

}

+public void appendLeaderChangeMessage(LeaderChangeMessage

leaderChangeMessage, long currentTimeMs) {

+appendLock.lock();

+try {

+maybeCompleteDrain();

+ByteBuffer buffer = memoryPool.tryAllocate(256);

+if (buffer != null) {

Review comment:

Why doesn't an exception get thrown for `startNewBatch`?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on pull request #10542: KAFKA-12313: Streamling windowed Deserialiser configs.

ableegoldman commented on pull request #10542: URL: https://github.com/apache/kafka/pull/10542#issuecomment-827215366 Hey @vamossagar12 , I think we're good to go on the update you proposed to this KIP. Take a look at the other feedback I left and just ping me when the PR is ready for review again. Thanks! -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #10573: KAFKA-12574: KIP-732, Deprecate eos-alpha and replace eos-beta with eos-v2

ableegoldman commented on a change in pull request #10573:

URL: https://github.com/apache/kafka/pull/10573#discussion_r620726308

##

File path:

streams/src/main/java/org/apache/kafka/streams/processor/internals/StreamThread.java

##

@@ -603,7 +606,7 @@ boolean runLoop() {

log.error("Shutting down because the Kafka cluster seems

to be on a too old version. " +

"Setting {}=\"{}\" requires broker version 2.5

or higher.",

StreamsConfig.PROCESSING_GUARANTEE_CONFIG,

- EXACTLY_ONCE_BETA);

+ StreamsConfig.EXACTLY_ONCE_V2);

Review comment:

I'll just put in both of them

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #10573: KAFKA-12574: KIP-732, Deprecate eos-alpha and replace eos-beta with eos-v2

ableegoldman commented on a change in pull request #10573: URL: https://github.com/apache/kafka/pull/10573#discussion_r620725616 ## File path: docs/streams/upgrade-guide.html ## @@ -93,6 +95,12 @@ Upgrade Guide and API Changes Streams API changes in 3.0.0 + + The StreamsConfig.EXACTLY_ONCE and StreamsConfig.EXACTLY_ONCE_BETA configs have been deprecated, and a new StreamsConfig.EXACTLY_ONCE_V2 config has been Review comment: I guess I'll just try to mention StreamsConfig in there somewhere... -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #10480: KAFKA-12265: Move the BatchAccumulator in KafkaRaftClient to LeaderState

hachikuji commented on a change in pull request #10480:

URL: https://github.com/apache/kafka/pull/10480#discussion_r620722131

##

File path:

raft/src/test/java/org/apache/kafka/raft/internals/BatchAccumulatorTest.java

##

@@ -65,6 +66,34 @@

);

}

+@Test

+public void testLeaderChangeMessageWritten() {

Review comment:

A couple extra test cases to add:

1. Can we add a test case for `flush()` to ensure that it forces an

immediate drain?

2. Can we add a test case in which we have undrained data when

`appendLeaderChangeMessage` is called?

##

File path:

raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -194,14 +196,50 @@ private void completeCurrentBatch() {

MemoryRecords data = currentBatch.build();

completed.add(new CompletedBatch<>(

currentBatch.baseOffset(),

-currentBatch.records(),

+Optional.of(currentBatch.records()),

data,

memoryPool,

currentBatch.initialBuffer()

));

currentBatch = null;

}

+public void appendLeaderChangeMessage(LeaderChangeMessage

leaderChangeMessage, long currentTimeMs) {

+appendLock.lock();

+try {

+maybeCompleteDrain();

Review comment:

I think I was a little off in my suggestion to add this. I don't think

this is sufficient to ensure the current batch gets completed before we add the

leader change message since `maybeCompleteDrain` will only do so if a drain has

been started. Maybe the simple thing is to call `flush()`?

##

File path:

raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -202,6 +203,25 @@ private void completeCurrentBatch() {

currentBatch = null;

}

+public void addControlBatch(MemoryRecords records) {

+appendLock.lock();

+try {

+drainStatus = DrainStatus.STARTED;

Review comment:

How about `forceDrain`?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #10573: KAFKA-12574: KIP-732, Deprecate eos-alpha and replace eos-beta with eos-v2

ableegoldman commented on a change in pull request #10573: URL: https://github.com/apache/kafka/pull/10573#discussion_r620722055 ## File path: docs/streams/upgrade-guide.html ## @@ -93,6 +95,12 @@ Upgrade Guide and API Changes Streams API changes in 3.0.0 + + The StreamsConfig.EXACTLY_ONCE and StreamsConfig.EXACTLY_ONCE_BETA configs have been deprecated, and a new StreamsConfig.EXACTLY_ONCE_V2 config has been + introduced. This is the same feature as eos-beta, but renamed to highlight its production-readiness. Users of exactly-once semantics should plan to migrate to the eos-v2 config and prepare for the removal of the deprecated configs in 4.0 or after at least a year Review comment: Well, I kind of thought that we did intentionally choose to call it `beta` because we weren't completely confident in it when it was first released. But we are now, and looking back we can say with hindsight that it turned out to be production-ready back then. Still, I see your point, I'll make it more explicit with something like that suggestion -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #6592: KAFKA-8326: Introduce List Serde

ableegoldman commented on a change in pull request #6592:

URL: https://github.com/apache/kafka/pull/6592#discussion_r620720140

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListSerializer.java

##

@@ -77,21 +87,39 @@ public void configure(Map configs, boolean

isKey) {

}

}

+private void serializeNullIndexList(final DataOutputStream out,

List data) throws IOException {

+List nullIndexList = IntStream.range(0, data.size())

+.filter(i -> data.get(i) == null)

+.boxed().collect(Collectors.toList());

+out.writeInt(nullIndexList.size());

+for (int i : nullIndexList) out.writeInt(i);

+}

+

@Override

public byte[] serialize(String topic, List data) {

if (data == null) {

return null;

}

-final int size = data.size();

try (final ByteArrayOutputStream baos = new ByteArrayOutputStream();

final DataOutputStream out = new DataOutputStream(baos)) {

+out.writeByte(serStrategy.ordinal()); // write serialization

strategy flag

+if (serStrategy == SerializationStrategy.NULL_INDEX_LIST) {

+serializeNullIndexList(out, data);

+}

+final int size = data.size();

out.writeInt(size);

for (Inner entry : data) {

-final byte[] bytes = inner.serialize(topic, entry);

-if (!isFixedLength) {

-out.writeInt(bytes.length);

+if (entry == null) {

+if (serStrategy == SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(Serdes.ListSerde.NEGATIVE_SIZE_VALUE);

+}

+} else {

+final byte[] bytes = inner.serialize(topic, entry);

+if (!isFixedLength || serStrategy ==

SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(bytes.length);

Review comment:

Ah, sorry if that wasn't clear. Yes I was proposing to ignore the choice

if a user selects the `VARIABLE_SIZE` strategy with primitive type data. And to

also log a warning in this case so at least we're not just silently ignoring it.

But I think you made a good point that perhaps we don't need to expose this

flag at all. There seems to be no reason for a user to explicitly opt-in to the

`VARIABLE_SIZE` strategy. Perhaps a better way of looking at this is to say

that this strategy is the default, where the default will be overridden in two

cases: data is a primitive/known type, or the data is a custom type that the

user knows to be constant size and thus chooses to opt-in to the

`CONSTANT_SIZE` strategy.

WDYT? We could simplify the API by making this a boolean parameter instead

of having them choose a `SerializationStrategy` directly, something like

`isConstantSize`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #6592: KAFKA-8326: Introduce List Serde

ableegoldman commented on a change in pull request #6592:

URL: https://github.com/apache/kafka/pull/6592#discussion_r620720140

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListSerializer.java

##

@@ -77,21 +87,39 @@ public void configure(Map configs, boolean

isKey) {

}

}

+private void serializeNullIndexList(final DataOutputStream out,

List data) throws IOException {

+List nullIndexList = IntStream.range(0, data.size())

+.filter(i -> data.get(i) == null)

+.boxed().collect(Collectors.toList());

+out.writeInt(nullIndexList.size());

+for (int i : nullIndexList) out.writeInt(i);

+}

+

@Override

public byte[] serialize(String topic, List data) {

if (data == null) {

return null;

}

-final int size = data.size();

try (final ByteArrayOutputStream baos = new ByteArrayOutputStream();

final DataOutputStream out = new DataOutputStream(baos)) {

+out.writeByte(serStrategy.ordinal()); // write serialization

strategy flag

+if (serStrategy == SerializationStrategy.NULL_INDEX_LIST) {

+serializeNullIndexList(out, data);

+}

+final int size = data.size();

out.writeInt(size);

for (Inner entry : data) {

-final byte[] bytes = inner.serialize(topic, entry);

-if (!isFixedLength) {

-out.writeInt(bytes.length);

+if (entry == null) {

+if (serStrategy == SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(Serdes.ListSerde.NEGATIVE_SIZE_VALUE);

+}

+} else {

+final byte[] bytes = inner.serialize(topic, entry);

+if (!isFixedLength || serStrategy ==

SerializationStrategy.NEGATIVE_SIZE) {

+out.writeInt(bytes.length);

Review comment:

Ah, sorry if that wasn't clear. Yes I was proposing to ignore the choice

if a user selects the `VARIABLE_SIZE` strategy with primitive type data. And to

also log a warning in this case so at least we're not just silently ignoring it.

But I think you may have a point that perhaps we don't need to expose this

flag at all. There seems to be no reason for a user to explicitly opt-in to the

`VARIABLE_SIZE` strategy. Perhaps a better way of looking at this is to say

that this strategy is the default, where the default will be overridden in two

cases: data is a primitive/known type, or the data is a custom type that the

user knows to be constant size and thus chooses to opt-in to the

`CONSTANT_SIZE` strategy.

WDYT? We could simplify the API by making this a boolean parameter instead

of having them choose a `SerializationStrategy` directly, something like

`isConstantSize`.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #6592: KAFKA-8326: Introduce List Serde

ableegoldman commented on a change in pull request #6592:

URL: https://github.com/apache/kafka/pull/6592#discussion_r620716670

##

File path:

clients/src/main/java/org/apache/kafka/common/serialization/ListDeserializer.java

##

@@ -0,0 +1,196 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ *http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+package org.apache.kafka.common.serialization;

+

+import org.apache.kafka.clients.CommonClientConfigs;

+import org.apache.kafka.common.KafkaException;

+import org.apache.kafka.common.config.ConfigException;

+import org.apache.kafka.common.errors.SerializationException;

+import org.apache.kafka.common.utils.Utils;

+

+import java.io.ByteArrayInputStream;

+import java.io.DataInputStream;

+import java.io.IOException;

+import java.lang.reflect.Constructor;

+import java.lang.reflect.InvocationTargetException;

+import java.util.ArrayList;

+import java.util.List;

+import java.util.Map;

+

+import static

org.apache.kafka.common.serialization.Serdes.ListSerde.SerializationStrategy;

+import static org.apache.kafka.common.utils.Utils.mkEntry;

+import static org.apache.kafka.common.utils.Utils.mkMap;

+

+public class ListDeserializer implements Deserializer> {

+

+private Deserializer inner;

+private Class listClass;

+private Integer primitiveSize;

+

+static private Map>, Integer>

fixedLengthDeserializers = mkMap(

+mkEntry(ShortDeserializer.class, 2),

+mkEntry(IntegerDeserializer.class, 4),

+mkEntry(FloatDeserializer.class, 4),

+mkEntry(LongDeserializer.class, 8),

+mkEntry(DoubleDeserializer.class, 8),

+mkEntry(UUIDDeserializer.class, 36)

+);

+

+public ListDeserializer() {}

+

+public > ListDeserializer(Class listClass,

Deserializer innerDeserializer) {

+this.listClass = listClass;

+this.inner = innerDeserializer;

+if (innerDeserializer != null) {

+this.primitiveSize =

fixedLengthDeserializers.get(innerDeserializer.getClass());

+}

+}

+

+public Deserializer getInnerDeserializer() {

+return inner;

+}

+

+@Override

+public void configure(Map configs, boolean isKey) {

+if (listClass == null) {

Review comment:

>Maybe, if a user tries to use the constructor when classes are already

defined in the configs, we simply throw an exception? Forcing the user to set

only one or the other

That works for me. Tbh I actually prefer this, but thought you might

consider it too harsh. Someone else had that reaction to a similar scenario in

the past. Let's do it

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] junrao commented on a change in pull request #10534: KAFKA-806: Index may not always observe log.index.interval.bytes

junrao commented on a change in pull request #10534:

URL: https://github.com/apache/kafka/pull/10534#discussion_r620704283

##

File path: core/src/main/scala/kafka/log/LogSegment.scala

##

@@ -162,15 +162,43 @@ class LogSegment private[log] (val log: FileRecords,

// append the messages

val appendedBytes = log.append(records)

trace(s"Appended $appendedBytes to ${log.file} at end offset

$largestOffset")

+

+

+ def appendIndex(): Unit = {

+var validBytes = 0

+var maxTimestampSoFarTmp = RecordBatch.NO_TIMESTAMP

+var offsetOfMaxTimestampSoFarTmp = 0L

+var lastIndexEntry = 0

+val originalLastOffset = offsetIndex.lastOffset

+

+for (batch <- log.batches.asScala) {

+ batch.ensureValid()

+ ensureOffsetInRange(batch.lastOffset)

+

+ if (batch.maxTimestamp > maxTimestampSoFarTmp) {

+maxTimestampSoFarTmp = batch.maxTimestamp

+offsetOfMaxTimestampSoFarTmp = batch.lastOffset

+ }

+

+ if (validBytes - lastIndexEntry > indexIntervalBytes) {

+if (batch.lastOffset > originalLastOffset) {

+ offsetIndex.append(batch.lastOffset, validBytes)

+ timeIndex.maybeAppend(maxTimestampSoFarTmp,

offsetOfMaxTimestampSoFarTmp)

+}

+lastIndexEntry = validBytes

Review comment:

Could we just have a single val accumulatedBytes and reset it to 0 after

each index insertion?

##

File path: core/src/main/scala/kafka/log/LogSegment.scala

##

@@ -162,15 +162,43 @@ class LogSegment private[log] (val log: FileRecords,

// append the messages

val appendedBytes = log.append(records)

trace(s"Appended $appendedBytes to ${log.file} at end offset

$largestOffset")

+

+

+ def appendIndex(): Unit = {

+var validBytes = 0

+var maxTimestampSoFarTmp = RecordBatch.NO_TIMESTAMP

+var offsetOfMaxTimestampSoFarTmp = 0L

+var lastIndexEntry = 0

+val originalLastOffset = offsetIndex.lastOffset

+

+for (batch <- log.batches.asScala) {

+ batch.ensureValid()

+ ensureOffsetInRange(batch.lastOffset)

+

+ if (batch.maxTimestamp > maxTimestampSoFarTmp) {

+maxTimestampSoFarTmp = batch.maxTimestamp

+offsetOfMaxTimestampSoFarTmp = batch.lastOffset

+ }

+

+ if (validBytes - lastIndexEntry > indexIntervalBytes) {

Review comment:

It seems that we need to take bytesSinceLastIndexEntry into

consideration and we also need to update bytesSinceLastIndexEntry properly here

instead of the caller.

##

File path: core/src/main/scala/kafka/log/LogSegment.scala

##

@@ -162,15 +162,43 @@ class LogSegment private[log] (val log: FileRecords,

// append the messages

val appendedBytes = log.append(records)

trace(s"Appended $appendedBytes to ${log.file} at end offset

$largestOffset")

+

+

+ def appendIndex(): Unit = {

+var validBytes = 0

+var maxTimestampSoFarTmp = RecordBatch.NO_TIMESTAMP

+var offsetOfMaxTimestampSoFarTmp = 0L

+var lastIndexEntry = 0

+val originalLastOffset = offsetIndex.lastOffset

+

+for (batch <- log.batches.asScala) {

+ batch.ensureValid()

Review comment:

We already did validation in Log.analyzeAndValidateRecords(). So we

don't need to do this again here.

##

File path: core/src/main/scala/kafka/log/LogSegment.scala

##

@@ -162,15 +162,43 @@ class LogSegment private[log] (val log: FileRecords,

// append the messages

val appendedBytes = log.append(records)

trace(s"Appended $appendedBytes to ${log.file} at end offset

$largestOffset")

+

+

+ def appendIndex(): Unit = {

+var validBytes = 0

+var maxTimestampSoFarTmp = RecordBatch.NO_TIMESTAMP

+var offsetOfMaxTimestampSoFarTmp = 0L

+var lastIndexEntry = 0

+val originalLastOffset = offsetIndex.lastOffset

+

+for (batch <- log.batches.asScala) {

+ batch.ensureValid()

+ ensureOffsetInRange(batch.lastOffset)

+

+ if (batch.maxTimestamp > maxTimestampSoFarTmp) {

Review comment:

Since this is done in the caller for all batches, we don't need to do it

here.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #10480: KAFKA-12265: Move the BatchAccumulator in KafkaRaftClient to LeaderState

hachikuji commented on a change in pull request #10480:

URL: https://github.com/apache/kafka/pull/10480#discussion_r620708379

##

File path: raft/src/main/java/org/apache/kafka/raft/KafkaRaftClient.java

##

@@ -1876,12 +1836,12 @@ private void appendBatch(

}

private long maybeAppendBatches(

-LeaderState state,

+LeaderState state,

long currentTimeMs

) {

-long timeUnitFlush = accumulator.timeUntilDrain(currentTimeMs);

+long timeUnitFlush = state.accumulator().timeUntilDrain(currentTimeMs);

Review comment:

I was just interested in the spelling fix . I am fine with

`timeUntilDrain` as well.

##

File path:

raft/src/main/java/org/apache/kafka/raft/internals/BatchAccumulator.java

##

@@ -194,14 +196,50 @@ private void completeCurrentBatch() {

MemoryRecords data = currentBatch.build();

completed.add(new CompletedBatch<>(

currentBatch.baseOffset(),

-currentBatch.records(),

+Optional.of(currentBatch.records()),

data,

memoryPool,

currentBatch.initialBuffer()

));

currentBatch = null;

}

+public void appendLeaderChangeMessage(LeaderChangeMessage

leaderChangeMessage, long currentTimeMs) {

+appendLock.lock();

+try {

+maybeCompleteDrain();

+ByteBuffer buffer = memoryPool.tryAllocate(256);

+if (buffer != null) {

Review comment:

How about in the `else` case? Probably we need to raise an exception?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] junrao merged pull request #10592: MINOR: Remove redudant test files and close LogSegment after test

junrao merged pull request #10592: URL: https://github.com/apache/kafka/pull/10592 -- This is an automated message from the Apache Git Service. To respond to the message, please log on to GitHub and use the URL above to go to the specific comment. For queries about this service, please contact Infrastructure at: us...@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #9441: KAFKA-10614: Ensure group state (un)load is executed in the submitted order

hachikuji commented on a change in pull request #9441:

URL: https://github.com/apache/kafka/pull/9441#discussion_r620695517

##

File path: core/src/main/scala/kafka/coordinator/group/GroupCoordinator.scala

##

@@ -905,19 +908,35 @@ class GroupCoordinator(val brokerId: Int,

*

* @param offsetTopicPartitionId The partition we are now leading

*/

- def onElection(offsetTopicPartitionId: Int): Unit = {

-info(s"Elected as the group coordinator for partition

$offsetTopicPartitionId")

-groupManager.scheduleLoadGroupAndOffsets(offsetTopicPartitionId,

onGroupLoaded)

+ def onElection(offsetTopicPartitionId: Int, coordinatorEpoch: Int): Unit = {

+epochForPartitionId.compute(offsetTopicPartitionId, (_, epoch) => {

+ val currentEpoch = Option(epoch)

+ if (currentEpoch.forall(currentEpoch => coordinatorEpoch >

currentEpoch)) {

Review comment:

One final thing I was considering is whether we should push this check

into `GroupMetadataManager.loadGroupsAndOffsets`. That would give us some

protection against any assumptions about ordering in `KafkaScheduler`.

##

File path: core/src/main/scala/kafka/coordinator/group/GroupCoordinator.scala

##

@@ -905,19 +908,32 @@ class GroupCoordinator(val brokerId: Int,

*

* @param offsetTopicPartitionId The partition we are now leading

*/

- def onElection(offsetTopicPartitionId: Int): Unit = {

-info(s"Elected as the group coordinator for partition

$offsetTopicPartitionId")

-groupManager.scheduleLoadGroupAndOffsets(offsetTopicPartitionId,

onGroupLoaded)

+ def onElection(offsetTopicPartitionId: Int, coordinatorEpoch: Int): Unit = {

+val currentEpoch = Option(epochForPartitionId.get(offsetTopicPartitionId))

+if (currentEpoch.forall(currentEpoch => coordinatorEpoch > currentEpoch)) {

+ info(s"Elected as the group coordinator for partition

$offsetTopicPartitionId in epoch $coordinatorEpoch")

+ groupManager.scheduleLoadGroupAndOffsets(offsetTopicPartitionId,

onGroupLoaded)

+ epochForPartitionId.put(offsetTopicPartitionId, coordinatorEpoch)

+} else {

+ warn(s"Ignored election as group coordinator for partition

$offsetTopicPartitionId " +

+s"in epoch $coordinatorEpoch since current epoch is $currentEpoch")

+}

}

/**

* Unload cached state for the given partition and stop handling requests

for groups which map to it.

*

* @param offsetTopicPartitionId The partition we are no longer leading

*/

- def onResignation(offsetTopicPartitionId: Int): Unit = {

-info(s"Resigned as the group coordinator for partition

$offsetTopicPartitionId")

-groupManager.removeGroupsForPartition(offsetTopicPartitionId,

onGroupUnloaded)

+ def onResignation(offsetTopicPartitionId: Int, coordinatorEpoch:

Option[Int]): Unit = {

+val currentEpoch = Option(epochForPartitionId.get(offsetTopicPartitionId))

+if (currentEpoch.forall(currentEpoch => currentEpoch <=

coordinatorEpoch.getOrElse(Int.MaxValue))) {

Review comment:

I have probably not been doing a good job of being clear. It is useful

to bump the epoch whenever we observe a larger value whether it is in

`onResignation` or `onElection`. This protects us from all potential

reorderings.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

us...@infra.apache.org

[GitHub] [kafka] ableegoldman commented on a change in pull request #10597: KAFKA-5876: Apply StreamsNotStartedException for Interactive Queries

ableegoldman commented on a change in pull request #10597:

URL: https://github.com/apache/kafka/pull/10597#discussion_r620653928

##

File path: streams/src/main/java/org/apache/kafka/streams/KafkaStreams.java

##

@@ -1524,6 +1527,12 @@ public void cleanUp() {

*an InvalidStateStoreException is

thrown upon store access.

*/

public T store(final StoreQueryParameters storeQueryParameters) {

+synchronized (stateLock) {

+if (state == State.CREATED) {

+throw new StreamsNotStartedException("KafkaStreams is not

started, you can retry and wait until to running.");

Review comment:

Technically we don't need to wait for it to be running, they just need

to start it:

```suggestion

throw new StreamsNotStartedException("KafkaStreams has not

been started, you can retry after calling start()");

```

##

File path: streams/src/main/java/org/apache/kafka/streams/KafkaStreams.java

##

@@ -1516,6 +1517,8 @@ public void cleanUp() {

*

* @param storeQueryParameters the parameters used to fetch a queryable

store

* @return A facade wrapping the local {@link StateStore} instances

+ * @throws StreamsNotStartedException if Kafka Streams state is {@link

KafkaStreams.State#CREATED CREATED}. Just

+ * retry and wait until to {@link KafkaStreams.State#RUNNING

RUNNING}

Review comment:

```suggestion

* @throws StreamsNotStartedException If user has not started the

KafkaStreams and it's still in {@link KafkaStreams.State#CREATED CREATED}.

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the